Understanding Machine Learning Concepts: Linear Classification and Logistic Regression

Explore the fundamentals of machine learning through concepts such as Deterministic Learning, Linear Classification, and Logistic Regression. Gain insights on linear hyperplanes, margin computation, and the uniqueness of functions found in logistic regression. Enhance your understanding of these key

6 views • 62 slides

Understanding Multiple Linear Regression: An In-Depth Exploration

Explore the concept of multiple linear regression, extending the linear model to predict values of variable A given values of variables B and C. Learn about the necessity and advantages of multiple regression, the geometry of best fit when moving from one to two predictors, the full regression equat

4 views • 31 slides

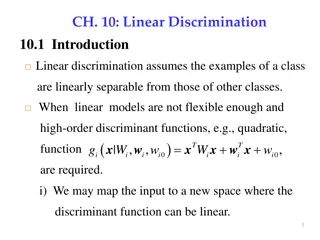

Understanding Linear Discrimination for Classification

Linear discrimination is a method for classifying data in which examples from one class are separable from the others. It uses linear models, or higher-order functions such as quadratics, to map inputs to a space where the classes are separable. This approach can be further categorized as class-based or boundary-based, e

3 views • 37 slides

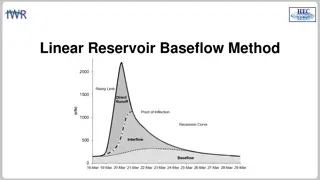

Understanding Linear Reservoir Baseflow Method

The linear reservoir baseflow method utilizes linear reservoirs to simulate the movement of water infiltrated into the soil. This method models water movement from the land surface to the stream network by integrating a linear relationship between storage and discharge. Users can select from one, tw

0 views • 11 slides

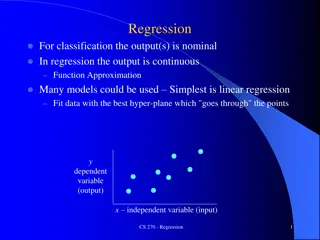

Understanding Regression in Machine Learning

Regression in machine learning involves fitting data with the best hyperplane to approximate a continuous output, in contrast to classification, where the output is nominal. Linear regression is a common technique for this purpose, aiming to minimize the sum of squared residuals. The process involv

0 views • 34 slides

Understanding Linear Transformations and Matrices in Mathematics

Linear transformations play a crucial role in the study of vector spaces and matrices. They involve mapping vectors from one space to another while maintaining certain properties. This summary covers the introduction to linear transformations, the kernel and range of a transformation, matrices for l

0 views • 85 slides

Understanding Multiple Regression in Statistics

Introduction to multiple regression, including when to use it, how it extends simple linear regression, and practical applications. Explore the relationships between multiple independent variables and a dependent variable, with examples and motivations for using multiple regression models in data an

0 views • 19 slides

Overview of Linear Regression in Machine Learning

Linear regression is a fundamental concept in machine learning where a line or plane is fitted to a set of points to model the input-output relationship. This presentation discusses fitting linear models, transforming inputs for nonlinear relationships, and parameter estimation via calculus. The simplest linear re

0 views • 14 slides

Understanding Curve Fitting Techniques

Curve fitting involves approximating function values using regression and interpolation. Regression aims to find a curve that closely matches target function values, while interpolation approximates points on a function using nearby data. This chapter covers least squares regression for fitting a st

0 views • 48 slides

Understanding Interpolation Techniques in Computer Analysis & Visualization

Explore the concepts of interpolation and curve fitting in computer analysis and visualization. Learn about linear regression, polynomial regression, and multiple variable regression. Dive into linear interpolation techniques and see how to apply them in Python using numpy. Uncover the basics of fin

2 views • 44 slides

Understanding Least-Squares Regression Line in Statistics

The concept of the least-squares regression line is crucial in statistics for predicting values based on two-variable data. This regression line minimizes the sum of squared residuals, aiming to make predicted values as close as possible to actual values. By calculating the regression line using tec

0 views • 15 slides

Understanding Regression Analysis: Meaning, Uses, and Applications

Regression analysis is a statistical tool developed by Sir Francis Galton to measure the relationship between variables. It helps predict unknown values based on known values, estimate errors, and determine correlations. Regression lines and equations are essential components of regression analysis,

0 views • 10 slides

Introduction to Binary Logistic Regression: A Comprehensive Guide

Binary logistic regression is a valuable tool for studying relationships between categorical variables, such as disease presence, voting intentions, and Likert-scale responses. Unlike linear regression, binary logistic regression ensures predicted values lie between 0 and 1, making it suitable for m

7 views • 17 slides

Understanding Correlation and Regression in Data Analysis

Correlation and regression play vital roles in investigating relationships between quantitative variables. Pearson's r correlation coefficient measures the strength and direction (positive or negative) of the linear association between variables. Learn about different types of correlation, such

0 views • 26 slides

Understanding Linear Regression: Concepts and Applications

Linear regression is a statistical method for modeling the relationship between a dependent variable and one or more independent variables. It involves estimating and predicting the expected values of the dependent variable based on the known values of the independent variables. Terminology and nota

0 views • 30 slides

Understanding Binary Logistic Regression and Its Importance in Research

Binary logistic regression is an essential statistical technique used in research when the dependent variable is dichotomous, such as yes/no outcomes. It overcomes limitations of linear regression, especially when dealing with non-normally distributed variables. Logistic regression is crucial for an

0 views • 20 slides

Arctic Sea Ice Regression Modeling & Rate of Decline

Explore the rate of decline of Arctic sea ice through regression modeling techniques. The presentation covers variables, linear regression, interpretation of scatterplots and residual plots, quadratic regression, and the comparison of models. Discover the decreasing trend in Arctic sea ice extent si

1 view • 9 slides

Understanding Overdispersed Data in SAS for Regression Analysis

Explore the concept of overdispersion in count and binary data, its causes, consequences, and how to account for it in regression analysis using SAS. Learn about Poisson and binomial distributions, along with common techniques like Poisson regression and logistic regression. Gain insights into handl

0 views • 61 slides

Understanding Linear Dependent and Independent Vectors

In linear algebra, when exploring systems of linear equations and vector sets, it is crucial to distinguish between linearly dependent and linearly independent vectors. Linear dependence occurs when one vector can be expressed as a linear combination of the others, leading to various solutions or lack thereof in the give

0 views • 20 slides

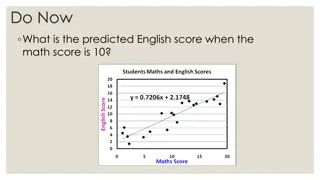

Understanding Regression Lines for Predicting English Scores

Learn how to utilize regression lines to predict English scores based on math scores, recognize the dangers of extrapolation, calculate and interpret residuals, and understand the significance of slope and y-intercept in regression analysis. Explore the process of making predictions using regression

0 views • 34 slides

Examples of Data Analysis Techniques and Linear Regression Models

In these examples, we explore data analysis techniques and linear regression models using scatter plots, linear functions, and residual calculations. We analyze the trends in recorded music sales, antibiotic levels in the body, and predicted values in a linear regression model. The concepts of slope

0 views • 11 slides

Conditional and Reference Class Linear Regression: A Comprehensive Overview

In this comprehensive presentation, the concept of conditional and reference class linear regression is explored in depth, elucidating key aspects such as determining relevant data for inference, solving for k-DNF conditions on Boolean and real attributes, and developing algorithms for conditional l

0 views • 33 slides

Exploring Curve Fitting and Regression Techniques in Neural Data Analysis

Delve into the world of curve fitting and regression analyses applied to neural data, including topics such as simple linear regression, polynomial regression, spline methods, and strategies for balancing fit and smoothness. Learn about variations in fitting models and the challenges of underfitting

0 views • 33 slides

Converting Left Linear Grammar to Right Linear Grammar

Learn about linear grammars, left linear grammars, and right linear grammars. Discover why left linear grammars are considered complex and how right linear grammars offer a simpler solution. Explore the process of converting a left linear grammar to a right linear grammar using a specific algorithm.

0 views • 44 slides

Understanding Linear Regression and Gradient Descent

Linear regression is about predicting continuous values, while logistic regression deals with discrete predictions. Gradient descent is a widely used optimization technique in machine learning. To predict commute times for new individuals based on data, we can use linear regression assuming a linear

0 views • 30 slides

Understanding Multiclass Logistic Regression in Data Science

Multiclass logistic regression extends standard logistic regression to predict outcomes with more than two categories. It includes ordinal logistic regression for hierarchical categories and multinomial logistic regression for non-ordered categories. By fitting separate models for each category, suc

0 views • 23 slides

Predicting Quality of Wine Using Linear Regression Analysis

Linear regression is a powerful method to analyze data and make predictions in the context of wine quality, particularly focusing on Bordeaux wines. This approach involves modeling the age of the wine, weather-related factors, and other independent variables to approximate quality and predict price

0 views • 18 slides

Understanding Linear Regression and Classification Methods

Explore the concepts of line fitting, gradient descent, multivariable linear regression, linear classifiers, and logistic regression in the context of machine learning. Dive into the process of finding the best-fitting line, minimizing empirical loss, vanishing of partial derivatives, and utilizing

0 views • 17 slides

Methods for Handling Collinearity in Linear Regression

Linear regression can face issues such as overfitting, poor generalizability, and collinearity when dealing with multiple predictors. Collinearity, where predictors are linearly related, can lead to unstable model estimates. To address this, penalized regression methods like Ridge and Elastic Net ca

0 views • 70 slides

Advanced Methods and Analysis for the Learning and Social Sciences

This presentation covers topics on regression analysis, linear regression, non-linear inputs, and the basic principles of predicting labels using different features in the field of learning and social sciences. It emphasizes the application of various regression methods to predict numerical values b

0 views • 52 slides

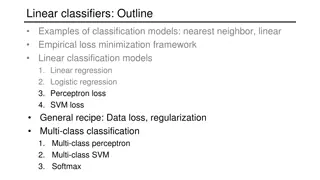

Overview of Linear Classifiers and Perceptron in Classification Models

Explore various linear classification models such as linear regression, logistic regression, and SVM loss. Understand the concept of multi-class classification, including multi-class perceptron and multi-class SVM. Delve into the specifics of the perceptron algorithm and its hinge loss, along with d

0 views • 51 slides

Understanding Linear Regression Analysis: Testing for Association Between X and Y Variables

The provided images and text explain the process of testing for association between two quantitative variables using Linear Regression Analysis. It covers topics such as estimating slopes for Least Squares Regression lines, understanding residuals, conducting T-Tests for population regression lines,

0 views • 26 slides

Data Analysis and Regression Quiz Overview

This quiz covers topics related to traditional OLS regression problems, generalized regression characteristics, JMP options, penalty methods in Elastic Net, AIC vs. BIC, GINI impurity in decision trees, and more. Test your knowledge and understanding of key concepts in data analysis and regression t

0 views • 14 slides

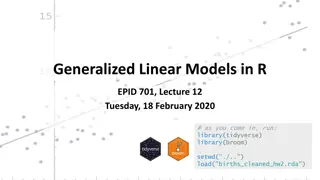

Association Between Maternal Education and Maternal Age in GLM Analysis

In this lecture on Generalized Linear Models in R, the focus is on examining the association between maternal education and maternal age using a dataset on births. The process involves creating a factor variable for maternal education levels, filtering a smaller dataset, visualizing the univariate r

0 views • 43 slides

Understanding Survival Analysis: Hazard Function and Cox Regression

Survival analysis examines hazards, such as the risk of events occurring over time. The Hazard Function and Cox Regression are essential concepts in this field. The Hazard Function assesses the risk of an event in a short time interval, while Cox Regression, named after Sir David Cox, estimates the

0 views • 20 slides

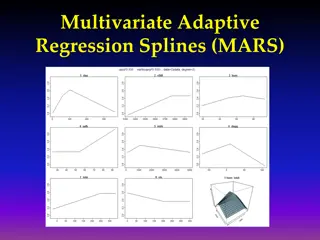

Understanding Multivariate Adaptive Regression Splines (MARS)

Multivariate Adaptive Regression Splines (MARS) is a flexible modeling technique that constructs complex relationships using a set of basis functions chosen from a library. The basis functions are selected through a combination of forward selection and backward elimination processes to build a smoot

0 views • 13 slides

R Short Course Session 5 Overview: Linear and Logistic Regression

In this session, Dr. Daniel Zhao and Dr. Sixia Chen from the Department of Biostatistics and Epidemiology at the College of Public Health, OUHSC, cover topics on linear regression including fitting models, checking results, examining normality, outliers, collinearity, model selection, and comparison

0 views • 44 slides

Multivariate Adaptive Regression Splines (MARS) in Machine Learning

Multivariate Adaptive Regression Splines (MARS) offer a flexible approach in machine learning by combining features of linear regression, non-linear regression, and basis expansions. Unlike traditional models, MARS makes no assumptions about the underlying functional relationship, leading to improve

0 views • 42 slides

Understanding Overfitting and Inductive Bias in Machine Learning

Overfitting can hinder generalization on novel data, necessitating the consideration of inductive bias. Linear regression struggles with non-linear tasks, highlighting the need for non-linear surfaces or feature pre-processing. Techniques like regularization in linear regression help maintain model

0 views • 37 slides

Introduction to Machine Learning: Model Selection and Error Decomposition

This course covers topics such as model selection, error decomposition, bias-variance tradeoff, and classification using Naive Bayes. Students are required to implement linear regression, Naive Bayes, and logistic regression for homework. Important administrative information about deadlines, mid-ter

0 views • 42 slides