Understanding Multicollinearity in Regression Analysis

Multicollinearity in regression occurs when independent variables are strongly correlated with one another, which makes their individual coefficients hard to estimate. Under perfect multicollinearity the model cannot be estimated as specified; under imperfect multicollinearity it can be estimated, but the standard errors of the coefficients increase. The slides below cover how to detect multicollinearity, its consequences, and possible solutions.

Presentation Transcript

Perfect Multicollinearity: When two (or more) indep. vars have an exact, deterministic linear relationship. For ex: x1 is height in inches and x2 is height in cm. x1 and x2 will never move independently of one another.
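A minimal sketch of why the height example breaks down, assuming NumPy and made-up heights (the numbers and variable names are not from the slides). It builds a design matrix with an intercept, height in inches, and height in cm, then checks how many of its columns are linearly independent.

```python
import numpy as np

# Hypothetical heights in inches (made-up values for illustration).
height_in = np.array([60.0, 64.0, 67.0, 70.0, 73.0])
# Height in cm is an exact linear function of height in inches.
height_cm = 2.54 * height_in

# Design matrix: intercept, x1 (inches), x2 (cm).
X = np.column_stack([np.ones_like(height_in), height_in, height_cm])

n_cols = X.shape[1]
rank = np.linalg.matrix_rank(X)

# Fewer independent columns than coefs to estimate means
# there is no unique least-squares solution.
print(f"columns: {n_cols}, rank: {rank}")
```

When the rank is below the number of columns, the regression cannot assign separate coefs to x1 and x2.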

Another ex: Imagine that you are running a housing price model and you are interested in the effect of having a swimming pool and the effect of granite countertops, among other characteristics. Now imagine that every single house that has a pool also has granite, and every one that has granite also has a swimming pool.

Would you ever be able to disentangle the effects of pool vs granite? You would need some obs with a pool and no granite, and some with granite but no pool. Pool and granite are perfectly collinear in the above example. The computer will refuse to run this regression, or will drop one of the perfectly collinear vars.

Imperfect Multicollinearity: A correlation between two indep. vars that is not perfect, but strong enough that it noticeably affects the estimation of the coefs. For ex: sqft of house and # of bedrooms; age of student and number of years of education; age and labor market experience.

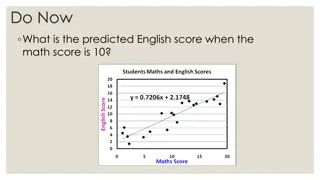

If the two vars almost always move together (positively or negatively), it becomes difficult for the reg. equation to separate their effects. Note that this is only an issue when the correlation is strong. Maybe when the coef of correlation (in abs val) is > 0.8?

How do I detect multicollinearity? Look at the coef of correlation between each pair of indep vars. This is often summed up in a correlation matrix.
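A rough sketch of the correlation-matrix check, assuming NumPy and simulated housing data. The variable names, the simulated numbers, and the 0.8 cutoff (taken from the rule of thumb floated earlier) are all illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 200

# Simulated (made-up) indep vars: bedrooms is built to track sqft closely,
# house_age is generated independently of both.
sqft = rng.normal(2000, 500, n)
bedrooms = sqft / 600 + rng.normal(0, 0.3, n)
house_age = rng.normal(30, 10, n)

names = ["sqft", "bedrooms", "house_age"]
data = np.column_stack([sqft, bedrooms, house_age])

# Correlation matrix: entry [i, j] is the coef of correlation between vars i and j.
corr = np.corrcoef(data, rowvar=False)

# Flag every pair whose correlation exceeds the cutoff in absolute value.
cutoff = 0.8
flagged = [
    (names[i], names[j])
    for i in range(len(names))
    for j in range(i + 1, len(names))
    if abs(corr[i, j]) > cutoff
]

print(np.round(corr, 2))
print("pairs above cutoff:", flagged)
```

Only the upper triangle is scanned, since the matrix is symmetric and the diagonal is always 1.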

What are the consequences of multicollinearity? The std errors of the estimates for the slope coefs of the collinear vars will rise. Why is this an issue? Recall the equation for the t-stat (t = estimated coef / std error of the coef). What happens as the std error gets bigger? What impact does this have on the t-stat?
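To see the std errors rise, here is a small simulation sketch, assuming NumPy, a made-up model y = 1 + 2*x1 + 2*x2 + noise, and a hypothetical helper `ols_with_se`. It fits the same model twice: once with x1 and x2 uncorrelated, once with a correlation of about 0.95, and compares the std error and t-stat on x1.

```python
import numpy as np

def ols_with_se(X, y):
    """OLS coefs and their std errors; X must already include an intercept column."""
    n, k = X.shape
    xtx_inv = np.linalg.inv(X.T @ X)
    beta = xtx_inv @ X.T @ y
    resid = y - X @ beta
    sigma2 = resid @ resid / (n - k)          # estimated error variance
    se = np.sqrt(sigma2 * np.diag(xtx_inv))   # std error of each coef
    return beta, se

def simulate(rho, n=500, seed=0):
    """Fit the assumed model with corr(x1, x2) of roughly rho."""
    rng = np.random.default_rng(seed)
    x1 = rng.normal(size=n)
    x2 = rho * x1 + np.sqrt(1 - rho**2) * rng.normal(size=n)
    y = 1.0 + 2.0 * x1 + 2.0 * x2 + rng.normal(size=n)
    X = np.column_stack([np.ones(n), x1, x2])
    beta, se = ols_with_se(X, y)
    return beta, se, beta / se                # t-stat = coef / std error

_, se_low, t_low = simulate(rho=0.0)
_, se_high, t_high = simulate(rho=0.95)

print(f"std error on x1: {se_low[1]:.3f} (uncorrelated) vs {se_high[1]:.3f} (collinear)")
print(f"t-stat on x1:    {t_low[1]:.1f} (uncorrelated) vs {t_high[1]:.1f} (collinear)")
```

The true slopes are identical in both runs, so any drop in the t-stat comes from the larger std error in the denominator.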

Note that the estimates for the coefs are still unbiased. This is a good thing. It means that if we run this regression a million times with a million different samples drawn from the pop and take the avg of all the estimates, we will be right. Overall fit of the model will be unaffected: R squared and the F-test are good as is. So a classic symptom of multicol. is a high F-stat but low t-stats on the individual vars.
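The "run it many times and average" idea can be sketched directly, assuming NumPy and a made-up pop model with true slopes of 2.0 and a correlation of about 0.95 between x1 and x2. It uses 2,000 samples rather than a million to keep the run short.

```python
import numpy as np

rng = np.random.default_rng(42)
true_b1, true_b2 = 2.0, 2.0      # assumed true slopes (illustrative)
rho, n, n_samples = 0.95, 50, 2000

b1_estimates = np.empty(n_samples)
for s in range(n_samples):
    # Draw a fresh sample with strongly correlated x1 and x2.
    x1 = rng.normal(size=n)
    x2 = rho * x1 + np.sqrt(1 - rho**2) * rng.normal(size=n)
    y = 1.0 + true_b1 * x1 + true_b2 * x2 + rng.normal(size=n)
    X = np.column_stack([np.ones(n), x1, x2])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    b1_estimates[s] = coef[1]

avg_b1 = b1_estimates.mean()     # should sit close to true_b1
spread_b1 = b1_estimates.std()   # individual estimates still vary a lot

print(f"avg of b1 estimates: {avg_b1:.3f}, spread across samples: {spread_b1:.3f}")
```

The average lands near the true slope even though any single sample's estimate can be far off, which is the sense in which the estimates are unbiased but imprecise.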

Solutions? Maybe just drop one of the collinear vars? For ex, drop either pool or granite, and know that whichever one we keep is picking up the effects of both! Increasing the sample size will usually help. Maybe just do nothing? Especially if the vars are just controls.
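A small sketch of the "whichever one we keep picks up both effects" point, assuming NumPy and made-up numbers: a pool adds 20 and granite adds 10 (say, in thousands of dollars) to a base price of 100, with no noise, and every house with a pool also has granite.

```python
import numpy as np

# Made-up data: pool and granite dummies are identical in every house.
pool = np.array([1, 0, 1, 0, 0, 1, 0, 1], dtype=float)
granite = pool.copy()

# Assumed true effects (illustrative): pool adds 20, granite adds 10.
price = 100.0 + 20.0 * pool + 10.0 * granite

# Drop granite and regress price on an intercept and pool only.
X = np.column_stack([np.ones_like(pool), pool])
coef, *_ = np.linalg.lstsq(X, price, rcond=None)

intercept, pool_coef = coef
print(f"intercept: {intercept:.1f}, coef on pool: {pool_coef:.1f}")
```

The coef on pool comes out as the sum of the two assumed effects, so it should be read as the effect of "pool and granite together", not of the pool alone.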

Another example. Unit of observation: a US state. Research question: Do lower speed limits reduce highway fatalities?

(Highway fatalities per 100,000 people in 2022) = B0 + B1(max HWY speed limit) + B2(% of state living in urban areas) + B3(avg # of miles driven per person in 2022)