Data Augmentation Techniques for Deep Learning-Based Medical Image Analyses

An overview of data augmentation techniques for improving deep learning-based medical image analyses, covering topics such as overfitting, data labeling, and the use of generative adversarial networks (GANs).

6 views • 14 slides

Model Evaluation in Artificial Intelligence

Explores the evaluation stage in AI model development: how to assess model reliability, avoid overfitting and underfitting, and understand key terms such as True Positive, True Negative, and False Positive in a forest fire prediction scenario.

7 views • 26 slides

Deep Transfer Learning and Multi-task Learning

Deep Transfer Learning and Multi-task Learning involve transferring knowledge from a source domain to a target domain, benefiting tasks such as image classification, sentiment analysis, and time series prediction. Taxonomies of Transfer Learning categorize approaches like model fine-tuning, multi-ta

5 views • 26 slides

Bias and Variance in Machine Learning Models

Explore the concepts of overfitting, underfitting, bias, and variance in machine learning through visualizations and explanations by Geoff Hulten. Learn how bias error and variance error impact model performance, with tips on finding the right balance for optimal results.

2 views • 22 slides

Optimization Techniques in Neural Networks

Optimization is essential in neural networks to find the minimum value of a function. Techniques like local search, gradient descent, and stochastic gradient descent are used to minimize non-linear objectives with multiple local minima. Challenges such as overfitting and getting stuck in local minim

5 views • 9 slides

Strategies for Improving Generalization in Neural Networks

Overfitting in neural networks occurs due to the model fitting both real patterns and sampling errors in the training data. The article discusses ways to prevent overfitting, such as using different models, adjusting model capacity, and controlling neural network capacity through various methods lik

1 view • 39 slides

Sources of Error in Machine Learning

This comprehensive overview covers key concepts in machine learning, such as sources of error, cross-validation, hyperparameter selection, generalization, bias-variance trade-off, and error components. By delving into the intricacies of bias, variance, underfitting, and overfitting, the material hel

3 views • 13 slides

Visualization of Process Behavior Using Structured Petri Nets

Explore the concept of mining structured Petri nets for visualizing process behavior, distinguishing between overfitting and underfitting models, and proposing a method to extract structured slices from event logs. The approach involves constructing LTS from logs, synthesizing Petri nets, and presen

1 view • 26 slides

Utilizing Machine Learning for Conversion and Bounce Analysis

Machine learning techniques such as deep learning and random forest are employed to analyze drivers of bounce and conversion rates in a Velocity 2016 New York event. The process involves vectorizing and balancing the data, smoothing it for optimal performance, and validating on separate datasets to

2 views • 41 slides

Cross-Validation in Machine Learning

Cross-validation is a crucial technique in machine learning used to evaluate model performance. It involves dividing data into training and validation sets to prevent overfitting and assess predictive accuracy. Mean Squared Error (MSE) and Root Mean Squared Error (RMSE) quantify prediction accuracy,

7 views • 19 slides

Curve Fitting and Regression Techniques in Neural Data Analysis

Delve into the world of curve fitting and regression analyses applied to neural data, including topics such as simple linear regression, polynomial regression, spline methods, and strategies for balancing fit and smoothness. Learn about variations in fitting models and the challenges of underfitting

2 views • 33 slides

Optimizing SG Filter Parameters for Power Calibration in Experimental Setup

In this investigation, the aim is to find the optimal SG filter parameters to minimize uncertainty in power calibration while avoiding overfitting. Analyzing power calibration measurements and applying SG filter techniques, the process involves comparing different parameters to enhance filter perfor

4 views • 21 slides

Overfitting in Data Mining Models

Overfitting is a common issue in data mining models where the model performs exceptionally well on the training data but fails to generalize to new data. This content discusses how overfitting can occur, its impact on model performance, and strategies to mitigate it. Through examples and visualizati

1 view • 30 slides

Cross-Validation and Overfitting in Machine Learning

Overfitting is a common issue in machine learning where a model fits too closely to the training data, capturing noise instead of the underlying pattern. Cross-validation is a technique used to assess a model's generalizability by splitting data into subsets for training and testing. Strategies to r

21 views • 24 slides

Methods for Handling Collinearity in Linear Regression

Linear regression can face issues such as overfitting, poor generalizability, and collinearity when dealing with multiple predictors. Collinearity, where predictors are linearly related, can lead to unstable model estimates. To address this, penalized regression methods like Ridge and Elastic Net ca

4 views • 70 slides

Best Practices for Dataset Handling in Machine Learning Projects

Proper dataset handling is crucial in machine learning projects. Use publicly available datasets with train/dev/test splits or create your own. Be cautious of overfitting by utilizing independent validation and test sets. Avoid touching the test set until final evaluation to prevent overfitting. Mai

7 views • 13 slides

Core Methods in Educational Data Mining - EDU691 Spring 2019

Delve into the world of educational data mining with Core Methods in Educational Data Mining course content from Spring 2019. From basic homework assignments to analyzing the impacts of variables on decision tree algorithms, discover the challenges and differences between Python and RapidMiner tools

3 views • 57 slides

Combining Neural Networks for Reduced Overfitting

Combining multiple models in neural networks helps reduce overfitting by balancing the bias-variance trade-off. Averaging predictions from diverse models can improve overall performance, especially when individual models make different predictions. By combining models with varying capacities, we can

2 views • 41 slides

Support Vector Machines in Quadratic Programming for Heart Disease Prediction

This case study explores the application of support vector machines in quadratic programming for predicting heart disease in patients. The process involves fitting a linear classification model to real patient data, splitting the dataset into training and test sets, optimizing model parameters, and

3 views • 19 slides

The Importance of Calibration in Hydrological Modeling

Hydrological models require calibration to adjust parameters for better representation of real-world processes, as they are conceptual and parameters are not physically measurable. Calibration involves manual trial and error or automatic optimization algorithms to improve model accuracy. Objective f

3 views • 12 slides

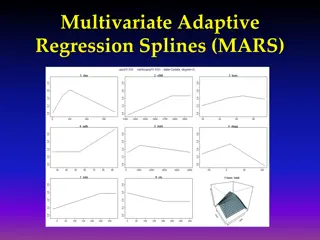

Multivariate Adaptive Regression Splines (MARS)

Multivariate Adaptive Regression Splines (MARS) is a flexible modeling technique that constructs complex relationships using a set of basis functions chosen from a library. The basis functions are selected through a combination of forward selection and backward elimination processes to build a smoot

3 views • 13 slides

Easy Data Augmentation for Language Models

Data augmentation plays a crucial role in enhancing model performance, especially for tasks like sentiment analysis, topic labeling, and language detection. By generating more training data and reducing overfitting, techniques like Synonym Replacement, Random Insertion, Random Swap, and Random Delet

2 views • 12 slides

Machine Learning: Decision Trees and Overfitting

Foundations of Artificial Intelligence delves into the intricacies of Machine Learning, focusing on Decision Trees, generalization, overfitting, and model selection. The extensions of the Decision Tree Learning Algorithm address challenges such as noisy data, model overfitting, and methods like cross

0 views • 13 slides

Overfitting and Inductive Bias in Machine Learning

Overfitting can hinder generalization on novel data, necessitating the consideration of inductive bias. Linear regression struggles with non-linear tasks, highlighting the need for non-linear surfaces or feature pre-processing. Techniques like regularization in linear regression help maintain model

6 views • 37 slides

Model Bias and Optimization in Machine Learning

Learn about the concepts of model bias, loss on training data, and optimization issues in the context of machine learning. Discover strategies to address model bias, deal with large or small losses, and optimize models effectively to improve performance and accuracy. Gain insights into splitting tra

1 view • 29 slides

Introduction to Advanced Topics in Data Analysis and Machine Learning

Explore advanced topics in data analysis by Shai Carmi, covering machine learning basics, regression, overfitting, bias-variance tradeoff, classifiers, cross-validation, with additional readings in statistical learning and pattern recognition. Discover the motivation behind using data for medical di

0 views • 75 slides

Introduction to Machine Learning: Model Selection and Error Decomposition

This course covers topics such as model selection, error decomposition, bias-variance tradeoff, and classification using Naive Bayes. Students are required to implement linear regression, Naive Bayes, and logistic regression for homework. Important administrative information about deadlines, mid-ter

6 views • 42 slides

Maximum Likelihood Estimation in Machine Learning

In the realm of machine learning, Maximum Likelihood Estimation (MLE) plays a crucial role in estimating parameters by maximizing the likelihood of observed data. This process involves optimizing log-likelihood functions for better numerical stability and efficiency. MLE aims to find parameters that

6 views • 18 slides

Concept Development and Implementation of Ridge Regression in Genomic Selection

This presentation delves into the concept development and implementation of ridge regression in genomic selection, emphasizing the importance of avoiding overfitting by regulating parameters and distinguishing between fixed and random effects. The pioneers of ridge regression and Bayesian methods ar

1 view • 26 slides

Avoiding Model Selection Pitfalls in Regression Analysis

Explore the dangers of P-hacking and overfitting in multiple regression models, uncovering how sample size impacts statistical significance and the risks of drawing conclusions from weak correlations. Learn to navigate the complexities of model selection to ensure accurate results.

4 views • 10 slides

Neural Network Optimization and Loss Functions

Explore the concepts of neural network optimization, forward and back propagation, loss functions, overfitting, and solutions to parameter overfitting. Understand the importance of model order, training data, and testing loss for accurate machine learning system performance.

2 views • 10 slides

Biostatistics Predictor Selection and Prediction Error Analysis

Explore the methods for predictor selection in regression models based on inferential goals in biostatistics, focusing on prediction error measures for model validation and the bias-variance tradeoff to avoid overfitting. An example prediction tool development process is also highlighted, emphasizin

1 view • 63 slides

Tuning ANNs for Improved Performance

ANNs face challenges like over-parameterization and non-convex optimization. Learn how to optimize ANN models with considerations in starting values, avoiding overfitting, and selecting hidden units. Discover the impact of starting values, likelihood of overfitting, weight decay, and scaling inputs

2 views • 43 slides

Neural Networks in Machine Learning Organization

Explore the organization of neural networks in machine learning, covering topics like artificial neural networks, model evaluation, and support vector machines. Discover insights into supervised learning, overfitting, and deep learning, with added slides and lecture notes from Dr. Eick's presentatio

3 views • 30 slides

Exploring Theory of Learning in Machine Learning and AI

Understanding the theory of learning in machine learning and artificial intelligence involves formal frameworks and mathematical principles that explain how learning systems improve based on data, experience, or feedback. It encompasses components like tasks, environments, hypothesis spaces, data, a

6 views • 11 slides

Overfitting and Decision Trees in Data Mining

Explore the concepts of overfitting, classification errors, and decision trees in data mining through practical examples and visualizations. Learn about the impact of increasing the number of nodes in decision trees and how it affects model performance. Stay updated on the latest news related to dat

0 views • 19 slides

Avoiding Overfitting in Business Intelligence and Analytics

In the realm of Business Intelligence and Analytics, it is crucial to navigate the fine line between overfitting and generalization. Overfitting occurs when models capture noise in training data rather than underlying patterns. This session delves into concepts of generalization, overfitting, and st

1 view • 41 slides

Model Overfitting in Data Mining

Explore the concept of model overfitting in data mining, including classification errors, generalization errors, decision trees, and the impact of model complexity on training and test errors. Learn how to identify and address overfitting and underfitting issues for better model performance.

3 views • 17 slides

Preventing Overfitting in Neural Networks with Dropout Technique

Learn about the Dropout technique proposed by Srivastava et al. to prevent overfitting in neural networks. The technique involves randomly dropping units during training to prevent co-adaptation, leading to improved performance on various supervised learning tasks in vision, speech recognition, and

0 views • 21 slides

Understanding Overfitting in Machine Learning

Learn about overfitting in machine learning, how to detect it, prevent it, and the role of regularization in reducing generalization error. Explore techniques to avoid overfitting and improve model performance through proper training and testing sets.

0 views • 10 slides