Root Causes of Intrusion Detection False Negatives: Methodology and Case Study

This study, presented at IEEE MILCOM 2019 by Eric Ficke, Kristin M. Schweitzer, Raymond M. Bateman, and Shouhuai Xu, analyzes the root causes of intrusion detection false negatives. The authors propose a methodology for this analysis and validate it with a case study.

Uploaded on Sep 23, 2024

Presentation Transcript

Analyzing Root Causes of Intrusion Detection False Negatives: Methodology and Case Study
Eric Ficke (1), Kristin M. Schweitzer (2), Raymond M. Bateman (2), Shouhuai Xu (1)
IEEE MILCOM 2019

Motivating Scenarios
- Alice gets hit with a ransomware attack
- [139:1:1] (spp_sdf) SDF Combination Alert
- Alice calls her boss in a panic
- Alice has to undergo incident response training

Motivating Scenarios
- Bob gets an alert: [1:2008705:5] ET NETBIOS Microsoft Windows NETAPI Stack Overflow Inbound - MS08-067
- Bob has been attacked!
- Bob's servers are safe to serve his customers another day

So, what's the difference?
- Bob was lucky
  - Bob's IDS gave him better alerts
- Alice was unlucky
  - Alice's IDS did not help her solve the problem

The Point
- Why do IDSs not catch every attack?
- What causes incident response to be slow?
- What other weaknesses exist in IDSs?
- How do we improve future generations of IDS?

Outline
- Background
- Contributions
- Methodology
- Case Study
- Conclusion

Background - IDS Taxonomies
- Detection target
  - Malicious use detection
  - Anomaly detection
- Detection method
  - Signature matching (rule based)
  - Data mining
- Detection granularity
  - Packet level
  - Flow level

Contributions
- Methodology
  - Enables analysis of false negatives
  - Generalizable to other IDSs
- Case study
  - Validates methodology for malicious use detectors
  - Provides insight for next generation of IDSs

Methodology
[Diagram: components labeled IDS Design, Dataset Selection, Ground Truth, Packet Database, Flow Database, IDS Alerts, and Results Analysis; the legend distinguishes Existing Methods from Original Contributions.]

Methodology - Analysis Types
[Diagram: elements labeled Alerts, Attacks, Flow Database, True Positives, False Positives, False Negatives, Distribution Results, Development Results, and Design Results.]
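The comparison of alerts against flow-level ground truth can be sketched roughly as follows. This is a minimal illustration, not the authors' tooling: it assumes every alert has already been mapped to a flow identifier, and the names `Flow`, `classify_flows`, and `false_negative_rate` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    flow_id: int      # hypothetical key into the flow database
    malicious: bool   # flow-level ground truth tag


def classify_flows(flows, alerted_flow_ids):
    """Sort flows into TP/FP/FN/TN by comparing alerts with ground truth."""
    outcome = {"TP": [], "FP": [], "FN": [], "TN": []}
    for flow in flows:
        alerted = flow.flow_id in alerted_flow_ids
        if flow.malicious:
            key = "TP" if alerted else "FN"
        else:
            key = "FP" if alerted else "TN"
        outcome[key].append(flow.flow_id)
    return outcome


def false_negative_rate(outcome):
    """Fraction of malicious flows that raised no alert."""
    malicious = len(outcome["TP"]) + len(outcome["FN"])
    return len(outcome["FN"]) / malicious if malicious else 0.0


# Toy data: two malicious flows (one alerted), two benign flows (one alerted).
flows = [Flow(1, True), Flow(2, True), Flow(3, False), Flow(4, False)]
outcome = classify_flows(flows, alerted_flow_ids={1, 3})
print(outcome)
print(false_negative_rate(outcome))
```

Under this scheme, any alert on a malicious flow counts as a true positive, even when the alert message says nothing useful about the attack. Judging alert relevance is a separate, manual step.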

Case Study - Snort
- Malicious use
- Signature-based
- Packet level alerts
- Design Principles
  - Catch vulnerabilities, not exploits
  - Consider protocol oddities

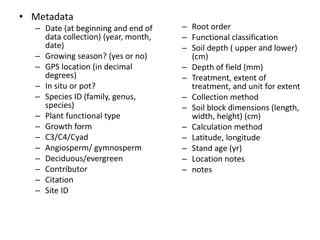

Case Study - Dataset
- Information Security Centre of Excellence (ISCX 2012)
- 9 distinct attack types
- Flow-level ground truth tags

Case Study - Attack Categorization
- Vulnerability-leveraging exploits
- Auxiliary attacks
- Brute force attacks

Case Study - Vulnerability-Leveraging Exploits
- Adobe printf buffer overflow
- MS08_067 SMB stack overflow
- SQL injection
- HTTP DoS attack (Slowloris)

Case Study - Auxiliary Attacks
- Reverse shell
- Network portscan (nmap)
- IRC command & control

Case Study - Brute Force Attacks
- HTTP DDoS attack
- SSH brute force login attempts

Case Study - Adobe printf Buffer Overflow
- Workstation 5 attacked via POP3 email server
- 422 malicious flows
- 10 alerts
  - 2x [139:1:1] (spp_sdf) SDF Combination Alert
  - 8x [129:12:1] Consecutive TCP small segments exceeding threshold
- Alternate ruleset (Emerging Threats) includes relevant signature
  - 2800385 - ETPRO WEB_CLIENT Adobe Reader and Acrobat util.printf Stack Buffer Overflow
- Insight 1: Multiple rulesets should be used to ensure full coverage of the attack landscape (rule distribution)
- Insight 2: Alert output must provide meaningful information to human operators (rule development)
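Snort alert identifiers take the form [gid:sid:rev] (generator ID, signature ID, revision). A small sketch of how alerts like the ones above could be parsed and tallied per signature is shown here. It assumes alert lines in the shortened form used on the slides, and the helper names `parse_alert` and `count_by_signature` are hypothetical.

```python
import re
from collections import Counter

# Matches "[gid:sid:rev] message" as the alerts appear on the slides.
ALERT_RE = re.compile(r"\[(\d+):(\d+):(\d+)\]\s*(.*)")


def parse_alert(line):
    """Return (gid, sid, rev, message), or None if the line is not an alert."""
    match = ALERT_RE.search(line)
    if match is None:
        return None
    gid, sid, rev, message = match.groups()
    return int(gid), int(sid), int(rev), message.strip()


def count_by_signature(lines):
    """Count alerts per (gid, sid) pair, ignoring unparseable lines."""
    parsed = (parse_alert(line) for line in lines)
    return Counter((p[0], p[1]) for p in parsed if p is not None)


# The ten alerts reported for the Adobe printf attack.
alerts = (
    ["[139:1:1] (spp_sdf) SDF Combination Alert"] * 2
    + ["[129:12:1] Consecutive TCP small segments exceeding threshold"] * 8
)
for (gid, sid), count in count_by_signature(alerts).items():
    print(f"{count}x gid={gid} sid={sid}")
```

A tally like this makes it easy to see that none of the ten alerts comes from a signature written for the Adobe printf vulnerability.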

Case Study - SMB Stack Overflow
- 0 alerts on relevant traffic
- Snort has 1 rule intended to detect MS08_067
  - 165 deleted (historic)
  - 3 disabled by default
- Suricata has 33 active rules for MS08_067
  - These correctly identify the attack
- Insight 3: Even within the same class of IDS, detection capabilities vary according to other factors (engine development)

Case Study - SQL Injection
- 62 malicious flows
- 4 True Positives ([129:12:1] Consecutive TCP small segments exceeding threshold)
  - Not representative of SQL injection
- SQL injection rules exist ([1:19439:8] SQL 1 = 1 possible sql injection)
  - However, 1=1 is exploit specific
  - Recall Snort design principle, "catch vulnerabilities, not exploits"
- Insight 4: IDS developers (and contributors) must follow design principles to prevent a false sense of security (engine/rule development)
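Why a 1=1 signature is exploit specific can be illustrated with a toy matcher. The regular expression below is a simplified stand-in chosen for illustration, not the content of Snort rule [1:19439:8], and the function name and payloads are hypothetical.

```python
import re

# Simplified stand-in for a "1 = 1" signature (assumption, not the real rule).
ONE_EQUALS_ONE = re.compile(r"1\s*=\s*1")


def naive_signature_matches(payload):
    """Return True if the payload contains the literal tautology 1=1."""
    return bool(ONE_EQUALS_ONE.search(payload))


payloads = [
    "id=5' OR 1=1 --",    # the exploit string the signature was written for
    "id=5' OR 2=2 --",    # same vulnerability, different tautology
    "id=5' OR 'a'='a",    # same vulnerability, string comparison
]
for payload in payloads:
    print(payload, "->", naive_signature_matches(payload))
```

Only the first payload matches, although all three exercise the same injection flaw. A rule keyed to one exploit string therefore leaves trivially modified variants undetected.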

Case Study - HTTP DoS (Slowloris)
- 1969 attack flows
- [139:1:1] (spp_sdf) SDF Combination Alert
  - Indicates sensitive data in traffic
  - Not indicative of attack
- No payload used in this version of Slowloris
- Insight 5: Signature-based IDSs are limited by their dependence on payload inspection (engine development)
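For contrast with payload inspection, here is a rough sketch of a flow-level check that needs no payload content. It is not part of the presented study: the `FlowRecord` fields, the function name, and the threshold of 100 are all assumptions chosen purely for illustration.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class FlowRecord:
    src_ip: str
    dst_port: int
    payload_bytes: int  # application payload carried by the flow


def sources_with_many_empty_flows(flows, port=80, threshold=100):
    """Return sources that opened at least `threshold` payload-free flows to `port`."""
    counts = Counter(
        flow.src_ip
        for flow in flows
        if flow.dst_port == port and flow.payload_bytes == 0
    )
    return {src for src, count in counts.items() if count >= threshold}


# Toy data using documentation addresses (192.0.2.0/24).
flows = [FlowRecord("192.0.2.10", 80, 0) for _ in range(150)]
flows += [FlowRecord("192.0.2.20", 80, 512) for _ in range(150)]
flows += [FlowRecord("192.0.2.30", 80, 0) for _ in range(5)]
print(sources_with_many_empty_flows(flows))
```

A heuristic like this operates at the flow level rather than the packet level, so it would complement a signature engine rather than replace it. A real threshold would need tuning against benign traffic.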

Principles
- IDS specifications should be clear
  - Type of vulnerability
  - Input expected
  - Design principles
- IDS output should be intuitive
  - Clear guidance for responders
  - Alerts should be given priority levels
  - Intrusion impact quantification