Dependability of College Student Ratings of Teaching and Learning Quality

This study investigates the dependability of college student ratings of teaching and learning quality collected with the Teaching and Learning Quality Scales (TALQ). The broader aim is to develop reliable items that are more predictive of student learning, so that course evaluations can better address accountability concerns in higher education. The scales focus on constructs such as Academic Learning Time (ALT) and the First Principles of Instruction. Previous TALQ studies found that students who agreed that they experienced ALT and that their instructors used First Principles of Instruction were more likely to be rated by their instructors as high masters of course objectives. The TALQ scales are grounded in existing theory and empirical research.

Presentation Transcript

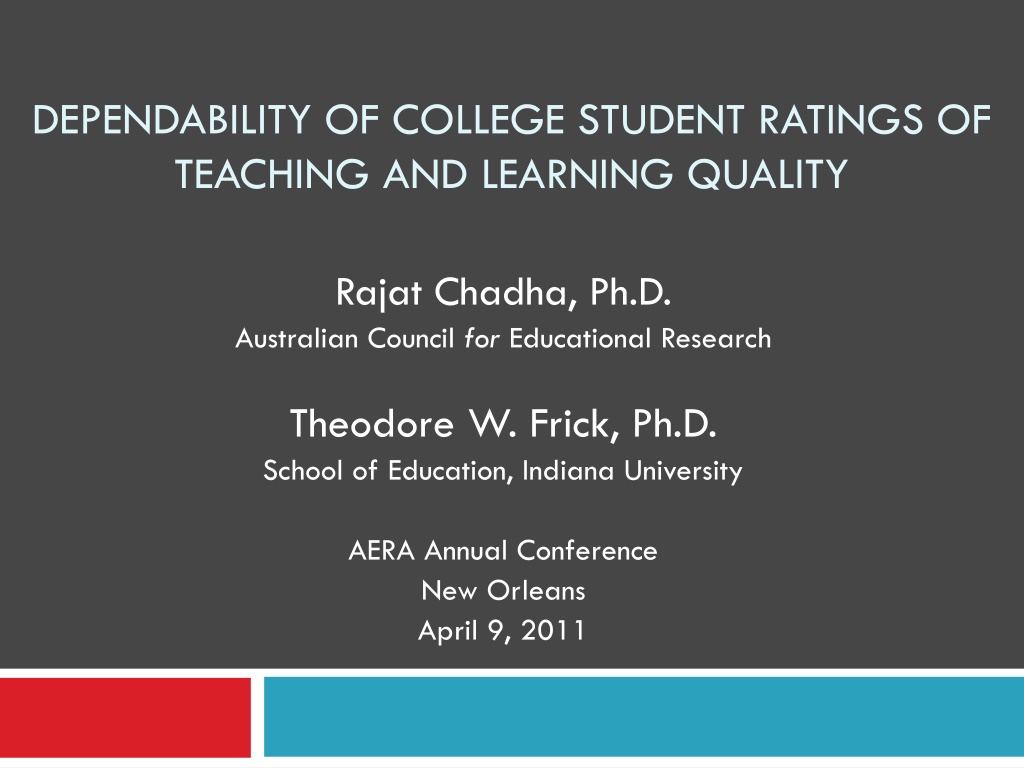

DEPENDABILITY OF COLLEGE STUDENT RATINGS OF TEACHING AND LEARNING QUALITY

Rajat Chadha, Ph.D., Australian Council for Educational Research; Theodore W. Frick, Ph.D., School of Education, Indiana University

AERA Annual Conference, New Orleans, April 9, 2011

Background

Problem: Beyond global ratings of quality, few items on course evaluation instruments are predictive of student learning achievement in higher education (Cohen, 1981; Kulik, 2001). If better items or scales can be developed that are reliable, valid, and more predictive of student learning, then the use of course evaluations may better address accountability concerns in higher education.

Background (cont'd)

Frick, Chadha, Watson, et al. have developed the Teaching and Learning Quality Scales (TALQ) to address this issue. To date, three empirical studies have been conducted on TALQ:

- Study 1: 140 students in 89 courses at multiple institutions
- Study 2: 193 students in 111 courses at multiple institutions
- Study 3: 464 students in 12 courses at one institution

Background (cont'd)

Results from these three TALQ studies revealed similar patterns of correlations among the scales. The major finding of Study 3: if students agreed that they experienced Academic Learning Time (ALT) and agreed that their instructors used First Principles of Instruction, then they were 5 times more likely to be high masters of course objectives according to independent instructor ratings.

Nine a priori TALQ scales, based on extant theory and empirical research:

- Academic Learning Time scale
- Learning Progress scale
- Student Satisfaction scale
- Global course and instructor quality items
- Authentic Problems scale (Principle 1, First Principles of Instruction)
- Activation scale (Principle 2, First Principles of Instruction)
- Demonstration scale (Principle 3, First Principles of Instruction)
- Application scale (Principle 4, First Principles of Instruction)
- Integration scale (Principle 5, First Principles of Instruction)

Purpose of present study

Since the TALQ appears promising for predicting student Academic Learning Time (ALT), and ALT is predictive of student learning achievement, we next wanted to study the dependability of the TALQ scales in depth in order to possibly:

- Shorten the instrument
- Improve problematic items

TALQ Course Evaluation Instrument

- 40 items, scrambled into a random order.
- No information about the scales was given to students.
- Six faculty members reviewed the TALQ and suggested changing "real-world problems" to "authentic problems".
- An explanation of authentic problems was added to the instrument:

Note: In the items below, authentic problems or authentic tasks are meaningful learning activities that are clearly relevant to you at this time, and which may be useful to you in the future (e.g., in your chosen profession or field of work, in your life, etc.).

Participants

- 8 volunteer professors teaching 12 courses in diverse subject areas: business; philosophy; history; kinesiology; social work; computer science; nursing; and health, physical education and recreation.
- The instrument was administered during the 13th to 15th week of the fall semester.
- 464 students: 52 freshmen, 104 sophomores, 115 juniors, 185 seniors.
- Class participation rates ranged from 49% to 100%.

Dependability (Reliability) of Student Ratings

- Classical Test Theory: Total Variance = True Score Variance + Error Source Variance. The resulting reliability coefficient applies only to relative decisions.
- Generalizability Theory: Total Variance = True Score Variance (Object of Measurement) + Error Source #1 Variance (Facet #1) + Error Source #2 Variance (Facet #2) + ... + Error Source #n Variance (Facet #n). The resulting coefficients apply to both relative and absolute decisions.
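As a compact restatement of these two partitions, here they are in the notation common in generalizability-theory texts (the symbols are an assumption, not taken from the slides): $\sigma^2_\tau$ is universe-score variance for the object of measurement, the $\sigma^2_k$ are the error components (facet main effects and their interactions), $\sigma^2_\delta$ is relative error (only the components that interact with the object of measurement), and $\sigma^2_\Delta$ is absolute error (all error components), each taken for the averaged scores used in the decision.

$$
\begin{aligned}
\text{CTT:}\quad & \sigma^2_X = \sigma^2_T + \sigma^2_E, & \rho_{XX'} &= \frac{\sigma^2_T}{\sigma^2_T + \sigma^2_E} \\
\text{GT:}\quad & \sigma^2_X = \sigma^2_\tau + \sum_{k} \sigma^2_k, & E\rho^2 &= \frac{\sigma^2_\tau}{\sigma^2_\tau + \sigma^2_\delta}, \qquad \Phi = \frac{\sigma^2_\tau}{\sigma^2_\tau + \sigma^2_\Delta}
\end{aligned}
$$

Because absolute error contains every relative-error component plus the components that do not involve the object of measurement (such as facet main effects), $\sigma^2_\delta \le \sigma^2_\Delta$ and hence $\Phi \le E\rho^2$.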

Generalizability Theory

- A measurement is a sample from the universe of admissible observations.
- Similar conditions are grouped to form a facet.
- Universe of generalization: the universe of conditions to which a decision maker wishes to generalize.
- Universe score: the mean of scores over the universe of generalization.
- Dependability: the accuracy of generalizing from an observed score to the universe score.
- The index of dependability (Φ coefficient) is analogous to the reliability coefficient of classical test theory.
- Decision studies estimate the number of conditions in each facet needed to yield dependable scores.
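To make the last point concrete, here is a minimal decision-study sketch. It assumes a design in which class means are the object of measurement and students are nested within classes and crossed with items, written (s:c) × i. The scale results report the index of dependability (Φ) for given numbers of items and students, which fits such a design, but the design itself, the class `VarianceComponents`, the helpers `phi` and `min_students`, and every numeric value below are hypothetical; they are not the TALQ estimates.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class VarianceComponents:
    """G-study variance components for an ASSUMED (s:c) x i design.

    c = class (object of measurement); s:c = students nested within classes;
    i = items. All values used below are hypothetical, not TALQ estimates.
    """
    c: float     # universe-score variance between classes
    s_c: float   # students within classes
    i: float     # item main effect
    ci: float    # class-by-item interaction
    si_c: float  # student-by-item within class, confounded with residual error


def absolute_error(vc: VarianceComponents, n_items: int, n_students: int) -> float:
    """Absolute error variance of a class mean over n_items and n_students."""
    return (
        vc.i / n_items
        + vc.ci / n_items
        + vc.s_c / n_students
        + vc.si_c / (n_items * n_students)
    )


def phi(vc: VarianceComponents, n_items: int, n_students: int) -> float:
    """Index of dependability for absolute decisions about class means."""
    return vc.c / (vc.c + absolute_error(vc, n_items, n_students))


def min_students(
    vc: VarianceComponents, n_items: int, target: float = 0.80, max_students: int = 200
) -> Optional[int]:
    """Smallest number of students per class whose Phi reaches target, else None."""
    for n_students in range(1, max_students + 1):
        if phi(vc, n_items, n_students) >= target:
            return n_students
    return None


# Hypothetical components chosen only to illustrate the D-study trade-offs.
EXAMPLE_VC = VarianceComponents(c=0.10, s_c=0.30, i=0.02, ci=0.01, si_c=0.40)

if __name__ == "__main__":
    for n_items in (3, 5):
        for n_students in (15, 25):
            print(f"{n_items} items, {n_students} students: "
                  f"Phi = {phi(EXAMPLE_VC, n_items, n_students):.3f}")
    print("Students needed for Phi >= 0.80 with 3 items:", min_students(EXAMPLE_VC, 3))
```

With these made-up components, Φ rises as either items or students are added, but adding students alone cannot push Φ past the ceiling set by the item-related terms ($\sigma^2_i/n_i + \sigma^2_{ci}/n_i$); in that case `min_students` returns `None`.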

Authentic Problems Scale: Φ = 0.794 (3 items, 15 students)

- Item 3: I performed a series of increasingly complex authentic problems in this course.
  Revised: I was expected to perform a series of increasingly complex authentic problems in this course.
- Item 17: My instructor directly compared problems or tasks that we did, so that I could see how they were similar or different.
- Item 22: I solved authentic problems or completed authentic tasks in this course.
  Revised: I was expected to solve authentic problems or to complete authentic tasks in this course.
- Item 27: In this course I solved a variety of authentic problems that were organized from simple to complex.
  Revised: In this course I was expected to solve a variety of authentic problems that were organized from simple to complex.
- Item 29: Assignments, tasks, or problems I did in this course are clearly relevant to my professional goals or field of work.
  Drop this item: it confounds the relevance of tasks to a student's professional goals with the instructor's selection of specific tasks.

Activation Scale: Φ = 0.875 (5 items, 25 students); Φ = 0.794 (3 items, 25 students)

- Item 9: I engaged in experiences that subsequently helped me learn ideas or skills that were new and unfamiliar to me.
- Item 26: My instructor provided a learning structure that helped me to mentally organize new knowledge and skills.
- Item 19: In this course I was able to recall, describe or apply my past experience so that I could connect it to what I was expected to learn.
- Item 35: In this course I was able to connect my past experience to new ideas and skills I was learning.
- Item 36: In this course I was not able to draw upon my past experience nor relate it to new things I was learning.

Comments: Items 19 and 35 are more similar to each other than to the other items on the scale, so use only one of them. Drop item 36, since it is negatively worded.

Demonstration Scale: Φ = 0.674 (5 items, 25 students)

- Item 5: My instructor demonstrated skills I was expected to learn in this course.
- Item 14: Media used in this course (texts, illustrations, graphics, audio, video, computers) were helpful in learning.
- Item 16: My instructor gave examples and counter-examples of concepts that I was expected to learn.
- Item 31: My instructor did not demonstrate skills I was expected to learn.
- Item 38: My instructor provided alternative ways of understanding the same ideas or skills.

Comments: Dependability is moderate because of low variability (SD = 0.27) across classes; within-class agreement is acceptable. Drop item 31, since it contains information covered in item 5 and is negatively worded.

Application Scale: Φ = 0.753 (3 items, 25 students)

- Item 7: My instructor detected and corrected errors I was making when solving problems, doing learning tasks or completing assignments.
- Item 32: I had opportunities to practice or try out what I learned in this course.
- Item 37: My course instructor gave me personal feedback or appropriate coaching on what I was trying to learn.
  Revised: My instructor gave me feedback on what I was trying to learn.

Comments: Low within-class agreement. Feedback and coaching do not necessarily mean the same thing, and the use of "personal" in item 37 may be problematic.

Integration Scale: Φ = 0.896 (5 items, 25 students); Φ = 0.854 (3 items, 25 students)

- Item 11: I had opportunities in this course to explore how I could personally use what I have learned.
- Item 24: I see how I can apply what I learned in this course to real life situations.
- Item 30: I was able to publicly demonstrate to others what I learned in this course.
- Item 33: In this course I was able to reflect on, discuss with others, and defend what I learned.
- Item 39: I do not expect to apply what I learned in this course to my chosen profession or field of work.

Comments: Items 11 and 24 are more similar to each other than to the other items on the scale; use only one of them, since the scale yields dependable scores with just 3 items. Drop item 39: it has low within-class agreement, and it may confound relevance to a student's professional goals with the instructor's selection of tasks.

Academic Learning Time Scale: Φ = 0.736 (5 items)

- Item 1: I did not do very well on most of the tasks in this course, according to my instructor's judgment of the quality of my work.
- Item 12: I frequently did very good work on projects, assignments, problems and/or learning activities for this course.
- Item 13: I spent a lot of time doing tasks, projects and/or assignments, and my instructor judged my work as high quality.
- Item 21: I put a great deal of effort and time into this course, and it has paid off: I believe that I have done very well overall.
- Item 25: I did a minimum amount of work and made little effort in this course.

Comments: No change. Alternatively, since ALT is a compound theoretical construct, break the compound item stems into simple ones and use a specialized scoring technique that combines item pairs, or generate additional items to improve dependability.

Learning Progress Scale: Φ = 0.952 (5 items); Φ = 0.887 (2 items)

- Item 20: Looking back to when this course began, I have made a big improvement in my skills and knowledge in this subject.
- Item 4: Compared to what I knew before I took this course, I learned a lot.
- Item 10: I learned a lot in this course.
- Item 23: I learned very little in this course.
- Item 28: I did not learn much as a result of taking this course.

Comments: Items 4 and 10 are more similar to each other than to the other items on the scale, so use either item 4 or item 10. Drop items 23 and 28, since they are negatively worded.

Global Instructor and Course Quality Scale: Φ = 0.765 (3 items, 15 students); Φ = 0.815 (3 items, 25 students)

- Item 8: Overall, I would rate the quality of this course as outstanding.
- Item 15: Overall, I would rate this instructor as outstanding.
- Item 34: Overall, I would recommend this instructor to others.

Comments: No change. Note that these items have traditionally been used at this university for many years for merit review, promotion and tenure decisions.

Student Satisfaction Scale: Φ = 0.883 (3 items, 25 students)

- Item 2: I am very satisfied with how my instructor taught this class.
- Item 18: This course was a waste of time and money.
- Item 6: I am dissatisfied with this course.
- Item 40: I am very satisfied with this course.

Comments: Items 6 and 40 are more similar to each other than to the other items; drop item 6, since it is negatively worded.

Final TALQ Instrument

| Scale | No. of items in final TALQ instrument | No. of items in original TALQ instrument |
|---|---|---|
| Authentic Problems | 4 | 5 |
| Activation | 3 | 5 |
| Demonstration | 4 | 5 |
| Application | 3 | 3 |
| Integration | 3 | 5 |
| Academic Learning Time | 5 | 5 |
| Learning Progress | 2 | 5 |
| Global Instructor and Course Quality | 3 | 3 |
| Student Satisfaction | 3 | 4 |
| Total | 30 | 40 |

Implications

- First Principles of Instruction, synthesized from instructional theories and models in the literature, are empirically related to Academic Learning Time.
- Academic Learning Time is empirically related to student learning achievement.
- ALT is under the control of students, and thus instructors should not be held accountable for it.
- However, instructors could be held accountable for using First Principles of Instruction (Frick et al., 2010):
  - If students agreed that First Principles occurred, they were 3 times more likely to agree that they experienced ALT.
  - If students agreed that both First Principles and ALT occurred, they were 5 times more likely to be independently rated as HIGH masters of course objectives by their instructors.
  - If students did NOT agree that both First Principles and ALT occurred, they were 26 times more likely to be independently rated as LOW masters of course objectives by their instructors.
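Statements such as "5 times more likely" compare the probability of an outcome between two groups of students. The sketch below shows two common ways to compute such a comparison from a 2 × 2 table of counts: a ratio of conditional probabilities and an odds ratio. The class `TwoByTwo`, the helper names, and the counts are hypothetical, and the slides do not say which statistic Frick et al. (2010) used.

```python
from typing import NamedTuple


class TwoByTwo(NamedTuple):
    """Hypothetical counts cross-classifying students by a condition and an outcome.

    Example reading: condition = student agreed that both First Principles and
    ALT occurred; outcome = instructor rated the student a HIGH master.
    """
    cond_and_outcome: int
    cond_no_outcome: int
    no_cond_and_outcome: int
    no_cond_no_outcome: int


def probability_ratio(t: TwoByTwo) -> float:
    """P(outcome | condition) / P(outcome | no condition)."""
    p_cond = t.cond_and_outcome / (t.cond_and_outcome + t.cond_no_outcome)
    p_no_cond = t.no_cond_and_outcome / (t.no_cond_and_outcome + t.no_cond_no_outcome)
    return p_cond / p_no_cond


def odds_ratio(t: TwoByTwo) -> float:
    """Odds of the outcome given the condition divided by the odds without it."""
    return (t.cond_and_outcome * t.no_cond_no_outcome) / (
        t.cond_no_outcome * t.no_cond_and_outcome
    )


if __name__ == "__main__":
    counts = TwoByTwo(40, 60, 10, 90)  # made-up numbers, not study data
    print(round(probability_ratio(counts), 2))  # 4.0
    print(round(odds_ratio(counts), 2))         # 6.0
```

The two statistics diverge when the outcome is common (4.0 versus 6.0 for these made-up counts), so knowing which one underlies a reported "n times more likely" matters when comparing results across studies.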