Regression Diagnostics for Model Evaluation

Regression diagnostics involve analyzing model errors and residuals (including standardized residuals), detecting outlying observations, and identifying influential cases in order to assess the quality of a regression model. This process helps in understanding the accuracy of the model's predictions and in identifying potential issues that may affect its performance.

- Regression Diagnostics

- Model Evaluation

- Outlying Observations

- Standardized Residuals

- Influential Cases


Presentation Transcript

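Outlying Y Observations: Standardized Residuals

Model errors (unobserved):

$$Y_i = \beta_0 + \beta_1 X_{i1} + \cdots + \beta_p X_{ip} + \varepsilon_i, \qquad E\{\varepsilon_i\} = 0, \quad \sigma^2\{\varepsilon_i\} = \sigma^2, \quad \sigma\{\varepsilon_i, \varepsilon_j\} = 0 \ (i \neq j)$$

Residuals (observed):

$$e_i = Y_i - \hat{Y}_i = Y_i - \left(\hat{\beta}_0 + \hat{\beta}_1 X_{i1} + \cdots + \hat{\beta}_p X_{ip}\right)$$

$$E\{e_i\} = 0, \qquad \sigma^2\{e_i\} = \sigma^2 (1 - v_{ii}), \qquad \sigma\{e_i, e_j\} = -v_{ij}\,\sigma^2 \ (i \neq j)$$

where $v_{ij}$ is the $(i,j)$ element of the $n \times n$ matrix $\mathbf{P} = \mathbf{X}(\mathbf{X}'\mathbf{X})^{-1}\mathbf{X}'$. The estimated variances and covariances are

$$s^2\{e_i\} = MSE\,(1 - v_{ii}), \qquad s\{e_i, e_j\} = -v_{ij}\,MSE$$

Standardized residual (residual divided by its estimated standard error):

$$r_i = \frac{e_i}{s\{e_i\}} = \frac{e_i}{\sqrt{MSE\,(1 - v_{ii})}}$$

Studentized residual (the estimate of $\sigma$ does not involve the current observation):

$$r_i^* = \frac{e_i}{\sqrt{MSE_{(i)}\,(1 - v_{ii})}}$$

where $MSE_{(i)}$ is from the regression on the remaining $n - 1$ cases. Under normality of the errors, $r_i^* \sim t(n - p' - 1)$, where $p' = p + 1$ is the number of regression parameters.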
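A minimal NumPy sketch of these quantities, assuming the design matrix `X` already contains a leading column of ones (so its number of columns is $p'$). The helper name `residual_diagnostics` and the simulated data are illustrative only; $MSE_{(i)}$ is obtained here by literally refitting without case $i$.

```python
import numpy as np

def residual_diagnostics(X, y):
    """Hypothetical helper: residuals e, leverages v_ii, standardized r, studentized r*.

    Assumes X is the n x p' design matrix whose first column is ones.
    """
    n, p_prime = X.shape
    P = X @ np.linalg.inv(X.T @ X) @ X.T        # hat matrix P = X (X'X)^{-1} X'
    v = np.diag(P)                               # leverages v_ii
    e = y - P @ y                                # residuals e_i = Y_i - Yhat_i
    mse = e @ e / (n - p_prime)
    r = e / np.sqrt(mse * (1 - v))               # standardized residuals
    mse_del = np.empty(n)                        # MSE_(i): fit without case i
    for i in range(n):
        keep = np.arange(n) != i
        b_i, *_ = np.linalg.lstsq(X[keep], y[keep], rcond=None)
        res = y[keep] - X[keep] @ b_i
        mse_del[i] = res @ res / (n - 1 - p_prime)
    r_star = e / np.sqrt(mse_del * (1 - v))      # studentized residuals
    return e, v, r, r_star

# Simulated example data (illustrative only): intercept plus 2 predictors.
rng = np.random.default_rng(0)
n = 20
X = np.column_stack([np.ones(n), rng.normal(size=(n, 2))])
y = X @ np.array([1.0, 2.0, -1.0]) + rng.normal(size=n)
e, v, r, r_star = residual_diagnostics(X, y)
```

Because an intercept is included, the residuals sum to zero and the leverages sum to $p'$. The studentized residual can also be recovered from $r_i$ alone via $r_i^* = r_i\sqrt{(n - p' - 1)/(n - p' - r_i^2)}$, which follows from the identity relating $MSE$ and $MSE_{(i)}$.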

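Outlying Y Observations: Studentized Deleted Residuals

Deleted residual (observed value minus fitted value when the regression is fit on the other $n - 1$ cases):

$$d_i = Y_i - \hat{Y}_{i(i)} = Y_i - \left(\hat{\beta}_{0(i)} + \hat{\beta}_{1(i)} X_{i1} + \cdots + \hat{\beta}_{p(i)} X_{ip}\right)$$

where $\hat{\beta}_{k(i)}$ is the regression coefficient of $X_k$ when case $i$ is deleted.

Studentized deleted residual (makes use of the predicted response from the regression based on the other $n - 1$ cases):

$$t_i = \frac{d_i}{s\{d_i\}} = r_i^* \sim t(n - p' - 1)$$

$$s^2\{d_i\} = s^2\{\text{pred}\} = MSE_{(i)}\left(1 + \mathbf{X}_i'(\mathbf{X}_{(i)}'\mathbf{X}_{(i)})^{-1}\mathbf{X}_i\right), \qquad \mathbf{X}_i' = (1, X_{i1}, \ldots, X_{ip})$$

Note:

$$d_i = \frac{e_i}{1 - v_{ii}}, \qquad s^2\{d_i\} = \frac{MSE_{(i)}}{1 - v_{ii}}, \qquad (n - p')\,MSE = (n - p' - 1)\,MSE_{(i)} + \frac{e_i^2}{1 - v_{ii}}$$

Computed without refitting the regressions:

$$t_i = r_i^* = e_i\left[\frac{n - p' - 1}{SSE\,(1 - v_{ii}) - e_i^2}\right]^{1/2}$$

Test for outliers (Bonferroni adjustment): case $i$ is an outlier if $|t_i| > t\left(1 - \frac{\alpha}{2n};\, n - p' - 1\right)$.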
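The sketch below checks the two shortcuts numerically: it computes $d_i$ and $MSE_{(i)}$ by actually refitting without each case and compares them with $e_i/(1 - v_{ii})$ and with the no-refit formula for $t_i$. The helper names `fit_pieces` and `deleted_by_refit` and the simulated data are hypothetical.

```python
import numpy as np

def fit_pieces(X, y):
    """Hypothetical helper: full-data residuals e_i, leverages v_ii and SSE."""
    XtX_inv = np.linalg.inv(X.T @ X)
    v = np.einsum("ij,jk,ik->i", X, XtX_inv, X)   # v_ii = x_i'(X'X)^{-1} x_i
    e = y - X @ (XtX_inv @ X.T @ y)
    return e, v, e @ e

def deleted_by_refit(X, y):
    """Hypothetical helper: d_i and MSE_(i) from n separate leave-one-out fits."""
    n, p_prime = X.shape
    d, mse_del = np.empty(n), np.empty(n)
    for i in range(n):
        keep = np.arange(n) != i
        b_i, *_ = np.linalg.lstsq(X[keep], y[keep], rcond=None)
        d[i] = y[i] - X[i] @ b_i                  # d_i = Y_i - Yhat_i(i)
        res = y[keep] - X[keep] @ b_i
        mse_del[i] = res @ res / (n - 1 - p_prime)
    return d, mse_del

rng = np.random.default_rng(1)
n = 25
X = np.column_stack([np.ones(n), rng.normal(size=(n, 2))])
y = X @ np.array([0.5, 1.5, -2.0]) + rng.normal(size=n)
p_prime = X.shape[1]

e, v, sse = fit_pieces(X, y)
d, mse_del = deleted_by_refit(X, y)
t_refit = d / np.sqrt(mse_del / (1 - v))                           # d_i / s{d_i}
t_short = e * np.sqrt((n - p_prime - 1) / (sse * (1 - v) - e**2))  # no refitting
```

If SciPy is installed, the Bonferroni critical value could be obtained with `scipy.stats.t.ppf(1 - alpha / (2 * n), n - p_prime - 1)` and compared with `np.abs(t_short)`.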

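Outlying X-Cases: Hat Matrix Leverage Values

$$\mathbf{P} = \mathbf{X}(\mathbf{X}'\mathbf{X})^{-1}\mathbf{X}' = \begin{bmatrix} v_{11} & v_{12} & \cdots & v_{1n} \\ v_{21} & v_{22} & \cdots & v_{2n} \\ \vdots & \vdots & \ddots & \vdots \\ v_{n1} & v_{n2} & \cdots & v_{nn} \end{bmatrix}, \qquad v_{ij} = \mathbf{x}_i'(\mathbf{X}'\mathbf{X})^{-1}\mathbf{x}_j, \qquad \mathbf{x}_i' = (1, X_{i1}, \ldots, X_{ip})$$

Notes:

$$0 \le v_{ii} \le 1, \qquad \sum_{i=1}^{n} v_{ii} = \operatorname{trace}(\mathbf{P}) = \operatorname{trace}\left(\mathbf{X}(\mathbf{X}'\mathbf{X})^{-1}\mathbf{X}'\right) = \operatorname{trace}\left((\mathbf{X}'\mathbf{X})^{-1}\mathbf{X}'\mathbf{X}\right) = \operatorname{trace}(\mathbf{I}_{p'}) = p'$$

Cases with X-levels close to the center of the sampled X-levels will have small leverages. Cases with extreme levels have large leverages and have the potential to pull the regression equation toward their observed Y-values. Large leverage values are those with $v_{ii} > 2p'/n$ (twice the mean leverage $p'/n$).

$$\hat{\mathbf{Y}} = \mathbf{P}\mathbf{Y}, \qquad \hat{Y}_i = \sum_{j=1}^{n} v_{ij} Y_j = v_{ii} Y_i + \sum_{j \neq i} v_{ij} Y_j$$

Leverage values for new observations:

$$v_{new,new} = \mathbf{X}_{new}'(\mathbf{X}'\mathbf{X})^{-1}\mathbf{X}_{new}$$

New cases with leverage values larger than those in the original data set are extrapolations.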
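A minimal sketch of the leverage rules on a made-up simple regression ($p' = 2$) where one X-value is far from the rest. The helper names `leverages` and `new_leverage` are hypothetical; the diagonal of $\mathbf{P}$ is computed row by row without forming the full $n \times n$ matrix.

```python
import numpy as np

def leverages(X):
    """Hypothetical helper: v_ii = x_i' (X'X)^{-1} x_i for every row of X."""
    XtX_inv = np.linalg.inv(X.T @ X)
    return np.einsum("ij,jk,ik->i", X, XtX_inv, X)

def new_leverage(X, x_new):
    """Hypothetical helper: v_new,new = X_new' (X'X)^{-1} X_new."""
    return x_new @ np.linalg.inv(X.T @ X) @ x_new

# Made-up data: nine evenly spaced X-levels and one extreme level (30).
x = np.array([1, 2, 3, 4, 5, 6, 7, 8, 9, 30], dtype=float)
X = np.column_stack([np.ones_like(x), x])
n, p_prime = X.shape

v = leverages(X)
high = np.flatnonzero(v > 2 * p_prime / n)   # rule of thumb: v_ii > 2p'/n

# A new case is an extrapolation if its leverage exceeds every v_ii in the data.
inside = new_leverage(X, np.array([1.0, 5.0]))
outside = new_leverage(X, np.array([1.0, 50.0]))
```

Here only the case with $X = 30$ exceeds $2p'/n = 0.4$, and the new case at $X = 50$ has a leverage above the largest $v_{ii}$ (leverages of new cases are not bounded by 1).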

Identifying Influential Cases I: Fitted Values

Influential cases in terms of their own fitted values (DFFITS):

$$DFFITS_i = \frac{\hat{Y}_i - \hat{Y}_{i(i)}}{\sqrt{MSE_{(i)}\,v_{ii}}}$$

= number of standard errors by which the case's own fitted value is shifted when the case is included versus excluded.

Computational formula (avoids fitting all deleted models):

$$DFFITS_i = e_i\left[\frac{n - p' - 1}{SSE\,(1 - v_{ii}) - e_i^2}\right]^{1/2}\left(\frac{v_{ii}}{1 - v_{ii}}\right)^{1/2} = r_i^*\left(\frac{v_{ii}}{1 - v_{ii}}\right)^{1/2}$$

Problem cases: $|DFFITS_i| > 1$ for small to medium sized data sets, $|DFFITS_i| > 2\sqrt{p'/n}$ for larger ones.

Influential cases in terms of all fitted values (Cook's distance):

$$D_i = \frac{\sum_{j=1}^{n}\left(\hat{Y}_j - \hat{Y}_{j(i)}\right)^2}{p'\,MSE} = \frac{(\hat{\mathbf{Y}} - \hat{\mathbf{Y}}_{(i)})'(\hat{\mathbf{Y}} - \hat{\mathbf{Y}}_{(i)})}{p'\,MSE} = \frac{r_i^2}{p'}\,\frac{v_{ii}}{1 - v_{ii}}$$

Problem cases: $D_i > F(0.50;\, p',\, n - p')$.
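To confirm that the computational formulas agree with the definitions, the sketch computes DFFITS and Cook's distance both ways on simulated data with one deliberately planted influential case (index 0). The helper names `influence_shortcut` and `influence_refit` are hypothetical.

```python
import numpy as np

def influence_shortcut(X, y):
    """Hypothetical helper: DFFITS and Cook's D from one fit (no refitting)."""
    n, p_prime = X.shape
    XtX_inv = np.linalg.inv(X.T @ X)
    v = np.einsum("ij,jk,ik->i", X, XtX_inv, X)
    e = y - X @ (XtX_inv @ X.T @ y)
    sse = e @ e
    r = e / np.sqrt(sse / (n - p_prime) * (1 - v))              # standardized
    r_star = e * np.sqrt((n - p_prime - 1) / (sse * (1 - v) - e**2))
    dffits = r_star * np.sqrt(v / (1 - v))
    cooks_d = r**2 / p_prime * v / (1 - v)
    return dffits, cooks_d

def influence_refit(X, y):
    """Hypothetical helper: DFFITS and Cook's D from their definitions."""
    n, p_prime = X.shape
    b, *_ = np.linalg.lstsq(X, y, rcond=None)
    yhat = X @ b
    mse = np.sum((y - yhat) ** 2) / (n - p_prime)
    v = np.einsum("ij,jk,ik->i", X, np.linalg.inv(X.T @ X), X)
    dffits, cooks_d = np.empty(n), np.empty(n)
    for i in range(n):
        keep = np.arange(n) != i
        b_i, *_ = np.linalg.lstsq(X[keep], y[keep], rcond=None)
        yhat_i = X @ b_i                       # all n fitted values without case i
        res = y[keep] - yhat_i[keep]
        mse_i = res @ res / (n - 1 - p_prime)
        dffits[i] = (yhat[i] - yhat_i[i]) / np.sqrt(mse_i * v[i])
        cooks_d[i] = np.sum((yhat - yhat_i) ** 2) / (p_prime * mse)
    return dffits, cooks_d

rng = np.random.default_rng(5)
n = 20
x = rng.normal(size=n)
x[0] = 8.0                                     # planted high-leverage case
y = 1 + 2 * x + rng.normal(size=n)
y[0] -= 15.0                                   # ...that is also far off the line
X = np.column_stack([np.ones(n), x])

dffits_s, cooks_s = influence_shortcut(X, y)
dffits_r, cooks_r = influence_refit(X, y)
```

With SciPy available, the Cook's distance cutoff $F(0.50;\, p',\, n - p')$ could be computed as `scipy.stats.f.ppf(0.5, p_prime, n - p_prime)`.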

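Influential Cases II: Regression Coefficients

Influential cases in terms of regression coefficients (one value for each case and each coefficient, $k = 0, 1, \ldots, p$):

$$DFBETAS_{k(i)} = \frac{\hat{\beta}_k - \hat{\beta}_{k(i)}}{\sqrt{MSE_{(i)}\,c_{kk}}}$$

= number of standard errors by which coefficient $\hat{\beta}_k$ is shifted when case $i$ is included versus excluded, where $c_{kk}$ is the $k$th diagonal element of

$$(\mathbf{X}'\mathbf{X})^{-1} = \begin{bmatrix} c_{00} & c_{01} & \cdots & c_{0p} \\ c_{10} & c_{11} & \cdots & c_{1p} \\ \vdots & \vdots & \ddots & \vdots \\ c_{p0} & c_{p1} & \cdots & c_{pp} \end{bmatrix}$$

Problem cases: $|DFBETAS_{k(i)}| > 1$ for small to medium sized data sets, $|DFBETAS_{k(i)}| > 2/\sqrt{n}$ for larger ones.

Influential cases in terms of the vector of regression coefficients (Cook's distance):

$$D_i = \frac{(\hat{\mathbf{Y}} - \hat{\mathbf{Y}}_{(i)})'(\hat{\mathbf{Y}} - \hat{\mathbf{Y}}_{(i)})}{p'\,MSE} = \frac{(\hat{\boldsymbol{\beta}} - \hat{\boldsymbol{\beta}}_{(i)})'\,\mathbf{X}'\mathbf{X}\,(\hat{\boldsymbol{\beta}} - \hat{\boldsymbol{\beta}}_{(i)})}{p'\,MSE} = \frac{r_i^2}{p'}\,\frac{v_{ii}}{1 - v_{ii}}$$

Problem cases: $D_i > F(0.50;\, p',\, n - p')$.

When some cases are highly influential, check whether they affect the inferences drawn from the model.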
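A minimal sketch of DFBETAS and of the coefficient-vector form of Cook's distance, computed by refitting without each case and then compared against the one-fit expression $\frac{r_i^2}{p'}\frac{v_{ii}}{1 - v_{ii}}$. The helper name `dfbetas_and_cooks` and the planted influential case at index 0 are illustrative assumptions.

```python
import numpy as np

def dfbetas_and_cooks(X, y):
    """Hypothetical helper: n x p' DFBETAS matrix and Cook's D via coefficient shifts."""
    n, p_prime = X.shape
    XtX = X.T @ X
    c = np.diag(np.linalg.inv(XtX))            # c_kk, k = 0, ..., p
    b, *_ = np.linalg.lstsq(X, y, rcond=None)
    mse = np.sum((y - X @ b) ** 2) / (n - p_prime)
    dfbetas = np.empty((n, p_prime))
    cooks_d = np.empty(n)
    for i in range(n):
        keep = np.arange(n) != i
        b_i, *_ = np.linalg.lstsq(X[keep], y[keep], rcond=None)
        res = y[keep] - X[keep] @ b_i
        mse_i = res @ res / (n - 1 - p_prime)  # MSE_(i)
        diff = b - b_i                         # beta_hat - beta_hat_(i)
        dfbetas[i] = diff / np.sqrt(mse_i * c)
        cooks_d[i] = diff @ XtX @ diff / (p_prime * mse)
    return dfbetas, cooks_d

rng = np.random.default_rng(5)
n = 20
x = rng.normal(size=n)
x[0] = 8.0                                     # planted influential case
y = 1 + 2 * x + rng.normal(size=n)
y[0] -= 15.0
X = np.column_stack([np.ones(n), x])
p_prime = X.shape[1]

dfbetas, cooks_d = dfbetas_and_cooks(X, y)

# One-fit version of Cook's D for comparison: r_i^2 / p' * v_ii / (1 - v_ii).
v = np.einsum("ij,jk,ik->i", X, np.linalg.inv(X.T @ X), X)
b, *_ = np.linalg.lstsq(X, y, rcond=None)
e = y - X @ b
r = e / np.sqrt(e @ e / (n - p_prime) * (1 - v))
cooks_short = r**2 / p_prime * v / (1 - v)
```

Column `k` of `dfbetas` holds $DFBETAS_{k(i)}$ for all cases, so the slope column (`k = 1`) can be screened against the $1$ or $2/\sqrt{n}$ cutoffs.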

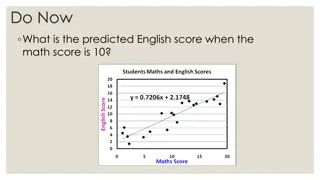

Standardized Regression Model - I

- Useful in reducing round-off errors in computing $(\mathbf{X}'\mathbf{X})^{-1}$
- Makes it easier to compare the magnitudes of the effects of predictors measured on different scales
- Coefficients represent the change in $Y$ (in standard deviation units) as each predictor increases by 1 SD, holding all others constant
- Since all variables are centered, there is no intercept term

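Standardized Regression Model - II

Standardized random variables (scaled to have mean 0 and SD 1):

$$\frac{Y_i - \bar{Y}}{s_Y}, \qquad \frac{X_{ik} - \bar{X}_k}{s_k}, \qquad s_Y = \sqrt{\frac{\sum_i (Y_i - \bar{Y})^2}{n - 1}}, \qquad s_k = \sqrt{\frac{\sum_i (X_{ik} - \bar{X}_k)^2}{n - 1}}, \qquad k = 1, \ldots, p$$

Correlation transformation:

$$Y_i^* = \frac{1}{\sqrt{n - 1}}\left(\frac{Y_i - \bar{Y}}{s_Y}\right), \qquad X_{ik}^* = \frac{1}{\sqrt{n - 1}}\left(\frac{X_{ik} - \bar{X}_k}{s_k}\right), \qquad k = 1, \ldots, p$$

Standardized regression model:

$$Y_i^* = \beta_1^* X_{i1}^* + \cdots + \beta_p^* X_{ip}^* + \varepsilon_i^*$$

Note:

$$\beta_k = \frac{s_Y}{s_k}\,\beta_k^* \quad (k = 1, \ldots, p), \qquad \beta_0 = \bar{Y} - \beta_1 \bar{X}_1 - \cdots - \beta_p \bar{X}_p$$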
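A minimal sketch of the correlation transformation, assuming `X` holds only the $p$ predictors (no column of ones). The helper name `correlation_transform` and the deliberately mismatched predictor scales are illustrative; `np.corrcoef` is used only to check that the transformed cross-products are correlations.

```python
import numpy as np

def correlation_transform(X, y):
    """Hypothetical helper: return X* and Y* as defined by the correlation transformation."""
    n = len(y)
    s_y = y.std(ddof=1)                       # s_Y with n - 1 denominator
    s_x = X.std(axis=0, ddof=1)               # s_k for each predictor
    y_star = (y - y.mean()) / (np.sqrt(n - 1) * s_y)
    X_star = (X - X.mean(axis=0)) / (np.sqrt(n - 1) * s_x)
    return X_star, y_star

# Illustrative data: three predictors on very different measurement scales.
rng = np.random.default_rng(2)
n = 30
X = rng.normal(size=(n, 3)) * np.array([1.0, 10.0, 100.0]) + np.array([5.0, -3.0, 200.0])
y = 2 + X @ np.array([1.0, 0.1, 0.01]) + rng.normal(size=n)

X_star, y_star = correlation_transform(X, y)
r_xx = X_star.T @ X_star                      # should equal the correlation matrix of X
r_yx = X_star.T @ y_star                      # should equal the correlations of Y with each X_k
```

After the transformation every column has mean 0 and unit sum of squares, which is why no intercept is needed in the standardized model.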

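Standardized Regression Model - III

Standardized regression model: $Y_i^* = \beta_1^* X_{i1}^* + \cdots + \beta_p^* X_{ip}^* + \varepsilon_i^*$, with

$$\mathbf{X}^*_{n \times p} = \begin{bmatrix} X_{11}^* & \cdots & X_{1p}^* \\ \vdots & \ddots & \vdots \\ X_{n1}^* & \cdots & X_{np}^* \end{bmatrix}, \qquad \mathbf{Y}^*_{n \times 1} = \begin{bmatrix} Y_1^* \\ \vdots \\ Y_n^* \end{bmatrix}$$

$$\mathbf{X}^{*\prime}\mathbf{X}^* = \mathbf{r}_{XX} = \begin{bmatrix} 1 & r_{12} & \cdots & r_{1p} \\ r_{21} & 1 & \cdots & r_{2p} \\ \vdots & \vdots & \ddots & \vdots \\ r_{p1} & r_{p2} & \cdots & 1 \end{bmatrix}_{p \times p}, \qquad \mathbf{X}^{*\prime}\mathbf{Y}^* = \mathbf{r}_{YX} = \begin{bmatrix} r_{Y1} \\ \vdots \\ r_{Yp} \end{bmatrix}_{p \times 1}$$

This results from:

$$\sum_i (X_{ik}^*)^2 = \frac{\sum_i (X_{ik} - \bar{X}_k)^2}{(n - 1)\,s_k^2} = 1$$

$$\sum_i X_{ik}^* X_{ik'}^* = \frac{\sum_i (X_{ik} - \bar{X}_k)(X_{ik'} - \bar{X}_{k'})}{(n - 1)\,s_k\,s_{k'}} = r_{kk'}$$

$$\sum_i Y_i^* X_{ik}^* = \frac{\sum_i (Y_i - \bar{Y})(X_{ik} - \bar{X}_k)}{(n - 1)\,s_Y\,s_k} = r_{Yk}$$

Least squares estimates:

$$\mathbf{X}^{*\prime}\mathbf{X}^*\,\mathbf{b}^* = \mathbf{X}^{*\prime}\mathbf{Y}^* \;\Rightarrow\; \mathbf{b}^* = (\mathbf{X}^{*\prime}\mathbf{X}^*)^{-1}\mathbf{X}^{*\prime}\mathbf{Y}^* = \mathbf{r}_{XX}^{-1}\,\mathbf{r}_{YX}$$

$$b_k = \frac{s_Y}{s_k}\,b_k^* \quad (k = 1, \ldots, p), \qquad b_0 = \bar{Y} - b_1 \bar{X}_1 - \cdots - b_p \bar{X}_p$$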
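The sketch below solves $\mathbf{b}^* = \mathbf{r}_{XX}^{-1}\mathbf{r}_{YX}$, back-transforms to the original units, and compares the result with an ordinary least squares fit that includes an intercept. The helper name `standardized_fit` and the simulated data are hypothetical; `np.linalg.solve` is used instead of forming the inverse explicitly.

```python
import numpy as np

def standardized_fit(X, y):
    """Hypothetical helper: standardized coefficients b*, plus b0 and b in original units."""
    n = len(y)
    s_y, s_x = y.std(ddof=1), X.std(axis=0, ddof=1)
    y_star = (y - y.mean()) / (np.sqrt(n - 1) * s_y)
    X_star = (X - X.mean(axis=0)) / (np.sqrt(n - 1) * s_x)
    r_xx = X_star.T @ X_star                  # p x p correlation matrix
    r_yx = X_star.T @ y_star                  # p x 1 correlations with Y
    b_star = np.linalg.solve(r_xx, r_yx)      # b* = r_XX^{-1} r_YX
    b = (s_y / s_x) * b_star                  # b_k = (s_Y / s_k) b_k*
    b0 = y.mean() - b @ X.mean(axis=0)        # b0 = Ybar - sum_k b_k Xbar_k
    return b_star, b0, b

rng = np.random.default_rng(6)
n = 40
X = rng.normal(size=(n, 3)) * np.array([1.0, 10.0, 100.0])
y = 4 + X @ np.array([2.0, -0.3, 0.05]) + rng.normal(size=n)

b_star, b0, b = standardized_fit(X, y)
b_ols, *_ = np.linalg.lstsq(np.column_stack([np.ones(n), X]), y, rcond=None)
```

The back-transformed coefficients reproduce the ordinary fit, while `b_star` puts all three predictors on a common SD scale despite their very different units.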

Multicollinearity

Consider a model with 2 predictors (this generalizes to any number of predictors):

$$Y_i = \beta_0 + \beta_1 X_{i1} + \beta_2 X_{i2} + \varepsilon_i$$

When $X_1$ and $X_2$ are uncorrelated, the regression coefficients $b_1$ and $b_2$ are the same whether we fit simple regressions or a multiple regression, and

$$SSR(X_1) = SSR(X_1 \mid X_2), \qquad SSR(X_2) = SSR(X_2 \mid X_1)$$

When $X_1$ and $X_2$ are highly correlated, their regression coefficients become unstable and their standard errors become larger (smaller t-statistics, wider CIs), which can lead to strange inferences when comparing the simple and partial effects of each predictor. Estimated mean responses and predicted values are not much affected, as long as they are made within the range of the observed data.
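A small numerical check of the two-predictor statements, assuming a made-up balanced design in which `x1` and `x2` are centered and orthogonal (hence uncorrelated), and a second predictor `x2_corr` that is nearly collinear with `x1`. The helpers `ssr` and `extra_ss` are hypothetical; $SSR(X_1 \mid X_2)$ is computed as $SSR(X_1, X_2) - SSR(X_2)$.

```python
import numpy as np

def ssr(X, y):
    """Hypothetical helper: regression SS for a model with intercept plus columns of X."""
    D = np.column_stack([np.ones(len(y)), X])
    b, *_ = np.linalg.lstsq(D, y, rcond=None)
    return np.sum((D @ b - y.mean()) ** 2)

def extra_ss(x1, x2, y):
    """Hypothetical helper: return (SSR(X1), SSR(X1 | X2))."""
    return ssr(x1, y), ssr(np.column_stack([x1, x2]), y) - ssr(x2, y)

x1 = np.tile([-1.0, -1.0, 1.0, 1.0], 2)
x2 = np.tile([-1.0, 1.0, -1.0, 1.0], 2)       # centered and orthogonal to x1
rng = np.random.default_rng(3)
y = 3 + 2 * x1 - x2 + rng.normal(size=8)

# Uncorrelated predictors: the extra sum of squares does not depend on X2.
ssr_x1, ssr_x1_given_x2 = extra_ss(x1, x2, y)

# Nearly collinear predictors: X1 adds little once x2_corr is in the model.
x2_corr = x1 + 0.1 * rng.normal(size=8)
ssr_x1_c, ssr_x1_given_x2_c = extra_ss(x1, x2_corr, y)
```

In the orthogonal design the two sums of squares agree to rounding error; with `x2_corr` they differ markedly, illustrating why extra sums of squares change depending on which other predictors are included.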

Multicollinearity - Variance Inflation Factors

Problems when predictor variables are correlated among themselves:

- Regression coefficients of predictors change, depending on which other predictors are included
- Extra sums of squares of predictors change, depending on which other predictors are included
- Standard errors of regression coefficients increase when predictors are highly correlated
- Individual regression coefficients may not be significant, even though the overall model is
- Widths of confidence intervals for regression coefficients increase when predictors are highly correlated
- Point estimates of regression coefficients may have the wrong sign (+/-)

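Variance Inflation Factor

Original units for $X_1, \ldots, X_p, Y$:

$$\sigma^2\{\hat{\boldsymbol{\beta}}\} = \sigma^2\,(\mathbf{X}'\mathbf{X})^{-1}$$

Correlation-transformed values:

$$X_{ik}^* = \frac{1}{\sqrt{n - 1}}\left(\frac{X_{ik} - \bar{X}_k}{s_k}\right), \qquad Y_i^* = \frac{1}{\sqrt{n - 1}}\left(\frac{Y_i - \bar{Y}}{s_Y}\right)$$

$$\sigma^2\{\hat{\boldsymbol{\beta}}^*\} = (\sigma^*)^2\,\mathbf{r}_{XX}^{-1}, \qquad \sigma^2\{\hat{\beta}_k^*\} = (\sigma^*)^2\,(VIF)_k$$

where $(VIF)_k$ is the $k$th diagonal element of $\mathbf{r}_{XX}^{-1}$ and

$$(VIF)_k = \frac{1}{1 - R_k^2}$$

with $R_k^2$ the coefficient of determination for the regression of $X_k$ on the other $p - 1$ predictors:

$$R_k^2 = 0 \;\Rightarrow\; (VIF)_k = 1, \qquad 0 < R_k^2 < 1 \;\Rightarrow\; (VIF)_k > 1$$

Multicollinearity is considered problematic with respect to the least squares estimates if

$$(VIF)_{\max} = \max\left((VIF)_1, \ldots, (VIF)_p\right) > 10 \quad \text{or if} \quad \overline{VIF} = \frac{1}{p}\sum_{k=1}^{p} (VIF)_k \text{ is much larger than } 1$$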
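A minimal sketch computing $(VIF)_k$ in both ways shown above: as the diagonal of $\mathbf{r}_{XX}^{-1}$ and as $1/(1 - R_k^2)$ from auxiliary regressions. The helper names `vif_from_correlation` and `vif_from_r2` are hypothetical, and the simulated predictors make `x2` a near copy of `x1` while `x3` is independent.

```python
import numpy as np

def vif_from_correlation(X):
    """Hypothetical helper: (VIF)_k as the diagonal of r_XX^{-1} (X has no ones column)."""
    r_xx = np.corrcoef(X, rowvar=False)
    return np.diag(np.linalg.inv(r_xx))

def vif_from_r2(X):
    """Hypothetical helper: (VIF)_k = 1 / (1 - R_k^2), regressing X_k on the others."""
    n, p = X.shape
    out = np.empty(p)
    for k in range(p):
        target = X[:, k]
        others = np.column_stack([np.ones(n), np.delete(X, k, axis=1)])
        coef, *_ = np.linalg.lstsq(others, target, rcond=None)
        resid = target - others @ coef
        r2 = 1 - resid @ resid / np.sum((target - target.mean()) ** 2)
        out[k] = 1 / (1 - r2)
    return out

rng = np.random.default_rng(4)
n = 50
x1 = rng.normal(size=n)
x2 = x1 + 0.05 * rng.normal(size=n)   # nearly collinear with x1
x3 = rng.normal(size=n)               # unrelated to the others
X = np.column_stack([x1, x2, x3])

vif = vif_from_correlation(X)
vif_max, vif_mean = vif.max(), vif.mean()
```

Here $(VIF)_{\max}$ is far above 10 because of the near duplicate, while the VIF of the independent predictor stays close to 1.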