A Model for Application Slowdown Estimation in On-Chip Networks

Problem of inter-application interference in on-chip networks in multicore processors due to NoC contention causes unfair slowdowns. The goal is to estimate NoC-level slowdowns in runtime and improve system fairness and performance. The approach includes NoC Application Slowdown Model (NAS) and Fairness-Aware Source Throttling (FAST), which significantly enhance system fairness and performance.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. Download presentation by click this link. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

E N D

Presentation Transcript

A Model for Application Slowdown Estimation in On-Chip Networks and Its Use for Improving System Fairness and Performance Xiyue Xiang*, Saugata Ghose , Onur Mutlu , Nian-Feng Tzeng* *University of Louisiana at Lafayette Carnegie Mellon University ETH Z rich ul_logo

Executive Summary Problem: inter-application interference in on-chip networks (NoCs) In a multicore processor, interference can occur due to NoC contention Interference causes applications to slow down unfairly Goal: estimate NoC-level slowdown at runtime, and use slowdown information to improve system fairness and performance Our Approach NoC Application Slowdown Model (NAS): first online model to quantify inter-application interference in NoCs Fairness-Aware Source Throttling (FAST): throttle network injection rate of processor cores based on slowdown estimate from NAS Results NAS is very accurate and scalable: 4.2% error rate on average (8 8 mesh) FASTimproves system fairness by 9.5%, and performance by 5.2% (compared to a baseline without source throttling on a 8 8 mesh) 2

Motivation: Interference in NoCs 3 2.7 Slowdown 2 1.6 1 0 lbm 16 copies of each application run concurrently on a 64-core processor leslie3d mcf GemsFDTD Root cause: ????????????? ???_???????????? =??????? ?????? ???????? = NoC bandwidth is shared Interference slows down applications and increases system unfairness 3

NAS: NoC Application Slowdown Model talone: unknown at runtime ?? ???? ?? ???? ?????? tshared: measured directly ???????? =?? ???? = ?????? Online estimation of ??????: application stall time due to interference Challenges: Node D Node S request Flit-level delay slowdown response Each request involves multiple packets 4

NAS: NoC Application Slowdown Model talone: unknown at runtime ?? ???? ?? ???? ?????? tshared: measured directly ???????? =?? ???? = ?????? Online estimation of ??????: application stall time due to interference Challenges: Node D Node S Flit-level delay slowdown Random and distributive Overlapped delay A packet is formed by multiple flits Basic idea: track delay and calculate tstall 4

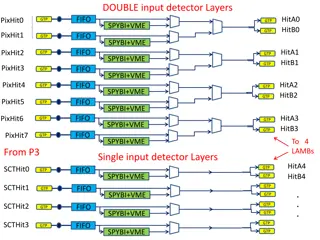

Flit-Level Interference 12 14 15 13 Threeinterference events 9 10 11 8 Injection 5 6 7 4 Virtual channel arbitration 1 0 2 3 Switch arbitration MSHRs Core Each flit carries an additional field tflit Shared LLC Slice L1 If arbitration loses, tflit = tflit + 1 Router Node Sum up arbitration delays due to interference 5

Packet-Level Interference 1 2 3 4 5 treassembly = M cycles (M=5) Alone run: f1 f2 f3 f4 f5 Packet s flits arrive consecutively when there is no interference 1 2 3 4 5 6 7 8 9 10 11 Shared run: f3 f5 f1 f2 f4 ??????_???? =2 M-cycle reassembly ??????????? Tfirst_arrival =3 ???????= 2 + 11 3 5 = 5 ?????? Tlast_arrival=11 treassembly= ?????_??????? ??????_??????? ? Track increase in packet reassembly time ???????= ??????_????+ ??????????? 6

Request-Level Interference Request packet delayed by 5 cycles due to inter-application interference Node D Node S 0 1 Leverage closed-loop packet behavior to accumulate tpacket Inheritance Table: lump sum of tpacket for associated packets 7

Request-Level Interference Request packet delayed by 5 cycles due to inter-application interference Node D Node S 0 1 2Register request packet info in inheritance table ( tpacket = 5) 5 LLC Slice NI Inheritance Table reqID mshrID tpacket ... 3Cache access ... ... 4Generate response packet, inheriting tpacket from table 5 Leverage closed-loop packet behavior to accumulate tpacket Inheritance Table: lump sum of tpacket for associated packets 7

Request-Level Interference Request packet delayed by 5 cycles due to inter-application interference Node D Node S 1 2Register request packet info in inheritance table ( tpacket = 5) 5 LLC Slice NI Inheritance Table reqID mshrID tpacket ... 3Cache access access 3Cache ... ... 5Response packet delayed by 3 cycles due to inter-application interference 4Generate response packet, inheriting tpacket from table 5 Leverage closed-loop packet behavior to accumulate tpacket Inheritance Table: lump sum of tpacket for associated packets 7

Request-Level Interference Request packet delayed by 5 cycles due to inter-application interference Node D Node S 1 2Register request packet info in inheritance table ( tpacket = 5) 5 LLC Slice NI Inheritance Table reqID mshrID tpacket ... 3Cache 3Cache access access Final value of tpacket is 8 cycles ... ... 5Response packet delayed by 3 cycles due to inter-application interference 8 4Generate response packet, inheriting tpacket from table Leverage closed-loop packet behavior to accumulate tpacket Inheritance Table: lump sum of tpacket for associated packets Sum up delays of all associated packets ????????= ????????_??????+ ?????????_?????? 7

Application Stall Time ILP, MLP App. stalls Latency is hidden ignored Latency of critical request ??????_???_??????? Tcritical Tservice A memory request becomes critical if 1) It is the oldest instruction at ROB and ROB is full, and/or 2) It is the oldest instruction at LSQ and LSQ is full when the next is a memory instruction For all critical requests ??????_???_???????= ???(???????? ?????????,????????) Count only request delays on critical path of execution time ??????= ??????_???_???????,? ? 8

Using NAS to Improve Fairness NAS provides online estimation of slowdown Sum up flit-level arbitration delays due to interference Track increase in packet reassembly time Sum up delays of all associated packets Determine which request delays causes application stall Goal Use NAS to improve system fairness and performance FAST: Fairness-Aware Source Throttling 9

A New Metric: NoC Stall-Time Criticality Slowdown Network Intensity Interference in NoCs 3.0 120 Network Intensity (MPKI) 2.5 100 has uneven impact Slowdown 2.0 80 1.5 60 1.0 1.0 40 NoC Stall-Time Criticality 0.5 20 ??????=???????? 0.0 0 lbm leslie3d mcf GemsFDTD ?? ???? Lower STCnoc <==> Less sensitive to NoC-level interference Good candidate to be throttled down FAST utilizes STCnoc to proactively estimate the expected impact of each L1 miss 10

Key Knobs of FAST Rank based on slowdown Classification based on network intensity Latency-sensitive: spends more time in the core Throughput-sensitive: network intensive Throttle Up Latency-sensitive applications: improve system performance Slower applications: optimize system fairness Throttle Down Throughput sensitive application with lower STCnoc: reduce interference with lower negative impact on performance Avoid throttling down the slowest application 11

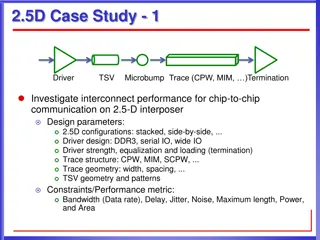

Methodology Processor Out-of-order, ROB / instruction window = 128 Caches L1: 64KB, 16 MSHRs L2: perfect shared NoCs Topology: 4 4 and 8 8 mesh Router: conventional VC router with 8 VCs, 4 flits/VC Workloads: multiprogrammed SPEC CPU2006 90 randomly-chosen workloads Categorized by network intensity (i.e., MPKI) 12

NAS is Accurate Network saturation 31.7% 4.2% 2.6% 15% 4x4 8x8 10% Estimation Slowdown Error 5% 0% Slowdown estimation error: 4.2% (2.6%) for 8 8 (4 4) Low estimated slowdown error consistently NAS is highly accurate and scalable Good scalability 13

FAST Improves Performance 1.10 1.10 +5.0% +5.2% 1.05 1.05 Weighted Speedup Weighted Speedup Normalized Normalized 1.00 1.00 0.95 0.95 0.90 0.90 NoST HAT FAST NoST HAT FAST NoST HAT FAST NoST HAT FAST 4 4 8 8 4 4 8 8 (b) Heavy workloads (a) Mixed workloads FAST has better performance than both HAT and NoST Inter-application interference is reduced Only throttles applications with low negative impact (i.e., lower STCnoc) 14

FAST Reduces Unfairness 1.10 1.10 1.05 1.05 1.00 1.00 Normalized Unfairness - 4.7% Normalized Unfairness 0.95 0.95 -9.5% 0.90 0.90 0.85 0.85 NoST HAT FAST NoST HAT FAST NoST HAT FAST NoST HAT FAST 4 4 8 8 4 4 8 8 (a) Mixed workloads (b) Heavy workloads FAST can improve fairness Source throttling allows slower applications to catch up Uses runtime slowdown to identify and avoid throttling the slowest application 15

Conclusion Problem: inter-application interference in on-chip networks (NoCs) In a multicore processor, interference can occur due to NoC contention Interference causes applications to slow down unfairly Goal: estimate NoC-level slowdown at runtime, and use slowdown information to improve system fairness and performance Our Approach NoC Application Slowdown Model (NAS): first online model to quantify inter-application interference in NoCs Fairness-Aware Source Throttling (FAST): throttle network injection rate of processor cores based on slowdown estimate from NAS Results NAS is very accurate and scalable: 4.2% error rate on average (8 8 mesh) FASTimproves system fairness by 9.5%, and performance by 5.2% (compared to a baseline without source throttling on a 8 8 mesh) 16

A Model for Application Slowdown Estimation in On-Chip Networks and Its Use for Improving System Fairness and Performance Xiyue Xiang*, Saugata Ghose , Onur Mutlu , Nian-Feng Tzeng* *University of Louisiana at Lafayette Carnegie Mellon University ETH Z rich ul_logo

Backup Slides Xiyue Xiang*, Saugata Ghose , Onur Mutlu , Nian-Feng Tzeng* *University of Louisiana at Lafayette Carnegie Mellon University ETH Z rich ul_logo

Related Works Slowdown modeling Fine grained: [Mutlu+ MICRO 07], [Ebrahimi+ ASPLOS 10], [Bois+ TACO 13] Coarse grained: [Subramanian+ HPCA 13], [Subramanian MICRO 15] Source throttling [Chang+ SBAC-PAD 12], [Nychis+ SIGCOMM 12], [Nychis+ HotNet 10] Application mapping [Chou+ ICCD 08], [Das+ HPCA 13] Prioritization [Das+ MICRO 09], [Das ISCA 10] Scheduling [Kim+ MICRO 10] QoS [Grot+ MICRO 09], [Grot+ ISCA 11], [Lee+ ISCA 08] 19

Hardware Cost of NAS Location Components Costs Router Interference delay of each flit 5.3% wider data path Timestamp of the first and last arrival flit of a packet Inheritance table (16+16) 16 bits NI (6+4+8) 20 bits Interference delay of the request 8 bits Core Timestamp when processor stalls 16 bits Estimated application stall time 16 bits Total cost of NAS per node 114 Bytes + 5.3% router area 20

NAS Error Distribution Plot 7,200 application instances 50% 66.0% of application instances with < 10% error 40% 84.3% of application instances with < 20% error Application Instances 30% Fraction of 5.6% of application instances with 40% error 20% 10% 0% 0% 10% 20% 30% 40% 50% 60% 70% 80% 90% 100% Slowdown Estimation Error (Binned) Plot 7,200 application instance NAS exhibits high accuracy most of the time 21