Universal Two-Qubit Computational Register for Trapped Ion Quantum Processors

Covers a universal two-qubit computational register for trapped-ion quantum processors, including state preparation, gates, and benchmarking, and discusses the experimental setup and results.

0 views • 14 slides

Towards Single-Event Upset Detection in Hardware Secure RISC-V Processors

This research focuses on detecting single-event upsets (SEUs) in hardware-secure RISC-V processors in radiation environments, such as high-energy physics and space applications. Motivated by the potential data errors, unpredictable behavior, or crashes caused by SEUs, the study explores fault inject

4 views • 17 slides

Understanding Superscalar Processors in Processor Design

Explore the concept of superscalar processors in processor design, including the ability to execute instructions independently and concurrently. Learn about the difference between superscalar and superpipelined approaches, instruction-level parallelism, and the limitations and design issues involved

0 views • 55 slides

Understanding Computer Architecture: A Comprehensive Overview by Prof. Dr. Nizamettin AYDIN

Explore the realm of computer architecture through the expertise of Prof. Dr. Nizamettin AYDIN, covering topics like RISC characteristics, major advances in computers, comparison of processors, and the driving force for CISC. Delve into the evolution of processors, register optimization, and the tra

0 views • 42 slides

Understanding Multicore Processors: Hardware and Software Perspectives

This chapter delves into the realm of multicore processors, shedding light on both hardware and software performance issues associated with these advanced computing systems. Readers will gain insights into the evolving landscape of multicore organization, spanning embedded systems to mainframes. The

1 view • 36 slides

Introduction to Intel Assembly Language for x86 Processors

Intel Assembly Language is a low-level programming language designed for Intel 8086 processors and their successors. It features a CISC instruction set, special purpose registers, memory-register operations, and various addressing modes. The language employs mnemonics to represent instructions, with

2 views • 12 slides

Understanding Shared Memory Systems in Computer Architecture

Shared memory systems in computer architecture allow all processors to have direct access to common physical memory, enabling efficient data sharing and communication among processors. These systems consist of a global address space accessible by all processors, facilitating parallel processing but

1 view • 19 slides

Understanding Computer System Architectures

Computer systems can be categorized into single-processor and multiprocessor systems. Single-processor systems have one main CPU but may also contain special-purpose processors. Multiprocessor systems have multiple processors that share resources, offering advantages like increased throughput, econo

2 views • 25 slides

Understanding System Management Mode (SMM) in x86 Processors

System Management Mode (SMM) is a highly privileged mode in x86 processors that provides an isolated environment for critical system operations like power management and hardware control. When the processor enters SMM, it suspends all other tasks and runs proprietary OEM code. Protecting SMM is cruc

1 view • 26 slides

PowerPC Architecture Overview and Evolution

PowerPC is a RISC instruction set architecture developed by IBM in collaboration with Apple and Motorola in the early 1990s. It is based on IBM's POWER architecture, offering both 32-bit and 64-bit processors popular in embedded systems. The architecture emphasizes a reduced set of pipelined instruc

2 views • 13 slides

Contrasting RISC and CISC Architectures

Contrasting RISC (Reduced Instruction Set Computing) and CISC (Complex Instruction Set Computing) architectures, the images and descriptions elaborate on their advantages and disadvantages, with a focus on multiplying two numbers in memory using a CISC approach. CISC processors aim to complete tasks

0 views • 35 slides

Trends in Computer Organization and Architecture

This content delves into various aspects of computer organization and architecture, covering topics such as multicore computers, alternative chip organization, Intel hardware trends, processor trends, power consumption projections, and performance effects of multiple cores. It also discusses the sca

5 views • 28 slides

Understanding ARM RISC Design Philosophy and Its Impact

Delve into the world of ARM processors, exploring the RISC design philosophy that underpins their efficiency and widespread application. Learn about key principles, compare RISC with CISC, and discover how ARM's simplicity, orthogonality, and efficient architecture contribute to its dominance in mob

0 views • 12 slides

Design and Implementation of Shifters in ALU for Single-Cycle Processors

The detailed discussion covers the construction of a multifunction Arithmetic Logic Unit (ALU) for computer processors, specifically focusing on the design and implementation of shifters. Shift operations such as SLL, SRL, SRA, and ROR are explained, with insights into shifting processes and data ex

0 views • 5 slides

Efficient Dynamic Memory Management for Embedded Multicore Systems

This content delves into the challenges of dynamic memory management in embedded multicore systems, emphasizing the importance of transaction-friendly approaches. It covers parallel data structures, the role of operating systems/libraries, and principles of memory allocation. Through illustrations a

0 views • 24 slides

k-Ary Search on Modern Processors

The presentation discusses the importance of searching operations in computer science, focusing on different types of searches such as point queries, nearest-neighbor key queries, and range queries. It explores search algorithms including linear search, hash-based search, tree-based search, and sort

0 views • 18 slides

Enhancing I/O Performance on SMT Processors in Cloud Environments

Improving I/O performance and efficiency on Simultaneous Multi-Threading (SMT) processors in virtualized clouds is crucial for maximizing system throughput and resource utilization. The vSMT-IO approach focuses on efficiently scheduling I/O workloads on SMT CPUs by making them "dormant" on hardware

0 views • 31 slides

Fast Multicore Key-Value Storage Study

Explore Cache Craftiness for Fast Multicore Key-Value Storage in a comprehensive study on building a high-performance KV store system. Learn about the feature wishlist, challenges with hard workloads, initial attempts with binary trees, and advancements with Masstree. Discover the contributions and

0 views • 38 slides

Dynamic Load Balancing on Graphics Processors: A Detailed Study

In this comprehensive study by Daniel Cederman and Philippas Tsigas from Chalmers University of Technology, the focus is on dynamic load balancing on graphics processors. The research delves into the motivation, methods, experimental evaluations, and conclusions related to this critical area. It cov

0 views • 57 slides

Scaling Multi-Core Network Processors Without the Reordering Bottleneck

This study discusses the challenges in packet ordering within parallel network processors and proposes solutions to reduce reordering delay. Various approaches such as static mapping, single SN approach, and per-flow sequencing are explored to optimize processing efficiency in multi-core NP architec

0 views • 22 slides

Understanding Exceptions in Modern High-Performance Processors

Overview of exceptions in pipeline processors, including conditions halting normal operation, handling techniques, and example scenarios triggering exception detection during fetch and memory stages. Emphasis on maintaining exception ordering and performance analysis in out-of-order execution proc

0 views • 28 slides

Understanding Cache Memory in Computer Systems

Explore the intricate world of cache memory in computer systems through detailed explanations of how it functions, its types, and its role in enhancing system performance. Delve into the nuances of associative memory, valid and dirty bits, as well as fully associative examples to grasp the complexit

0 views • 15 slides

A Model for Application Slowdown Estimation in On-Chip Networks

Inter-application interference in the on-chip networks (NoCs) of multicore processors, caused by NoC contention, leads to unfair slowdowns. The goal is to estimate NoC-level slowdowns at runtime and improve system fairness and performance. The approach includes the NoC Application Slowdown Model (NAS) and Fair

0 views • 25 slides

Understanding Interrupt Processing Sequence in X86 Processors

x86 processors support 256 software interrupts, which function similarly to a CALL instruction. When an INT n instruction is executed, the processor follows a sequence involving pushing the flag register, clearing flags, finding the correct ISR address, and transferring CPU control. Special interrupts lik

0 views • 10 slides

Understanding Shared Memory, Distributed Memory, and Hybrid Distributed-Shared Memory

Shared memory systems allow multiple processors to access the same memory resources, with changes made by one processor visible to all others. This concept is categorized into Uniform Memory Access (UMA) and Non-Uniform Memory Access (NUMA) architectures. UMA provides equal access times to memory, w

0 views • 22 slides

Should Ghana Provide Discounts on Cocoa Beans for Local Processors? A Case Study

Ghana's cocoa sector plays a significant role in the country's economy, yet less than 25% of cocoa beans are processed locally, limiting its market share. This case study explores the impact of local processing on Ghana's cocoa industry and discusses the dilemma of value addition. The question of wh

0 views • 14 slides

Understanding Shared Memory Coherence, Synchronization, and Consistency in Embedded Computer Architecture

This content delves into the complexities of shared memory architecture in embedded computer systems, addressing key issues such as coherence, synchronization, and memory consistency. It explains how cache coherence ensures the most recent data is accessed by all processors, and discusses methods li

0 views • 47 slides

Understanding Multi-Processing in Computer Architecture

Beginning in the mid-2000s, a shift towards multi-processing emerged due to limitations in uniprocessor performance gains. This led to the development of multiprocessors like multicore systems, enabling enhanced performance through parallel processing. The taxonomy of Flynn categories, including SIS

0 views • 46 slides

Constructive Computer Architecture: Multistage Pipelined Processors

Explore the concepts of multistage pipelined processors and modular refinement in computer architecture as discussed by Arvind and his team at the Computer Science & Artificial Intelligence Lab, Massachusetts Institute of Technology. The content delves into the design and implementation of a 3-stage

0 views • 28 slides

Data Hazards in Pipelined Processors: Understanding and Mitigation

Explore the concept of data hazards in pipelined processors, focusing on read-after-write (RAW) hazards and their impact on pipeline performance. Learn strategies to mitigate data hazards, such as using a scoreboard to track instructions and stall the Fetch stage when necessary. Discover how adjusti

0 views • 23 slides

Understanding Instruction Flow Techniques in High-IPC Processors

Explore the intricate processes involved in optimizing instruction flow within high-IPC processors, tackling challenges such as control dependences, branch speculation, and branch direction prediction. Learn about the goals, impediments, branch types, and implementations that shape the efficient exe

0 views • 80 slides

Supercomputing in Plain English: Multicore Madness Workshop Details

Prepare for the "Supercomputing in Plain English: Multicore Madness" workshop with important instructions such as muting yourself, downloading slides in advance, and accessing the session via Zoom or YouTube. The session, led by Henry Neeman from the University of Oklahoma, covers supercomputing top

0 views • 97 slides

Overview of Nested Data Parallelism in Haskell

The paper by Simon Peyton Jones, Manuel Chakravarty, Gabriele Keller, and Roman Leshchinskiy explores nested data parallelism in Haskell, focusing on harnessing multicore processors. It discusses the challenges of parallel programming, comparing sequential and parallel computational fabrics. The evo

0 views • 55 slides

Study of Garbage Collector Scalability on Multicores

This study delves into the scalability challenges faced by garbage collectors on multicore hardware. It highlights how the performance of garbage collection does not scale effectively with the increasing number of cores, leading to bottlenecks in applications. The shift from centralized to distribut

0 views • 23 slides

Leveraging Graphics Processors for Accelerating Sonar Imaging via Backpropagation

Utilizing graphics processors to enhance synthetic aperture sonar imaging through backpropagation is a key focus in high-performance embedded computing workshops. The backpropagation process involves transmitting sonar pulses, capturing returns, and reconstructing images based on recorded samples. T

0 views • 18 slides

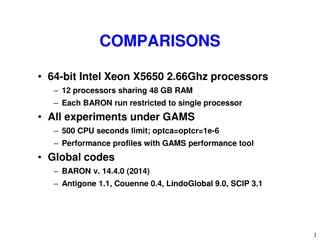

Performance Comparison of Optimization Solvers on Intel Xeon X5650 Processors

Experimental results comparing the performance of optimization solvers (BARON, Antigone, LindoGlobal, SCIP, Couenne) on Intel Xeon X5650 2.66 GHz processors with 48 GB RAM. The study includes 369 NLPs from various libraries and an aggregate analysis of 1740 NLPs and MINLPs. Performance profiles generate

0 views • 6 slides

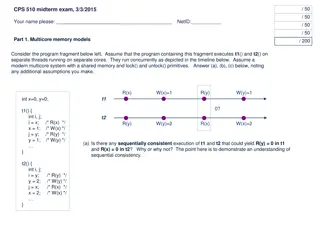

Multicore Memory Models and CPU Protection in Operating Systems

This content covers topics related to multicore memory models, synchronization, CPU protection levels in Dune-enabled Linux systems, and concurrency control in multithreaded programs. The material includes scenarios, questions, and diagrams to test understanding of these concepts in the context of t

0 views • 10 slides

Software Design Considerations for Multicore CPUs

Discusses performance issues with modern multicore CPUs, focusing on higher-end chips and boards, and explores the concepts of cores, chips, and boards in the context of multicore CPUs and their memory architectures.

0 views • 31 slides

Understanding KeyStone Multicore Navigator for Efficient Data Transport

This lesson provides insights into the KeyStone Multicore Navigator, explaining its advantages, architecture, functional components like descriptors and queues, and how to configure it for optimal performance. It covers the motivation behind its design, basic elements such as descriptors and queues,

0 views • 55 slides

Cooperative Cache Scrubbing for Efficient Memory Management in Multicore Systems

Cooperative Cache Scrubbing optimizes memory management in multicore systems by efficiently handling short-lived application objects and reducing unnecessary data writes to memory. By communicating semantic information to hardware caches, dead lines are scrubbed, dirty bits unset, and unnecessary fe

0 views • 40 slides