Statistical Text Analysis Techniques Overview

The content covers key concepts in statistical text analysis, including maximum likelihood estimation, N-gram language model smoothing, and perplexity calculation. It then delves into Latent Semantic Analysis and the practical application of vector space models, highlighting considerations like synonymy and polysemy. The discussion extends to building concept spaces for text mining through methods like automatic term expansion and word sense disambiguation.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. Download presentation by click this link. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

E N D

Presentation Transcript

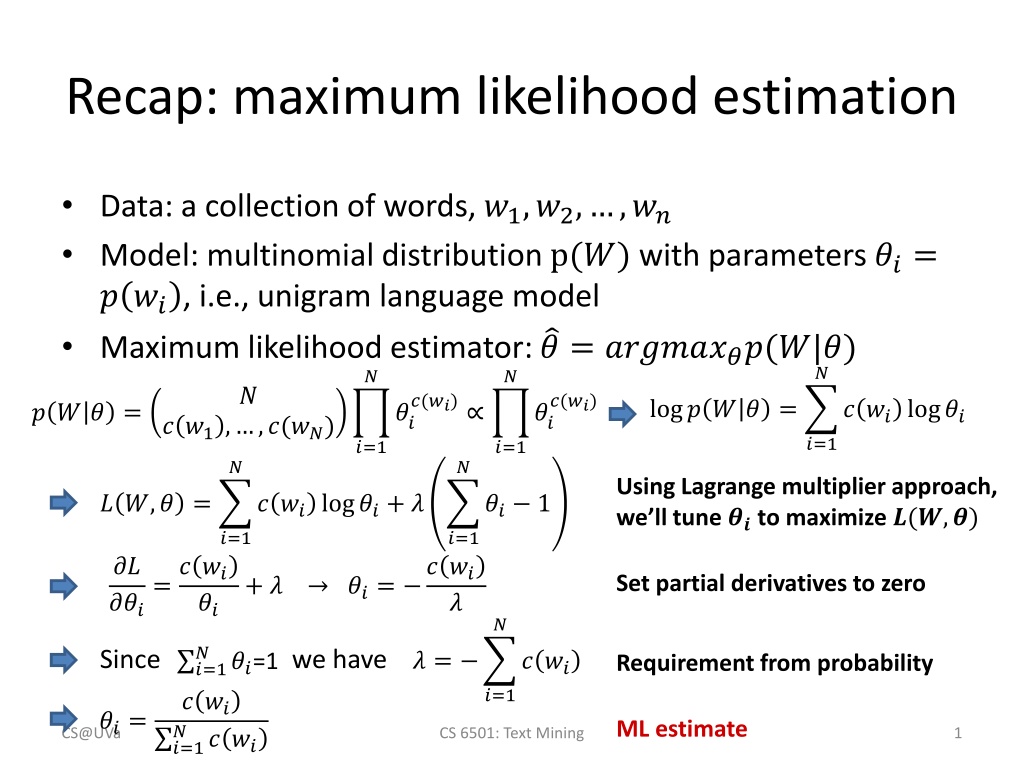

Recap: maximum likelihood estimation Data: a collection of words, ?1,?2, ,?? Model: multinomial distribution p(?) with parameters ??= ? ??, i.e., unigram language model Maximum likelihood estimator: ? = ????????(?|?) ? ?(??) ? ? ? ?(??) log? ? ? = ? ?? log?? ? ? ? = ? ?1, ,?(??) ?? ?? ?=1 ?=1 ?=1 ? ? Using Lagrange multiplier approach, we ll tune ?? to maximize ?(?,?) ? ?,? = ? ?? log??+ ? ?? 1 ?=1 ?=1 ?? ??? =? ?? + ? ??= ? ?? Set partial derivatives to zero ?? ? ? ? Since we have ?=1 ? ?? ?=1 ? = ? ?? ??=1 Requirement from probability ?=1 ??= ML estimate ? CS@UVa CS 6501: Text Mining 1 ? ??

Recap: illustration of N-gram language model smoothing ? ???? 1, ,?? ?+1 Max. Likelihood Estimate ? ???? 1, ,?? ?+1 =?(??,?? 1, ,?? ?+1) ?(?? 1, ,?? ?+1) Smoothed LM w Assigning nonzero probabilities to the unseen words Discount from the seen words CS@UVa CS 6501: Text Mining 2

Recap: perplexity The inverse of the likelihood of the test set as assigned by the language model, normalized by the number of words 1 ? ?? ?1, ,?? = ? ?=1 ?(??|?? 1, ,?? ?+1) N-gram language model CS@UVa CS 6501: Text Mining 3

Latent Semantic Analysis Hongning Wang CS@UVa

VS model in practice Document and query are represented by term vectors Terms are not necessarily orthogonal to each other Synonymy: car v.s. automobile Polysemy: fly (action v.s. insect) CS@UVa CS6501: Text Mining 5

Choosing basis for VS model A concept space is preferred Semantic gap will be bridged Finance D2 D4 D3 Sports Query D1 D5 Education CS@UVa CS6501: Text Mining 6

How to build such a space Automatic term expansion Construction of thesaurus WordNet Clustering of words Word sense disambiguation Dictionary-based Relation between a pair of words should be similar as in text and dictionary s description Explore word usage context CS@UVa CS6501: Text Mining 7

How to build such a space Latent Semantic Analysis Assumption: there is some underlying latent semantic structure in the data that is partially obscured by the randomness of word choice with respect to text generation It means: the observed term-document association data is contaminated by random noise CS@UVa CS6501: Text Mining 8

How to build such a space Solution Low rank matrix approximation Imagine this is *true* concept-document matrix Imagine this is our observed term-document matrix Random noise over the word selection in each document CS@UVa CS6501: Text Mining 9

Latent Semantic Analysis (LSA) Low rank approximation of term-document matrix ?? ? Goal: remove noise in the observed term- document association data Solution: find a matrix with rank ? which is closest to the original matrix in terms of Frobenius norm ? = argmin ?|???? ? =? ? ?? 2 ? ?=1 ? ?=1 = argmin ?|???? ? =? ??? ??? CS@UVa CS6501: Text Mining 10

Basic concepts in linear algebra Symmetric matrix ? = ?? Rank of a matrix The number of linearly independent rows (columns) in a matrix ?? ? ???? ?? ? min(?,?) CS@UVa CS6501: Text Mining 11

Basic concepts in linear algebra Eigen system For a square matrix ?? ? If ?? = ??, ? is called the right eigenvector of ? and ? is the corresponding eigenvalue For a symmetric full-rank matrix ?? ? We have its eigen-decomposition as ? = ? ?? where the columns of ? are the orthogonal and normalized eigenvectors of ? and is a diagonal matrix whose entries are the eigenvalues of ? CS@UVa CS6501: Text Mining 12

Basic concepts in linear algebra Singular value decomposition (SVD) For matrix ?? ? with rank ?, we have ? = ? ?? where ?? ? and ?? ? are orthogonal matrices, and is a ? ? diagonal matrix, with ??= are the eigenvalues of ??? We define ?? ? = ?? ? ? ??? ? where we place ?? in a descending order and set ??= ?? for ? ?, and ??= 0 for ? > ? ?? and ?1 ?? ? ? CS@UVa CS6501: Text Mining 13

Latent Semantic Analysis (LSA) Solve LSA by SVD Map to a lower dimensional space ? = argmin ?|???? ? =? ? ?? 2 ? ?=1 ? ?=1 = argmin ?|???? ? =? ? ??? ??? = ?? ? Procedure of LSA 1. Perform SVD on document-term adjacency matrix 2. Construct ?? ? by only keeping the largest ? singular values in non-zero ? CS@UVa CS6501: Text Mining 14

Latent Semantic Analysis (LSA) Another interpretation ?? ? is the document-term adjacency matrix ?? ?= ?? ? ?? ? ???: document-document similarity by counting how many terms co-occur in ?? and ?? ? = ? ?? ? ?? ?= ? 2?? Eigen-decomposition of document-document similarity matrix ?? In the lower dimensional space, we will only use the first ? elements in ? ? to represent ?? The same analysis applies to ?? ?= ?? ? ? s new representation is then ? ? in this system(space) ? ?? ? CS@UVa CS6501: Text Mining 15

Geometric interpretation of LSA ? ?? ? ?? and ??in the ?-dimensional space Therefore As ?? ? = ?? ? ? ??? ? (i,j) measures the relatedness between ? ? 1 2 ?? is represented as ?? ? ? ? ? 1 2 ?? is represented as ?? ? ? ? ? CS@UVa CS6501: Text Mining 16

Latent Semantic Analysis (LSA) HCI Visualization Graph theory CS@UVa CS6501: Text Mining 17

What are those dimensions in LSA Principle component analysis CS@UVa CS6501: Text Mining 18

Latent Semantic Analysis (LSA) What we have achieved via LSA Terms/documents that are closely associated are placed near one another in this new space Terms that do not occur in a document may still close to it, if that is consistent with the major patterns of association in the data A good choice of concept space for VS model! CS@UVa CS6501: Text Mining 19

LSA for retrieval Project queries into the new document space ? = ??? ? ? ? Treat query as a pseudo document of term vector Cosine similarity between query and documents in this lower-dimensional space 1 CS@UVa CS6501: Text Mining 20

LSA for retrieval HCI Graph theory q: human computer interaction CS@UVa CS6501: Text Mining 21

Discussions Computationally expensive Time complexity ?(??2) Optimal choice of ? Difficult to handle dynamic corpus Difficult to interpret the decomposition results We will come back to this later! CS@UVa CS6501: Text Mining 22

LSA beyond text Collaborative filtering CS@UVa CS6501: Text Mining 23

LSA beyond text Eigen face CS@UVa CS6501: Text Mining 24

LSA beyond text Cat from deep neuron network One of the neurons in the artificial neural network, trained from still frames from unlabeled YouTube videos, learned to detect cats. CS@UVa CS6501: Text Mining 25

What you should know Assumptions in LSA Interpretation of LSA Low rank matrix approximation Eigen-decomposition of co-occurrence matrix for documents and terms CS@UVa CS6501: Text Mining 26

Todays reading Introduction to information retrieval Chapter 13: Matrix decompositions and latent semantic indexing Deerwester, Scott C., et al. "Indexing by latent semantic analysis." JAsIs 41.6 (1990): 391-407. CS@UVa CS6501: Text Mining 27

Happy Lunar New Year! CS@UVa CS6501: Text Mining 28