Reliability and Failure Analysis in Engineering Systems

Introduction to failure data analysis, goodness of fit testing, stress-strength modeling, and reliability evaluation in engineering systems. Learn about failure rate estimation, MTBF/MTTF calculations, and practical examples illustrating reliability metrics in industrial applications.


Chapter Four: Failure Data and Goodness of Fit Analysis

Topics: Introduction; Failure Trend Analysis; Goodness of fit test (Kolmogorov-Smirnov test); Introduction of stress-strength modeling; Homogeneous Markov models; Reliability evaluation of cold, warm, and failure interactions and Markov analysis.

Introduction

The failure rate h(t) is a measure of proneness to failure as a function of age, t. The purpose of quantitative reliability measurement is to define the rate of failure relative to time and to model that failure rate with a mathematical distribution, so that the quantitative aspects of failure can be understood. The most basic building block is the failure rate, which is estimated using the following equation:

$$\lambda = \frac{r}{T}$$

where:
λ = failure rate (sometimes referred to as the hazard rate)
T = total running time/cycles/miles/etc. during an investigation period, for both failed and non-failed items
r = total number of failures occurring during the investigation period

For example, if five electric motors operate for a collective total time of 50 years with five functional failures during the period, the failure rate is 0.1 failures per year.

MTBF/MTTF

The only difference between MTBF (mean time between failures) and MTTF (mean time to failure) is that MTBF is used for items that are repaired when they fail, while MTTF is used for items that are simply thrown away and replaced. The computations are the same: the basic estimate of MTBF or MTTF is the reciprocal of the failure rate,

$$\text{MTBF (or MTTF)} = \frac{1}{\lambda} = \frac{T}{r}$$

The MTBF for our industrial electric motor example is 10 years, which is the reciprocal of the failure rate for the motors. Incidentally, we would estimate MTBF for electric motors that are rebuilt upon failure. For smaller motors that are considered disposable, we would state the measure of central tendency as MTTF.
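The two formulas above, λ = r/T and MTBF (or MTTF) = 1/λ = T/r, can be checked against the electric-motor example with a minimal Python sketch. The function names are hypothetical, chosen only for illustration.

```python
def failure_rate(total_time: float, failures: int) -> float:
    """Point estimate of the failure rate: lambda = r / T."""
    if total_time <= 0:
        raise ValueError("total_time must be positive")
    return failures / total_time


def mean_time_between_failures(total_time: float, failures: int) -> float:
    """MTBF (repairable items) or MTTF (non-repairable items): T / r = 1 / lambda."""
    if failures <= 0:
        raise ValueError("at least one failure is needed to estimate MTBF/MTTF")
    return total_time / failures


# Electric-motor example: 50 motor-years of operation with 5 functional failures.
lam = failure_rate(50.0, 5)                  # failures per year
mtbf = mean_time_between_failures(50.0, 5)   # years
print(f"failure rate = {lam:.2f} per year, MTBF = {mtbf:.1f} years")
```

This prints `failure rate = 0.10 per year, MTBF = 10.0 years`. T includes the running time of items that did not fail, so the same functions apply to partially failed fleets.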

Example

Five oil pumps were tested, failing at 45, 33, 62, 94 and 105 hours. What are the MTTF and the failure rate? Because the pumps are treated as non-repairable items, we use MTTF.

MTTF = (45 + 33 + 62 + 94 + 105) / 5 = 339 / 5 = 67.8 hours
Failure rate = 5 / (45 + 33 + 62 + 94 + 105) = 5 / 339 ≈ 0.0147 failures per hour
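The oil-pump arithmetic can be reproduced with a few lines of Python. This is a sketch that assumes complete data (every pump ran until it failed), so the total running time is simply the sum of the failure times.

```python
# Failure times (hours) of the five oil pumps, treated as non-repairable items.
failure_hours = [45, 33, 62, 94, 105]

total_hours = sum(failure_hours)   # T: total pump-hours accumulated before failure
n_failures = len(failure_hours)    # r: every pump failed, so r = 5

mttf = total_hours / n_failures    # mean time to failure, T / r
rate = n_failures / total_hours    # failures per pump-hour, r / T

print(f"MTTF = {mttf:.1f} h, failure rate = {rate:.4f} per hour")
```

This prints `MTTF = 67.8 h, failure rate = 0.0147 per hour`. If some pumps were still running at the end of the test, their hours would be added to T but not counted in r.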

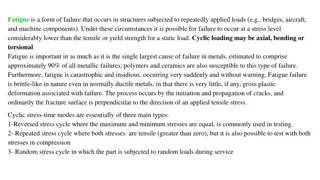

Failure Trend Analysis

The failure rate is a basic component of many more complex reliability calculations. It is expected to vary over the life of a product; a good example is the bathtub curve.

A-B: Early failure (infant mortality, debugging, break-in, teething problems). Caused by design or material flaws, e.g. joints, welds, contamination, misuse, misassembly.

B-C: Constant failure (useful life). The failure rate is lower than the initial rate and more or less constant until end of life.

C-D: End-of-life failure (wear-out phase). The failure rate rises again as components reach the end of their life, e.g. corrosion, cracking, wear, friction, fatigue, erosion, lack of preventive maintenance (PM).

However, depending on the mechanical/electrical design, operating context, environment and/or maintenance effectiveness, a machine's failure rate as a function of time may decline, remain constant, increase linearly or increase geometrically (Figure).

The failure rates of various components can be estimated from historical site records. For example, suppose 5 hydraulic pumps were in standby mode, each accumulated 2000 hours in standby, and 3 of them failed during that time. The failure rate in standby mode is:

Total standby hours = 5 × 2000 hours = 10,000 hours
Failure rate in standby mode = 3 / 10,000 = 0.0003 failures per hour

Relationship Between h(t), f(t), F(t) and R(t)

h(t) = failure rate; f(t) = probability density function (pdf); F(t) = cumulative distribution function (cdf); R(t) = reliability function.

$$h(t) = \frac{f(t)}{R(t)} = \frac{f(t)}{1 - F(t)}, \qquad R(t) = 1 - F(t)$$
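A small numerical sketch ties these functions together. It uses the standard result R(t) = exp(-∫₀ᵗ h(u) du), which follows from h(t) = f(t)/R(t) with f(t) = -dR(t)/dt, and approximates the integral with the trapezoidal rule. The two hazard functions (a constant 0.1 per year and a linearly increasing 0.02·t per year) are hypothetical examples of the "constant" and "increase linearly" shapes mentioned above, chosen because their reliability has a closed form to compare against.

```python
import math
from typing import Callable

Hazard = Callable[[float], float]


def reliability(h: Hazard, t: float, steps: int = 10_000) -> float:
    """R(t) = exp(-integral of h from 0 to t), integral by the trapezoidal rule."""
    if t <= 0:
        return 1.0
    dt = t / steps
    area = 0.5 * (h(0.0) + h(t)) + sum(h(k * dt) for k in range(1, steps))
    return math.exp(-area * dt)


def unreliability(h: Hazard, t: float) -> float:
    """F(t) = 1 - R(t)."""
    return 1.0 - reliability(h, t)


def density(h: Hazard, t: float) -> float:
    """f(t) = h(t) * R(t), i.e. h(t) = f(t) / R(t) rearranged."""
    return h(t) * reliability(h, t)


def constant_hazard(t: float) -> float:
    """Useful-life region (hypothetical): h(t) = 0.1 per year, so R(t) = exp(-0.1 t)."""
    return 0.1


def linear_hazard(t: float) -> float:
    """Wear-out region (hypothetical): h(t) = 0.02 t per year, so R(t) = exp(-0.01 t^2)."""
    return 0.02 * t


for name, h, exact in [("constant", constant_hazard, math.exp(-0.1 * 5.0)),
                       ("linear", linear_hazard, math.exp(-0.01 * 5.0 ** 2))]:
    print(f"{name}: R(5) = {reliability(h, 5.0):.4f} (closed form {exact:.4f}), "
          f"F(5) = {unreliability(h, 5.0):.4f}, f(5) = {density(h, 5.0):.4f}")
```

The trapezoidal rule is exact for constant and linear integrands, so both rows should agree with their closed forms; for other hazard shapes, increase `steps` if more accuracy is needed.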

Goodness of Fit Test (Kolmogorov-Smirnov Test)

This test is used when an observed sample distribution has to be compared with a theoretical distribution.

Suppose that we have observations X1,...,Xn, which we think come from a distribution P. The Kolmogorov-Smirnov Test is used to test H0 : the samples come from P, against H1 : the samples do not come from P. The null hypothesis assumes no difference between the observed and theoretical distribution.

Given: observed sample (n = 100)

-0.16 -0.68 -0.32 -0.85 0.89 -2.28 0.63 0.41 0.15 0.74 1.30 -0.13 0.80 -0.75 0.28 -1.00 0.14 -1.38 -0.04 -0.25 -0.17 1.29 0.47 1.23 0.21 -0.04 0.07 -0.08 0.32 -0.17 0.13 -1.94 0.78 0.19 -0.12 -0.19 0.76 -1.48 -0.01 0.20 -1.97 -0.37 3.08 -0.40 0.80 0.01 1.32 -0.47 2.29 -0.26 -1.52 -0.06 -1.02 1.06 0.60 1.15 1.92 -0.06 -0.19 0.67 0.29 0.58 0.02 2.18 -0.04 -0.13 -0.79 -1.28 -1.41 -0.23 0.65 -0.26 -0.17 -1.53 -1.69 -1.60 0.09 -1.11 0.30 0.71 -0.88 -0.03 0.56 -3.68 2.40 0.62 0.52 -1.25 0.85 -0.09 -0.23 -1.16 0.22 -1.68 0.50 -0.35 -0.35 -0.33 -0.24 0.25

Do they come from N(0,1)?

Cumulative Distribution Function and Empirical Distribution Function

The cumulative distribution function F(x) of a random variable X is F(x) = P(X ≤ x). The cumulative distribution function uniquely characterizes a probability distribution. Given observations x1, ..., xn, the empirical distribution function Fobs(x) gives the proportion of the data that lies at or below x:

$$F_{obs}(x) = \frac{\text{number of observations} \le x}{\text{total number of observations}}$$

If we order the observations y1 ≤ y2 ≤ ... ≤ yn (with no ties), then $F_{obs}(y_i) = i/n$.

Compute the empirical distribution function

If the data are ordered, with x1 the smallest and xn the largest, then $F_{obs}(x_i) = i/n$.
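A minimal Python sketch of the empirical distribution function, using only the standard library: `bisect_right` on the sorted data counts how many observations are ≤ x. The helper name `ecdf` is hypothetical, and the five values are simply the first five observations of the sample listed above.

```python
from bisect import bisect_right


def ecdf(data):
    """Return F_obs(x) = (number of observations <= x) / n as a callable."""
    ordered = sorted(data)
    n = len(ordered)

    def f_obs(x):
        # bisect_right gives the count of ordered values that are <= x
        return bisect_right(ordered, x) / n

    return f_obs


sample = [-0.16, -0.68, -0.32, -0.85, 0.89]   # first five observations of the sample
f_obs = ecdf(sample)

# At the i-th smallest observation x_i (no ties), F_obs(x_i) = i / n.
for i, x in enumerate(sorted(sample), start=1):
    print(f"x_{i} = {x:5.2f}   F_obs = {f_obs(x):.1f}   i/n = {i / len(sample):.1f}")
```

Because it counts values ≤ x, the function also handles tied observations correctly, where the plain i/n rule would need adjusting.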

For each observation xi, compute Fexp(xi) = P(Z ≤ xi). The results are given in the table. In this case, we use the standard normal table to determine the expected distribution function.

We want to compare the empirical distribution function of the data, Fobs, with the cumulative distribution function associated with the null hypothesis, Fexp (the expected CDF). The Kolmogorov-Smirnov statistic is

$$D_n = \max_x \left| F_{exp}(x) - F_{obs}(x) \right|$$

The value Dn = 0.092 is the maximum shown in the table.
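The one-sample test for the N(0,1) question can be sketched in Python as follows. Two choices here are assumptions that differ from the hand method: Φ(x) is computed with `math.erf` instead of a standard normal table, and because Fobs is a step function, the statistic is checked on both sides of each step (i/n - Fexp and Fexp - (i-1)/n). The value printed may therefore differ somewhat from the tabulated 0.092; the conclusion at the 95% level is unchanged.

```python
import math


def std_normal_cdf(x):
    """Phi(x) = P(Z <= x) for Z ~ N(0, 1), via the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def ks_statistic(data, cdf):
    """One-sample KS statistic D_n = sup_x |F_exp(x) - F_obs(x)|.

    F_obs jumps at each observation, so the supremum is reached at or just
    below an observation; both sides of every jump are checked.
    """
    ordered = sorted(data)
    n = len(ordered)
    d = 0.0
    for i, x in enumerate(ordered, start=1):
        f_exp = cdf(x)
        d = max(d, i / n - f_exp, f_exp - (i - 1) / n)
    return d


observations = [
    -0.16, -0.68, -0.32, -0.85, 0.89, -2.28, 0.63, 0.41, 0.15, 0.74,
    1.30, -0.13, 0.80, -0.75, 0.28, -1.00, 0.14, -1.38, -0.04, -0.25,
    -0.17, 1.29, 0.47, 1.23, 0.21, -0.04, 0.07, -0.08, 0.32, -0.17,
    0.13, -1.94, 0.78, 0.19, -0.12, -0.19, 0.76, -1.48, -0.01, 0.20,
    -1.97, -0.37, 3.08, -0.40, 0.80, 0.01, 1.32, -0.47, 2.29, -0.26,
    -1.52, -0.06, -1.02, 1.06, 0.60, 1.15, 1.92, -0.06, -0.19, 0.67,
    0.29, 0.58, 0.02, 2.18, -0.04, -0.13, -0.79, -1.28, -1.41, -0.23,
    0.65, -0.26, -0.17, -1.53, -1.69, -1.60, 0.09, -1.11, 0.30, 0.71,
    -0.88, -0.03, 0.56, -3.68, 2.40, 0.62, 0.52, -1.25, 0.85, -0.09,
    -0.23, -1.16, 0.22, -1.68, 0.50, -0.35, -0.35, -0.33, -0.24, 0.25,
]

d_n = ks_statistic(observations, std_normal_cdf)
print(f"n = {len(observations)}, D_n = {d_n:.3f}")
```

For cross-checking, SciPy's `scipy.stats.kstest(observations, "norm")` computes the same two-sided statistic.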

What is the critical value? At the 95% level, the critical value is approximately

$$D_{crit} \approx \frac{1.36}{\sqrt{n}}$$

Here the sample size is n = 100, so Dcrit = 0.136.

Acceptance criterion: if the calculated value is less than the critical value, do not reject the null hypothesis. Rejection criterion: if the calculated value is greater than the critical value, reject the null hypothesis. Since 0.092 < 0.136, we do not reject the null hypothesis.
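The decision rule can be written as a short Python sketch using the large-sample approximation Dcrit ≈ 1.36/√n. The function names and the `c_alpha` parameter are hypothetical; 1.36 corresponds to the 95% level used in the text.

```python
import math


def ks_critical_value(n, c_alpha=1.36):
    """Large-sample approximation D_crit ~ c_alpha / sqrt(n); c_alpha = 1.36 at the 95% level."""
    return c_alpha / math.sqrt(n)


def ks_decision(d_n, n, c_alpha=1.36):
    """Compare the KS statistic with the approximate critical value."""
    d_crit = ks_critical_value(n, c_alpha)
    verdict = "reject H0" if d_n > d_crit else "do not reject H0"
    return d_crit, verdict


# Values from the N(0,1) example: D_n = 0.092 with n = 100.
d_crit, verdict = ks_decision(0.092, 100)
print(f"D_crit = {d_crit:.3f}: {verdict}")
```

This prints `D_crit = 0.136: do not reject H0`. For small samples the approximation is rough, and an exact table of critical values is preferable.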

Given two samples, test whether their distributions are the same. Compute the observed cumulative distribution functions of the two samples and find their maximum difference.

X: 1.2, 1.4, 1.9, 3.7, 4.4, 4.8, 9.7, 17.3, 21.1, 28.4
Y: 5.6, 6.5, 6.6, 6.9, 9.2, 10.4, 10.6, 19.3

We sort the combined sample in order to compute the empirical CDFs. The Kolmogorov-Smirnov statistic is again the maximum absolute difference between the two observed distribution functions; here Dn = 0.6. For two samples, the 95% critical value can be approximated by

$$D_{crit} \approx 1.36\sqrt{\frac{n_x + n_y}{n_x n_y}}$$

In our case nx = 10 and ny = 8, so Dcrit = 0.645. Since 0.6 < 0.645, we retain the null hypothesis.
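The two-sample calculation can be sketched in Python. Both empirical CDFs change only at points of the pooled sample, so evaluating |Fx(t) - Fy(t)| at every pooled value is enough to find the maximum. The function names are hypothetical.

```python
import math
from bisect import bisect_right


def two_sample_ks(x, y):
    """D = max over the pooled sample of |F_x(t) - F_y(t)|, with F(t) = count(<= t) / n."""
    xs, ys = sorted(x), sorted(y)
    nx, ny = len(xs), len(ys)
    return max(abs(bisect_right(xs, t) / nx - bisect_right(ys, t) / ny)
               for t in xs + ys)


def two_sample_critical_value(nx, ny, c_alpha=1.36):
    """95% large-sample approximation: c_alpha * sqrt((nx + ny) / (nx * ny))."""
    return c_alpha * math.sqrt((nx + ny) / (nx * ny))


X = [1.2, 1.4, 1.9, 3.7, 4.4, 4.8, 9.7, 17.3, 21.1, 28.4]
Y = [5.6, 6.5, 6.6, 6.9, 9.2, 10.4, 10.6, 19.3]

d = two_sample_ks(X, Y)
d_crit = two_sample_critical_value(len(X), len(Y))
print(f"D = {d:.3f}, D_crit = {d_crit:.3f}")
print("reject H0" if d > d_crit else "do not reject H0")
```

With the X and Y samples above this gives D = 0.6 (reached at t = 4.8, where Fx = 0.6 and Fy = 0) and D_crit ≈ 0.645, matching the hand calculation.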

Statistical Type I and Type II Errors

Type I error: H0 is rejected, although it's true ("false alarm"). Type II error: H0 isn't rejected, although it's false. The actual attributes of the population distribution(s) and the error types divide the results into four cases:

| | H0 is true | H0 is false |
|---|---|---|
| H0 isn't rejected | The right decision | Type II error |
| H0 is rejected | Type I error | The right decision |

Type I error, also known as a "false positive": the error of rejecting a null hypothesis when it is actually true. It is the error of accepting an alternative hypothesis (the real hypothesis of interest) when the results can be attributed to chance. It occurs when we observe a difference when in truth there is none (more specifically, no statistically significant difference).

Type II error, also known as a "false negative": the error of not rejecting a null hypothesis when the alternative hypothesis is the true state of nature. It is the error of failing to accept an alternative hypothesis, typically because the test lacks adequate power. It occurs when we fail to observe a difference when in truth there is one. So the probability of making a Type II error in a test with rejection region R is $1 - P(R \mid H_a \text{ is true})$, and the power of the test is $P(R \mid H_a \text{ is true})$.

The probability of a Type I error is called the risk or the level of significance of the test, and it is often denoted by α. The greatest allowed level of significance is often the starting point of hypothesis testing. The probability of a Type II error often can't be calculated, because H0 may be false in many ways. Often some sort of (over)estimate is calculated by assuming a typical, relatively small departure from H0. This probability is usually denoted by β. The value 1 - β is called the power of the test. The more powerful a test is, the smaller the deviation from H0 it can detect.
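α and β can be made concrete with a small Monte Carlo sketch for the one-sample Kolmogorov-Smirnov test against N(0,1) at the 95% level (Dcrit ≈ 1.36/√n). When samples are drawn from N(0,1), every rejection is a Type I error, so the rejection rate estimates α. When samples are drawn from a shifted distribution, every non-rejection is a Type II error, so the rejection rate estimates the power 1 - β. The alternative N(0.3, 1), the sample size of 100, the 2000 trials, and the seed are arbitrary assumptions for illustration; the estimates will vary with them.

```python
import math
import random


def std_normal_cdf(x):
    """Phi(x) = P(Z <= x) for Z ~ N(0, 1)."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def ks_statistic(data):
    """One-sample KS statistic of `data` against N(0, 1), checking both sides of each step."""
    ordered = sorted(data)
    n = len(ordered)
    d = 0.0
    for i, x in enumerate(ordered, start=1):
        f = std_normal_cdf(x)
        d = max(d, i / n - f, f - (i - 1) / n)
    return d


def rejection_rate(true_mean, n=100, trials=2000, seed=1):
    """Fraction of simulated N(true_mean, 1) samples for which H0: N(0, 1) is rejected."""
    rng = random.Random(seed)
    d_crit = 1.36 / math.sqrt(n)   # approximate 95% critical value
    rejections = 0
    for _ in range(trials):
        sample = [rng.gauss(true_mean, 1.0) for _ in range(n)]
        if ks_statistic(sample) > d_crit:
            rejections += 1
    return rejections / trials


alpha_hat = rejection_rate(true_mean=0.0)   # H0 true: rejections are Type I errors
power_hat = rejection_rate(true_mean=0.3)   # H0 false: non-rejections are Type II errors
print(f"estimated alpha = {alpha_hat:.3f}")
print(f"estimated power = {power_hat:.3f}, estimated beta = {1 - power_hat:.3f}")
```

The estimated α should land near the nominal 0.05; a larger shift or a larger n would raise the power and shrink β.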