Improvements and Performance Analysis of GATE Simulation on HPC Cluster

This report covers the status of GATE-related projects presented in Clermont-Ferrand in May 2017 by Liliana Caldeira, Mirjam Lenz, and Uwe Pietrzyk of Research Center Juelich, a member of the Helmholtz-Gemeinschaft. It focuses on running GATE on a high-performance computing (HPC) cluster, specifically the JURECA supercomputer at the Juelich Supercomputing Center. It describes how the GATE cluster tools were adapted to support the Slurm workload manager for both CPU and GPU simulations, and compares the CPU and GPU versions by their numbers of true, scatter, and random coincidence events.

Presentation Transcript

Part I: Status of GATE-related projects (Clermont-Ferrand; May 2017)
Liliana Caldeira, Mirjam Lenz & Uwe Pietrzyk
Institute of Neuroscience and Medicine (INM), Research Center Juelich, Germany
School of Mathematics and Natural Sciences, University of Wuppertal, Germany
Member of the Helmholtz Association (Mitglied der Helmholtz-Gemeinschaft)

List of Activities, FZ-Jülich / Uni Wuppertal
Liliana Caldeira, PhD: Improvements for running GATE on an HPC cluster (CPU/GPU)

JURECA
JURECA (Juelich Research on Exascale Cluster Architectures) is a supercomputer at the Jülich Supercomputing Center:
- 1872 compute nodes, each with two Intel Xeon E5-2680 v3 Haswell CPUs
- 75 of these nodes are additionally equipped with two NVIDIA K80 GPUs
- Workload manager: Slurm (Simple Linux Utility for Resource Management)

GATE on JURECA
- GATE v7.2 was used.
- The cluster tools of GATE were adapted to support the Slurm workload manager for both the CPU and GPU simulations.
- The GPU simulations were performed with a new version of the existing GPU code that additionally produces phantom scatter information.

1) Phantom scatter flag is missing in output
2) Source position is also at the borders of the phantom (BOX instead of brain-like shape)

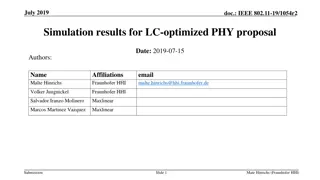

Work in Progress
Comparison of the CPU and GPU versions of GATE by the number of true, scatter, and random coincidence events. Differences between CPU and GPU are always under 2% for all cases (true, random, and scatter).

Number of detected coincidences, time point 3599-3600 s:

| | CPU | GPU |
|---|---|---|
| Total coincidences | 1122090 | 1122396 |
| True | 407310 | 408369 |
| Scatter | 376153 | 377198 |
| Random | 338627 | 336829 |

Note: Trues and scatter are now separated; before, scatter looked like trues.
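As a quick check of the "under 2%" statement, the relative differences can be recomputed from the counts reported above. This is only a sketch: the slide does not say how the percentage was defined, so normalising by the CPU count is an assumption, and the names `counts` and `relative_difference_percent` are illustrative.

```python
# Coincidence counts for the 3599-3600 s time point, copied from the slide.
# Each entry is (CPU count, GPU count).
counts = {
    "Total": (1122090, 1122396),
    "True": (407310, 408369),
    "Scatter": (376153, 377198),
    "Random": (338627, 336829),
}


def relative_difference_percent(cpu, gpu):
    """Absolute GPU-vs-CPU difference as a percentage of the CPU count.

    Assumption: the slide does not state its normalisation; the CPU
    count is used as the reference here.
    """
    return abs(gpu - cpu) / cpu * 100.0


differences = {
    name: relative_difference_percent(cpu, gpu)
    for name, (cpu, gpu) in counts.items()
}

for name, diff in differences.items():
    print(f"{name:8s} {diff:.2f} %")
```

With this definition every category stays well below the 2% bound quoted on the slide, and the true, scatter, and random counts add up to the totals for both CPU and GPU.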

Work in Progress
- Provide an overview of the full runtime of the simulation.
- Compare the runtime of the CPU and GPU simulations.

| Configuration (per node) | Code | Runtime |
|---|---|---|
| CPU: 1 simulation | GATE 7.2 | 2:20:52 |
| GPU: 1 simulation | GATE 7.2 with changes/sc | 0:20:05 |
| CPU: 24 simulations (in parallel) | GATE 7.2 | 3:01:57 |
| GPU: 48 simulations | GATE 7.2 with changes/sc | 8:48:02 |
| GPU: 48 simulations | GATE 7.2 | 7:02:22* |

1) The 3600 s simulation was split into one-second frames.
2) Weak scaling tests found the optimum at 24 jobs per node (CPU) and 48 jobs per node (GPU).
3) The GPU version does not scale as well as the CPU version.
4) The GPU group in Juelich is looking into it.

* Full runtime GPU = 3600 s / 48 s × 7 hours? Full runtime CPU = 3600 s / 24 s × 3 hours?
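The full-runtime estimate in the footnote can be worked through with the measured per-node runtimes. The following is a rough sketch rather than the authors' calculation: it assumes a single node processes all 3600 one-second frames in consecutive batches (24 frames per batch on CPU, 48 on GPU) and that every batch takes as long as the measured one. The helper names `parse_hms` and `estimated_full_runtime` are hypothetical.

```python
from datetime import timedelta

TOTAL_FRAMES = 3600  # one-second frames in the 3600 s simulation


def parse_hms(text):
    """Convert an 'H:MM:SS' runtime string into a timedelta."""
    hours, minutes, seconds = (int(part) for part in text.split(":"))
    return timedelta(hours=hours, minutes=minutes, seconds=seconds)


def estimated_full_runtime(frames_per_batch, batch_runtime):
    """Extrapolate the wall time on one node.

    Assumption: batches run back to back and each takes as long as
    the measured batch, mirroring the slide's 3600/N x runtime formula.
    """
    batches = -(-TOTAL_FRAMES // frames_per_batch)  # ceiling division
    return batches * batch_runtime


# Measured runtimes taken from the table on the slide.
cpu_full = estimated_full_runtime(24, parse_hms("3:01:57"))
gpu_full = estimated_full_runtime(48, parse_hms("7:02:22"))

print(f"CPU: {cpu_full.total_seconds() / 3600:.1f} h on one node")
print(f"GPU: {gpu_full.total_seconds() / 3600:.1f} h on one node")
```

Under these assumptions the CPU configuration needs about 455 node-hours and the GPU configuration about 528, in line with the slide's rounded 150 × 3 hours and 75 × 7 hours. The slide marks both estimates with question marks, so they should be read as tentative.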

Work in Progress
Code bug: the GPU simulation stops or crashes before the end of the simulation time. If the simulated time window is lengthened, the simulation gets past the point where it previously stopped:
- 168 s to 169 s (duration 1 s): crashes at 168.9 s, duration 0.9 s
- 168 s to 170 s (duration 2 s): crashes at 169.8 s, duration 1.75 s

Incorporate the phantom scatter flag into the ROOT output directly in GATE (and not a posteriori, as is done now). Help from someone who knows how to do this would be welcome.

Contact
Prof. Dr. Uwe Pietrzyk
Institute of Neuroscience and Medicine (INM-4), Medical Imaging Physics
Forschungszentrum Jülich GmbH
U.Pietrzyk@fz-juelich.de
http://www.fz-juelich.de
and
School of Mathematics and Natural Science, Medical Physics
Bergische Universität Wuppertal
Uwe.Pietrzyk@uni-wuppertal.de
http://www.medizinphysik.uni-wuppertal.de
Germany