Scaling Puppet and Foreman for HPC by Trey Dockendorf

An introduction to Puppet configuration management, Hiera YAML data, and Foreman provisioning in an HPC environment. The talk covers why large HPC centers need to scale provisioning and management, and how Foreman's host life cycle management, key-value storage, NFS root provisioning, and boot workflow changes support that.


Presentation Transcript

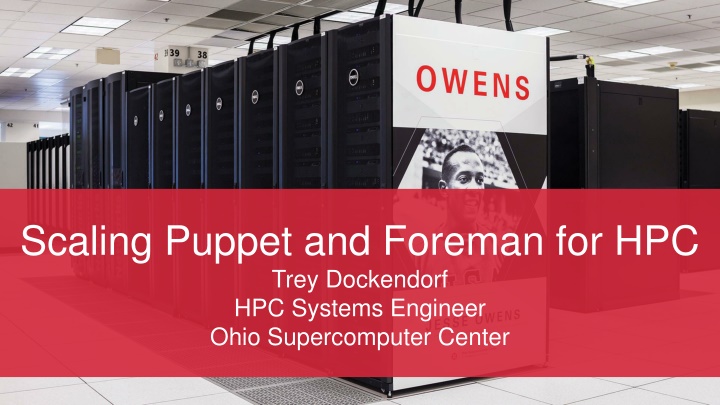

Scaling Puppet and Foreman for HPC
Trey Dockendorf
HPC Systems Engineer
Ohio Supercomputer Center

Introduction
- Puppet configuration management
- Hiera YAML data for Puppet
- Foreman provisioning
- HPC environment using NFS root
- Deployed to 1000 HPC and infrastructure systems
- First used on Owens, an 824-node cluster

Motivation
- A requirement of any large HPC center is scaling the provisioning and management of HPC clusters
- Common provisioning and configuration management for compute and infrastructure
- Unified management of PXE, DHCP, and DNS
- Auditing of network interfaces
- Support for testing configuration changes: unit and system tests

Foreman
- Host life cycle management
- DNS, DHCP, and TFTP for both infrastructure and HPC
- Tuning: PassengerMaxPoolSize
- NFS root support required custom provisioning templates
- Local boot PXE override so hosts always network boot
- Workflow change for HPC: no Build mode; key-value parameters are used instead
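The TFTP side of this workflow can be pictured with a small renderer. This is a hypothetical Python sketch, not the OSC ERB templates: it takes the nfsroot_host, nfsroot_path and nfsroot_kernel_version parameters (named on the next slide) and emits a PXELinux entry with no local-boot option, so the node always boots from the network. The boot/ file names and the dracut-style `root=nfs:` kernel argument are assumptions.

```python
def render_pxelinux(params: dict) -> str:
    """Render a PXELinux entry that always network boots an NFS-root node.

    Hypothetical sketch: the file layout and kernel arguments are assumed,
    only the nfsroot_* parameter names come from the talk.
    """
    host = params["nfsroot_host"]
    path = params["nfsroot_path"]
    kver = params["nfsroot_kernel_version"]
    # Assumed layout: kernel and initramfs named after the kernel version.
    kernel = f"boot/vmlinuz-{kver}"
    initrd = f"boot/initramfs-{kver}.img"
    # dracut-style NFS root argument; "ro" mounts the root read-only.
    append = f"initrd={initrd} ip=dhcp root=nfs:{host}:{path} ro"
    # There is deliberately no local-boot label: the only choice is the network.
    lines = [
        "DEFAULT nfsroot",
        "LABEL nfsroot",
        f"  KERNEL {kernel}",
        f"  APPEND {append}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_pxelinux({
        "nfsroot_host": "nfs01.example.com",
        "nfsroot_path": "/images/owens-compute",
        "nfsroot_kernel_version": "3.10.0-514.el7.x86_64",
    }))
```

Because the kernel version and NFS location are plain parameters, switching a node to a new image is a parameter change plus a TFTP sync rather than a Foreman "Build" cycle.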

Foreman Key-Value Storage
- Key-value pairs stored in Foreman as Parameters
- Change behavior during boot: nfsroot_build
- Change TFTP templates: nfsroot_path, nfsroot_host, nfsroot_kernel_version
- Hierarchical storage provides inheritance: base/owens -> base/owens/compute -> FQDN
- Managed using the web UI, and via the API with the scripts host-parameter.py and hostgroup-parameter.py
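The inheritance chain above can be sketched with plain dictionaries. This is a simplified, hypothetical model: it only covers nested hostgroups and host (FQDN) parameters, ignores Foreman's other parameter levels (global, domain, subnet, operating system), and does not call the Foreman API. The hostgroup titles follow the slide; the values are made up.

```python
def hostgroup_chain(title: str) -> list[str]:
    """Expand 'base/owens/compute' into ['base', 'base/owens', 'base/owens/compute']."""
    parts = title.split("/")
    return ["/".join(parts[: i + 1]) for i in range(len(parts))]


def resolve(hostgroup: str, group_params: dict, host_params: dict) -> dict:
    """Merge parameters from the least specific level to the most specific."""
    merged: dict = {}
    for level in hostgroup_chain(hostgroup):
        # A child hostgroup overrides values inherited from its parent.
        merged.update(group_params.get(level, {}))
    # Host (FQDN) parameters override every hostgroup value.
    merged.update(host_params)
    return merged


# Hypothetical parameter values for illustration only.
GROUP_PARAMS = {
    "base/owens": {"nfsroot_host": "nfs01.example.com", "nfsroot_build": "false"},
    "base/owens/compute": {"nfsroot_path": "/images/owens-compute"},
}
HOST_PARAMS = {"nfsroot_build": "true"}  # one node flagged for a rebuild

print(resolve("base/owens/compute", GROUP_PARAMS, HOST_PARAMS))
```

Setting nfsroot_build on a single FQDN therefore changes only that node, while nfsroot_path set once on base/owens/compute reaches every compute node.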

Foreman NFS Root Provisioning
- Provisioning handled by a read/write host
- NFS root support in Foreman written from scratch
- Read-only hosts have specific writable locations defined by /etc/statetab and /etc/rwtab
  - statetab persists through reboots
  - rwtab does not persist through reboots
- Read-only rebuild: nfsroot_build parameter
- osc-partition service
  - Partition scripts generated by Puppet
  - Defined using a partition schema in Hiera
  - partition-wait script if nfsroot_build=false
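The two tables can be read with a few lines of Python. This sketch assumes the RHEL readonly-root formats: /etc/rwtab lines are `<empty|files|dirs> <path>` and /etc/statetab lists one path per line; blank lines and `#` comments are skipped. The example file contents are hypothetical.

```python
from dataclasses import dataclass

RWTAB_MODES = {"empty", "files", "dirs"}


@dataclass(frozen=True)
class Writable:
    path: str
    mode: str         # 'empty', 'files' or 'dirs' for rwtab; 'state' for statetab
    persistent: bool  # statetab: kept across reboots; rwtab: tmpfs only


def _lines(text: str):
    """Yield non-blank, non-comment lines."""
    for raw in text.splitlines():
        line = raw.strip()
        if line and not line.startswith("#"):
            yield line


def parse_rwtab(text: str) -> list[Writable]:
    entries = []
    for line in _lines(text):
        parts = line.split(None, 1)
        # Lines with an unknown keyword are ignored.
        if len(parts) == 2 and parts[0] in RWTAB_MODES:
            entries.append(Writable(parts[1].strip(), parts[0], persistent=False))
    return entries


def parse_statetab(text: str) -> list[Writable]:
    return [Writable(line, "state", persistent=True) for line in _lines(text)]


# Hypothetical contents of /etc/rwtab and /etc/statetab.
RWTAB = """\
dirs /var/lib/puppet
empty /tmp
files /etc/resolv.conf
"""
STATETAB = """\
# persisted across reboots
/var/log
"""

for entry in parse_rwtab(RWTAB) + parse_statetab(STATETAB):
    print(entry.path, entry.mode, "persistent" if entry.persistent else "tmpfs")
```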

Parameter Manipulation Timing

| Operation | Avg Time (sec) | StdDev (sec) |
|---|---|---|
| host get | 0.522 | 0.021 |
| host list | 0.467 | 0.025 |
| host set | 0.581 | 0.024 |
| host set w/ TFTP sync | 1.321 | 0.057 |
| host delete | 0.433 | 0.026 |
| host delete w/ TFTP sync | 1.148 | 0.038 |
| hostgroup get | 0.510 | 0.026 |
| hostgroup list | 0.514 | 0.034 |
| hostgroup set | 0.526 | 0.030 |
| hostgroup delete | 0.489 | 0.030 |
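Averages and standard deviations like these come from timing each operation repeatedly. A minimal harness is sketched below; the timed operation is a hypothetical stand-in for a Foreman API round trip, not the actual host-parameter.py script, and the sample standard deviation is an assumption about how the table was computed.

```python
import statistics
import time
from typing import Callable


def benchmark(op: Callable[[], object], runs: int = 20) -> dict:
    """Time op() `runs` times; report mean and sample standard deviation in seconds."""
    if runs < 2:
        raise ValueError("need at least two runs for a standard deviation")
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        op()
        samples.append(time.perf_counter() - start)
    return {
        "avg": statistics.mean(samples),
        "stdev": statistics.stdev(samples),
        "runs": runs,
    }


def fake_host_get() -> None:
    # Hypothetical stand-in for an HTTP request to the Foreman API.
    time.sleep(0.01)


result = benchmark(fake_host_get, runs=10)
print(f"host get: avg {result['avg']:.3f}s, stdev {result['stdev']:.3f}s")
```

Reading the table the same way, the TFTP sync adds about 0.740 s to a host set (1.321 - 0.581) and about 0.715 s to a host delete (1.148 - 0.433), so it dominates the cost of those calls.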

Scaling Puppet - Standalone Hosts
- Typically the master compiles the catalog for agents
- Scaling achieved by load balancing between masters
- Subject Alternative Name certificates: any master can be the CA
- Masters synced with mcollective and r10k
- Environments isolated by the r10k control repo and git branching
- Foreman acts as the ENC (External Node Classifier) for Puppet
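To show what the ENC role involves, here is a minimal, hypothetical classifier in Python. Puppet runs an ENC executable with the node's certname as its argument and reads a YAML hash with `classes`, `parameters` and `environment`. Foreman fills this role in the talk; the static NODES table below is made up, and the sketch returns the exit code and output instead of exiting, so it can be tested in-process.

```python
import json
from typing import Optional

# Hypothetical stand-in for the host and hostgroup data Foreman would supply.
NODES = {
    "web01.example.com": {
        "classes": ["profile::base", "profile::web"],
        "parameters": {"cluster": "infrastructure"},
        "environment": "production",
    },
}


def classify(certname: str) -> Optional[dict]:
    """Look up the classification for one node, or None if it is unknown."""
    return NODES.get(certname)


def enc(certname: str) -> tuple[int, str]:
    """Return the (exit code, stdout) an ENC executable would produce."""
    node = classify(certname)
    if node is None:
        return 1, ""  # a non-zero exit makes Puppet treat classification as failed
    # A JSON object is also valid YAML, so no YAML library is needed.
    return 0, json.dumps(node)


code, text = enc("web01.example.com")
print(code, text)
```

A real ENC wrapper would print the text and exit with the code. The master is pointed at such an executable with `node_terminus = exec` and `external_nodes = /path/to/enc` in puppet.conf.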

Puppet Performance - Standalone Hosts

| | Resource Count | Compile Times (sec) |
|---|---|---|
| Min | 644 | 14 |
| Max | 9093 | 82 |
| Mean | 1313 | 21 |
| Std | 806 | 9 |

Scaling Puppet - HPC Systems
- Scaling achieved by removing the master and running masterless
- Primary bottleneck is the performance of reading manifests and modules, then compiling locally
- /opt/puppet manifests and modules synced by mcollective and r10k
- Read-write hosts still use Puppet masters
- Masterless Puppet run via papply
- Environment isolation defaults to the read-write host's
- Stateful: uses PuppetDB and the Foreman ENC
- Stateless: uses a minimal catalog run in two stages at boot
- Stateless: manages locations in rwtab
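A two-stage masterless run can be sketched as building one `puppet apply` command per stage. This is hypothetical: the talk uses a papply wrapper, and the /opt/puppet/environments layout, the `boot_stage` fact name and the early/late split below are assumptions rather than that wrapper's code. Facter turns `FACTER_<name>` environment variables into facts, so a manifest could branch on `$facts['boot_stage']`.

```python
import os
import posixpath
import shlex

PUPPET_ROOT = "/opt/puppet"  # hypothetical layout, kept in sync by mcollective and r10k
STAGES = ("early", "late")


def apply_command(stage: str, environment: str = "production") -> tuple[list[str], dict]:
    """Return (argv, env) for one stage of a masterless 'puppet apply' run."""
    if stage not in STAGES:
        raise ValueError(f"unknown stage {stage!r}; expected one of {STAGES}")
    env_path = posixpath.join(PUPPET_ROOT, "environments")
    argv = [
        "puppet", "apply",
        f"--environmentpath={env_path}",
        f"--environment={environment}",
        "--detailed-exitcodes",
        posixpath.join(env_path, environment, "manifests", "site.pp"),
    ]
    # Facter exposes FACTER_<name> variables as facts, e.g. $facts['boot_stage'].
    env = dict(os.environ, FACTER_boot_stage=stage)
    return argv, env


def succeeded(returncode: int) -> bool:
    """With --detailed-exitcodes, 0 means no changes and 2 means changes applied."""
    return returncode in (0, 2)


for stage in STAGES:
    argv, _ = apply_command(stage)
    print(f"FACTER_boot_stage={stage} {shlex.join(argv)}")
```

At boot, each stage would be run with `subprocess.run(argv, env=env)` and checked with `succeeded(result.returncode)`; exit codes 4 and 6 signal failures.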

Puppet Performance - Masterless

| | Early | Late | Compile |
|---|---|---|---|
| Min (sec) | 27 | 87 | 3 |
| Max (sec) | 257 | 414 | 19 |
| Mean (sec) | 115 | 185 | 9 |
| Std (sec) | 57 | 73 | 3 |

- Late contains 71 managed resources
- Late contains a 60-second wait for filesystems
- Times collected from system bring-up after maintenance

Cluster Definitions - YAML
- YAML files define each cluster
- Script used to sync the YAML with Foreman
- Loaded into Puppet as Hiera data
  - Makes Puppet aware of cluster nodes and their properties
  - Populates ClusterShell, pdsh, Torque, conman, powerman, SSH host-based auth, etc.
- YAML deployed to the root filesystem as a Python pickle and a Ruby Marshal file
- YAML on the root filesystem loaded to populate facts
  - Informational, such as node location
  - Determine behavior when Puppet runs
  - Ruby- and Python-based facts
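The pickle path can be sketched end to end: write the cluster definition once, then answer fact lookups from it. The YAML is assumed to be already parsed into a dict (PyYAML is not in the standard library), and the node names, fields and file name are hypothetical. Output follows Facter's executable external fact format of one `key=value` per line. Pickle is only safe here because the file is a trusted, locally deployed artifact.

```python
import os
import pickle
import tempfile

# Hypothetical cluster definition, as it might look after loading the YAML.
CLUSTER = {
    "name": "owens",
    "nodes": {
        "o0001": {"location": "row1-rack01-u01", "groups": ["compute"]},
        "o0002": {"location": "row1-rack01-u02", "groups": ["compute", "gpu"]},
    },
}


def write_pickle(cluster: dict, path: str) -> None:
    with open(path, "wb") as fh:
        pickle.dump(cluster, fh)


def node_facts(path: str, node: str) -> dict:
    """Load the pickle and return facts for one node ({} if it is unknown)."""
    with open(path, "rb") as fh:
        cluster = pickle.load(fh)
    info = cluster["nodes"].get(node)
    if info is None:
        return {}
    return {
        "cluster": cluster["name"],
        "node_location": info["location"],
        "node_groups": ",".join(info["groups"]),
    }


def groups(cluster: dict) -> dict:
    """Group -> sorted node list, the shape pdsh or ClusterShell group files need."""
    out: dict = {}
    for name, info in sorted(cluster["nodes"].items()):
        for group in info["groups"]:
            out.setdefault(group, []).append(name)
    return out


with tempfile.TemporaryDirectory() as tmp:
    pkl = os.path.join(tmp, "cluster.pkl")
    write_pickle(CLUSTER, pkl)
    # Executable external fact style: one key=value per line.
    for key, value in node_facts(pkl, "o0002").items():
        print(f"{key}={value}")
```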

Repositories
- Foreman Templates: https://github.com/treydock/osc-foreman-templates
- Foreman Plugin: https://github.com/treydock/foreman_osc
- NFS Root Module: https://github.com/treydock/puppet-nfsroot
- Puppet Masterless Module: https://github.com/treydock/puppet-puppet_masterless
- Cluster Facts Module: https://github.com/treydock/puppet-osc_facts