Huffman Codes for Data Compression: Analysis and Implementation

Huffman codes are a widely used technique for data compression, assigning binary codes to characters based on their frequency of occurrence. This lecture series delves into fixed-length codes, variable-length codes, and prefix codes, explaining their optimization and implementation for efficient data storage and transmission. Dive into the world of encoding and decoding with binary character codes, and understand how prefix codes simplify the decoding process, ensuring unambiguous translation of encoded data back to its original form.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

Analysis of Algorithms CS 477/677 Instructor: Monica Nicolescu Lecture 21

Huffman Codes Widely used technique for data compression Assume the data to be a sequence of characters Looking for an effective way of storing the data Binary character code Uniquely represents a character by a binary string CS 477/677 - Lecture 21 2

Fixed-Length Codes E.g.: Data file containing 100,000 characters a 45 b 13 c 12 d 16 e 9 f 5 Frequency (thousands) 3 bits needed a = 000, b = 001, c = 010, d = 011, e = 100, f = 101 Requires: 100,000 3 = 300,000 bits CS 477/677 - Lecture 21 3

Huffman Codes Idea: Use the frequencies of occurrence of characters to build a optimal way of representing each character a 45 b 13 c 12 d 16 e 9 f 5 Frequency (thousands) CS 477/677 - Lecture 21 4

Variable-Length Codes E.g.: Data file containing 100,000 characters a 45 b 13 c 12 d 16 e 9 f 5 Frequency (thousands) Assign short codewords to frequent characters and long codewords to infrequent characters a = 0, b = 101, c = 100, d = 111, e = 1101, f = 1100 (45 1 + 13 3 + 12 3 + 16 3 + 9 4 + 5 4) 1,000 = 224,000 bits CS 477/677 - Lecture 21 5

Prefix Codes Prefix codes: Codes for which no codeword is also a prefix of some other codeword Better name would be prefix-free codes We can achieve optimal data compression using prefix codes We will restrict our attention to prefix codes CS 477/677 - Lecture 21 6

Encoding with Binary Character Codes Encoding Concatenate the codewords representing each character in the file E.g.: a = 0, b = 101, c = 100, d = 111, e = 1101, f = 1100 abc = 0 101 100 = 0101100 CS 477/677 - Lecture 21 7

Decoding with Binary Character Codes Prefix codes simplify decoding No codeword is a prefix of another the codeword that begins an encoded file is unambiguous Approach Identify the initial codeword Translate it back to the original character Repeat the process on the remainder of the file E.g.: a = 0, b = 101, c = 100, d = 111, e = 1101, f = 1100 001011101 = 0 0 101 1101 = aabe CS 477/677 - Lecture 21 8

Prefix Code Representation Binary tree whose leaves are the given characters Binary codeword the path from the root to the character, where 0 means go to the left child and 1 means go to the right child Length of the codeword Length of the path from root to the character leaf (depth of node) 100 0 1 100 0 1 86 a: 45 55 14 0 1 1 0 0 30 25 58 28 14 0 1 1 0 0 1 0 1 0 1 c: 12 b: 13 d: 16 14 a: 45 b: 13 c: 12 d: 16 e: 9 f: 5 0 1 f: 5 e: 9 CS 477/677 - Lecture 21 9

Optimal Codes An optimal code is always represented by a full binary tree Every non-leaf has two children Fixed-length code is not optimal, variable-length is How many bits are required to encode a file? Let C be the alphabet of characters Let f(c) be the frequency of character c Let dT(c) be the depth of c s leaf in the tree T corresponding to a prefix code C c = ( ) ( ) Tc ( ) B T f c d the cost of tree T CS 477/677 - Lecture 21 10

Constructing a Huffman Code Let s build a greedy algorithm that constructs an optimal prefix code (called a Huffman code) Assume that: C is a set of n characters Each character has a frequency f(c) Idea: The tree T is built in a bottom up manner Start with a set of |C| = n leaves At each step, merge the two least frequent objects: the frequency of the new node = sum of two frequencies Use a min-priority queue Q, keyed on f to identify the two least frequent objects f: 5 e: 9 c: 12 b: 13 d: 16 a: 45 CS 477/677 - Lecture 21 11

Example c: 12 b: 13 14 d: 16 a: 45 f: 5 e: 9 c: 12 b: 13 d: 16 a: 45 0 1 f: 5 e: 9 14 d: 16 25 a: 45 30 25 a: 45 0 1 0 1 1 0 0 1 f: 5 e: 9 c: 12 b: 13 d: 16 c: 12 b: 13 14 0 1 f: 5 e: 9 100 1 0 a: 45 55 1 0 a: 45 55 0 1 30 25 1 0 1 0 30 25 d: 16 c: 12 b: 13 14 1 1 0 0 1 0 d: 16 c: 12 b: 13 14 f: 5 e: 9 0 1 CS 477/677 - Lecture 21 12 f: 5 e: 9

Building a Huffman Code Alg.: HUFFMAN(C) 1. n C 2. Q C 3. fori 1ton 1 4. do allocate a new node z 5. left[z] x EXTRACT-MIN(Q) 6. right[z] y EXTRACT-MIN(Q) 7. f[z] f[x] + f[y] 8. INSERT (Q, z) 9. return EXTRACT-MIN(Q) Running time: O(nlgn) O(n) O(nlgn) CS 477/677 - Lecture 21 13

Greedy Choice Property Let C be an alphabet in which each character c C has frequency f[c]. Let x and y be two characters in C having the lowest frequencies. Then, there exists an optimal prefix code for C in which the codewords for x and y have the same (maximum) length and differ only in the last bit. CS 477/677 - Lecture 21 14

Proof of the Greedy Choice Idea: Consider a tree T representing an arbitrary optimal prefix code Modify T to make a tree representing another optimal prefix code in which x and y will appear as sibling leaves of maximum depth The codes of x and y will have the same length and differ only in the last bit CS 477/677 - Lecture 21 15

Proof of the Greedy Choice (cont.) T T T a x a b y y x y a b x b a, b two characters, sibling leaves of max. depth in T Assume: f[a] f[b] and f[x] f[y] f[x] and f[y] are the two lowest leaf frequencies, in order f[x] f[a] and f[y] f[b] Exchange the positions of a and x(T ) and of b and y(T ) CS 477/677 - Lecture 21 16

Proof of the Greedy Choice (cont.) T T T a x a b y y x y a b x b c c B(T) B(T ) = ( ) ( ) ( ) ( ) f c d c f c d c ' T T C C = f[x]dT(x) + f[a]dT(a) f[x]dT (x) f[a]dT (a) = f[x]dT(x) + f[a]dT(a) f[x]dT(a) f[a]dT (x) = (f[a] - f[x]) (dT(a) - dT(x)) 0 0 x is a minimum frequency leaf a is a leaf of maximum depth 0 CS 477/677 - Lecture 21 17

Proof of the Greedy Choice (cont.) T T T a x a b y y x y a b x b B(T) B(T ) 0 Similarly, exchanging y and b does not increase the cost: B(T ) B(T ) 0 B(T ) B(T). Also, since T is optimal, B(T) B(T ) Therefore, B(T) = B(T ) T is an optimal tree, in which x and y are sibling leaves of maximum depth CS 477/677 - Lecture 21 18

Discussion Greedy choice property: Building an optimal tree by mergers can begin with the greedy choice: merging the two characters with the lowest frequencies The cost of each merger is the sum of frequencies of the two items being merged Of all possible mergers, HUFFMAN chooses the one that incurs the least cost CS 477/677 - Lecture 21 19

Interval Partitioning Lecture j starts at sj and finishes at fj Goal: find minimum number of classrooms to schedule all lectures so that no two occur at the same time in the same room Ex: this schedule uses 4 classrooms to schedule 10 lectures e j g c d b h a f i 3 3:30 4 4:30 9 9:30 10 10:30 11 11:30 12 12:30 1 1:30 2 2:30 Time CS 477/677 - Lecture 21 20

Interval Partitioning Lecture j starts at sj and finishes at fj Goal: find minimum number of classrooms to schedule all lectures so that no two occur at the same time in the same room Ex: this schedule uses only 3 f c d j i b g a h e 3 3:30 4 4:30 9 9:30 10 10:30 11 11:30 12 12:30 1 1:30 2 2:30 Time CS 477/677 - Lecture 21 21

Interval Partitioning: Lower Bound on Optimal Solution The depth of a set of open intervals is the maximum number that contain any given time Key observation: The number of classrooms needed depth Ex: Depth of schedule below = 3 schedule below is optimal Does there always exist a schedule equal to depth of intervals? c d a, b, c all contain 9:30 f j i b g a h e 3 3:30 4 4:30 9 9:30 10 10:30 11 11:30 12 CS 477/677 - Lecture 21 12:30 1 1:30 2 2:30 22 Time

Greedy Strategy Consider lectures in increasing order of start time: assign lecture to any compatible classroom Labels set {1, 2, 3, , d}, where d is the depth of the set of intervals Overlapping intervals are given different labels Assign a label that has not been assigned to any previous interval that overlaps it CS 477/677 - Lecture 21 23

Greedy Algorithm Sort intervals by start times, such that s1 s2 ... sn (let I1, I2, .., Indenote the intervals in this order) forj = 1 ton 1. 2. Exclude from set {1, 2, , d} the labels of preceding and overlapping intervals Ii from consideration for Ij if there is any label from {1, 2, , d} that was not excluded assign that label to Ij 3. 4. 5. else leave Ij unlabeled 6. CS 477/677 - Lecture 21 24

Example a b c d e f g h j i 3 3:30 4 4:30 9 9:30 10 10:30 11 11:30 12 12:30 1 1:30 2 2:30 Time 3 f c d j i 2 b g 1 a h e 3 3:30 4 4:30 9 9:30 10 10:30 11 11:30 12 12:30 CS 477/677 - Lecture 21 1 1:30 2 2:30 Time 25

Claim Every interval will be assigned a label For interval Ij, assume there are t intervals earlier in the sorted order that overlap it We have t + 1 intervals that pass over a common point on the timeline t + 1 d, thus t d 1 At least one of the d labels is not excluded by this set of t intervals, which we can assign to Ij CS 477/677 - Lecture 21 26

Claim No two overlapping intervals are assigned the same label Consider I and I that overlap, and I precedes I in the sorted order When I is considered, the label for I is excluded from consideration Thus, the algorithm will assign a different label to I CS 477/677 - Lecture 21 27

Greedy Choice Property The greedy algorithm schedules every interval on a resource, using a number of resources equal to the depth of the set of intervals. This is the optimal number of resources needed. Proof: Follows from previous claims Structural proof Discover a simple structural bound asserting that every possible solution must have a certain value Then show that your algorithm always achieves this bound CS 477/677 - Lecture 21 28

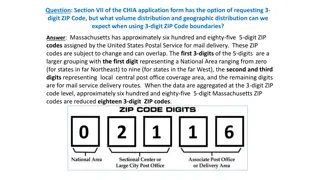

Scheduling to Minimizing Lateness Single resource processes one job at a time Job j requires tj units of processing time, is due at time dj If j starts at time sj, it finishes at time fj = sj + tj Lateness: j = max { 0, fj - dj } Goal: schedule all jobs to minimize maximum lateness L = max j Example: dj 6 8 1 2 3 4 5 6 tj 3 2 1 4 3 2 9 9 14 15 lateness = 2 lateness = 0 max lateness = 6 d3 = 9 d2 = 8 d6 = 15 d1 = 6 d5 = 14 d4 = 9 12 13 14 15 0 1 2 3 4 5 6 7 8 9 10 11 CS 477/677 - Lecture 21 29

Greedy Algorithms Greedy strategy: consider jobs in some order [Shortest processing time first] Consider jobs in ascending order of processing time tj counterexample 1 2 Choosing t1 first: l2 = 1 Choosing t2 first: l2 = l1 = 0 tj 1 10 dj 100 10 [Smallest slack] Consider jobs in ascending order of slack dj - tj counterexample Choosing t2 first: l1 = 9 Choosing t1 first: l1 = 0 and l2 = 1 1 2 tj 1 10 dj 2 10 CS 477/677 - Lecture 21 30

Readings Chapters 14, 15 CS 477/677 - Lecture 21 31