Enhancing I/O Performance Through Adaptive Data Compression in Climate Simulations

This research focuses on improving I/O performance for climate simulations by employing adaptive data compression techniques. Scientific data compression methods, such as lossy and lossless compression, are explored to reduce data volume and increase effective I/O bandwidth. The study highlights the challenges in selecting the best compression method for different data variables and suggests potential solutions for enhancing compression efficiency in scientific computing applications.

Presentation Transcript

Adaptive Compression to Improve I/O Performance for Climate Simulations
Swati Singhal, Alan Sussman
UMIACS and Department of Computer Science
The 2nd International Workshop on Data Reduction for Big Scientific Data

Scientific Data Compression?

- Data reduction is a growing concern for scientific computing.
- Motivating example: a meso-scale climate simulation application from the Department of Atmospheric and Oceanic Sciences at UMD.
- It is an ensemble simulation with data assimilation on ~1.3 million grid points that repeatedly forecasts a nine-hour period.
- Simulation time: ~65 minutes on a cluster for a 9-hour forecast (~60% of the time is spent in I/O and extraneous work).
- It generates ~283 GB of data per simulation.
- Possible solution: data compression, which reduces data volume for I/O and increases effective I/O bandwidth.

Scientific Data Compression

- Scientific data are often multidimensional arrays of floating-point numbers, stored in self-describing data formats (e.g., netCDF, HDF).
- Such data are difficult to compress because the lower-order bytes have high entropy, so a good compression ratio is hard to achieve. Two nearby single-precision values share their leading bytes but differ in the trailing ones:
  - 0.00589 = 00111011 11000001 00000000 11100111
  - 0.00590 = 00111011 11000001 01010100 11001010

What methods are available to compress scientific data? There are two categories of data compression methods:

- Lossy compression, e.g., ZFP (Lindstrom), SZ (Di), ISABELA (Lakshminarasimhan). Lossy methods provide high compression, but precision is lost.
- Lossless compression, e.g., ZLIB, LZO, BZIP2, FPC (Burtscher), ISOBAR (Schendel). Lossless methods retain precision but do not achieve high compression on their own; they require preprocessing techniques.
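The two values on this slide, 0.00589 and 0.00590, can be inspected directly. The following Python sketch prints their IEEE 754 single-precision bytes; it assumes big-endian order for display, and the helper name `float32_bits` is illustrative rather than anything from the presentation.

```python
import struct


def float32_bits(value):
    """Return the four IEEE 754 single-precision bytes of value as bit strings."""
    # Big-endian packing puts the sign/exponent byte first, as on the slide.
    raw = struct.pack(">f", value)
    return [format(byte, "08b") for byte in raw]


for value in (0.00589, 0.00590):
    print(value, " ".join(float32_bits(value)))
# 0.00589 00111011 11000001 00000000 11100111
# 0.0059 00111011 11000001 01010100 11001010
```

The first two bytes are identical for both values, while the last two look unrelated, which is why general-purpose compressors struggle with raw floating-point arrays.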

Scientific Data Compression

Which compression method should be used for the given data?

- Typically, a one-time offline analysis is run on a small subset of the data.
- The criterion is either compression ratio or compression speed, depending on application needs.
- One compression method is used for all the data variables.

Issues:

- Manual effort is required to select a compression scheme.
- Limited measurements are available to define performance criteria.
- Compression benefits are lost, because the best compression method differs for different variables (and may also change for the same variable over time).

Can we do better?

Best compression method differs for different variables

[Figure: results from a single WRF output file. Two panels, compression speed (MB/s) and compression ratio, both on a log scale, for the variables T, SR, and F under Prep1 + LZO, Prep1 + BZIP2, Prep2 + BZIP2, Prep2 + LZO, and Prep3 + BZIP2.]

ACOMPS: Adaptive Compression Scheme

An adaptive compression tool that:

- Supports a set of lossless compression methods combined with different memory preprocessing techniques.
- Automatically selects the best compression method for each variable in the dataset.
- Allows flexible criteria to select the best compression method.
- Allows compressing data in smaller units (chunks) for selective decompression, to increase effective I/O bandwidth.
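The idea of compressing in smaller chunks for selective decompression can be sketched as follows. This is a minimal illustration, not the ACOMPS implementation: it assumes fixed-size chunks and uses `zlib` from the Python standard library, and the function names `compress_chunks` and `read_range` are hypothetical.

```python
import zlib


def compress_chunks(data, chunk_size):
    """Compress data in independent chunks so each can be decompressed alone."""
    return [zlib.compress(data[i:i + chunk_size])
            for i in range(0, len(data), chunk_size)]


def read_range(chunks, chunk_size, start, length):
    """Return data[start:start + length], decompressing only the chunks it touches."""
    if length <= 0:
        return b""
    first = start // chunk_size
    last = (start + length - 1) // chunk_size
    piece = b"".join(zlib.decompress(c) for c in chunks[first:last + 1])
    offset = start - first * chunk_size
    return piece[offset:offset + length]


data = bytes(range(256)) * 40            # 10240 bytes of sample data
chunks = compress_chunks(data, 1024)     # 10 independent chunks
subset = read_range(chunks, 1024, 1500, 2000)  # touches only chunks 1 to 3
```

Smaller chunks reduce the amount of data decompressed per read but usually lower the compression ratio, so the chunk size is a trade-off.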

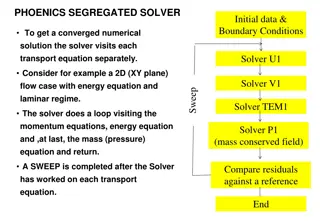

ACOMPS: Adaptive Compression Scheme

Preprocessing techniques supported:

- Bytes segregation (B): identify and segregate compressible bytes (based on skewness) for compression.
- Byte-Wise segregation (BW): segregate compressible bytes and group them by their position in the floating-point number (the first compressible byte of all grid cells, then the second compressible byte of all grid cells, and so on).
- Byte-Wise segregation and XOR (BWXOR): byte-wise segregation followed by XOR.

Lossless compression methods supported: LZO, ZLIB, BZIP2.

Total of 9 compression techniques: B-LZO, B-ZLIB, B-BZIP2, BW-LZO, BW-ZLIB, BW-BZIP2, BWXOR-LZO, BWXOR-ZLIB, BWXOR-BZIP2.

Other preprocessing and compression techniques (both lossy and lossless) can be added.
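A rough Python sketch of the byte-wise grouping idea is given below. It is a simplification under stated assumptions: values are single-precision floats packed big-endian, all four bytes are grouped (the skewness-based identification of compressible bytes is not reproduced), and the XOR step is omitted because the slides do not specify it. The function names are hypothetical.

```python
import struct


def bytewise_segregate(values):
    """Group byte k of every float32 together: all first bytes, then all second bytes, ..."""
    raw = struct.pack(f">{len(values)}f", *values)
    return b"".join(raw[k::4] for k in range(4))


def bytewise_restore(shuffled, count):
    """Invert bytewise_segregate for count float32 values."""
    planes = [shuffled[k * count:(k + 1) * count] for k in range(4)]
    # Re-interleave: take one byte from each plane for every grid cell.
    raw = bytes(b for cell in zip(*planes) for b in cell)
    return list(struct.unpack(f">{count}f", raw))


grouped = bytewise_segregate([0.00589, 0.00590])
print(grouped.hex(" "))
# 3b 3b c1 c1 00 54 e7 ca
```

After grouping, the similar high-order bytes of neighbouring grid cells sit next to each other (`3b 3b c1 c1`), which is the kind of repetition that ZLIB, LZO, or BZIP2 can exploit.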

ACOMPS: Adaptive Compression Scheme

Criterion to evaluate the performance of any compression technique X:

performance_X = compression_speed_X × WCS + compression_ratio_X × WCR

User-tunable parameters:

- WCR: compression ratio weighting for deciding the best compression method.
- WCS: compression speed weighting for deciding the best compression method.
- δ: small delta limit that defines the acceptance range.

Variable A to be compressed at time step 0:

- Determine the best technique, Tx. Record BestA = Tx and BestPerfA = performance_Tx.
- Compress the data using technique BestA and record latestPerfA = performance_BestA.
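As a worked example of the weighted criterion, the Python sketch below scores three techniques and picks the best one under two weightings. The speed and ratio numbers are hypothetical values chosen for illustration only, not measurements from the presentation, and the function names are assumptions.

```python
def performance(speed_mb_s, ratio, wcs, wcr):
    """Weighted score: compression_speed * WCS + compression_ratio * WCR."""
    return speed_mb_s * wcs + ratio * wcr


def best_technique(measurements, wcs, wcr):
    """Return the technique name with the highest weighted score."""
    return max(measurements,
               key=lambda name: performance(*measurements[name], wcs, wcr))


# Hypothetical (speed in MB/s, compression ratio) pairs, for illustration only.
measurements = {
    "B-LZO": (400.0, 1.8),
    "BW-ZLIB": (90.0, 2.6),
    "BWXOR-BZIP2": (15.0, 3.1),
}

print(best_technique(measurements, wcs=0, wcr=1))  # BWXOR-BZIP2 (ratio only)
print(best_technique(measurements, wcs=1, wcr=0))  # B-LZO (speed only)
```

Because speed in MB/s and compression ratio are on different scales, intermediate weightings need to be chosen with those magnitudes in mind.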

ACOMPS: Adaptive Compression Scheme

The criterion and the user-tunable parameters are the same as at time step 0: performance_X = compression_speed_X × WCS + compression_ratio_X × WCR, with WCR the compression ratio weighting, WCS the compression speed weighting, and δ the small delta limit that defines the acceptance range.

Variable A to be compressed at time step t:

- Check whether BestPerfA − δ < latestPerfA < BestPerfA + δ.
- Yes: compression performance did not change beyond the limit δ, so continue to use the current BestA.
- No: determine the best technique, Tx, again. Record BestA = Tx and BestPerfA = performance_Tx.
- Compress the data using technique BestA and record latestPerfA = performance_BestA.
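The per-time-step decision described on this slide can be sketched in Python as below. This is a hedged illustration, not the ACOMPS code: the class name `AdaptiveSelector` is hypothetical, only `zlib` and `bz2` from the standard library stand in for the nine techniques (LZO and the preprocessing steps are left out), and speed is measured with a simple wall-clock timer.

```python
import bz2
import time
import zlib

# Stand-ins for the candidate techniques (assumption: stdlib codecs only).
CODECS = {"ZLIB": zlib.compress, "BZIP2": bz2.compress}


def measure(codec, data):
    """Compress data and return (output, speed in MB/s, compression ratio)."""
    start = time.perf_counter()
    out = codec(data)
    elapsed = max(time.perf_counter() - start, 1e-9)
    return out, len(data) / elapsed / 1e6, len(data) / len(out)


class AdaptiveSelector:
    """Tracks BestA, BestPerfA and latestPerfA for one variable."""

    def __init__(self, wcs, wcr, delta):
        self.wcs, self.wcr, self.delta = wcs, wcr, delta
        self.best = None          # BestA
        self.best_perf = None     # BestPerfA
        self.latest_perf = None   # latestPerfA
        self.analyses = 0         # how many times all techniques were evaluated

    def _score(self, speed, ratio):
        return speed * self.wcs + ratio * self.wcr

    def _analyze(self, data):
        scores = {}
        for name, codec in CODECS.items():
            _, speed, ratio = measure(codec, data)
            scores[name] = self._score(speed, ratio)
        self.best = max(scores, key=scores.get)
        self.best_perf = scores[self.best]
        self.analyses += 1

    def compress(self, data):
        """Compress one time step, re-analyzing only if performance drifted."""
        within = (self.best is not None and
                  self.best_perf - self.delta < self.latest_perf
                  < self.best_perf + self.delta)
        if not within:
            self._analyze(data)
        out, speed, ratio = measure(CODECS[self.best], data)
        self.latest_perf = self._score(speed, ratio)
        return self.best, out
```

With this structure the full evaluation of all techniques runs only at time step 0 and whenever the previous step's performance left the acceptance range, so the analysis cost is paid one step after the data actually changes.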

WRF-LETKF based climate simulations

[Workflow diagram: n parallel WRF ensembles write netCDF output, which is converted to binary for m parallel LETKF processes. LETKF combines it with the observations and produces a revised state output based on the observed data (binary). That output is converted and merged with the initial conditions to guide the simulation.]

WRF-LETKF based climate simulations

Example for the workflow of n parallel WRF ensembles and m parallel LETKF processes, with conversion between netCDF and binary:

- n = 55, m = 400.
- Maximum grid size: 181 x 151 x 51.
- One cycle is a single WRF cycle plus a single LETKF cycle (9 hours of simulated time).
- Total simulation time (single-cycle WRF + single-cycle LETKF): ~65 minutes on the cluster.
- High conversion cost: (9 x 55) files => 36.7 minutes => ~56% of the total simulation time.
- Large output data size: ~283 GB.
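The figures on this slide can be cross-checked with a few lines of arithmetic. The Python sketch below does so; the variable names are illustrative, and reading "(9 x 55) files" as one file per forecast hour per ensemble member is an assumption.

```python
nx, ny, nz = 181, 151, 51
grid_points = nx * ny * nz              # matches "~1.3 million grid points"

members, hours = 55, 9
files_to_convert = hours * members      # assumption: one file per hour per member

conversion_minutes, total_minutes = 36.7, 65.0
conversion_share = conversion_minutes / total_minutes

print(grid_points, files_to_convert, f"{conversion_share:.1%}")
# 1393881 495 56.5%
```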

WRF-LETKF based climate simulations

[Workflow diagram with ADIOS: n parallel WRF ensembles use the WRF ADIOS I/O plugin and m parallel LETKF processes use the LETKF ADIOS I/O plugin. Data can be in any format supported by ADIOS, so no conversion is required. Compression is provided by the ADIOS + ACOMPS data transformation plugin.]

Experimental Setup

Deepthought2 campus cluster at UMD:

- Number of nodes: 484 with 20 cores/node, plus 4 nodes with 40 cores/node.
- Memory per node: ~128 GB (DDR3 at 1866 MHz).
- Processor: dual Intel Ivy Bridge E5-2680v2 at 2.8 GHz.
- Parallel file system: Lustre.

Workload for the results, climate simulations with WRF-LETKF:

- WRF ensemble: n = 55, each member uses 1 node; number of MPI processes = 55 x 20 = 1100.
- Domain size: 181 x 151 grid cells, with 51 vertical levels.
- LETKF uses 20 nodes; number of MPI processes = 20 x 20 = 400.
- The majority of variables are of float type.
- 3D variables: XLAT, XLONG, F, T.
- 4D variables: U, V, W, P, PB, RAINC, ...

Adaptive vs. non-adaptive methods: output sizes

[Figure: bar chart of output sizes in gigabytes for netCDF + Binary, ADIOS, ADIOS + Zlib, ADIOS + Bzip2, ADIOS + LZO, ADIOS + ACOMPS (Only CS), and ADIOS + ACOMPS (Only CR). Values shown on the bars: 283 (original), 191, 80.03, 73, 72.6, 67.5, 62.8.]

- ACOMPS achieves better compression: a 77% improvement in size over the original, and 13% better than ADIOS + Bzip2.
- Only CR (best compression ratio, slower): WCR = 1, WCS = 0.
- Only CS (best speed, not as good compression): WCR = 0, WCS = 1.

Adaptive vs. non-adaptive methods: compression time

[Figure: stacked bar chart of end-to-end times in minutes (axis from 0 to 70), split into LETKF, conversion, WRF, and WRF preprocessing, for netCDF + Binary, ADIOS, ADIOS + Zlib, ADIOS + Bzip2, ADIOS + LZO, ADIOS + ACOMPS (Only CS), and ADIOS + ACOMPS (Only CR).]

- ACOMPS incurs low overhead: lower overhead than ADIOS + Bzip2 with much better compression, and close to the fastest method, ADIOS + LZO.
- Only CR (best compression ratio, slower): WCR = 1, WCS = 0.
- Only CS (best speed, not as good compression): WCR = 0, WCS = 1.

Future Directions

- Extend ACOMPS to support more compression methods, both lossless and lossy.
- Thoroughly analyze how the best compression method for a given variable changes over time.
  - How often is it advantageous to redo the analysis?
  - How can the criteria for deciding when to re-evaluate be enhanced, in order to adapt to changes quickly?
- Parallelize the analysis phase using threads.

Adaptive Compression to Improve I/O Performance for Climate Simulations
Alan Sussman (als@cs.umd.edu), Swati Singhal (swati@cs.umd.edu)
UMIACS and Department of Computer Science
The 2nd International Workshop on Data Reduction for Big Scientific Data

Related Work

Lossless compression:

- E. R. Schendel, Y. Jin, N. Shah, J. Chen, C. Chang, S.-H. Ku, S. Ethier, S. Klasky, R. Latham, R. Ross, and N. F. Samatova. ISOBAR preconditioner for effective and high-throughput lossless data compression. ICDE, 2012.
- M. Burtscher and P. Ratanaworabhan. FPC: A high-speed compressor for double-precision floating-point data. IEEE Transactions on Computers, 2009.
- M. Burtscher and P. Ratanaworabhan. gFPC: A self-tuning compression algorithm. Data Compression Conference (DCC), 2010.
- I. H. Witten, R. M. Neal, and J. G. Cleary. Arithmetic coding for data compression. Communications of the ACM, 1987.
- S. Bhattacherjee, A. Deshpande, and A. Sussman. PStore: An efficient storage framework for managing scientific data. SSDBM, 2014.
- S. W. Son, Z. Chen, W. Hendrix, A. Agrawal, W.-k. Liao, and A. Choudhary. Data compression for the exascale computing era - survey. Journal of Supercomputing Frontiers and Innovations, 2014.

Lossy compression:

- S. Di and F. Cappello. Fast error-bounded lossy HPC data compression with SZ. IPDPS, 2016.
- W. Austin, G. Ballard, and T. G. Kolda. Parallel tensor compression for large-scale scientific data. IPDPS, 2016.
- M. Burtscher, H. Mukka, A. Yang, and F. Hesaaraki. Real-time synthesis of compression algorithms for scientific data. High Performance Computing, Networking, Storage and Analysis (SC16), 2016.