Gradient Boosting and XGBoost in Decision Trees

Dive into the world of Gradient Boosting and XGBoost techniques with a focus on Decision Trees, their applications, optimization, and training methods. Explore the significance of parameter tuning and training with samples to enhance your machine learning skills. Access resources to deepen your understanding and stay updated on the latest advancements in the field.

Presentation Transcript

Gradient Boosting Decision Tree / eXtreme Gradient Boosting. 2021.12.29, Jialin Li

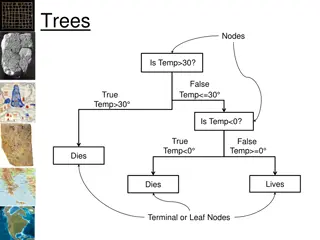

Boosting: $Y = f(x) + L$, where $L$ is the residual function. Samples are strongly related.

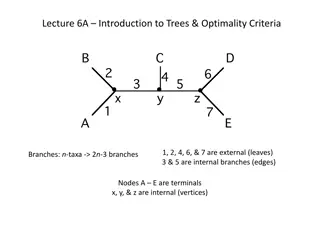

Gradient Boosting Decision Tree: $f_M(x) = \sum_{m=1}^{M} T_m(x)$, where $T_m$ is the $m$-th tree and $M$ is the number of trees.
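
The additive model above can be illustrated with a minimal sketch in pure Python. It makes several assumptions that are not on the slide: squared-error loss (so each tree is fitted to the current residuals), one-dimensional inputs, depth-1 stumps standing in for full decision trees, a constant starting prediction, and a learning rate that shrinks each tree's contribution. The names `fit_stump` and `fit_gbdt` are hypothetical.

```python
def fit_stump(xs, residuals):
    """Fit a depth-1 regression tree: one threshold, one mean value per side."""
    best = None
    # Excluding the largest x guarantees the right-hand side is never empty.
    for threshold in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = (sum((r - left_mean) ** 2 for r in left)
               + sum((r - right_mean) ** 2 for r in right))
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    _, threshold, left_mean, right_mean = best
    return lambda x: left_mean if x <= threshold else right_mean


def fit_gbdt(xs, ys, n_trees=50, learning_rate=0.1):
    """Build f_M(x) = base + learning_rate * sum_m T_m(x) for squared-error loss."""
    base = sum(ys) / len(ys)          # constant initial prediction (assumption)
    trees = []
    preds = [base] * len(xs)
    for _ in range(n_trees):
        # For squared error, the negative gradient is simply the residual.
        residuals = [y - p for y, p in zip(ys, preds)]
        tree = fit_stump(xs, residuals)
        trees.append(tree)
        preds = [p + learning_rate * tree(x) for p, x in zip(preds, xs)]

    def predict(x):
        return base + learning_rate * sum(t(x) for t in trees)

    return predict


# Toy data: a step from about 1 to about 3 (made up for illustration).
xs = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0]
ys = [1.0, 1.2, 0.9, 1.1, 3.0, 3.2, 2.9, 3.1]

model = fit_gbdt(xs, ys)
mean_y = sum(ys) / len(ys)
mse_baseline = sum((y - mean_y) ** 2 for y in ys) / len(ys)
mse_model = sum((y - model(x)) ** 2 for x, y in zip(xs, ys)) / len(ys)
print(f"baseline MSE: {mse_baseline:.4f}, boosted MSE: {mse_model:.4f}")
```

Each round fits a new tree to what the current ensemble still gets wrong, which is why the trees must be trained sequentially.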

XGBoost: optimized GBDT 1. L 2. L 3.
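
For reference, the regularized objective described by the XGBoost authors can be written as below. This is general background, not a reconstruction of the slide's list. The symbols $l$ (per-sample loss), $\Omega$ (tree complexity penalty), $J_m$ (number of leaves of tree $T_m$), $w_{mj}$ (leaf weights), and the constants $\gamma$ and $\lambda$ are notation introduced here.

```latex
\mathrm{Obj} = \sum_{i=1}^{n} l\bigl(y_i, f_M(x_i)\bigr) + \sum_{m=1}^{M} \Omega(T_m),
\qquad
\Omega(T_m) = \gamma J_m + \frac{1}{2}\lambda \sum_{j=1}^{J_m} w_{mj}^{2}
```

The first term measures how well the ensemble fits the data; the second penalizes trees with many leaves or large leaf weights.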

Application: import xgboost 1. 2. (train and test) 3. Some examples: https://github.com/dmlc/xgboost

Next steps: 1. Try training with our samples. 2. Learn the meaning of the parameters (important for tuning them).
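
As a starting point for the second item, here is a sketch of commonly tuned XGBoost tree-booster parameters with brief comments. The selection is an assumption about which parameters matter most; the values shown are the library defaults as documented, except `objective`, which is chosen here as an example.

```python
# Commonly tuned XGBoost parameters (values are the documented defaults,
# except "objective", which depends on the task).
params = {
    "objective": "binary:logistic",  # loss to optimize; example choice
    "eta": 0.3,               # learning rate: shrinks each tree's contribution
    "max_depth": 6,           # maximum depth of each tree
    "min_child_weight": 1,    # minimum sum of instance weight (hessian) in a leaf
    "gamma": 0,               # minimum loss reduction required to make a split
    "subsample": 1.0,         # fraction of rows sampled for each tree
    "colsample_bytree": 1.0,  # fraction of columns sampled for each tree
    "lambda": 1,              # L2 regularization on leaf weights
    "alpha": 0,               # L1 regularization on leaf weights
}

# Print the parameters in a readable table.
for name, value in params.items():
    print(f"{name:>18}: {value}")
```

Lowering `eta` usually requires more boosting rounds, while `max_depth`, `min_child_weight`, and `gamma` control how complex each tree can become.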

Backup GBDT

Backup: About XGBoost https://indico.cern.ch/event/382895/contributions/910921/attachments/763480/1047450/XGBoost_tianqi.pdf