Decoupling Learning Rates Using Empirical Bayes: Optimization Strategy

An Empirical Bayes approach to decoupling learning rates for faster model convergence: learning first-order feature weights before second-order ones improves convergence speed and efficiency. The talk examines how observation rates differ between feature orders and the benefit of sequencing weight learning according to data availability. The strategy yields faster convergence and better model performance in Generalized Linear Models.

Presentation Transcript

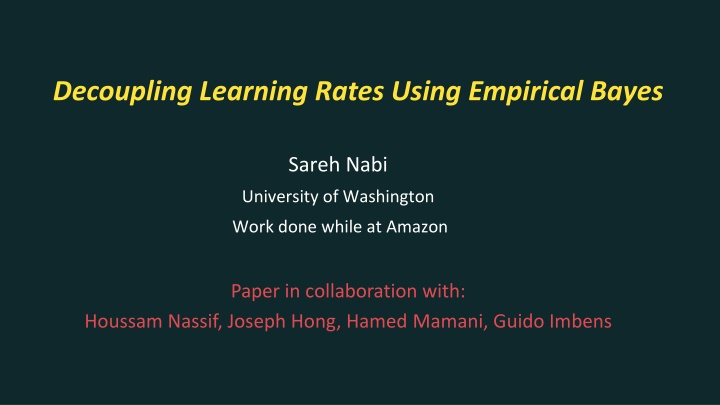

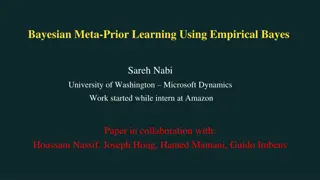

Decoupling Learning Rates Using Empirical Bayes
Sareh Nabi, University of Washington (work done while at Amazon)
Paper in collaboration with: Houssam Nassif, Joseph Hong, Hamed Mamani, Guido Imbens

Biking competition
Stop biking pointlessly, join team Circle! Year-round carousel rides! Unlimited laps! All you can eat Pi! No corners to hide! Hurry, don't linger around! Join now! Check other teams

Multiple-slot template (each element of the ad is a slot)
Stop biking pointlessly, join team Circle! Year-round carousel rides! Unlimited laps! All you can eat Pi! No corners to hide! Hurry, don't linger around! Join now! Check other teams

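Template combinatorics: 48 layouts
Variant counts per slot: ×2, ×3, ×2, ×2, ×2, giving 2 × 3 × 2 × 2 × 2 = 48 layouts.
Example variant text: "Stop biking pointlessly, join team Circle!", "Best way to bicycle!", "We cycle and re-cycle!", "No point in not joining!", "Join now!", "Check other teams".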
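The layout count is the product of the per-slot variant counts. A minimal Python sketch that enumerates every layout; the slot names are hypothetical, and only the counts 2, 3, 2, 2, 2 come from the slide.

```python
from itertools import product
from math import prod

# Hypothetical slot names; only the variant counts come from the slide.
VARIANTS_PER_SLOT = {"title": 2, "image": 3, "text": 2, "button": 2, "link": 2}

# A layout picks one variant index per slot.
layouts = list(product(*(range(n) for n in VARIANTS_PER_SLOT.values())))

n_layouts = len(layouts)
assert n_layouts == prod(VARIANTS_PER_SLOT.values())
print(n_layouts)  # 48
```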

Second-order features
Stop biking pointlessly, join team Circle! Year-round carousel rides! Unlimited laps! All you can eat Pi! No corners to hide! Hurry, don't linger around! Join now! Check other teams

Featurization
First-order features, $X_1$: variant values, e.g. image1, title2, color1.
Second-order features, $X_2$: variant combinations/interactions, e.g. image1 AND title2, image1 AND color1.
Concatenate for the final feature vector representation: $X = [X_1, X_2]$.
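A minimal sketch of this featurization, assuming a layout is given as a mapping from slot name to chosen variant index and that features are represented by the names of the active indicators (a sparse stand-in for the binary vector $X$). The function name `featurize` and the naming scheme are hypothetical.

```python
from itertools import combinations
from typing import Dict, List


def featurize(layout: Dict[str, int]) -> List[str]:
    """Names of the active (value 1) binary features for one layout.

    layout maps a slot name to the chosen variant index, e.g. {"image": 1}.
    """
    # First-order features X1: one indicator per chosen slot value, e.g. "image1".
    x1 = [f"{slot}{variant}" for slot, variant in sorted(layout.items())]
    # Second-order features X2: one indicator per pair of chosen values.
    x2 = [f"{a} AND {b}" for a, b in combinations(x1, 2)]
    # Final representation X = [X1, X2]: concatenation.
    return x1 + x2


print(featurize({"image": 1, "title": 2, "color": 1}))
```

For {"image": 1, "title": 2, "color": 1} this yields the 1st-order features color1, image1, title2 and the 2nd-order features color1 AND image1, color1 AND title2, image1 AND title2.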

Decoupling learning rates
Let $d$ be the number of variants per slot.
Observation rate of 1st-order features: $1/d$. Converges fast.
Observation rate of 2nd-order features: $1/d^2$. Converges slowly.
We observe that 1st-order features have a bigger effect than 2nd-order features, and that the high uncertainty of 2nd-order features adversely affects convergence.
Can we converge faster by learning 1st-order weights first, then 2nd-order weights later as more data becomes available?
Approach: Empirical Bayes.
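The $1/d$ versus $1/d^2$ rates follow from counting layouts. The sketch below assumes layouts are drawn uniformly at random and every slot has $d$ variants; the helper `observation_rates` is hypothetical.

```python
from fractions import Fraction
from itertools import product


def observation_rates(d: int, n_slots: int = 2):
    """Exact share of uniformly random layouts in which a given 1st-order
    feature (slot 0 = variant 0) and a given 2nd-order feature
    (slot 0 = variant 0 AND slot 1 = variant 0) are active."""
    layouts = list(product(range(d), repeat=n_slots))
    first = sum(1 for lay in layouts if lay[0] == 0)
    second = sum(1 for lay in layouts if lay[0] == 0 and lay[1] == 0)
    return Fraction(first, len(layouts)), Fraction(second, len(layouts))


r1, r2 = observation_rates(d=3)
print(r1, r2)  # 1/3 1/9
```

With $d = 3$, each 2nd-order weight is updated on a third as many impressions as each 1st-order weight, which is why its estimate stays uncertain for longer.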

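Generalized Linear Models (GLM)
Assign one weight $w_i$ per feature $x_i$. Let $r$ be the reward (e.g. a click) and $g$ the link function:
$$\mathbb{E}[r \mid X] = g^{-1}(X^\top W), \qquad X^\top W = \underbrace{w_0}_{\text{intercept}} + \underbrace{\sum_{i \in X_1} w_i x_i}_{\text{1st-order effect}} + \underbrace{\sum_{j \in X_2} w_j x_j}_{\text{2nd-order effect}}$$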
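A sketch of the GLM score and prediction. It assumes a probit link (the experiments later use a probit model), so $g^{-1}$ is the standard normal CDF; any other monotone inverse link, such as the logistic function, can be passed in instead. The function names are illustrative.

```python
import math
from typing import Callable, Sequence


def probit_inverse_link(z: float) -> float:
    """g^{-1} for the probit link: the standard normal CDF Phi(z)."""
    return 0.5 * math.erfc(-z / math.sqrt(2.0))


def expected_reward(
    w0: float,
    w1: Sequence[float], x1: Sequence[float],
    w2: Sequence[float], x2: Sequence[float],
    inv_link: Callable[[float], float] = probit_inverse_link,
) -> float:
    """E[r | X] = g^{-1}(X^T W), where X^T W = intercept + 1st-order + 2nd-order effect."""
    first_order = sum(w * x for w, x in zip(w1, x1))
    second_order = sum(w * x for w, x in zip(w2, x2))
    return inv_link(w0 + first_order + second_order)


# Hypothetical weights: the 2nd-order term cancels the active 1st-order term.
print(expected_reward(0.0, [0.3, -0.1], [1, 0], [-0.3], [1]))
```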

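Bayesian GLM
At time $t$, each weight $w_i$ is represented by a distribution: $w_{i,t} \sim \mathcal{N}(\mu_{i,t}, \sigma_{i,t}^2)$.
With $X = [X_1, X_2]$ and $W = [W_1, W_2]$: $X^\top W = X_1^\top W_1 + X_2^\top W_2$. Only active one-hot features contribute, e.g. $w_{\text{image}_1}\cdot 1 + w_{\text{image}_2}\cdot 0 + w_{\text{image}_3}\cdot 0 + w_{\text{title}_1}\cdot 1 + \dots$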

Bayesian GLM weight updates
Prior $w_{i,t}$ + data = posterior $w_{i,t+1}$.
Starting prior: non-informative standard normal, $w_{i,0} \sim \mathcal{N}(0, 1)$.
Bayesian GLM bandits (Thompson Sampling): sample $W$, then choose $\arg\max_X \mathbb{E}[r \mid X, W]$.
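One Thompson Sampling step can be sketched as follows, assuming independent Gaussian weights stored by feature name and binary (one-hot) features. Because $g^{-1}$ is monotone increasing, maximizing $X^\top W$ over layouts is the same as maximizing $\mathbb{E}[r \mid X, W]$, and the intercept can be dropped since all layouts share it. `thompson_select` and the toy `features_of` are hypothetical.

```python
import random
from itertools import product


def thompson_select(layouts, features_of, means, variances, rng):
    """Sample W once from independent Gaussians, then return argmax_X of X^T W.

    means/variances map feature name -> posterior mean/variance; features never
    updated fall back to the N(0, 1) starting prior.
    """
    all_features = sorted({f for layout in layouts for f in features_of(layout)})
    w = {
        f: rng.gauss(means.get(f, 0.0), variances.get(f, 1.0) ** 0.5)
        for f in all_features
    }
    # Binary features: X^T W is the sum of the sampled weights of active features.
    return max(layouts, key=lambda layout: sum(w[f] for f in features_of(layout)))


def features_of(layout):
    """Toy featurization for two slots: 1st-order values plus their interaction."""
    a, b = layout
    return [f"s0={a}", f"s1={b}", f"s0={a} AND s1={b}"]


layouts = list(product(range(2), repeat=2))
choice = thompson_select(layouts, features_of, means={}, variances={}, rng=random.Random(0))
print(choice)
```

With empty posteriors every weight is drawn from the $\mathcal{N}(0, 1)$ prior, so the choice is random; as posterior variances shrink, the selection concentrates on the layout with the highest posterior mean score.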

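Impose Bayesian hierarchy
Let $\mu_i$ be the true mean of weight $w_i$, and let $\hat\mu_{i,t}$, $\hat\sigma_{i,t}^2$ be the GLM-estimated mean and variance of weight $w_i$.
Critical model assumptions:
Group features into $K = 2$ categories $C_k$ (1st order, 2nd order).
Each category $k$ has a hierarchical meta-prior $\mathcal{N}(m_k, \tau_k^2)$.
True mean: $\mu_i \sim \mathcal{N}(m_k, \tau_k^2)$ for $i \in C_k$.
Observed mean: $\hat\mu_{i,t} \mid \mu_i \sim \mathcal{N}(\mu_i, \hat\sigma_{i,t}^2)$.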

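Model assumptions
Diagram of the two-level hierarchy:
Category 1 (1st order): meta-prior $\mathcal{N}(m_1, \tau_1^2)$; true means $\mu_i \sim \mathcal{N}(m_1, \tau_1^2)$ for $i \in C_1$; observed means $\hat\mu_{i,t} \mid \mu_i \sim \mathcal{N}(\mu_i, \hat\sigma_{i,t}^2)$.
Category 2 (2nd order): meta-prior $\mathcal{N}(m_2, \tau_2^2)$; true means $\mu_j \sim \mathcal{N}(m_2, \tau_2^2)$ for $j \in C_2$; observed means $\hat\mu_{j,t} \mid \mu_j \sim \mathcal{N}(\mu_j, \hat\sigma_{j,t}^2)$.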

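Empirical prior estimation
Theoretical variance, based on our assumptions:
$$\operatorname{Var}(\hat\mu_{i,t}) = \frac{\sum_{i \in C_k} \hat\sigma_{i,t}^2}{|C_k|} + \tau_k^2$$
Empirical variance, using the sample-variance formula on the GLM estimates:
$$\widehat{\operatorname{Var}}(\hat\mu_{i,t}) = \frac{\sum_{i \in C_k} (\hat\mu_{i,t} - \hat m_k)^2}{|C_k| - 1}$$
Combining both recovers the empirical prior:
$$\hat\tau_k^2 = \frac{\sum_{i \in C_k} (\hat\mu_{i,t} - \hat m_k)^2}{|C_k| - 1} - \frac{\sum_{i \in C_k} \hat\sigma_{i,t}^2}{|C_k|}, \qquad \hat m_k = \frac{1}{|C_k|} \sum_{i \in C_k} \hat\mu_{i,t}$$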
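The two estimators translate directly into code. A minimal sketch for one category, taking the GLM-estimated means and variances of its features; the function name is hypothetical. The slides do not say how a negative $\hat\tau_k^2$ (possible when estimation noise dominates) is handled, so the sketch returns the raw value.

```python
from typing import Sequence, Tuple


def empirical_prior(est_means: Sequence[float], est_vars: Sequence[float]) -> Tuple[float, float]:
    """Per-category empirical prior (m_hat_k, tau_hat_k^2).

    est_means: GLM-estimated means mu_hat_{i,t} for the features i in C_k
    est_vars:  GLM-estimated variances sigma_hat_{i,t}^2 for the same features
    """
    n = len(est_means)
    if n < 2:
        raise ValueError("need at least two features in the category")
    m_hat = sum(est_means) / n
    # Sample variance of the estimated means (divides by |C_k| - 1).
    between = sum((mu - m_hat) ** 2 for mu in est_means) / (n - 1)
    # Average GLM estimation variance (divides by |C_k|).
    within = sum(est_vars) / n
    return m_hat, between - within


m_hat, tau2 = empirical_prior([1.0, 2.0, 3.0], [0.1, 0.2, 0.3])  # approximately 2.0 and 0.8
```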

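Variance intuition
$$\hat\tau_k^2 = \underbrace{\frac{\sum_{i \in C_k} (\hat\mu_{i,t} - \hat m_k)^2}{|C_k| - 1}}_{\text{between-features variance}} - \underbrace{\frac{\sum_{i \in C_k} \hat\sigma_{i,t}^2}{|C_k|}}_{\text{within-features variance (estimation noise)}}$$
The between-features term measures how far the estimated means spread across the category; the within-features term is the average estimation noise of each feature. Their difference is an unbiased estimate of the true $\tau_k^2$.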
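A quick Monte Carlo check of the unbiasedness claim, under the model assumptions of the hierarchy: draw true means from $\mathcal{N}(m_k, \tau_k^2)$, draw GLM estimates around them with known noise $\hat\sigma_{i,t}^2$, and average $\hat\tau_k^2$ over many simulated categories. All parameter values and function names are hypothetical.

```python
import random


def tau2_hat(est_means, est_vars):
    n = len(est_means)
    m_hat = sum(est_means) / n
    between = sum((mu - m_hat) ** 2 for mu in est_means) / (n - 1)  # between-features variance
    within = sum(est_vars) / n  # within-features variance (estimation noise)
    return between - within


def average_tau2_hat(m_k, tau2_k, sigma2_hats, trials, seed=0):
    """Average tau2_hat over categories simulated from the hierarchical model."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        # mu_i ~ N(m_k, tau_k^2)
        true_means = [rng.gauss(m_k, tau2_k ** 0.5) for _ in sigma2_hats]
        # mu_hat_i | mu_i ~ N(mu_i, sigma_hat_i^2)
        est_means = [rng.gauss(mu, s2 ** 0.5) for mu, s2 in zip(true_means, sigma2_hats)]
        total += tau2_hat(est_means, sigma2_hats)
    return total / trials


sigma2 = [0.02 + 0.04 * (i % 3) for i in range(50)]  # hypothetical, unequal noise levels
print(average_tau2_hat(m_k=0.3, tau2_k=0.25, sigma2_hats=sigma2, trials=2000))  # close to 0.25
```

The check works even with unequal noise levels: the expected sample variance of the estimates is $\tau_k^2$ plus the average $\hat\sigma_{i,t}^2$, so subtracting that average removes the bias.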

Empirical Bayes (EB) algorithm
1. Start the Bayesian GLM with the non-informative prior $\mathcal{N}(0, 1)$.
2. After time $t$, compute $\hat m_k, \hat\tau_k$ for each category.
3. Restart the model with per-category empirical priors $\mathcal{N}(\hat m_k, \hat\tau_k^2)$.
4. Retrain using the data from the elapsed $t$ timesteps.
5. Continue using the GLM as normal.
The algorithm uses the same data twice: to compute the empirical prior and to train the model. It works in both online and batch settings.
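An end-to-end sketch of steps 1 to 4. It substitutes an exact Bayesian linear regression (identity link, Gaussian noise with known variance) for the Bayesian GLM, because its posterior has a closed form; the talk itself uses BLIP. Flooring $\hat\tau_k^2$ at a small positive value is also an assumption of this sketch, and the function names and synthetic data are hypothetical. Step 5 would keep updating from the returned posterior.

```python
import numpy as np


def fit_bayes_linreg(X, y, prior_mean, prior_var, noise_var=1.0):
    """Exact posterior of w for y = X @ w + N(0, noise_var), prior w_i ~ N(prior_mean_i, prior_var_i)."""
    prior_prec = np.diag(1.0 / prior_var)
    post_cov = np.linalg.inv(prior_prec + X.T @ X / noise_var)
    post_mean = post_cov @ (prior_prec @ prior_mean + X.T @ y / noise_var)
    return post_mean, np.diag(post_cov).copy()


def empirical_bayes_fit(X, y, category, noise_var=1.0, min_tau2=1e-6):
    """Steps 1-4 of the EB algorithm; category[i] is the group (e.g. 1 or 2) of feature i."""
    n_features = X.shape[1]
    # Step 1: non-informative N(0, 1) prior on every weight.
    mu_hat, var_hat = fit_bayes_linreg(X, y, np.zeros(n_features), np.ones(n_features), noise_var)
    # Step 2: per-category empirical prior from the fitted means and variances.
    prior_mean = np.zeros(n_features)
    prior_var = np.ones(n_features)
    for k in np.unique(category):
        idx = category == k
        m_k = mu_hat[idx].mean()
        tau2_k = mu_hat[idx].var(ddof=1) - var_hat[idx].mean()  # between - within
        prior_mean[idx] = m_k
        prior_var[idx] = max(tau2_k, min_tau2)  # assumption: floor non-positive estimates
    # Steps 3-4: restart with the empirical priors and retrain on the same data.
    post_mean, post_var = fit_bayes_linreg(X, y, prior_mean, prior_var, noise_var)
    return post_mean, post_var, prior_mean, prior_var


rng = np.random.default_rng(0)
X = rng.integers(0, 2, size=(200, 6)).astype(float)  # hypothetical binary features
category = np.array([1, 1, 1, 2, 2, 2])                # three 1st-order, then three 2nd-order
true_w = np.array([1.0, -0.5, 0.8, 0.05, -0.05, 0.0])
y = X @ true_w + rng.normal(0.0, 1.0, size=200)
post_mean, post_var, prior_mean, prior_var = empirical_bayes_fit(X, y, category)
print(prior_mean, prior_var)
```

Each weight in category $k$ gets prior variance $\hat\tau_k^2$, so a category whose estimated weights cluster tightly receives a narrow prior and is shrunk toward its category mean.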

Experimental setup
Used Bayesian Linear Probit (BLIP) as our Bayesian GLM.
Grouped features into 1st-order and 2nd-order features.
Batch updates.
Simulations on a classification task (UCI income prediction).
Live experiments in an Amazon production system.
$\hat m_k$ had little effect, so we focus on $\hat\tau_k$.
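The slides do not give the BLIP update equations. As an assumption, the sketch below uses an assumed-density-filtering update for a linear probit model with independent Gaussian weights over binary features, $P(y = +1 \mid x) = \Phi(x^\top w / \beta)$, the kind of update used by Bayesian linear probit models; the exact update in the paper may differ. It processes one example at a time, so a batch would loop over its examples. The function names and the noise parameter `beta` are hypothetical.

```python
import math


def _std_normal_pdf(t: float) -> float:
    return math.exp(-0.5 * t * t) / math.sqrt(2.0 * math.pi)


def _std_normal_cdf(t: float) -> float:
    # erfc keeps precision for negative t better than 1 + erf(...)
    return 0.5 * math.erfc(-t / math.sqrt(2.0))


def probit_update(means, variances, active, y, beta=1.0):
    """Update independent Gaussian weights in place after one labeled example.

    active: names of the binary features equal to 1; y: +1 (click) or -1 (no click).
    """
    for f in active:  # unseen features start at the N(0, 1) prior
        means.setdefault(f, 0.0)
        variances.setdefault(f, 1.0)
    total_var = beta ** 2 + sum(variances[f] for f in active)
    total_sd = math.sqrt(total_var)
    t = y * sum(means[f] for f in active) / total_sd
    v = _std_normal_pdf(t) / _std_normal_cdf(t)  # mean correction factor
    w = v * (v + t)                              # variance correction factor, in (0, 1)
    for f in active:
        var = variances[f]
        means[f] += y * (var / total_sd) * v
        variances[f] = var * (1.0 - (var / total_var) * w)


means, variances = {}, {}
probit_update(means, variances, ["image1", "title2", "image1 AND title2"], y=+1)
```

A click moves every active weight's mean toward the positive side and shrinks its variance; a 2nd-order feature, being active less often, receives fewer such updates.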

When to compute empirical prior
Figure: Effect of Prior Reset Time (log loss vs. day; curves: BLIP, BLIPBayes 1d, BLIPBayes 3d).
Longer reset time helps more. The empirical prior helps, even with one batch.

Lasting effect on small batches
Figure: Effect of Small Batch Dataset (log loss vs. day; curves: BLIP, BLIPBayes, BLIPTwice).

Empirical $\tau$ outperforms random $\tau$
Figure: Effect of Prior Variance (log loss vs. day; curves: BLIP, Optimal, $\tau^2 = 0.01$, $\tau^2 = 0.1$, $\tau^2 = 5.0$).

Discussion
The hierarchical prior clamps each category's weights together.
The effect of data on the posterior increases with $\tau$.
We always observed $\tau_1 > \tau_2$.
The model therefore puts more emphasis on 1st-order features and shrinks the 2nd-order effect, decoupling their learning rates.
The model effectively learns 1st-order weights first, then 2nd-order weights.
Result: increased stability and convergence speed.
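The shrinkage argument can be illustrated with the one-observation Gaussian update: given an estimate $\hat\mu$ with noise $\hat\sigma^2$ and prior $\mathcal{N}(m, \tau^2)$, the share of the update taken from the data is $\tau^2 / (\tau^2 + \hat\sigma^2)$, which grows with $\tau$. A sketch with hypothetical values chosen so that $\tau_1 > \tau_2$, as observed in the experiments:

```python
def posterior_mean(prior_mean, prior_var, observed_mean, noise_var):
    """Posterior mean of a Gaussian weight with prior N(prior_mean, prior_var)
    after one Gaussian observation with variance noise_var."""
    gain = prior_var / (prior_var + noise_var)  # fraction of the data's pull that is kept
    return prior_mean + gain * (observed_mean - prior_mean)


# Hypothetical: the same evidence (estimate 1.0, noise 0.1), different category spreads.
first_order = posterior_mean(0.0, prior_var=0.5, observed_mean=1.0, noise_var=0.1)
second_order = posterior_mean(0.0, prior_var=0.01, observed_mean=1.0, noise_var=0.1)
print(round(first_order, 3), round(second_order, 3))  # 0.833 0.091
```

The 1st-order weight moves most of the way to the data, while the 2nd-order weight stays near its category mean until much more evidence accumulates.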

Takeaways
The Empirical Bayes prior helps, mostly on low/medium traffic. This is promising, as low-traffic cases are the hardest to optimize.
It effectively decouples learning rates, so the model converges faster.
It works for any feature grouping.
It applies to contextual and personalization use cases.

Thank you! Questions? Comments?