Generalization of Empirical Risk Minimization in Stochastic Convex Optimization by Vitaly Feldman

This study delves into the generalization of Empirical Risk Minimization (ERM) in stochastic convex optimization, focusing on minimizing true objective functions while considering generalization errors. It explores the application of ERM in machine learning and statistics, particularly in supervised learning scenarios, and discusses the significance of optimization algorithms in determining generalization errors. The work addresses Lipschitz-bounded Stochastic Convex Optimization (SCO) and offers insights into error bounds and potential improvements in optimization algorithms.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

Generalization of ERM in stochastic convex optimization: Vitaly Feldman 2016

Dataset: ? = ?1,?1, , ??,?? ? ERM Learning problem ? Stochastic convex optimization This work: Generalization error: Err? ? Err? ? = ? Err? ? Err? ? ? ? 2

Stochastic convex optimization Convex body ? ? Class ? of convex functions over ? Given ?1, ,?? sampled i.i.d. from unknown ? over ? Minimize true (expected) objective: ??(?) ?? ?[?(?)]over ?: Find ? s.t. ?? ? min ? ? ??? + ? with high prob. ?1 ?2 ?? ?? ? 3

Applications Machine learning and statistics Supervised learning: Dataset: ?1,?1, , ??,?? ? Hypotheses: ? = ? ? ?} Goal: minimize true loss ??,? ?? ?? ,? over ? ??? = ? ???,?? ??? = ??,? ?? ?? ,? is the true loss of ? unsupervised learning, density estimation etc. 4

ERM (empirical risk minimization) Given ? = ?1, ,?? 1 ? ? ???(?) Minimize empirical objective: ??(?) ?1 ?2 ?? ?? ? ? Often known to be optimal (e.g. VC theory) ?? ? ??( ?) ?? ? min ? ? ??? generalization error 5

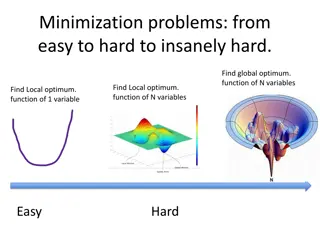

The gap 2 Lipschitz bounded SCO ? = ?2 ? = ? convex ?,? ?, ?1 ? ?2 1} ? ? ? ? ? ?2 ERM error upper bounds: ?/? Special cases: Linear models: Strongly convex: 1/? [Shalev-Shwartz,Shamir,Srebro,Sridharan 09] 1/?[Kakade,Sridharan,Tewari 08] ERMmight have generalization error: log ? ? [Shalev-Shwartz,Shamir,Srebro,Sridharan 09] 6

New result Can the bounds be improved? Thm: ERM might have generalization error: ? ? Can be solved with error: stochastic gradient descent [Robbins,Monro 51; Polyak 90] strongly-convex regularization [Shalev-Shwartz,Shamir,Srebro,Sridharan 09] 1/? Generalization error depends on optimization algorithm! 7

Construction ?1 [0,1] convex and 1-Lipschitz ?:?2 ?? for ? ? ? ?,??? =1 ? ? ?,??? = 0 ? is the set of directions ? ?,? ? =1 ? 2?/6 8 8 8

Distribution ?: ?? where ? is a random subset of ? ? 2 ?? Given: ? = ??1, ,??? If exists ? ?, such that ? ?1 ?? Then ??? = 0 = min ? ???? but ??? = 1/16 What is the probability that ?1 ?? ? ? If ? log ? ?/6 then this prob. 1/2 9

Extensions Same lower bound for ? Lipschitz bounded SCO (? 1) ? = ?? ?? 1} ? = ? convex ?,? ?, ? ? ? ? ?1 ? ? ?? Can be made efficient: ? supported over ? functions computable in ?(?) time 1 regularization does not help 10

Summary Solutions to ERM might have poor generalization Optimization algorithm matters Which optimization algorithms generalize well? o Does gradient descent on ?? generalize? Need new analysis techniques 11