Computational Entropy and Cryptography Innovations

Explore the groundbreaking work on computational entropy and complexity-based cryptography by leading researchers from Harvard University, Microsoft Research SVC, Tel Aviv University, ETH Zurich, and more. Discover how these concepts are revolutionizing digital security protocols and applications, such as digital signatures, zero-knowledge proofs, and private-key encryption, based on the principles of one-way functions and cryptographic primitives.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

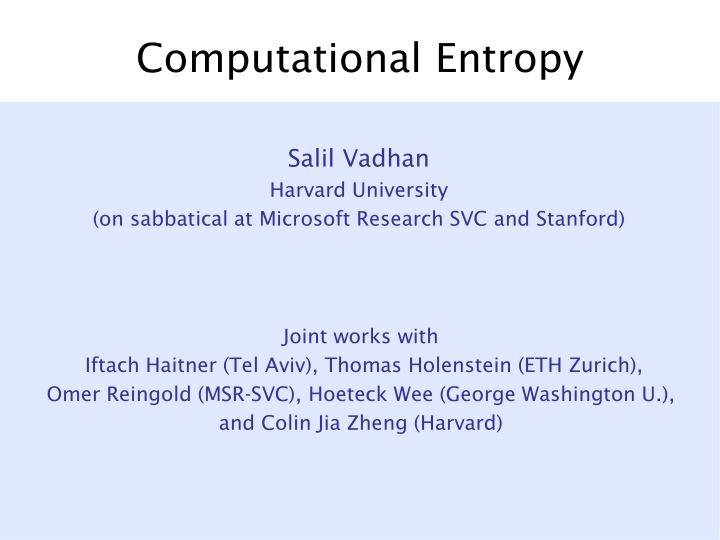

Computational Entropy Salil Vadhan Harvard University (on sabbatical at Microsoft Research SVC and Stanford) Joint works with Iftach Haitner (Tel Aviv), Thomas Holenstein (ETH Zurich), Omer Reingold (MSR-SVC), Hoeteck Wee (George Washington U.), and Colin Jia Zheng (Harvard)

Complexity-Based Cryptography Shannon `49: Information-theoretic security is infeasible. |Key| |All Encrypted Data| On a standard, insecure communication channel. Diffie & Hellman `76: Complexity-based cryptography Assume adversary has limited computational resources Base cryptography on hard computational problems Enables public-key crypto, digital signatures,

One-Way Functions [DH76] easy x f(x) hard Candidate: f(x,y) = x y Formally, a OWF is f : {0,1}n! {0,1}n s.t. f poly-time computable 8 poly-time A Pr[A(f(X))2 f-1(f(X))] = 1/n!(1) for X {0,1}n

OWFs & Cryptography secure protocols & applications digital signatures zero-knowledge proofs private-key encryption statistical ZK arguments MACs [GMW86] statistically binding commitments pseudorandom functions [NY89] [BCC86] [GGM86] [N89] pseudorandom generators statistically hiding commitments target-collision-resistant hash functions (UOWHFs) [R90] [HILL90] [HNORV07] one-way functions

OWFs & Cryptography secure protocols & applications digital signatures zero-knowledge proofs private-key encryption statistical ZK arguments MACs [GMW86] statistically binding commitments pseudorandom functions [NY89] [BCC86] [GGM86] [N89] pseudorandom generators statistically hiding commitments target-collision-resistant hash functions (UOWHFs) [R90] [HILL90] [HNORV07] one-way functions

Computational Entropy [Y82,HILL90,BSW03] Question: How can we use the raw hardness of a OWF to build useful crypto primitives? Answer (today s talk): Every crypto primitive amounts to some form of computational entropy . One-way functions already have a little bit of computational entropy .

Entropy Def: The Shannon entropy of r.v. X is H(X) = Ex X[log(1/Pr[X=x)] H(X) = Bits of randomness in X (on avg) 0 H(X) log|Supp(X)| X uniform on Supp(X) X concentrated on single point Conditional Entropy: H(X|Y) = Ey Y[H(X|Y=y)]

Worst-Case Entropy Measures Min-Entropy: H1(X) = minx log(1/Pr[X=x]) Max-Entropy: H0(X) = log |Supp(X)| H1(X) H(X) H0(X)

Computational Entropy A poly-time algorithm may perceive the entropy of X to be very different from H(X). Example:a pseudorandom generator [BM82,Y82] G: {0,1}m!{0,1}n G(Um) computationally indistinguishable from Un But H(G(Um)) m. Def [GM82]: X c Y iff 8 poly-time T Pr[T(X)=1] Pr[T(Y)=1] e.g. G(N,x) = (lsb(x),lsb(x2 mod N), lsb(x4mod N), ) for N=pq, x2 ZN* is a PRG if factoring is hard [BBS82,ACGS82].

Pseudoentropy Def [HILL90]: X has pseudoentropy k iff there exists a random variable Y s.t. 1. Y c X 2. H(Y) k Interesting when k > H(X), i.e. Pseudoentropy > Real Entropy

OWFs & Cryptography secure protocols & applications digital signatures zero-knowledge proofs private-key encryption statistical ZK arguments MACs [GMW86] statistically binding commitments pseudorandom functions [NY89] [BCC86] [GGM86] [N89] pseudorandom generators statistically hiding commitments target-collision-resistant hash functions (UOWHFs) [R90] [HILL90] pseudoentropy [HNORV07] one-way functions

Application of Pseudoentropy Thm [HILL90]: 9 OWF )9 PRG Proof idea: OWF to discuss X with pseudoentropy H(X)+1/poly(n) repetitions X with pseudo-min-entropy H0(X)+poly(n) hashing PRG

Pseudoentropy from OWF: Intuition Computational Setting: For 1-1 OWF f, X {0,1}n: Information Theory: For jointly distributed (X,Y): H(X|f(X))=0, but 8 function A Pr[A(Y)=X] p 8 poly-time A Pr[A(f(X))=X] 1/n!(1) def [DORS04] def [HLR07] X has unpredictability entropy !(log n) given f(X) X has average min-entropy log(1/p) given Y ? X has pseudoentropy !(log n) given f(X) H(X|Y) log(1/p)

Pseudoentropy from OWF: Intuition Computational Setting: For 1-1 OWF f, X {0,1}n: Challenges: How to convert unpredictability into pseudoentropy? H(X|f(X))=0, but 8 poly-time A Pr[A(f(X))=X] 1/n!(1) When f not 1-1, unpredictability can be trivial. def [HLR07] X has unpredictability entropy !(log n) given f(X) FALSE! T(x,y) = [[f(x)=?y]] distinguishes (X,f(X)) from every (Z,f(X)) with H(Z|f(X))>0 X has pseudoentropy !(log n) given f(X)

Pseudoentropy from OWF Thm [HILL90]: W=(f(X),H,H(X)1, H(X)J) has pseudoentropy H(W)+!(log n)/n H : {0,1}n! {0,1}n a certain kind of hash func. X {0,1}n, J {1, ,n}. Thm [HRV10,VZ11]: (f(X),X1, ,Xn) has next-bit pseudoentropy n+!(log n). No hashing! Total amount of pseudoentropy known & > n. Get full !(log n) bits of pseudoentropy.

Next-bit Pseudoentropy Thm [HRV10,VZ11]: (f(X),X1, ,Xn) has next-bit pseudoentropy n+!(log n). Note: (f(X),X) easily distinguishable from every random variable of entropy > n. Next-bit pseudoentropy: 9 (Y1, ,Yn) s.t. (f(X),X1, ,Xi) c (f(X),X1, ,Xi-1,Yi) H(f(X))+ i H(Yi|f(X),X1, ,Xi-1) = n+!(log n).

Consequences Simpler and more efficient construction of pseudorandom generators from one-way functions. [HILL90,H06]: OWF f of input length n ) PRG G of seed length O(n8). [HRV10,VZ11]: OWF f of input length n ) PRG G of seed length O~(n3).

Pseudoentropy , Unpredictability wrt KL Divergence Thm [VZ11]: Let (Y,Z) 2 {0,1}n {0,1}O(log n). The pseudoentropy of Z given Y is H(Z|Y)+ m There is no probabilistic poly-time A s.t. D((Y,Z)||(Y,A(Y)) . [D = Kullback-Liebler Divergence] Special case: H(Z|Y)=0 Z has pseudoentropy iff hard to predict Z with divergence Can t take Z=f-1(Y) for 1-1 OWF f since |f-1(Y)|=n.

Pseudoentropy , Unpredictability wrt KL Divergence Thm [VZ11]: Let (Y,Z) 2 {0,1}n {0,1}O(log n). The pseudoentropy of Z given Y is H(Z|Y)+ m There is no probabilistic poly-time A s.t. D((Y,Z)||(Y,A(Y)) . [D = Kullback-Liebler Divergence] Analogue of Impagliazzo s Hardcore Thm [I95,N95,H05,BHK09] for Shannon entropy rather than min-entropy.

Next-Bit Pseudoentropy from OWF: Proof Sketch f a one-way function Given f(X), hard to achieve divergence O(log n) from X Given (f(X),X1, ,XJ), hard to achieve divergence O(log n)/n from XJ+1 thm Given (f(X),X1, ,XJ), XJ+1 has pseudoentropy entropy+!(log n)/n (f(X),X1, ,Xn) has next-bit pseudoentropy n+!(log n)

OWFs & Cryptography secure protocols & applications digital signatures zero-knowledge proofs private-key encryption statistical ZK arguments MACs [GMW86] statistically binding commitments pseudorandom functions [NY89] [BCC86] [GGM86] [N89] pseudorandom generators statistically hiding commitments target-collision-resistant hash functions (UOWHFs) next-bit pseudoentropy [HRV10, VZ11] [R90] [HNORV07] one-way functions

Application of Next-Bit Pseudoentropy Thm [HILL90]: 9 OWF )9 PRG Proof outline [HRV10]: OWF done Z=(f(Un),Un) with next-bit pseudoentropy n+!(log n) repetitions Z with next-block pseudo-min-entropy |seed|+poly(n) hashing PRG

OWFs & Cryptography secure protocols & applications digital signatures zero-knowledge proofs private-key encryption statistical ZK arguments MACs [GMW86] statistically binding commitments pseudorandom functions [NY89] [BCC86] [GGM86] [N89] pseudorandom generators statistically hiding commitments target-collision-resistant hash functions (UOWHFs) next-bit pseudoentropy [HRV10, VZ11] [R90] [HNORV07] one-way functions

OWFs & Cryptography secure protocols & applications digital signatures zero-knowledge proofs private-key encryption statistical ZK arguments MACs [GMW86] statistically binding commitments pseudorandom functions [NY89] [BCC86] [GGM86] [N89] pseudorandom generators statistically hiding commitments target-collision-resistant hash functions (UOWHFs) next-bit pseudoentropy [HRV10, VZ11] [HRVW09, HHRVW10] inaccessible entropy one-way functions

Inaccessible Entropy [HRVW09,HHRVW10] Example: if h : {0,1}n! {0,1}n-k is collision- resistant and X {0,1}n, then H(X|h(X)) k, but To an efficient algorithm, once it produces h(X), X is determined ) accessible entropy 0. Accessible entropy Real Entropy! Thm [HRVW09]: f a OWF ) (f(X)1, ,f(X)n,X) has accessible entropy n-!(log n). Cf. (f(X),X1, ,Xn) has pseudoentropy n+!(log n).

Conclusion Complexity-based cryptography is possible because of gaps between real & computational entropy. Secrecy pseudoentropy > real entropy Unforgeability accessible entropy < real entropy

Research Directions Formally unify inaccessible entropy and pseudoentropy. OWF f : {0,1}n! {0,1}n) Pseudorandom generators of seed length O(n)? More applications of inaccessible entropy in crypto or complexity (or mathematics?)