Multiple Sequence Alignment with Hidden Markov Models

Multiple Sequence Alignment (MSA) is essential for biological analyses such as phylogeny estimation and selection quantification. Profile Hidden Markov Models (HMMs) play a crucial role in producing accurate alignments. The process involves aligning unaligned sequences to create alignments with…

0 views • 29 slides

Markov Chains and Their Applications in Networks

Andrey Markov and his contributions to the development of Markov chains are explored, highlighting the principles, algorithms, and rules associated with these probabilistic models. The concept of a Markov chain, in which transitions between states depend only on the current state, is explained using…

20 views • 21 slides
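
As a rough illustration of the Markov property described above, here is a minimal Python sketch that simulates a chain from a transition table. The two-state weather chain, its probabilities, and the names `TRANSITIONS`, `step`, and `simulate` are hypothetical and not taken from the slides.

```python
import random

# Hypothetical two-state weather chain; each row of probabilities sums to 1.
TRANSITIONS = {
    "sunny": {"sunny": 0.8, "rainy": 0.2},
    "rainy": {"sunny": 0.4, "rainy": 0.6},
}

def step(state, rng):
    """Draw the next state using only the current state (the Markov property)."""
    r = rng.random()
    cumulative = 0.0
    for nxt, p in TRANSITIONS[state].items():
        cumulative += p
        if r < cumulative:
            return nxt
    return nxt  # guard against floating-point round-off in the row sum

def simulate(start, n_steps, seed=0):
    """Return a path of n_steps transitions starting from `start`."""
    rng = random.Random(seed)
    path = [start]
    for _ in range(n_steps):
        path.append(step(path[-1], rng))
    return path

path = simulate("sunny", 10_000)
print(path.count("sunny") / len(path))  # long-run fraction of sunny days
```

With these made-up numbers the stationary distribution puts 2/3 of its mass on "sunny", so the printed fraction should land close to 0.667.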

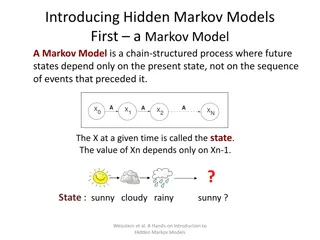

Introduction to Markov Models and Hidden Markov Models

A Markov model is a chain-structured process in which future states depend only on the present state. A Hidden Markov Model is a Markov chain whose state is only partially observable. Explore state transition and emission probabilities in scenarios such as weather forecasting and genetic sequence…

2 views • 12 slides
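
To make the transition and emission probabilities concrete, the sketch below computes the likelihood of an observation sequence with the forward algorithm. It assumes a hypothetical two-state weather HMM with invented probabilities; the dictionaries and the `forward` function are illustrative only.

```python
# Hypothetical weather HMM: hidden weather states emit observable activities.
STATES = ("rainy", "sunny")
START = {"rainy": 0.6, "sunny": 0.4}
TRANS = {
    "rainy": {"rainy": 0.7, "sunny": 0.3},
    "sunny": {"rainy": 0.4, "sunny": 0.6},
}
EMIT = {
    "rainy": {"walk": 0.1, "shop": 0.4, "clean": 0.5},
    "sunny": {"walk": 0.6, "shop": 0.3, "clean": 0.1},
}

def forward(observations):
    """Return P(observations), summing over all hidden state paths."""
    # alpha[s] = P(observations so far, current hidden state = s)
    alpha = {s: START[s] * EMIT[s][observations[0]] for s in STATES}
    for obs in observations[1:]:
        alpha = {
            s: sum(alpha[prev] * TRANS[prev][s] for prev in STATES) * EMIT[s][obs]
            for s in STATES
        }
    return sum(alpha.values())

print(forward(["walk", "shop", "clean"]))
```

Summing over predecessors at each step keeps the cost linear in the sequence length instead of enumerating every hidden path; for these numbers the result is about 0.033612.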

Infinite Horizon Markov Decision Processes

For Markov Decision Processes (MDPs), tackling infinite-horizon problems involves defining value functions, introducing discount factors, and establishing the existence of optimal policies. Computational challenges such as policy evaluation and optimization are addressed through algorithms…

2 views • 39 slides
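
The role of the discount factor in infinite-horizon problems can be seen in a small value-iteration sketch. The two-state, two-action MDP, its rewards, and the names `P`, `GAMMA`, `q_value`, and `value_iteration` are hypothetical; this is one standard algorithm, not necessarily the one used in the slides.

```python
# Hypothetical MDP: P[s][a] is a list of (probability, next_state, reward) triples.
P = {
    0: {"stay": [(1.0, 0, 0.0)], "go": [(0.8, 1, 5.0), (0.2, 0, 0.0)]},
    1: {"stay": [(1.0, 1, 3.0)], "go": [(1.0, 0, 0.0)]},
}
GAMMA = 0.9  # discount factor < 1 keeps infinite-horizon values finite

def q_value(V, s, a):
    """Expected immediate reward plus discounted value of the next state."""
    return sum(p * (r + GAMMA * V[s2]) for p, s2, r in P[s][a])

def value_iteration(tol=1e-10):
    """Apply the Bellman optimality update until values stop changing."""
    V = {s: 0.0 for s in P}
    while True:
        new_V = {s: max(q_value(V, s, a) for a in P[s]) for s in P}
        converged = max(abs(new_V[s] - V[s]) for s in P) < tol
        V = new_V
        if converged:
            break
    # Greedy policy with respect to the converged values.
    policy = {s: max(P[s], key=lambda a: q_value(V, s, a)) for s in P}
    return V, policy

V, policy = value_iteration()
print(V, policy)
```

Each sweep is a contraction with factor γ = 0.9, so the loop converges; for this toy model the optimal policy is `go` in state 0 and `stay` in state 1, with V(1) = 3 / (1 - 0.9) = 30.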

Markov Decision Processes in Machine Learning

Markov Decision Processes (MDPs) model taking actions that influence the state of the world, with the goal of finding optimal policies. Components include states, actions, transition models, reward functions, and policies. Solving an MDP directly requires knowing the transition model and reward function, while reinforcement…

0 views • 26 slides

Introduction to Markov Decision Processes and Optimal Policies

Explore Markov Decision Processes (MDPs) and optimal policies in machine learning. Review the concepts of states, actions, transition functions, rewards, and policies. Learn about the significance of the Markov property in MDPs, Andrey Markov's contribution, and how to find optimal policies…

1 view • 59 slides

MCMC Algorithms and Gibbs Sampling in Markov Chain Monte Carlo Simulations

Markov Chain Monte Carlo (MCMC) algorithms generate sequences of states whose long-run distribution approximates a target distribution. One popular MCMC method, Gibbs sampling, is particularly useful for Bayesian networks: it samples each variable in turn from its conditional distribution given the current values of the others. This process…

1 view • 7 slides
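
The following minimal sketch shows the core Gibbs step on a case where the full conditionals are known in closed form: a standard bivariate normal with correlation `rho`. The target distribution, the burn-in length, and the names `gibbs_bivariate_normal` and `correlation` are assumptions for illustration; in a Bayesian network each node would instead be resampled from its conditional given its Markov blanket.

```python
import random

def gibbs_bivariate_normal(rho, n_samples, burn_in=1000, seed=0):
    """Gibbs sampler for a standard bivariate normal with correlation rho.

    Each full conditional is normal:
    x | y ~ N(rho * y, 1 - rho**2) and y | x ~ N(rho * x, 1 - rho**2).
    """
    rng = random.Random(seed)
    sd = (1 - rho ** 2) ** 0.5
    x, y = 0.0, 0.0
    samples = []
    for i in range(burn_in + n_samples):
        x = rng.gauss(rho * y, sd)  # resample x given the current y
        y = rng.gauss(rho * x, sd)  # resample y given the new x
        if i >= burn_in:            # discard early, unconverged draws
            samples.append((x, y))
    return samples

def correlation(a, b):
    """Pearson correlation of two equal-length lists."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b)) / n
    va = sum((x - ma) ** 2 for x in a) / n
    vb = sum((y - mb) ** 2 for y in b) / n
    return cov / (va * vb) ** 0.5

samples = gibbs_bivariate_normal(rho=0.8, n_samples=20_000)
xs = [x for x, _ in samples]
ys = [y for _, y in samples]
print(correlation(xs, ys))  # should be near 0.8
```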

Optimal Sustainable Control of Forest Sector with Stochastic Dynamic Programming and Markov Chains

Stochastic dynamic programming with Markov chains is used for optimal control of the forest sector, with a focus on continuous cover forestry. The approach optimizes forest industry production, harvest levels, and logistics solutions according to market conditions. The method involves solving quadratic programming…

2 views • 27 slides

Complex Probability and Markov Stochastic Process

A discussion of complex probability as a tool for solving real-world problems, focusing on the transition probability matrix of discrete Markov chains. The paper introduces a measure more general than conventional probability, leading to the idea of complex probability. Various examples…

1 view • 10 slides

ETSU Fall 2014 Enrollment Projections Analysis

The ETSU Fall 2014 Enrollment Projections Analysis, conducted by Mike Hoff, Director of Institutional Research, used a Markov chain model to estimate enrollment. The goal was to reach an enrollment of 15,500, with the data informing college-level improvement plans. Assumptions included stable recruitment…

1 view • 43 slides

Continuous-Time Markov Chains in Manufacturing Systems

Explore Continuous-Time Markov Chains (CTMCs) in manufacturing systems from the perspective of stochastic processes and performance analysis. Learn the basic definitions, characteristics, and behavior of CTMCs, including homogeneous CTMCs and Poisson arrivals. Gain insight into the memoryless…

0 views • 50 slides

Modeling the Bombardment of Saturn's Rings and Age Estimation Using Cassini UVIS Spectra

Explore how the bombardment of Saturn's rings is modeled and how the rings' age is estimated by fitting Cassini UVIS spectra. Goals include analyzing ring pollution with a Markov-chain process, applying an optical depth correction, using meteoritic mass flux values, and comparing the Markov-model pollution with the UVIS fit to…

0 views • 11 slides

Markov Chains and Applications

Markov chains are models that describe transitions between states in a process in which the future state depends only on the current state. The concept was pioneered by the Russian mathematician Andrey Markov and has applications in fields such as weather forecasting, finance, and biology…

2 views • 17 slides

Markov Decision Processes in Reinforcement Learning

Markov Decision Processes (MDPs) combine states, actions, transition models, reward functions, and policies to find optimal solutions. The concept is central to reinforcement learning, where agents act in an environment to maximize cumulative reward. MDPs aid decision-making processes…

0 views • 25 slides

Markov Chain Random Walks in McCTRWs

Delve into Markov chain random walks and McCTRWs, a method invented by a postdoc in Spain that has been shown to be robust in various scenarios. Discover the premise of random walk models, the IID (independent and identically distributed) assumption and why it matters, and classical problems that can be analyzed using CTRW…

1 view • 48 slides

Biomedical Data and Markov Decision Processes

Explore the relationship between biomedical data and Markov Decision Processes through the analysis of genetic regulation, regulatory motifs, and the application of Hidden Markov Models (HMMs) to complex computational tasks. Learn about the definition of the environment, the Markov property, and Markov Decision…

0 views • 24 slides

State Estimation and Probabilistic Models in Autonomous Cyber-Physical Systems

State estimation in autonomous systems determines the internal state of a plant from sensor measurements. It involves dealing with noisy measurements, applying algorithms such as the Kalman filter, and refreshing knowledge of random variables and statistics. The course covers topics such as…

1 view • 31 slides
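
Since this course summary names the Kalman filter, here is a minimal scalar version for estimating a nearly constant quantity from noisy sensor readings. The random-walk state model, the noise variances, the simulated measurements, and the function name `kalman_1d` are assumptions for this sketch; real cyber-physical systems typically use the multivariate form with state-transition and observation matrices.

```python
import random

def kalman_1d(measurements, process_var, meas_var, x0=0.0, p0=1.0):
    """Scalar Kalman filter for a state assumed to be nearly constant.

    Assumed model: x_k = x_{k-1} + w and z_k = x_k + v,
    with w ~ N(0, process_var) and v ~ N(0, meas_var).
    Returns the estimate after each measurement and the final variance.
    """
    x, p = x0, p0
    estimates = []
    for z in measurements:
        # Predict: the state carries over; uncertainty grows by process noise.
        p = p + process_var
        # Update: blend prediction and measurement using the Kalman gain.
        k = p / (p + meas_var)
        x = x + k * (z - x)
        p = (1 - k) * p
        estimates.append(x)
    return estimates, p

rng = random.Random(1)
true_value = 5.0
zs = [true_value + rng.gauss(0, 2.0) for _ in range(500)]  # noisy sensor readings
est, final_var = kalman_1d(zs, process_var=1e-5, meas_var=4.0)
print(est[-1], final_var)
```

With a very small process variance the filter behaves almost like a running average, so the final estimate should sit near 5.0 and the reported variance should be far below the measurement variance of 4.0.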

Reinforcement Learning for Long-Horizon Tasks and Markov Decision Processes

Delve into reinforcement learning, where tasks are accomplished by generating policies in a Markov Decision Process (MDP) environment. Understand MDPs, transition probabilities, and how to generate optimal policies in both known and unknown environments. Explore algorithms and tools…

0 views • 11 slides

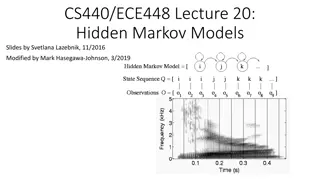

Hidden Markov Models

Delve into Hidden Markov Models (HMMs) in probability theory, with topics ranging from model specification to computational problems such as determining the most likely hidden state sequence. Explore how HMMs are used in scenarios where transition and emission probabilities are known…

0 views • 24 slides

What is a Hidden Markov Model?

Hidden Markov Models (HMMs) are versatile machine learning models used in applications such as genome annotation, speech recognition, and facial recognition. In genome annotation, the model assigns a hidden state type to each nucleotide and uses transition and emission probabilities to infer hidden…

0 views • 12 slides

Hidden Markov Models: Understanding State Transition Probabilities

Hidden Markov Models (HMMs) are powerful tools for modeling systems whose states are not directly observable but can be inferred from observed behavior. With a focus on state transition probabilities, an HMM allows the current state to be estimated from previous observations. This concept is illustrated…

0 views • 17 slides

Digit Recognizer Construction Using Hidden Markov Model Toolkit

Construct a digit recognizer using monophone models and the Hidden Markov Model Toolkit (HTK). Learn about feature extraction, training flowcharts, and initializing model parameters. Use the provided training data, testing data, and scripts to build an efficient recognizer.

2 views • 35 slides

Profile Hidden Markov Models

Hidden Markov Models (HMMs) and Profile Hidden Markov Models (PHMMs) are powerful machine learning techniques used in fields such as speech analysis, music search engines, malware detection, and intrusion detection systems. While HMMs have limitations regarding positional information and memory…

0 views • 49 slides

CSCI 5822 Probabilistic Models of Human and Machine Learning

This course material covers probabilistic models of human and machine learning, including Hidden Markov Models, Room Wandering, Observations, and The Occasionally Corrupt Casino. It explores how observations over time relate to hidden states, covering problem formalization and understanding…

0 views • 28 slides

Introduction to Hidden Markov Models

Hidden Markov Models (HMMs) are a key concept in Natural Language Processing (NLP), where they model sequences of random variables that are not independent; weather reports and stock market numbers are other examples of such sequences. Understanding them involves properties such as limited horizon, time…

0 views • 33 slides

Probabilistic Environments: Time Evolution & Hidden Markov Models

In this lecture, we examine probabilistic environments that evolve over time, focusing on Hidden Markov Models (HMMs). An HMM has unobservable states and observable evidence variables at each time slice, with Markov assumptions governing both state transitions and observations. The lecture covers…

0 views • 28 slides

Speech Recognition and Acoustic Modeling

This presentation covers speech recognition, including Hidden Markov Models, feature extraction, acoustic modeling, and more. Explore the essential elements of processing speech signals, linguistic decoding, constructing language models, and training acoustic models.

0 views • 34 slides

Probabilistic Models for Sequence Data: Foundations of Algorithms and Machine Learning

This material covers Markov models, state transition matrices, maximum likelihood estimation for Markov chains, and Hidden Markov Models. It explains the concepts with examples and visuals, focusing on applications in fields such as NLP, weather forecasting, and stock market analysis…

0 views • 25 slides
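
Maximum likelihood estimation for a Markov chain reduces to normalized transition counts: the estimate of P(j | i) is the number of observed i → j transitions divided by the number of transitions out of i. A minimal sketch, assuming a single observed state sequence; the function name `estimate_transitions` and the toy sequence are hypothetical.

```python
from collections import Counter, defaultdict

def estimate_transitions(sequence):
    """MLE of transition probabilities: count(i -> j) / count(i -> anything)."""
    counts = defaultdict(Counter)
    for current, nxt in zip(sequence, sequence[1:]):
        counts[current][nxt] += 1
    return {
        state: {nxt: c / sum(row.values()) for nxt, c in row.items()}
        for state, row in counts.items()
    }

seq = list("AABABBBA")
print(estimate_transitions(seq))  # A -> {A: 1/3, B: 2/3}, B -> {A: 1/2, B: 1/2}
```

A state that never appears as the source of a transition gets no row here; with sparse data, smoothing (for example, adding pseudo-counts) is a common adjustment.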

Hidden Markov Model and Markov Chain Patterns

In this lecture, the Department of CSE at DIU covers Hidden Markov Models and Markov chains. Exploring Markov chain model notation, probability calculations, CpG islands, and the Forward and Viterbi algorithms, this comprehensive guide equips learners with…

0 views • 24 slides
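
The Viterbi algorithm named above can be sketched for a CpG-island-style problem. This is a toy model under stated assumptions: the two states, all probabilities, and the `viterbi` function are invented for illustration and are not the lecture's parameters.

```python
import math

# Hypothetical two-state model: "island" favours C/G, "background" favours A/T.
STATES = ("island", "background")
START = {"island": 0.5, "background": 0.5}
TRANS = {
    "island": {"island": 0.9, "background": 0.1},
    "background": {"island": 0.1, "background": 0.9},
}
EMIT = {
    "island": {"A": 0.1, "C": 0.4, "G": 0.4, "T": 0.1},
    "background": {"A": 0.4, "C": 0.1, "G": 0.1, "T": 0.4},
}

def viterbi(seq):
    """Most likely hidden state path, computed in log space to avoid underflow."""
    log = math.log
    score = {s: log(START[s]) + log(EMIT[s][seq[0]]) for s in STATES}
    backpointers = []
    for symbol in seq[1:]:
        prev_best, new_score = {}, {}
        for s in STATES:
            # Best predecessor for state s at this position.
            best_prev = max(STATES, key=lambda p: score[p] + log(TRANS[p][s]))
            prev_best[s] = best_prev
            new_score[s] = score[best_prev] + log(TRANS[best_prev][s]) + log(EMIT[s][symbol])
        backpointers.append(prev_best)
        score = new_score
    # Trace back from the best final state.
    last = max(STATES, key=score.get)
    path = [last]
    for pointers in reversed(backpointers):
        path.append(pointers[path[-1]])
    path.reverse()
    return path, score[last]

path, log_prob = viterbi("ATATCGCGCGATAT")
print(path)
```

Working with log probabilities replaces products with sums so that long sequences do not underflow. On this toy input, the decoded path labels the central `CGCGCG` run as `island` and the flanking `ATAT` runs as `background`.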

Developing Digital Informational Model for Well Productivity Estimation through Markov Processes Tools

Gubkin University presents a study on developing a digital informational model for estimating well productivity with Markov process tools in oil and gas production. The research covers industrial data processing, reservoir model uncertainty evaluation, and organizational process planning. It emphasizes…

0 views • 17 slides

Overview of Sampling Methods in Markov Chain Monte Carlo

This material covers the sampling methods that lead up to Markov Chain Monte Carlo: rejection sampling, importance sampling, and MCMC sampling. It discusses how to represent distributions, the drawbacks of importance sampling, and the motivation for MCMC sampling. The illustrations…

0 views • 25 slides
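
As a small illustration of importance sampling (one of the methods listed, not MCMC itself), the sketch below estimates a rare-event probability for a standard normal by drawing from a shifted proposal. The target quantity P(X > 3), the proposal N(3, 1), and the function names are assumptions chosen for the example.

```python
import math
import random

def normal_pdf(x, mu=0.0, sigma=1.0):
    """Density of N(mu, sigma^2) at x."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))

def tail_prob_importance(threshold, n, seed=0):
    """Estimate P(X > threshold) for X ~ N(0, 1) by sampling from N(threshold, 1).

    Each sample is weighted by p(x) / q(x), so the average of
    weight * indicator is an unbiased estimate under the target p.
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n):
        x = rng.gauss(threshold, 1.0)  # draw from the proposal q
        if x > threshold:
            total += normal_pdf(x) / normal_pdf(x, mu=threshold)
    return total / n

exact = 0.5 * math.erfc(3 / math.sqrt(2))  # true P(X > 3) for comparison
print(tail_prob_importance(3.0, 20_000), exact)
```

Plain Monte Carlo would see an exceedance of 3 only about once per 740 draws, whereas the shifted proposal puts roughly half its samples in the region of interest, which is why the weighted estimate is far less noisy for the same sample size.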

Markov Chains: Workforce Planning Models Section 17.7

Study Markov chains through the workforce planning models of Section 17.7. Understand how to design efficient workforce strategies, with insights from group members Valerie Bastian, Phillip Liu, and Amandeep Tamber. Explore the applications of Markov chains…

0 views • 38 slides

Understanding Markov Decision Processes in Biomedical Data Analysis

Explore the application of Markov Decision Processes to biomedical data analysis, focusing on gene regulation, regulatory motifs, and computational modeling techniques. Learn key concepts such as the definition of the environment, the Markov property, and Markov environments in the context of…

0 views • 24 slides

Constrained Reinforcement Learning for Network Slicing in 5G

Explore how Constrained Reinforcement Learning (CRL) is applied to network slicing challenges in 5G, enabling efficient resource allocation for diverse services such as manufacturing, entertainment, and smart cities. Traditional optimization methods are compared with learning-based approaches…

0 views • 23 slides

Wi-Fi Protected Setup Brute-Force Mitigation Using Markov Chains Progress

Explore how Wi-Fi Protected Setup (WPS) can be secured against brute-force attacks using Markov chains. Progress so far includes defining the variables, the states of the Markov chains, and the probabilities used to model different wireless router mechanisms for mitigating attacks.

0 views • 5 slides

Understanding Tail Bounds and Markov's Inequality

Tail bounds and Markov's inequality are central concepts in probability theory and statistics. Tail bounds give upper bounds on the probability in the tails of a distribution, while Markov's inequality bounds the probability that a non-negative random variable X is at least a given value a > 0: P(X ≥ a) ≤ E[X]/a. Learn…

0 views • 25 slides
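
A quick numerical check of Markov's inequality, assuming exponentially distributed samples (a hypothetical choice: they are non-negative with mean 1, and their exact tail is e^(-a)). The helper name `markov_bound` is illustrative.

```python
import random

def markov_bound(mean, a):
    """Markov's inequality: P(X >= a) <= E[X] / a for non-negative X and a > 0."""
    return mean / a

rng = random.Random(42)
samples = [rng.expovariate(1.0) for _ in range(100_000)]  # Exp(1): non-negative, mean 1
mean = sum(samples) / len(samples)

for a in (1.0, 2.0, 4.0):
    empirical = sum(x >= a for x in samples) / len(samples)
    print(f"a={a}: empirical P(X >= a) = {empirical:.4f}, "
          f"Markov bound = {markov_bound(mean, a):.4f}")
```

Because the bound uses the sample mean, the inequality holds exactly for the empirical distribution too, so every empirical probability stays below its bound. The gap (about 0.018 against a bound of about 0.25 at a = 4) shows how loose Markov's inequality can be.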

Understanding Partially Observable Markov Decision Processes (POMDPs)

Explore belief state updates, policies, and optimal policies in Partially Observable Markov Decision Processes (POMDPs). Learn about Markov models, Hidden Markov Models, and how to update belief states, with examples. Dive deeper into intelligent systems and AI in computer science…

0 views • 24 slides
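
The belief state update can be sketched as a Bayes filter step: b'(s') is proportional to O(o | s', a) · Σ_s T(s' | s, a) · b(s). The two-state "listen" example, its 0.85 observation accuracy, and the name `update_belief` are hypothetical assumptions for this sketch.

```python
def update_belief(belief, action, observation, transition, observation_model):
    """Bayes filter step: b'(s') ∝ O(o | s', a) * sum_s T(s' | s, a) * b(s)."""
    # Predict: push the current belief through the transition model.
    predicted = {
        s2: sum(transition[action][s][s2] * belief[s] for s in belief)
        for s2 in belief
    }
    # Correct: weight by the likelihood of the observation, then normalize.
    unnormalized = {
        s2: observation_model[action][s2][observation] * predicted[s2]
        for s2 in belief
    }
    total = sum(unnormalized.values())
    return {s2: v / total for s2, v in unnormalized.items()}

# Hypothetical example: "listen" leaves the state unchanged and
# reports the correct side with probability 0.85.
T = {"listen": {"left": {"left": 1.0, "right": 0.0},
                "right": {"left": 0.0, "right": 1.0}}}
O = {"listen": {"left": {"hear_left": 0.85, "hear_right": 0.15},
                "right": {"hear_left": 0.15, "hear_right": 0.85}}}

b = {"left": 0.5, "right": 0.5}
b = update_belief(b, "listen", "hear_left", T, O)  # left: 0.85
b = update_belief(b, "listen", "hear_left", T, O)  # left: about 0.97
print(b)
```

A POMDP policy then maps this belief, rather than the hidden state itself, to an action.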

Algorithmic Methods for Markov Chains in Industrial Engineering Lectures

Explore numerical solutions for equilibrium equations, transient analysis, M/M/1-type models, G/M/1- and M/G/1-type models, and finite quasi-birth-death (QBD) processes in Algorithmic Methods for Markov Chains. Learn about stationary Markov chains, irreducibility, absorbing Markov chains, and more.

0 views • 27 slides
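
One simple numerical approach to the equilibrium equations π = πP (with the entries of π summing to 1) is power iteration. This is a sketch under assumptions: a small, irreducible, aperiodic chain stored as a nested list, and a made-up 3-state matrix; the lectures may well use direct solvers or structured methods instead. The function name `stationary_distribution` is illustrative.

```python
def stationary_distribution(P, tol=1e-12, max_iter=10_000):
    """Solve pi = pi P with sum(pi) = 1 by power iteration.

    Converges for irreducible, aperiodic finite chains.
    """
    n = len(P)
    pi = [1.0] + [0.0] * (n - 1)  # start with all mass on state 0
    for _ in range(max_iter):
        # One step of the chain: new_pi[j] = sum_i pi[i] * P[i][j]
        new_pi = [sum(pi[i] * P[i][j] for i in range(n)) for j in range(n)]
        if max(abs(a - b) for a, b in zip(new_pi, pi)) < tol:
            return new_pi
        pi = new_pi
    raise RuntimeError("power iteration did not converge")

P = [
    [0.5, 0.5, 0.0],
    [0.25, 0.5, 0.25],
    [0.0, 0.5, 0.5],
]
print(stationary_distribution(P))  # approximately [0.25, 0.5, 0.25]
```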

Introduction to Hidden Markov Models and Machine Learning

Understand the basics of Hidden Markov Models, Markov processes, and probabilistic calculations in the context of Pattern Recognition and Machine Learning. Explore fundamental concepts and example calculations involving transition probabilities and state sequences.

0 views • 11 slides

Understanding Absorbing and Transient States in Markov Chains

Learn about absorbing and transient states in Markov chains through examples and sample problems. Understand the canonical form of the transition matrix and how to analyze absorption probabilities in a Markov chain.

0 views • 17 slides
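
For an absorbing chain written in canonical form P = [[Q, R], [0, I]], the fundamental matrix N = (I - Q)^(-1) gives expected visit counts, N·R gives absorption probabilities, and the row sums of N give expected steps before absorption. The sketch below applies these standard formulas to a hypothetical fair gambler's-ruin example using exact `Fraction` arithmetic; the helper names `invert` and `matmul` are illustrative.

```python
from fractions import Fraction

def invert(matrix):
    """Gauss-Jordan inversion with exact Fraction arithmetic (assumes nonsingular input)."""
    n = len(matrix)
    aug = [[Fraction(x) for x in row] + [Fraction(int(i == j)) for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        pv = aug[col][col]
        aug[col] = [x / pv for x in aug[col]]
        for r in range(n):
            if r != col and aug[r][col] != 0:
                factor = aug[r][col]
                aug[r] = [x - factor * y for x, y in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]

def matmul(A, B):
    """Plain matrix product of nested lists."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

# Fair gambler's ruin on {0, 1, 2, 3, 4}: states 1-3 are transient,
# 0 and 4 are absorbing. Q: transient -> transient, R: transient -> absorbing.
half = Fraction(1, 2)
Q = [[0, half, 0],
     [half, 0, half],
     [0, half, 0]]
R = [[half, 0],   # columns: absorbed at 0, absorbed at 4
     [0, 0],
     [0, half]]

I_minus_Q = [[int(i == j) - Q[i][j] for j in range(3)] for i in range(3)]
N = invert(I_minus_Q)           # fundamental matrix (I - Q)^-1
B = matmul(N, R)                # absorption probabilities
t = [sum(row) for row in N]     # expected steps before absorption
print(B, t)
```

For this example the results match the textbook closed forms: starting from state 1, absorption at 0 has probability 3/4, and the expected durations are i(4 - i) = 3, 4, 3 steps.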