Reinforcement Learning

Concepts of reinforcement learning in the context of applied machine learning, with a focus on Markov Decision Processes, Q-Learning, and example applications.

32 slides
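To make the Q-learning idea concrete, here is a minimal Python sketch of tabular Q-learning. The five-state corridor environment, its reward of 1 at the right end, and the hyperparameters (`alpha`, `gamma`, `epsilon`) are hypothetical choices for illustration, not taken from the slides.

```python
import random

# Hypothetical environment: states 0..4 in a corridor; action 0 = left, 1 = right.
# Reaching state 4 gives reward 1 and ends the episode.
N_STATES, ACTIONS, GOAL = 5, (0, 1), 4

def step(state, action):
    """Return (next_state, reward, done) for the toy corridor."""
    nxt = max(0, state - 1) if action == 0 else min(GOAL, state + 1)
    return nxt, (1.0 if nxt == GOAL else 0.0), nxt == GOAL

def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action]
    for _ in range(episodes):
        s, done = 0, False
        while not done:
            # Epsilon-greedy action selection; ties are broken at random.
            if rng.random() < epsilon or q[s][0] == q[s][1]:
                a = rng.choice(ACTIONS)
            else:
                a = 0 if q[s][0] > q[s][1] else 1
            s2, r, done = step(s, a)
            # Q-learning target: r + gamma * max_a' Q(s', a'); no bootstrap at the terminal state.
            target = r if done else r + gamma * max(q[s2])
            q[s][a] += alpha * (target - q[s][a])
            s = s2
    return q

q = q_learning()
greedy_policy = [0 if q[s][0] > q[s][1] else 1 for s in range(GOAL)]
print(greedy_policy)  # [1, 1, 1, 1]: move right in every non-terminal state
```

The update line is the standard rule Q(s,a) ← Q(s,a) + alpha * (r + gamma * max_a' Q(s',a') - Q(s,a)). Because the target uses the best next action rather than the action the agent actually takes next, Q-learning is off-policy.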

Understanding Brain Development and Decision-Making Skills

Explore the fascinating realm of brain development and decision-making skills, focusing on how different brain regions activate during decision-making, the evolution of decision-making abilities from adolescence to adulthood, the importance of practicing decision-making skills, and the influence of…

10 slides

Understanding Multiple Sequence Alignment with Hidden Markov Models

Multiple Sequence Alignment (MSA) is essential for various biological analyses like phylogeny estimation and selection quantification. Profile Hidden Markov Models (HMMs) play a crucial role in achieving accurate alignments. This process involves aligning unaligned sequences to create alignments with…

29 slides

Advanced Reinforcement Learning for Autonomous Robots

Cutting-edge research in the field of reinforcement learning for autonomous robots, focusing on Proximal Policy Optimization Algorithms, motivation for autonomous learning, scalability challenges, and policy gradient methods. The discussion delves into Markov Decision Processes, Actor-Critic Algorithms…

26 slides

Enhancing Career Decision Making Process

Explore the importance of good decision-making, types of decision makers, problems faced in decision making, readiness factors for career decisions, decision-making processes, and the CASVE cycle. Understand the significance of effective decision-making skills and how they impact our lives.

26 slides

Deep Reinforcement Learning Overview and Applications

Delve into the world of deep reinforcement learning on the road to advanced AI systems like Skynet. Explore topics ranging from Markov Decision Processes to solving MDPs, value functions, and tabular solutions. Discover the paradigms of supervised, unsupervised, and reinforcement learning in various…

24 slides

Understanding Decision Analysis in Work-related Scenarios

Decision analysis plays a crucial role in work-related decision-making processes, helping to identify decision makers, explore potential actions, evaluate outcomes, and consider the various values involved in the decision. This module delves into the steps involved in decision analysis, providing…

76 slides

Understanding Decision Trees in Machine Learning

Decision trees are a popular supervised learning method used for classification and regression tasks. They involve learning a model from training data to predict a value based on other attributes. Decision trees provide a simple and interpretable model that can be visualized and applied effectively.

38 slides
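Decision-tree learners in the ID3/C4.5 family choose each split by information gain, the reduction in label entropy that the split achieves. The slides may use a different criterion (Gini impurity is another common one), so treat this as one typical choice. The minimal sketch below scores two candidate attributes on a tiny, invented dataset.

```python
from collections import Counter
from math import log2

def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * log2(c / n) for c in Counter(labels).values())

def information_gain(rows, labels, attr):
    """Entropy reduction from splitting `rows` (dicts) on attribute `attr`."""
    n = len(labels)
    remainder = 0.0
    for value in {row[attr] for row in rows}:
        subset = [lab for row, lab in zip(rows, labels) if row[attr] == value]
        remainder += len(subset) / n * entropy(subset)  # weighted child entropy
    return entropy(labels) - remainder

# Hypothetical toy data, invented for illustration.
rows = [
    {"outlook": "sunny", "windy": False},
    {"outlook": "sunny", "windy": True},
    {"outlook": "rainy", "windy": False},
    {"outlook": "rainy", "windy": True},
]
labels = ["no", "no", "yes", "yes"]

# The root split is the attribute with the highest information gain.
best = max(["outlook", "windy"], key=lambda a: information_gain(rows, labels, a))
print(best)  # outlook
```

Here `outlook` separates the labels perfectly (gain of 1 bit), while `windy` leaves each branch evenly mixed (gain of 0). A full learner would recurse on each branch until the leaves are pure or a stopping rule applies.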

Comprehensive Guide to Decision Making and Creative Thinking in Management

Explore the rational model of decision-making, ways individuals and groups make compromises, guidelines for effective decision-making and creative thinking, the use of probability theory and decision trees, advantages of group decision-making, and strategies to overcome creativity barriers.

30 slides

Introduction to Decision Theory in Business Environments

Decision theory plays a crucial role in business decision-making under conditions of uncertainty. This chapter explores the key characteristics of decision theory, including alternatives, states of nature, payoffs, degree of certainty, and decision criteria. It also introduces the concept of payoff…

41 slides

Understanding Decision Trees in Machine Learning with AIMA and WEKA

Decision trees are an essential concept in machine learning, enabling efficient data classification. The provided content discusses decision trees in the context of the AIMA and WEKA libraries, showcasing how to build and train decision tree models using Python. Through a dataset from the UCI Machine Learning Repository…

19 slides

Understanding Markov Chains and Their Applications in Networks

Andrej Markov and his contributions to the development of Markov chains are explored, highlighting the principles, algorithms, and rules associated with these probabilistic models. The concept of a Markov chain, where transitions between states depend only on the current state, is explained using…

21 slides

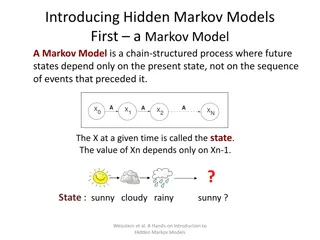

Introduction to Markov Models and Hidden Markov Models

A Markov model is a chain-structured process where future states depend only on the present state. Hidden Markov Models are Markov chains where the state is only partially observable. Explore state transition and emission probabilities in various scenarios such as weather forecasting and genetic sequence…

12 slides
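The state transition and emission probabilities mentioned above combine in the forward algorithm. It computes the probability of an observation sequence by summing over all hidden state paths, one time step at a time. The minimal Python sketch below uses a hypothetical two-state weather HMM whose probabilities are invented for illustration.

```python
# Hypothetical two-state weather HMM: the weather is hidden, and we only
# observe an activity each day (all probabilities invented for illustration).
states = ("Rainy", "Sunny")
start = {"Rainy": 0.6, "Sunny": 0.4}
trans = {"Rainy": {"Rainy": 0.7, "Sunny": 0.3},
         "Sunny": {"Rainy": 0.4, "Sunny": 0.6}}
emit = {"Rainy": {"walk": 0.1, "shop": 0.4, "clean": 0.5},
        "Sunny": {"walk": 0.6, "shop": 0.3, "clean": 0.1}}

def forward(observations):
    """Return P(observations) under the HMM via the forward algorithm."""
    # alpha[s] = P(o_1..o_t, hidden state at time t = s)
    alpha = {s: start[s] * emit[s][observations[0]] for s in states}
    for obs in observations[1:]:
        # Sum over previous states (transition), then multiply by the emission.
        alpha = {s: sum(alpha[p] * trans[p][s] for p in states) * emit[s][obs]
                 for s in states}
    return sum(alpha.values())

print(forward(["walk", "shop", "clean"]))  # approximately 0.033612
```

Brute-force enumeration of all 2^T hidden paths gives the same number. The forward recursion reuses partial sums, so its cost grows linearly in the sequence length instead of exponentially.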

Understanding Infinite Horizon Markov Decision Processes

In the realm of Markov Decision Processes (MDPs), tackling infinite-horizon problems involves defining value functions, introducing discount factors, and guaranteeing the existence of optimal policies. Computational challenges like policy evaluation and optimization are addressed through algorithms…

39 slides
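Value iteration is one standard algorithm for the infinite-horizon discounted setting. It repeatedly applies the Bellman optimality backup until the value function stops changing. For a finite MDP with bounded rewards, a discount factor below 1 makes the backup a contraction, which guarantees convergence. The sketch below assumes a made-up two-state, two-action MDP, and the slides may present other methods such as policy iteration.

```python
# Hypothetical two-state, two-action MDP (numbers invented for illustration).
# P[s][a] is a list of (probability, next_state, reward) triples.
P = {
    0: {"stay": [(1.0, 0, 0.0)], "go": [(0.8, 1, 5.0), (0.2, 0, 0.0)]},
    1: {"stay": [(1.0, 1, 1.0)], "go": [(1.0, 0, 0.0)]},
}
GAMMA = 0.9  # discount factor < 1 keeps the infinite-horizon return finite

def q_value(V, s, a, gamma=GAMMA):
    """Expected one-step return of action a in state s, then following V."""
    return sum(p * (r + gamma * V[s2]) for p, s2, r in P[s][a])

def value_iteration(tol=1e-10):
    V = {s: 0.0 for s in P}
    while True:
        # Bellman optimality backup: V(s) = max_a sum_s' p * (r + gamma * V(s'))
        new_V = {s: max(q_value(V, s, a) for a in P[s]) for s in P}
        if max(abs(new_V[s] - V[s]) for s in P) < tol:
            return new_V
        V = new_V

V = value_iteration()
# Extract the greedy policy with respect to the converged values.
policy = {s: max(P[s], key=lambda a: q_value(V, s, a)) for s in P}
print(policy)  # {0: 'go', 1: 'go'}
```

In this MDP the optimal policy chooses `go` in both states. Cycling between the states collects the reward of 5 repeatedly, which is worth more than the reward of 1 per step from staying in state 1.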

Understanding Markov Decision Processes in Machine Learning

Markov Decision Processes (MDPs) model an agent taking actions that influence the state of the world, with the goal of finding an optimal policy. Components include states, actions, transition models, reward functions, and policies. Solving MDPs requires knowing transition models and reward functions, while reinforcement…

26 slides

Introduction to Markov Decision Processes and Optimal Policies

Explore the world of Markov Decision Processes (MDPs) and optimal policies in Machine Learning. Uncover the concepts of states, actions, transition functions, rewards, and policies. Learn about the significance of the Markov property in MDPs, Andrey Markov's contribution, and how to find optimal policies…

59 slides

Understanding MCMC Algorithms and Gibbs Sampling in Markov Chain Monte Carlo Simulations

Markov Chain Monte Carlo (MCMC) algorithms generate a sequence of states (a Markov chain) whose distribution approximates a target probability distribution. One popular MCMC method, Gibbs Sampling, is particularly useful for Bayesian networks: it repeatedly samples each variable from its conditional distribution given the current values of the others.

7 slides
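Gibbs sampling draws each variable in turn from its conditional distribution given the current values of the others. The minimal sketch below applies the idea to a standard bivariate normal with correlation `rho`, not to a Bayesian network as in the slides. This target was chosen because its conditionals are known in closed form: x | y ~ N(rho·y, 1 − rho²). The sample sizes, burn-in, and seed are arbitrary assumptions.

```python
import random

def gibbs_bivariate_normal(rho, n_samples=20000, burn_in=1000, seed=0):
    """Gibbs sampler for a standard bivariate normal with correlation rho.

    Each step resamples one coordinate from its conditional given the other:
    x | y ~ N(rho * y, 1 - rho**2) and y | x ~ N(rho * x, 1 - rho**2).
    """
    rng = random.Random(seed)
    sd = (1 - rho ** 2) ** 0.5  # conditional standard deviation
    x = y = 0.0
    samples = []
    for i in range(burn_in + n_samples):
        x = rng.gauss(rho * y, sd)
        y = rng.gauss(rho * x, sd)
        if i >= burn_in:  # discard early draws taken before the chain mixes
            samples.append((x, y))
    return samples

samples = gibbs_bivariate_normal(rho=0.8)
n = len(samples)
mean_x = sum(x for x, _ in samples) / n
# Both marginals have mean 0 and variance 1, so E[xy] estimates rho.
corr = sum(x * y for x, y in samples) / n
print(round(mean_x, 3), round(corr, 3))
```

Successive draws are correlated, so 20,000 Gibbs samples carry less information than 20,000 independent ones. Higher `rho` makes the chain mix more slowly.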

Understanding Programs and Processes in Operating Systems

Exploring the fundamental concepts of programs and processes in operating systems, this content delves into the definitions of programs and processes, the relationship between them, the components of a program, what is added by a process, and how processes are created. The role of DLLs, mapped files…

22 slides

The Assisted Decision-Making (Capacity) Act 2015 in the Criminal Justice Context

The Assisted Decision-Making (Capacity) Act 2015 introduces key reforms such as the abolition of the wards of court system for adults, a statutory functional test of capacity, new guiding principles, a three-tier framework for support, and tools for advance planning. It emphasizes functional assessment…

17 slides

Implementing Group Decision-Making Tools with Voting Procedures at Toulouse E-Democracy Summer School

Decision-making in organizations is crucial, and group decision-making can lead to conflicts due to differing views. Group Decision Support Systems (GDSS) are essential for facilitating decision-making processes. The Toulouse E-Democracy Summer School discusses the implementation of voting tools in…

21 slides

Optimal Sustainable Control of Forest Sector with Stochastic Dynamic Programming and Markov Chains

Stochastic dynamic programming with Markov chains is used for optimal control of the forest sector, focusing on continuous cover forestry. This approach optimizes forest industry production, harvest levels, and logistic solutions based on market conditions. The method involves solving quadratic programming…

27 slides

Decision Analysis: Problem Formulation, Decision Making, and Risk Analysis

Decision analysis involves problem formulation, decision making with and without probabilities, risk analysis, and sensitivity analysis. It includes defining decision alternatives, states of nature, and payoffs; creating payoff tables and decision trees; and applying different decision-making criteria.

27 slides
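A payoff table and the common decision criteria fit in a few lines of Python. The alternatives, payoffs, and state probabilities below are invented for illustration. Maximax and maximin are examples of criteria for decision making without probabilities, and expected monetary value (EMV) is one criterion for decision making with probabilities.

```python
# Hypothetical payoff table (invented numbers): rows are decision alternatives,
# columns are states of nature (strong demand, weak demand).
payoffs = {
    "large plant": [200, -180],
    "small plant": [100, -20],
    "no plant":    [0, 0],
}
probs = [0.4, 0.6]  # assumed probabilities of the states of nature

# Decision making without probabilities
maximax = max(payoffs, key=lambda a: max(payoffs[a]))  # optimistic: best best case
maximin = max(payoffs, key=lambda a: min(payoffs[a]))  # pessimistic: best worst case

# Decision making with probabilities: expected monetary value (EMV)
emv = {a: sum(p * v for p, v in zip(probs, row)) for a, row in payoffs.items()}
best_emv = max(emv, key=emv.get)

print(maximax, "|", maximin, "|", best_emv)  # large plant | no plant | small plant
```

On these numbers the three criteria disagree. Maximax picks the large plant and maximin picks doing nothing. EMV favors the small plant, with an EMV of 28 compared with −28 for the large plant and 0 for no plant. This disagreement is why the choice of criterion, and sensitivity analysis on the probabilities, matter.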

Understanding Complex Probability and Markov Stochastic Process

Discussion on the concept of complex probability in solving real-world problems, particularly focusing on the transition probability matrix of discrete Markov chains. The paper introduces a measure more general than conventional probability, leading to the idea of complex probability. Various examples…

10 slides

Utilizing Six Thinking Hats for Enhanced Decision Making and Problem Solving

Exploring the concept of Lateral Thinking through the Six Thinking Hats technique, this article delves into how this approach by Edward de Bono can revolutionize decision-making processes. By understanding the objectives and roles of each colored hat (Blue, Red, Yellow, Black, Green, and White) in…

12 slides

ETSU Fall 2014 Enrollment Projections Analysis

The ETSU Fall 2014 Enrollment Projections Analysis conducted by Mike Hoff, Director of Institutional Research, utilized a Markov chain model to estimate enrollment. The goal was to reach 15,500 enrollments, with data informing college-level improvement plans. Assumptions included stable recruitment…

43 slides

Understanding Continuous-Time Markov Chains in Manufacturing Systems

Explore the world of Continuous-Time Markov Chains (CTMC) in manufacturing systems through the lens of stochastic processes and performance analysis. Learn about basic definitions, characteristics, and behaviors of CTMC, including homogeneous CTMC and Poisson arrivals. Gain insights into the memoryless…

50 slides

Modeling the Bombardment of Saturn's Rings and Age Estimation Using Cassini UVIS Spectra

Explore the modeling of the bombardment of Saturn's rings and the estimation of their age by fitting to Cassini UVIS spectra. Goals include analyzing ring pollution using a Markov-chain process, applying optical depth correction, using meteoritic mass flux values, and comparing Markov model pollution with UVIS fit to…

11 slides

Understanding Markov Chains and Applications

Markov chains are models used to describe the transition between states in a process, where the future state depends only on the current state. The concept was pioneered by Russian mathematician Andrey Markov and has applications in various fields such as weather forecasting, finance, and biology.

17 slides
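Because the next state depends only on the current one, a Markov chain can be described entirely by a transition matrix. Repeatedly applying that matrix to a probability distribution over states shows where the chain settles in the long run. The minimal sketch below uses a hypothetical two-state weather chain whose probabilities are invented for illustration.

```python
# Hypothetical weather chain (transition probabilities invented for illustration).
# P[i][j] = probability of moving from state i to state j; each row sums to 1.
states = ["sunny", "rainy"]
P = [[0.9, 0.1],
     [0.5, 0.5]]

def step(dist, P):
    """One step of the chain: new_dist[j] = sum_i dist[i] * P[i][j]."""
    return [sum(dist[i] * P[i][j] for i in range(len(dist)))
            for j in range(len(P[0]))]

dist = [1.0, 0.0]  # start with certainty on a sunny day
for _ in range(100):
    dist = step(dist, P)
print(dict(zip(states, dist)))  # close to {'sunny': 5/6, 'rainy': 1/6}
```

For this matrix the distribution converges to 5/6 sunny and 1/6 rainy, the solution of π = πP. The same limit is reached from any starting distribution, because the chain is irreducible and aperiodic.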

Decision Making Under Uncertainty Using Decision Trees

In this scenario, Colaco faces the decision of whether to conduct a market study for their product, Chocola. The decision involves potential national success or failure outcomes, along with the consequences of a local success or failure from the market study. By utilizing decision trees, this…

7 slides

Enhancing Decision Making with Information Systems

Explore the role of information systems in enhancing decision-making processes within organizations. Topics include business intelligence, types of decisions, decision-making processes, and managerial roles. Learn about structured, unstructured, and semi-structured decisions, different models of…

21 slides

Understanding Markov Decision Processes in Reinforcement Learning

Markov Decision Processes (MDPs) are defined by states, actions, transition models, and reward functions, and solving one means finding an optimal policy. This concept is crucial in reinforcement learning, where agents take actions in an environment to maximize cumulative reward. MDPs help in decision-making…

25 slides

Learning from Demonstration in the Wild: A Novel Approach to Behavior Learning

Learning from Demonstration (LfD) is a machine learning technique that can model complex behaviors from expert trajectories. This paper introduces a new method, Video to Behavior (ViBe), that leverages unlabelled video data to learn road user behavior from real-world settings. The study presents a…

19 slides

Exploring Levels of Analysis in Reinforcement Learning and Decision-Making

This content delves into various levels of analysis related to computational and algorithmic problem-solving in the context of Reinforcement Learning (RL) in the brain. It discusses how the RL problem of preferring actions that lead to favorable outcomes is addressed using Markov Decision Processes (MDPs) and…

18 slides

Exploring Markov Chain Random Walks in McCTRWs

Delve into the realm of Markov Chain Random Walks and McCTRWs, a method invented by a postdoc in Spain, which has shown robustness in various scenarios. Discover the premise of random walk models, the concept of IID (independent and identically distributed) variables and its importance, along with classical problems that can be analyzed using CTRW…

48 slides

Reinforcement Learning for Queueing Systems

Natural Policy Gradient is explored as an algorithm for optimizing Markov Decision Processes in queueing systems with unknown parameters. The challenges of unknown system dynamics and policy optimization are addressed through reinforcement learning techniques such as Actor-Critic and Trust Region Policy Optimization…

20 slides

Understanding Biomedical Data and Markov Decision Processes

Explore the relationship between Biomedical Data and Markov Decision Processes through the analysis of genetic regulation, regulatory motifs, and the application of Hidden Markov Models (HMMs) in complex computational tasks. Learn about the environment definition, Markov property, and Markov Decision…

24 slides

State Estimation and Probabilistic Models in Autonomous Cyber-Physical Systems

Understanding state estimation in autonomous systems is crucial for determining the internal state of a plant from sensor measurements. This involves dealing with noisy measurements, employing algorithms like the Kalman filter, and refreshing knowledge of random variables and statistics. The course covers topics such as…

31 slides
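The simplest case of the Kalman filter mentioned above is a scalar state that should stay (nearly) constant but can only be observed through noisy sensors. The minimal sketch below assumes that model. The noise variances `q` and `r`, the true value, and the simulated measurements are all hypothetical.

```python
import random

def kalman_1d(measurements, q=1e-5, r=0.1 ** 2, x0=0.0, p0=1.0):
    """Scalar Kalman filter for a (nearly) constant hidden state.

    q: assumed process-noise variance; r: assumed measurement-noise variance;
    x0, p0: initial estimate and its variance. Returns the posterior estimates.
    """
    x, p = x0, p0
    estimates = []
    for z in measurements:
        # Predict: state model x_k = x_{k-1} + w_k, so only the variance grows.
        p = p + q
        # Update: the Kalman gain k weighs the measurement against the prediction.
        k = p / (p + r)
        x = x + k * (z - x)
        p = (1 - k) * p
        estimates.append(x)
    return estimates

rng = random.Random(1)
true_value = 0.5  # hypothetical true internal state of the plant
zs = [true_value + rng.gauss(0, 0.1) for _ in range(200)]  # noisy sensor readings
est = kalman_1d(zs)
print(f"last estimate: {est[-1]:.3f} (true value {true_value})")
```

The gain starts near 1, when the initial guess is uncertain, and shrinks as evidence accumulates. A larger `q` keeps the gain higher, so the filter tracks changes faster but smooths out less noise.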

Understanding Confidence, Uncertainty, Risk, and Decision-Making in Engineering Design

This presentation explores the intricate relationship between confidence, uncertainty, and risk in decision-making processes within engineering design studies. It highlights the importance of considering various perspectives and evaluating potential risks and benefits to make informed decisions.

21 slides

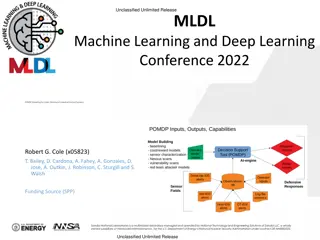

Decision Support System Development for SCADA/ICS Cyber Defense

Developing a Decision Support System (DSS) powered by Partially Observable Markov Decision Processes (POMDPs) to aid novice cyber defenders in protecting critical SCADA/ICS infrastructure. The system leverages Domain Expertise, AI Expert System Shell, and emulation environments for testing and validation…

7 slides

Reinforcement Learning for Long-Horizon Tasks and Markov Decision Processes

Delve into the world of reinforcement learning, where tasks are accomplished by generating policies in a Markov Decision Process (MDP) environment. Understand the concepts of MDP, transition probabilities, and generating optimal policies in unknown and known environments. Explore algorithms and tools…

11 slides