Understanding MCMC Algorithms and Gibbs Sampling in Markov Chain Monte Carlo Simulations

Markov Chain Monte Carlo (MCMC) algorithms generate a sequence of states (samples) in which each state is produced by randomly modifying the previous one. Gibbs sampling, a popular MCMC method, is well suited to Bayesian networks. The evidence variables are fixed at their observed values, and each nonevidence variable is repeatedly resampled from its distribution conditioned on the current values of its Markov blanket. The worked example shows how the nonevidence variables are sampled to estimate a posterior distribution. The final slides derive the Markov blanket distribution, which involves only a few local conditional probabilities and so keeps each sampling step cheap to compute.

Presentation Transcript

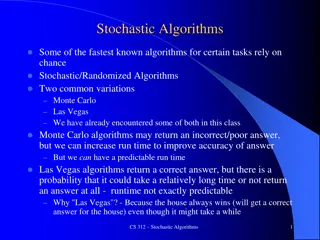

Markov Chain Monte Carlo Simulation

A Markov Chain Monte Carlo (MCMC) algorithm keeps a current state (i.e., a sample) that specifies a value for every variable, and it generates the next state by making random changes to the current state. A Markov chain is a random process that generates a sequence of states in which each next state depends only on the current one.
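
The Markov chain idea can be illustrated with a minimal Python sketch. The two states and their transition probabilities are hypothetical, chosen only for illustration. Each next state is drawn with probabilities that depend only on the current state, and over a long run the fraction of time spent in each state settles near the chain's stationary distribution (5/6 for state A here). MCMC methods such as Gibbs sampling are designed so that this long-run distribution is the one we want to sample from.

```python
import random

# Hypothetical two-state chain (illustration only): each row gives
# P(next state | current state), so the next state depends only on the current one.
TRANSITIONS = {
    "A": {"A": 0.9, "B": 0.1},
    "B": {"A": 0.5, "B": 0.5},
}

def next_state(current, rng):
    """Make a random change to the current state using its transition row."""
    row = TRANSITIONS[current]
    return rng.choices(list(row), weights=list(row.values()))[0]

def run_chain(start, steps, seed=0):
    """Generate a sequence of states beginning at `start`."""
    rng = random.Random(seed)
    states = [start]
    for _ in range(steps):
        states.append(next_state(states[-1], rng))
    return states

chain = run_chain("B", 50_000)
freq_a = chain.count("A") / len(chain)
print(f"fraction of time in A: {freq_a:.3f}")  # should be near 5/6
```

Solving the balance equation for this chain gives a stationary probability of 5/6 for A, so the printed fraction should land close to 0.833 regardless of the starting state.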

Gibbs Sampling

Gibbs sampling is an MCMC algorithm well suited to Bayes nets. It starts with an arbitrary state in which the evidence variables are fixed at their observed values, and it generates each next state by randomly sampling a new value for one nonevidence variable $X_i$, chosen according to some probability distribution $\rho(i)$. The new value is sampled conditioned on the current values of the variables in the Markov blanket of $X_i$, which consists of its parents, its children, and its children's other parents. Given its Markov blanket, $X_i$ is conditionally independent of all other variables in the network.
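
To make the Markov blanket concrete, here is a minimal Python sketch that computes it from a map of each node's parents. The structure is the sprinkler network from the example that follows (Cloudy is a parent of Sprinkler and Rain, which are both parents of WetGrass); the function names are illustrative, not from the original slides.

```python
# Sprinkler network structure: each node maps to its list of parents.
PARENTS = {
    "Cloudy": [],
    "Sprinkler": ["Cloudy"],
    "Rain": ["Cloudy"],
    "WetGrass": ["Sprinkler", "Rain"],
}

def children_of(node, parents):
    """Nodes that list `node` among their parents."""
    return [n for n, ps in parents.items() if node in ps]

def markov_blanket(node, parents):
    """Parents, children, and children's other parents of `node`."""
    blanket = set(parents[node])
    for child in children_of(node, parents):
        blanket.add(child)
        blanket.update(p for p in parents[child] if p != node)
    return blanket

print(sorted(markov_blanket("Cloudy", PARENTS)))  # ['Rain', 'Sprinkler']
print(sorted(markov_blanket("Rain", PARENTS)))    # ['Cloudy', 'Sprinkler', 'WetGrass']
```

These two blankets are the ones used when Cloudy and Rain are resampled in the example.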

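The Algorithm

The pseudocode figure on this slide did not survive extraction. Its surviving annotations say that one input is the number of samples, and that in an iteration that resamples a variable other than the query variable, the query variable keeps its value from the previous iteration.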
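
A minimal Python sketch of the Gibbs sampling loop, applied to the sprinkler network used in the example. Several things are assumptions rather than content of the slides: the CPT values are taken from the standard textbook version of this network (the Cloudy, Sprinkler, and Rain entries agree with the arithmetic on the last slide; the WetGrass entries are not shown in this presentation), $\rho(i)$ is taken to be uniform over the nonevidence variables, and the names `gibbs_ask` and `mb_prob_true` are illustrative. The query is tallied in every iteration, including iterations that resample a different variable.

```python
import random

# Sprinkler network. CPT values are ASSUMED (standard textbook version);
# each entry is P(node = True | parent values).
PARENTS = {"Cloudy": [], "Sprinkler": ["Cloudy"], "Rain": ["Cloudy"],
           "WetGrass": ["Sprinkler", "Rain"]}
CPT = {
    "Cloudy": {(): 0.5},
    "Sprinkler": {(True,): 0.1, (False,): 0.5},
    "Rain": {(True,): 0.8, (False,): 0.2},
    "WetGrass": {(True, True): 0.99, (True, False): 0.90,
                 (False, True): 0.90, (False, False): 0.00},
}

def prob(node, value, state):
    """P(node = value | parents(node)) for the parent values in `state`."""
    p_true = CPT[node][tuple(state[p] for p in PARENTS[node])]
    return p_true if value else 1.0 - p_true

def mb_prob_true(var, state):
    """P(var = True | mb(var)): alpha * P(var | parents) * product over children."""
    children = [n for n in PARENTS if var in PARENTS[n]]
    weights = []
    for value in (True, False):
        s = {**state, var: value}
        w = prob(var, value, s)
        for child in children:
            w *= prob(child, s[child], s)
        weights.append(w)
    return weights[0] / sum(weights)

def gibbs_ask(query, evidence, n_samples, seed=0):
    """Estimate P(query = True | evidence) with random-scan Gibbs sampling."""
    rng = random.Random(seed)
    nonevidence = [v for v in PARENTS if v not in evidence]
    # Evidence stays fixed; nonevidence variables start from random values.
    state = dict(evidence)
    for v in nonevidence:
        state[v] = rng.random() < 0.5
    count_true = 0
    for _ in range(n_samples):
        # rho(i) is assumed uniform over the nonevidence variables.
        var = rng.choice(nonevidence)
        state[var] = rng.random() < mb_prob_true(var, state)
        # Tally the query every iteration, even when var is not the query.
        if state[query]:
            count_true += 1
    return count_true / n_samples

est = gibbs_ask("Rain", {"Sprinkler": True, "WetGrass": True}, 50_000)
print(f"estimated P(Rain=true | s, w) = {est:.3f}")
```

Under these assumed CPTs, exact enumeration gives $P(\mathit{rain} \mid s, w) = 0.0891 / 0.2781 \approx 0.320$, so the estimate should land near that value.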

Example of Gibbs Sampling

Gibbs sampling for $X_i$ is conditioned on the current values of the variables in its Markov blanket. Query: $P(\mathit{Rain} \mid \mathit{Sprinkler} = \mathit{true}, \mathit{WetGrass} = \mathit{true})$. The evidence variables Sprinkler and WetGrass are fixed to their observed values, and the nonevidence variables Cloudy and Rain receive randomly generated values. This gives the initial state [true, true, false, true] in the order Cloudy, Sprinkler, Rain, WetGrass.
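
The setup step can be sketched in a few lines of Python: states are lists in the slide's variable order, evidence entries are copied from the observations, and the remaining entries are drawn at random. The helper names are hypothetical; the slide's [true, true, false, true] is one of the four possible initial states this can produce.

```python
import random

# State vectors list values in the slide's order.
ORDER = ["Cloudy", "Sprinkler", "Rain", "WetGrass"]
EVIDENCE = {"Sprinkler": True, "WetGrass": True}

def initial_state(evidence, rng):
    """Evidence variables keep their observed values; the rest are random."""
    return [evidence[v] if v in evidence else rng.random() < 0.5 for v in ORDER]

def nonevidence(evidence):
    """Variables that Gibbs sampling is allowed to resample."""
    return [v for v in ORDER if v not in evidence]

rng = random.Random(42)
state = initial_state(EVIDENCE, rng)
print(dict(zip(ORDER, state)))  # Sprinkler and WetGrass are always True
print(nonevidence(EVIDENCE))    # ['Cloudy', 'Rain']
```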

Example (contd)

Query: $P(\mathit{Rain} \mid \mathit{Sprinkler} = \mathit{true}, \mathit{WetGrass} = \mathit{true})$; states list values in the order Cloudy, Sprinkler, Rain, WetGrass. The nonevidence variables are then sampled in random order, following some probability distribution $\rho(i)$.

Cloudy is chosen first and sampled given the current values of its Markov blanket {Sprinkler, Rain}, using the sampling distribution $P(\mathit{Cloudy} \mid \mathit{Sprinkler} = \mathit{true}, \mathit{Rain} = \mathit{false})$. Sampling result: Cloudy = false, so the state moves from [true, true, false, true] (initial state) to [false, true, false, true] (2nd state).

Rain is chosen next and sampled given the current values of its Markov blanket {Cloudy, Sprinkler, WetGrass}, using the sampling distribution $P(\mathit{Rain} \mid \mathit{Cloudy} = \mathit{false}, \mathit{Sprinkler} = \mathit{true}, \mathit{WetGrass} = \mathit{true})$. Sampling result: Rain = true, giving [false, true, true, true] (3rd state).

How do we calculate a Markov blanket distribution $P(X_i \mid \mathit{mb}(X_i))$?
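
A minimal sketch that replays these two steps. It computes both sampling distributions exactly and then applies the sampled values reported on the slide (Cloudy = false, then Rain = true) instead of drawing them at random. The CPT numbers are assumed standard textbook values for this network, and the helper names are illustrative.

```python
# ASSUMED sprinkler-network CPTs: probability that the variable is True.
P_C = 0.5                                    # P(cloudy)
P_S = {True: 0.1, False: 0.5}                # P(sprinkler | Cloudy)
P_R = {True: 0.8, False: 0.2}                # P(rain | Cloudy)
P_W = {(True, True): 0.99, (True, False): 0.90,
       (False, True): 0.90, (False, False): 0.00}  # P(wet | Sprinkler, Rain)

def bern(p_true, value):
    """Probability that a Boolean variable with P(True) = p_true equals `value`."""
    return p_true if value else 1.0 - p_true

def normalize(w_true, w_false):
    """Scale two unnormalized weights so they sum to 1."""
    total = w_true + w_false
    return w_true / total, w_false / total

def cloudy_given_mb(s, r):
    """P(Cloudy | Sprinkler=s, Rain=r): Cloudy's blanket is its two children."""
    return normalize(*(bern(P_C, c) * bern(P_S[c], s) * bern(P_R[c], r)
                       for c in (True, False)))

def rain_given_mb(c, s, w):
    """P(Rain | Cloudy=c, Sprinkler=s, WetGrass=w)."""
    return normalize(*(bern(P_R[c], r) * bern(P_W[(s, r)], w)
                       for r in (True, False)))

state = {"Cloudy": True, "Sprinkler": True, "Rain": False, "WetGrass": True}
print(cloudy_given_mb(state["Sprinkler"], state["Rain"]))   # about (0.048, 0.952)
state["Cloudy"] = False   # sampled value reported on the slide
print(rain_given_mb(state["Cloudy"], state["Sprinkler"], state["WetGrass"]))  # about (0.216, 0.784)
state["Rain"] = True      # sampled value reported on the slide
print(state)              # [false, true, true, true] in slide order
```

Under these assumed CPTs, the Rain step samples true with probability $0.198 / 0.918 \approx 0.216$, so the slide's result is the less likely outcome of that draw.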

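Markov Blanket Distribution

$\mathit{MB}(X_i)$ denotes the set of variables in the Markov blanket of $X_i$:

$$\mathit{MB}(X_i) = \mathit{Parents}(X_i) \cup \mathit{Children}(X_i) \cup \{ Z \mid Z \in \mathit{Parents}(Y_j),\ Y_j \in \mathit{Children}(X_i),\ Z \neq X_i \}$$

$\mathit{mb}(X_i)$ denotes the values of the variables in $\mathit{MB}(X_i)$. We split these values into $\mathit{parents}(X_i)$, the values of the parents of $X_i$; $\mathit{children}(X_i)$, the values of the children of $X_i$; and $\mathit{others}(X_i)$, the values of the other parents of $X_i$'s children. We want to compute the probability distribution $P(X_i \mid \mathit{mb}(X_i))$. Below, $\alpha$ is a normalizing constant that does not depend on $X_i$ (its value may change from line to line):

$$
\begin{aligned}
P(X_i \mid \mathit{mb}(X_i)) &= P(X_i \mid \mathit{parents}(X_i), \mathit{children}(X_i), \mathit{others}(X_i)) \\
&= \alpha\, P(X_i, \mathit{parents}(X_i), \mathit{children}(X_i), \mathit{others}(X_i)) \\
&= \alpha\, P(X_i \mid \mathit{parents}(X_i), \mathit{others}(X_i))\, P(\mathit{children}(X_i) \mid \mathit{parents}(X_i), X_i, \mathit{others}(X_i)) \\
&= \alpha\, P(X_i \mid \mathit{parents}(X_i))\, P(\mathit{children}(X_i) \mid X_i, \mathit{others}(X_i)) \\
&= \alpha\, P(X_i \mid \mathit{parents}(X_i)) \prod_{Y_j \in \mathit{Children}(X_i)} P(y_j \mid \mathit{parents}(Y_j))
\end{aligned}
$$

The third line applies the chain rule and absorbs $P(\mathit{parents}(X_i), \mathit{others}(X_i))$, which does not depend on $X_i$, into $\alpha$. The last two lines use the conditional independences encoded by the network (each variable is conditionally independent of its nondescendants given its parents), so $X_i$ needs only its own parents and each child $Y_j$ needs only the values of its own parents.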
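
One way to sanity-check this derivation is a brute-force comparison in Python. For every full assignment of the sprinkler network and every variable, the sketch below compares the Markov blanket formula with the distribution obtained by conditioning the full joint on all other variables. The two should agree because $X_i$ is conditionally independent of everything else given its blanket. The CPT values are assumed standard textbook values, and the helper names are illustrative.

```python
from itertools import product

# Sprinkler network; CPT values ASSUMED (standard textbook version).
# Each CPT entry is P(node = True | parent values).
PARENTS = {"Cloudy": [], "Sprinkler": ["Cloudy"], "Rain": ["Cloudy"],
           "WetGrass": ["Sprinkler", "Rain"]}
CPT = {
    "Cloudy": {(): 0.5},
    "Sprinkler": {(True,): 0.1, (False,): 0.5},
    "Rain": {(True,): 0.8, (False,): 0.2},
    "WetGrass": {(True, True): 0.99, (True, False): 0.90,
                 (False, True): 0.90, (False, False): 0.00},
}
VARS = list(PARENTS)

def prob(node, value, state):
    """P(node = value | parents(node)) read from the CPT."""
    p_true = CPT[node][tuple(state[p] for p in PARENTS[node])]
    return p_true if value else 1.0 - p_true

def joint(state):
    """Full joint probability: product of P(x | parents(X)) over every node."""
    result = 1.0
    for node in VARS:
        result *= prob(node, state[node], state)
    return result

def mb_prob_true(var, state):
    """Markov blanket formula: alpha * P(x_i | parents) * prod_j P(y_j | parents(Y_j))."""
    kids = [n for n in VARS if var in PARENTS[n]]
    weights = []
    for value in (True, False):
        s = {**state, var: value}
        w = prob(var, value, s)
        for child in kids:
            w *= prob(child, s[child], s)
        weights.append(w)
    return weights[0] / sum(weights)

# Compare against conditioning the full joint on all other variables.
max_gap = 0.0
for values in product((True, False), repeat=len(VARS)):
    state = dict(zip(VARS, values))
    for var in VARS:
        t = joint({**state, var: True})
        f = joint({**state, var: False})
        if t + f == 0.0:  # the conditioning event has probability zero; skip it
            continue
        max_gap = max(max_gap, abs(t / (t + f) - mb_prob_true(var, state)))
print(f"largest difference: {max_gap:.1e}")  # floating-point noise only
```

Conditioning on all other variables is exactly what Gibbs sampling does in principle. The Markov blanket formula simply skips every factor that does not mention $X_i$, since those factors cancel in the normalization.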

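Markov Blanket Distribution

$$P(X_i \mid \mathit{mb}(X_i)) = \alpha\, P(X_i \mid \mathit{parents}(X_i)) \prod_{Y_j \in \mathit{Children}(X_i)} P(y_j \mid \mathit{parents}(Y_j))$$

For $X_i = \mathit{Cloudy}$, $\mathit{MB}(\mathit{Cloudy}) = \{\mathit{Sprinkler}, \mathit{Rain}\}$. Both are children of Cloudy, which has no parents. The sampling distribution is $P(\mathit{Cloudy} \mid \mathit{Sprinkler} = \mathit{true}, \mathit{Rain} = \mathit{false})$. Writing $c$, $s$, $r$ for Cloudy, Sprinkler, Rain $= \mathit{true}$:

$$P(c \mid s, \neg r) = \alpha\, P(c)\, P(s \mid c)\, P(\neg r \mid c) = \alpha \cdot 0.5 \cdot 0.1 \cdot 0.2$$

$$P(\neg c \mid s, \neg r) = \alpha\, P(\neg c)\, P(s \mid \neg c)\, P(\neg r \mid \neg c) = \alpha \cdot 0.5 \cdot 0.5 \cdot 0.8$$

$$P(\mathit{Cloudy} \mid s, \neg r) = \alpha \langle 0.01, 0.2 \rangle \approx \langle 0.048, 0.952 \rangle$$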
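
The normalization on this slide can be checked with exact rational arithmetic. Here is a minimal sketch using Python's standard `fractions` module, with the factors taken directly from the slide's products for Cloudy = true and Cloudy = false.

```python
from fractions import Fraction

# Unnormalized weights P(C) * P(s | C) * P(not r | C), factors as on the slide.
w_true = Fraction("0.5") * Fraction("0.1") * Fraction("0.2")   # 1/100
w_false = Fraction("0.5") * Fraction("0.5") * Fraction("0.8")  # 1/5
alpha = 1 / (w_true + w_false)        # normalizing constant, here 100/21
dist = (alpha * w_true, alpha * w_false)
print(dist)                                # (Fraction(1, 21), Fraction(20, 21))
print([round(float(p), 3) for p in dist])  # [0.048, 0.952]
```

The exact distribution is $\langle 1/21, 20/21 \rangle$, so $\alpha = 100/21$ here.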