Managing File System Consistency in Operating Systems

File systems in operating systems face challenges with crash consistency, especially during write operations. Issues arise when modified data in cache is lost due to system crashes or when data structure invariants are violated. Solutions like write-through and write-back caches help mitigate these

6 views • 14 slides

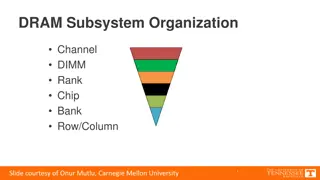

Understanding the Organization of DRAM Subsystem Components

Explore the intricate structure of the DRAM subsystem, including memory channels, DIMMs, ranks, chips, banks, and rows/columns. Delve into the breakdown of DIMMs, ranks, chips, and banks to comprehend the design and functioning of DRAM memory systems. Gain insights into address decoding, row/column

0 views • 16 slides

Computer Architecture: Understanding SRAM and DRAM Memory Technologies

In the field of computer architecture, SRAM and DRAM are two prevalent memory technologies with distinct characteristics. SRAM retains data as long as power is present, while DRAM is dynamic and requires data refreshing. SRAM is built with high-speed CMOS technology, whereas DRAM is more dense and b

3 views • 38 slides

High-Throughput True Random Number Generation Using QUAC-TRNG

DRAM-based QUAC-TRNG provides high-throughput and low-latency true random number generation by utilizing commodity DRAM devices. By employing Quadruple Row Activation (QUAC), this method outperforms existing TRNGs, achieving a 15.08x improvement in throughput and passing all 15 NIST randomness tests

0 views • 10 slides

SIMDRAM: An End-to-End Framework for Bit-Serial SIMD Processing Using DRAM

SIMDRAM introduces a novel framework for efficient computation in DRAM, aiming to overcome data movement bottlenecks. It emphasizes Processing-in-Memory (PIM) and Processing-using-Memory (PuM) paradigms to enhance processing capabilities within DRAM while minimizing architectural changes. The motiva

2 views • 14 slides

In-Depth Look at Pentium Processor Features

Explore the advanced features of the Pentium processor, including separate instruction and data caches, dual integer pipelines, superscalar execution, support for multitasking, and more. Learn about its 32-bit architecture, power management capabilities, internal error detection features, and the ef

0 views • 24 slides

Insights into DRAM Power Consumption and Design Concerns

A detailed experimental study reveals that DRAM power models may not provide accurate insights into power consumption. The increasing importance of managing DRAM power in system design is emphasized. The study delves into DRAM organization, operation, and power consumption patterns, highlighting the n

0 views • 43 slides

Constructive Computer Architecture Realistic Memories and Caches

Explore the realm of constructive computer architecture, realistic memories, and cache systems as presented by the Computer Science & Artificial Intelligence Lab at Massachusetts Institute of Technology. Delve into topics like 2-stage pipeline, magic memory models, and memory system views to gain in

0 views • 20 slides

Dram Shop Act and Premises Liability for Bar and Tavern Owners

Understanding the liabilities and responsibilities of bar and tavern owners under the Dram Shop Act based on the case of Build It and They Will Drink, Inc. v. Strauch. The act outlines exceptions where licensees can be held civilly liable for selling alcohol to minors or visibly intoxicated individu

0 views • 12 slides

Improving GPGPU Performance with Cooperative Thread Array Scheduling Techniques

Limited DRAM bandwidth poses a critical bottleneck in GPU performance, necessitating a comprehensive scheduling policy to reduce cache miss rates, enhance DRAM bandwidth, and improve latency hiding for GPUs. The CTA-aware scheduling techniques presented address these challenges by optimizing resourc

0 views • 33 slides

Understanding Parallelism and Vector Instructions in CMPT 295

Delve into the world of parallelism and vector instructions in CMPT 295 as you explore fixed-length vector intrinsics, RISC-V concepts, computer programming fundamentals, processor execution processes, scalar and vector loops, and more. Discover the intricacies of memory, data arrays, structs, integ

1 view • 45 slides

Exploring Caching Techniques in Web Systems

Dive into the world of web caching with concepts like consistent hashing, Bloom filters, and shared caches for enhanced performance and efficiency. Discover the challenges faced in managing large-scale caches and learn about innovative solutions and ideas in the field of web systems.

0 views • 21 slides

Enhancing Multi-Node Systems with Coherent DRAM Caches

Exploring the integration of Coherent DRAM Caches in multi-node systems to improve memory performance. Discusses the benefits, challenges, and potential performance improvements compared to existing memory-side cache solutions.

0 views • 28 slides

Enhancing Memory Cache Efficiency with DRAM Compression Techniques

Explore the challenges faced by Moore's Law in relation to bandwidth limitations and the innovative solutions such as 3D-DRAM caches and compressed memory systems. Discover how compressing DRAM caches can improve bandwidth and capacity, leading to enhanced performance in memory-intensive application

0 views • 48 slides

Memory Consistency Models and Sequential Consistency in Computer Architecture

Memory consistency models play a crucial role in ensuring proper synchronization and ordering of memory references in computer systems. Sequential consistency, introduced by Lamport in 1979, treats processors as interleaved processes on a shared CPU and requires all references to fit into a global o

1 view • 64 slides

Summer Fellows 2024: Dive into OSDF Caches and IP Geolocation Challenges

Explore the Summer Fellows 2024 program focusing on topics like Glideins, IP geolocation challenges, OSDF Caches, and the use of AI in OSPool Failure Classification. Participants delve into learning the GlideinWMS system and grappling with issues related to network latency, hops, and machine learnin

0 views • 64 slides

Architecting DRAM Caches for Low Latency and High Bandwidth

Addressing fundamental latency trade-offs in designing DRAM caches involves considerations such as memory stacking for improved latency and bandwidth, organizing large caches at cache-line granularity to minimize wasted space, and optimizing cache designs to reduce access latency. Challenges include

0 views • 32 slides

Understanding RowPress: A New Read Disturbance Phenomenon in Modern DRAM Chips

Demonstrates and analyzes RowPress, a novel read disturbance phenomenon that causes bitflips in DRAM chips. RowPress differs from the RowHammer vulnerability; the presentation demonstrates it on real Intel systems with DRAM chips and discusses effective solutions.

0 views • 46 slides

Managing DRAM Latency Divergence in Irregular GPGPU Applications

Addressing memory latency challenges in irregular GPGPU applications, this study explores techniques like warp-aware memory scheduling and GPU memory controller optimization to reduce DRAM latency divergence. The research delves into the impact of SIMD lanes, coalescers, and warp-aware scheduling on

0 views • 33 slides

Panopticon: Complete In-DRAM Rowhammer Mitigation

Despite extensive research, DRAM remains vulnerable to Rowhammer attacks. The Panopticon project proposes a novel in-DRAM mitigation technique using counter mats within DRAM devices. This approach does not require costly changes at multiple layers and leverages existing DRAM logic for efficient miti

0 views • 17 slides

Understanding Cache Memory Organization in Computer Systems

Exploring concepts such as set-associative cache, direct-mapped cache, fully-associative cache, and replacement policies in cache memory design. Delve into topics like generality of set-associative caches, block mapping in different cache architectures, hit rates, conflicts, and eviction strategies.

0 views • 35 slides

Understanding DRAM Errors: Implications for System Design

Exploring the nature of DRAM errors, this study delves into the causes, types, and implications for system design. From soft errors caused by cosmic rays to hard errors due to permanent hardware issues, the research examines error protection mechanisms and open questions surrounding DRAM errors. Pre

0 views • 31 slides

CSE351 Spring 2019 - Caches and Memory Concepts

Exploring the intricacies of caching in CSE351 Spring 2019, delving into memory management, data structures, assembly language, Java comparisons, and mnemonic aids. The course covers practical applications and theoretical underpinnings, including memory allocation, virtual memory, and processor cach

0 views • 31 slides

CSE351 Autumn 2017: Caches Instructor and TA Information

The content provides information about the CSE351 course on caches for Autumn 2017, including details about the instructor, teaching assistants, administrative updates, midterm policies, course roadmap, units and prefixes, mnemonic techniques, and execution time analysis. It also includes important

0 views • 31 slides

Understanding Caches and the Memory Hierarchy in Computer Systems

Delve into the intricacies of memory hierarchy and caches in computer systems, exploring concepts like cache organization, implementation choices, hardware optimizations, and software-managed caches. Discover the significance of memory distance from the CPU, the impact on hardware/software interface

0 views • 84 slides

Transparent Hardware Management of Stacked DRAM for Memory Systems

Explore the innovative use of stacked DRAM as Part of Memory (PoM) to increase overall memory capacity and eliminate duplication. The system involves OS-managed PoM, challenges, and the potential of hardware-managed PoM to reduce OS-related overhead. Learn about the practical implications and evalua

0 views • 24 slides

Challenges and Solutions in Memory Hierarchies for System Performance Growth

The evolution of memory scaling poses challenges for system performance growth due to limitations in memory hierarchy, capacity gaps, and DRAM scaling obstacles. The need for alternative technologies and architectural support to address these challenges is highlighted, focusing on reducing latency,

0 views • 23 slides

Understanding Latency Variation in Modern DRAM Chips

This research delves into the complexities of latency variation in modern DRAM chips, highlighting factors such as imperfect manufacturing processes and high standard latencies chosen to boost yield. The study aims to characterize latency variation, optimize DRAM performance, and develop mechanisms

0 views • 37 slides

Efficient Cache Management using The Dirty-Block Index

The Dirty-Block Index (DBI) is a solution to address inefficiencies in caches by removing dirty bits from cache tag stores, improving query response efficiency, and enabling various optimizations like DRAM-aware writeback. Its implementation leads to significant performance gains and cache area redu

0 views • 44 slides

Understanding Power Consumption in Memory-Intensive Databases

This collection of research delves into the power challenges faced by memory-intensive databases (MMDBs) and explores strategies for reducing DRAM power draw. Topics covered include the impact of hardware features on power consumption, experimental setups for analyzing power breakdown, and the effec

0 views • 13 slides

A Software Memory Partition Approach for Eliminating Bank-level Interference in Multicore Systems

Memory requests from different threads can interfere with one another in DRAM banks, degrading performance. The proposed solution partitions DRAM banks between threads to eliminate this interference, leading to improved performance and energy savings.

0 views • 32 slides

Proxies, Caches, and Scalable Software Architectures

Statelessness, proxies, and caches play key roles in creating scalable software architectures. The lecture explains the concepts of proxies and caches, highlighting their functions in enhancing performance and scalability. Proxies act as intermediaries for requests, while caches store frequently acc

0 views • 13 slides

Understanding Web Caching: An Overview

Web caching, implemented through various types of caches like browser cache, proxy cache, and gateway cache, plays a crucial role in improving content availability, reducing network congestion, and enhancing user experience by saving bandwidth and decreasing latency. It addresses the challenges pose

0 views • 27 slides

Enhancing DRAM Performance with ChargeCache: A Novel Approach

Reduce average DRAM access latency by leveraging row access locality with ChargeCache, a cost-effective solution requiring no modifications to existing DRAM chips. By tracking recently accessed rows and adjusting timing parameters, ChargeCache achieves higher performance and lower DRAM energy consum

0 views • 33 slides

Memory and Caches in CSE 351 Spring 2020: Insights and Roadmap

Exploring the world of memory and caches in CSE 351 Spring 2020 led by Instructor Ruth Anderson and her dedicated team of Teaching Assistants. Discover the essential topics covered such as data integers, x86 assembly, processes, and more. Dive into the nuances of memory allocation, Java implementati

0 views • 30 slides

Intelligent DRAM Cache Strategies for Bandwidth Optimization

Efficiently managing DRAM caches is crucial due to increasing memory demands and bandwidth limitations. Strategies like using DRAM as a cache, architectural considerations for large DRAM caches, and understanding replacement policies are explored in this study to enhance memory bandwidth and capacit

0 views • 23 slides

Enhancing Data Movement Efficiency in DRAM with Low-Cost Inter-Linked Subarrays (LISA)

This research focuses on improving bulk data movement efficiency within DRAM by introducing Low-Cost Inter-Linked Subarrays (LISA). By providing wide connectivity between subarrays, LISA enables fast inter-subarray data transfers, reducing latency and energy consumption. Key applications include fas

0 views • 49 slides

CLR-DRAM: Dynamic Capacity-Latency Trade-off Architecture

CLR-DRAM introduces a low-cost DRAM architecture that enables dynamic configuration for high capacity or low latency at the granularity of a row. By allowing a single DRAM row to switch between max-capacity and high-performance modes, it reduces key timing parameters, improves system performance, an

0 views • 42 slides

Locality-Aware Caching Policies for Hybrid Memories

Different memory technologies present unique strengths, and a hybrid memory system combining DRAM and PCM aims to leverage the best of both worlds. This research explores the challenge of data placement between these diverse memory devices, highlighting the use of row buffer locality as a key criter

0 views • 34 slides

Understanding the Impact of On-Die ECC on DRAM Error Characteristics

The BEER project explores how on-die ECC complicates DRAM reliability studies by concealing error characteristics. It aims to uncover the unique ECC function of DRAM chips and infer error locations in error-prone cells. The study highlights the challenges in identifying and correcting bit flips obfu

0 views • 17 slides