SIMDRAM: An End-to-End Framework for Bit-Serial SIMD Processing Using DRAM

SIMDRAM introduces a novel framework for efficient computation in DRAM, aiming to overcome data movement bottlenecks. It emphasizes Processing-in-Memory (PIM) and Processing-using-Memory (PuM) paradigms to enhance processing capabilities within DRAM while minimizing architectural changes. The motivation is to extend the applicability of PuM mechanisms by supporting complex operations and enabling flexibility for new operations without significant DRAM alterations.

Presentation Transcript

SIMDRAM: A Framework for Bit-Serial SIMD Processing using DRAM

Nastaran Hajinazar*, Geraldo F. Oliveira*, Sven Gregorio, João Ferreira, Nika Mansouri Ghiasi, Minesh Patel, Mohammed Alser, Saugata Ghose, Juan Gómez-Luna, Onur Mutlu

Data Movement Bottleneck

Data movement is a major bottleneck: more than 60% of the total system energy is spent on data movement [1].

[Figure: main memory (DRAM) connected to a computing unit (CPU, GPU, FPGA, accelerators) through a bandwidth-limited and power-hungry memory channel.]

[1] A. Boroumand et al., "Google Workloads for Consumer Devices: Mitigating Data Movement Bottlenecks," ASPLOS, 2018.

Processing-in-Memory (PIM)

Processing-in-Memory moves computation closer to where the data resides.
- Reduces or eliminates the need to move data between the processor and DRAM

[Figure: main memory (DRAM) connected to a computing unit (CPU, GPU, FPGA, accelerators) through the memory channel.]

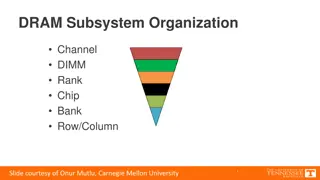

Processing-using-Memory (PuM)

PuM exploits the analog operation principles of the memory circuitry to perform computation.
- Leverages the large internal bandwidth and parallelism available inside the memory arrays

A common approach for PuM architectures is to perform bulk bitwise operations:
- Simple logical operations (e.g., AND, OR, XOR)
- More complex operations (e.g., addition, multiplication)

Motivation, Goal, and Key Idea

Existing PuM mechanisms are not widely applicable:
- Support only a limited and mainly basic set of operations
- Lack the flexibility to support new operations
- Require significant changes to the DRAM subarray

Goal: Design a PuM framework that
- Efficiently implements complex operations
- Provides the flexibility to support new desired operations
- Minimally changes the DRAM architecture

SIMDRAM: An end-to-end processing-using-DRAM framework that provides the programming interface, the ISA, and the hardware support for:
- Efficiently computing complex operations in DRAM
- Providing the ability to implement arbitrary operations as required
- Using an in-DRAM massively-parallel SIMD substrate that requires minimal changes to DRAM architecture

SIMDRAM: PuM Substrate

The SIMDRAM framework is built around a DRAM substrate that enables two techniques:

(1) Vertical data layout

[Figure: a 4-bit element stored vertically in a DRAM column, from the most significant bit (MSB) to the least significant bit (LSB), with rows selected by the row decoder.]

Pros compared to the conventional horizontal layout:
- Implicit shift operation
- Massive parallelism

(2) Majority-based computation

$C_{out} = AB + AC_{in} + BC_{in}$

[Figure: a MAJ gate with inputs A, B, and Cin producing Cout.]

Pros compared to AND/OR/NOT-based computation:
- Higher performance
- Higher throughput
- Lower energy consumption
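The two techniques above can be illustrated with a small software model. This is a minimal sketch, not the SIMDRAM implementation: each "row" is modeled as a Python integer whose bit j belongs to SIMD lane j, and the helper names (`to_vertical`, `from_vertical`, `bit_serial_add`) are hypothetical. The carry uses the slide's formula, Cout = MAJ(A, B, Cin); the sum uses the standard identity Sum = MAJ(NOT Cout, MAJ(A, B, NOT Cin), Cin), which is an assumption of this sketch rather than something stated on the slide.

```python
def to_vertical(values, width):
    """Transpose integers into `width` bit-rows (LSB first).
    Row i holds bit i of every element; bit j of a row belongs to lane j."""
    rows = []
    for i in range(width):
        row = 0
        for lane, v in enumerate(values):
            row |= ((v >> i) & 1) << lane
        rows.append(row)
    return rows


def from_vertical(rows, lanes):
    """Inverse of to_vertical: rebuild one integer per lane."""
    return [
        sum(((row >> lane) & 1) << i for i, row in enumerate(rows))
        for lane in range(lanes)
    ]


def maj(a, b, c):
    """Bitwise 3-input majority, applied to all lanes at once."""
    return (a & b) | (a & c) | (b & c)


def bit_serial_add(a_rows, b_rows, lanes):
    """Add two vertically laid-out operands one bit position at a time,
    using only MAJ and NOT. Returns (sum_rows, final_carry_row)."""
    mask = (1 << lanes) - 1          # keeps NOT confined to the modeled lanes
    carry = 0                        # Cin = 0 for every lane
    sum_rows = []
    for a, b in zip(a_rows, b_rows):
        cout = maj(a, b, carry)
        s = maj(~cout & mask, maj(a, b, ~carry & mask), carry)
        sum_rows.append(s)
        carry = cout                 # moving to the next row is the "implicit shift"
    return sum_rows, carry


# Four 4-bit lanes processed in parallel.
a_vals, b_vals = [3, 5, 15, 0], [1, 10, 1, 7]
sum_rows, carry_row = bit_serial_add(
    to_vertical(a_vals, 4), to_vertical(b_vals, 4), lanes=4
)
sums = from_vertical(sum_rows, 4)    # [4, 15, 0, 7]; lane 2 overflows
```

The loop runs once per bit position regardless of how many lanes there are, which is where the parallelism of the vertical layout comes from in this model.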

SIMDRAM Framework: Overview

[Figure: the three-step SIMDRAM flow.
- Step 1: Generate MAJ logic. User input: the desired operation as AND/OR/NOT logic. Output: MAJ/NOT logic.
- Step 2: Generate sequence of DRAM commands. SIMDRAM output: a new SIMDRAM μProgram (e.g., ACT/PRE, ACT/PRE, ACT/PRE, ACT/ACT/PRE, done) stored in main memory, and a new SIMDRAM instruction (bbop_new) added to the ISA.
- Step 3: Execution according to the μProgram. User input: a SIMDRAM-enabled application (e.g., foo () { bbop_new }). The control unit in the memory controller issues the μProgram. SIMDRAM output: instruction result in memory.]

SIMDRAM Framework: Overview (Step 1)

Step 1 builds an efficient MAJ/NOT representation of a given desired operation from its AND/OR/NOT-based implementation.
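To make the AND/OR/NOT to MAJ/NOT step concrete, the sketch below compares two MAJ/NOT versions of a 1-bit full adder. It is a hand-written illustration, not the framework's synthesis procedure: the naive version substitutes AND(a, b) = MAJ(a, b, 0) and OR(a, b) = MAJ(a, b, 1) gate by gate, while the compact version uses a known 3-MAJ formulation. All function names are hypothetical.

```python
from itertools import product


def maj(a, b, c):
    """3-input majority on single bits (0 or 1)."""
    return (a & b) | (a & c) | (b & c)


def not_(a):
    return 1 - a


# Gate-by-gate substitution: fixing one MAJ input to a constant
# turns it into AND (constant 0) or OR (constant 1).
def and_maj(a, b):
    return maj(a, b, 0)


def or_maj(a, b):
    return maj(a, b, 1)


def xor_maj(a, b):
    # XOR = (a OR b) AND NOT (a AND b): 3 MAJ gates and 1 NOT.
    return and_maj(or_maj(a, b), not_(and_maj(a, b)))


def full_adder_naive(a, b, cin):
    """Direct translation of an AND/OR/NOT full adder: 11 MAJ gates."""
    s = xor_maj(xor_maj(a, b), cin)
    cout = or_maj(or_maj(and_maj(a, b), and_maj(a, cin)), and_maj(b, cin))
    return s, cout


def full_adder_compact(a, b, cin):
    """Equivalent MAJ/NOT form using only 3 MAJ gates."""
    cout = maj(a, b, cin)
    s = maj(not_(cout), maj(a, b, not_(cin)), cin)
    return s, cout


# Exhaustively confirm that both forms compute the same function.
equivalent = all(
    full_adder_naive(a, b, c) == full_adder_compact(a, b, c)
    for a, b, c in product((0, 1), repeat=3)
)
```

In this toy comparison the compact form needs 3 MAJ gates where the naive substitution needs 11, which suggests why deriving a good MAJ/NOT representation matters when each MAJ maps to DRAM commands.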

SIMDRAM Framework: Overview (Step 2)

Step 2 allocates DRAM rows to the operation's inputs and outputs, and generates the sequence of DRAM commands (μProgram) to execute the desired operation.

SIMDRAM Framework: Overview (Step 3)

Step 3 executes the μProgram to perform the operation, using a control unit in the memory controller.
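Steps 2 and 3 can be pictured with a toy model of a μProgram and the loop that executes it. Everything here is an assumption made for illustration: the tuple encoding, the row names (`T0`, `T1`, `T2`, `OUT`), and the semantics attached to the command strings. The sketch assumes that an ACT/ACT/PRE sequence ("AAP") copies one row into another, and that an ACT/PRE on three compute rows ("AP") replaces all three with their bitwise majority, as in earlier bulk-bitwise in-DRAM proposals. The actual SIMDRAM command format is not shown in the slides.

```python
def maj(a, b, c):
    """Bitwise 3-input majority over whole rows (rows modeled as ints)."""
    return (a & b) | (a & c) | (b & c)


def gen_maj_uprogram(src_a, src_b, src_c, dst):
    """Step 2 (toy): allocate compute rows T0..T2 and emit commands that
    compute dst = MAJ(src_a, src_b, src_c) without clobbering the sources."""
    return [
        ("AAP", src_a, "T0"),        # copy operand rows into compute rows
        ("AAP", src_b, "T1"),
        ("AAP", src_c, "T2"),
        ("AP", "T0", "T1", "T2"),    # triple-row activation: in-place MAJ
        ("AAP", "T0", dst),          # copy the result to the output row
        ("DONE",),
    ]


def run_uprogram(uprogram, rows):
    """Step 3 (toy): a control-unit loop that issues each command in order."""
    for cmd in uprogram:
        op = cmd[0]
        if op == "AAP":
            _, src, dst = cmd
            rows[dst] = rows[src]
        elif op == "AP":
            _, r0, r1, r2 = cmd
            result = maj(rows[r0], rows[r1], rows[r2])
            rows[r0] = rows[r1] = rows[r2] = result   # destructive in this model
        elif op == "DONE":
            break
        else:
            raise ValueError(f"unknown command: {op}")
    return rows


rows = {"A": 0b1100, "B": 0b1010, "C": 0b0110}
run_uprogram(gen_maj_uprogram("A", "B", "C", "OUT"), rows)
```

The copies into `T0`..`T2` exist because the modeled majority operation overwrites its inputs; keeping dedicated compute rows leaves the operand rows `A`, `B`, and `C` intact.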

Key Results

Evaluated on:
- 16 complex in-DRAM operations
- 7 commonly-used real-world applications

SIMDRAM provides:
- 88× and 5.8× the throughput of a CPU and a high-end GPU, respectively, over 16 operations
- 257× and 31× the energy efficiency of a CPU and a high-end GPU, respectively, over 16 operations
- 21× and 2.1× the performance of a CPU and a high-end GPU, respectively, over seven real-world applications

Conclusion

SIMDRAM:
- Enables efficient computation of a flexible set and wide range of operations in a PuM massively parallel SIMD substrate
- Provides the hardware, programming, and ISA support to:
  - Address key system integration challenges
  - Allow programmers to define and employ new operations without hardware changes

More in the paper:
- Efficiently transposing data
- Programming interface
- Handling page faults, address translation, coherence, and interrupts
- Security implications
- Reliability evaluation
- Comparison to in-cache computing
- And more

SIMDRAM is a promising PuM framework:
- Can ease the adoption of processing-using-DRAM architectures
- Can improve the performance and efficiency of processing-using-DRAM architectures
