Understanding Data Quality Dimensions and Management Systems

Explore the various dimensions of data quality such as validity, reliability, timeliness, precision, and integrity in the context of M&E capacity strengthening workshops. Learn how data management systems ensure accurate and reliable data collection and reporting to support evidence-based programs. Discover the importance of data quality assurance and assessment in promoting accountability and informed decision-making.

Presentation Transcript

Dimensions of Data Quality. M&E Capacity Strengthening Workshop, Addis Ababa, 4 to 8 June 2012. Arif Rashid, TOPS.

Data Quality. Project implementation: Project activities are implemented in the field. These activities are designed to produce results that are quantifiable. Data management system (DMS): An information system represents these activities by collecting the results that were produced and mapping them to a recording system. Data quality: How well does the DMS represent the facts, that is, how closely do the data in the management system match the true picture of the field? Slide # 1

Why Data Quality? Program is evidence-based; data quality; data use; accountability. Slide # 2

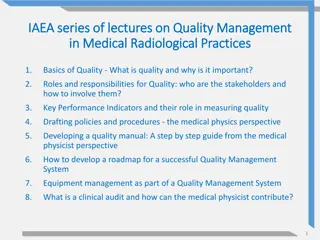

Conceptual Framework of Data Quality
Dimensions of data quality (quality data): validity, reliability, timeliness, precision, integrity.
Data management and reporting system: M&E unit in the country office; intermediate aggregation levels (e.g., districts, regions); service delivery points.
Functional components of data management systems needed to ensure data quality: M&E structures, roles and responsibilities; indicator definitions and reporting guidelines; data collection and reporting forms/tools; data management processes; data quality mechanisms; M&E capacity and system feedback.
Slide # 3

Dimensions of data quality. Validity: Valid or accurate data are considered correct. Valid data minimize error (e.g., recording or interviewer bias, transcription error, sampling error) to the point of being negligible. Reliability: Data generated by a project's information system are based on protocols and procedures. The data are objectively verifiable. The data are reliable because they are measured and collected consistently. Slide # 4

Dimensions of data quality. Precision: The data have sufficient detail. For example, an indicator requires the number of individuals who received training on integrated pest management, by sex. An information system lacks precision if it is not designed to record the sex of the individual who received training. Timeliness: Data are timely when they are up to date (current) and when the information is available on time. Integrity: Data have integrity when the system used to generate them is protected from deliberate bias or manipulation for political or personal reasons. Slide # 5
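The precision and timeliness definitions above can be turned into simple automated checks. The following is a minimal sketch, not part of the original presentation: the record layout, the field names (`participant_id`, `sex`, `reported_on`), the accepted sex codes, and the reporting deadline are all illustrative assumptions.

```python
from datetime import date

# Hypothetical training records; field names and values are assumptions.
records = [
    {"participant_id": "P001", "sex": "F", "reported_on": date(2012, 5, 28)},
    {"participant_id": "P002", "sex": None, "reported_on": date(2012, 5, 30)},
    {"participant_id": "P003", "sex": "M", "reported_on": date(2012, 6, 12)},
]

# Assumed reporting deadline for the period under review.
REPORTING_DEADLINE = date(2012, 6, 5)

# Assumed set of codes the (hypothetical) form uses for sex.
VALID_SEX_CODES = {"F", "M"}


def lacks_precision(record):
    """Return True if the record cannot support disaggregation by sex."""
    return record.get("sex") not in VALID_SEX_CODES


def is_late(record, deadline=REPORTING_DEADLINE):
    """Return True if the record was reported after the deadline."""
    return record["reported_on"] > deadline


imprecise_ids = [r["participant_id"] for r in records if lacks_precision(r)]
late_ids = [r["participant_id"] for r in records if is_late(r)]

print("Records missing sex:", imprecise_ids)
print("Records reported late:", late_ids)
```

In this sketch a record fails the precision check only because the sex field is missing or uses an unrecognized code; a real system would apply the disaggregation requirements of each indicator definition.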

Data Quality: Assurance and Assessment. Data Quality Assurance: A process for defining the appropriate dimensions and criteria of data quality, and procedures to ensure that data quality criteria are met over time. Data Quality Assessment: A review of the project M&E system to ensure that the quality of data captured by the M&E system is acceptable. Slide # 6

What's a Data Quality Assessment (DQA)? A data quality assessment is a periodic review that: helps Food for Peace and the implementing partner determine and document how good the data are; provides an opportunity for capacity-building of implementing partners. DQAs are required for all USAID data that are reported to the federal government; this is a US Government requirement. Slide # 7

Data Quality Assessments: project participants; managers; technicians; field staff; partners; local government; headquarters. Slide # 8

Components of DQA (1/2). 1. Assess four main dimensions of the data collection process: design; organizational structure; implementation practices; follow-up verification of reported data. Slide # 9

Components of DQA (2/2). 2. Systems assessment of data management and reporting: Are systems and practices in place to collect, aggregate, and analyze the appropriate information? Are these systems and practices being followed? 3. Verification of reported data for key indicators: spot checks to find non-sampling errors. Slide # 10
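One way to picture the verification of reported data is to recount values from source documents at a few sites and compare them with what was reported. The sketch below is an illustration under stated assumptions rather than the method prescribed in the presentation: the ratio of recounted to reported values, the 10 percent tolerance, and the site data are all hypothetical.

```python
def verification_ratio(recounted, reported):
    """Ratio of the value recounted from source documents to the reported value.

    Returns None when nothing was reported, since the ratio is undefined.
    """
    if reported == 0:
        return None
    return recounted / reported


def flag_discrepancies(sites, tolerance=0.10):
    """Return names of sites whose ratio deviates from 1.0 by more than
    the tolerance, or whose ratio cannot be computed."""
    flagged = []
    for site in sites:
        ratio = verification_ratio(site["recounted"], site["reported"])
        if ratio is None or abs(ratio - 1.0) > tolerance:
            flagged.append(site["name"])
    return flagged


# Hypothetical spot-check results for one indicator.
sites = [
    {"name": "Site A", "reported": 120, "recounted": 118},
    {"name": "Site B", "reported": 80, "recounted": 60},
    {"name": "Site C", "reported": 0, "recounted": 5},
]

flagged_sites = flag_discrepancies(sites)
print("Sites needing follow-up:", flagged_sites)
```

A ratio below 1.0 suggests over-reporting and a ratio above 1.0 suggests under-reporting; the tolerance would normally be set by the project's own DQA guidance.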

M&E Systems Assessment Tools
M&E structures, functions and capabilities
1. Are key M&E and data-management staff identified with clearly assigned responsibilities?
2. Have the majority of key M&E and data-management staff received the required training?
Indicator definitions and reporting guidelines
3. Are there operational indicator definitions meeting relevant standards that are systematically followed by all service points?
4. Has the project clearly documented what is reported to whom, and how and when reporting is required?
Data collection and reporting forms/tools
5. Are there standard data-collection and reporting forms that are systematically used?
6. Are data recorded with sufficient precision/detail to measure relevant indicators?
7. Are source documents kept and made available in accordance with a written policy?
Slide # 11

M&E Systems Assessment Tools
Data management processes
8. Does clear documentation of collection, aggregation and manipulation steps exist?
9. Are data quality challenges identified and are mechanisms in place for addressing them?
10. Are there clearly defined and followed procedures to identify and reconcile discrepancies in reports?
11. Are there clearly defined and followed procedures to periodically verify source data?
M&E capacity and system feedback
12. Do M&E staff have a clear understanding of their roles and of how data collection and analysis fit into overall program quality?
13. Do M&E staff have a clear understanding of the PMP, IPTT and M&E Plan?
14. Do M&E staff have the required skills in data collection, aggregation, analysis, interpretation and reporting?
15. Is there a clearly defined feedback mechanism to improve data and system quality?
Slide # 12

Schematic of follow-up verification Slide # 13

Practical DQA Tips: Build assessment into normal work processes. Use software checks and edits of data on computer systems. Get feedback from users of the data. Compare the data with data from other sources. Obtain verification by independent parties. Slide # 14
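Two of the tips above, software checks on entered data and comparison with other sources, lend themselves to a short illustration. This is a hedged sketch only: the household survey rows, the field names (`household_id`, `household_size`), the plausible range of 1 to 30, and the comparison totals are assumptions made for the example.

```python
from collections import Counter


def find_duplicate_ids(rows):
    """Return IDs that appear more than once (possible double entry)."""
    counts = Counter(row["household_id"] for row in rows)
    return sorted(hid for hid, n in counts.items() if n > 1)


def out_of_range(rows, field, low, high):
    """Return IDs of rows whose value for `field` falls outside [low, high]."""
    return [row["household_id"] for row in rows if not (low <= row[field] <= high)]


def relative_difference(own_total, other_total):
    """Relative difference between the project's total and another source's.

    Returns None when the other source's total is zero.
    """
    if other_total == 0:
        return None
    return (own_total - other_total) / other_total


# Hypothetical data-entry rows.
rows = [
    {"household_id": "H1", "household_size": 5},
    {"household_id": "H2", "household_size": 48},
    {"household_id": "H1", "household_size": 5},
]

duplicates = find_duplicate_ids(rows)
implausible = out_of_range(rows, "household_size", 1, 30)
difference = relative_difference(110, 100)

print("Duplicate IDs:", duplicates)
print("Implausible household sizes:", implausible)
print("Relative difference vs. other source:", difference)
```

Checks like these flag records for human review; they do not decide which value is correct, so flagged items still need to be traced back to the source documents.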

DQA realities! The general principle is that performance data should be as complete, accurate and consistent as management needs and resources permit. Consequently, DQAs are not intended to be overly burdensome or time-intensive. Slide # 15

M&E system design for data quality. Appropriate design of the M&E system is necessary to comply with both aspects of DQA. Ensure that all dimensions of data quality are incorporated into the M&E design. Ensure that all processes and data management operations are implemented and fully documented (ensure a comprehensive paper trail to facilitate follow-up verification). Slide # 16

This presentation was made possible by the generous support of the American people through the United States Agency for International Development (USAID). The contents are the responsibility of Save the Children and do not necessarily reflect the views of USAID or the United States Government.