Understanding Linux Process Scheduling and Priorities

Delve into the intricacies of process scheduling in Linux systems, covering topics such as task prioritization, process states, scheduler decisions, and important scheduling scenarios. Learn about traditional scheduling concerns like throughput and latency, as well as different types of workloads such as compute-bound and real-time tasks.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. Download presentation by click this link. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

E N D

Presentation Transcript

Scheduling of Regular Tasks in Linux David Ferry, Chris Gill, Brian Kocoloski, James Orr CSE 422S - Operating Systems Organization Washington University in St. Louis St. Louis, MO 63143 1

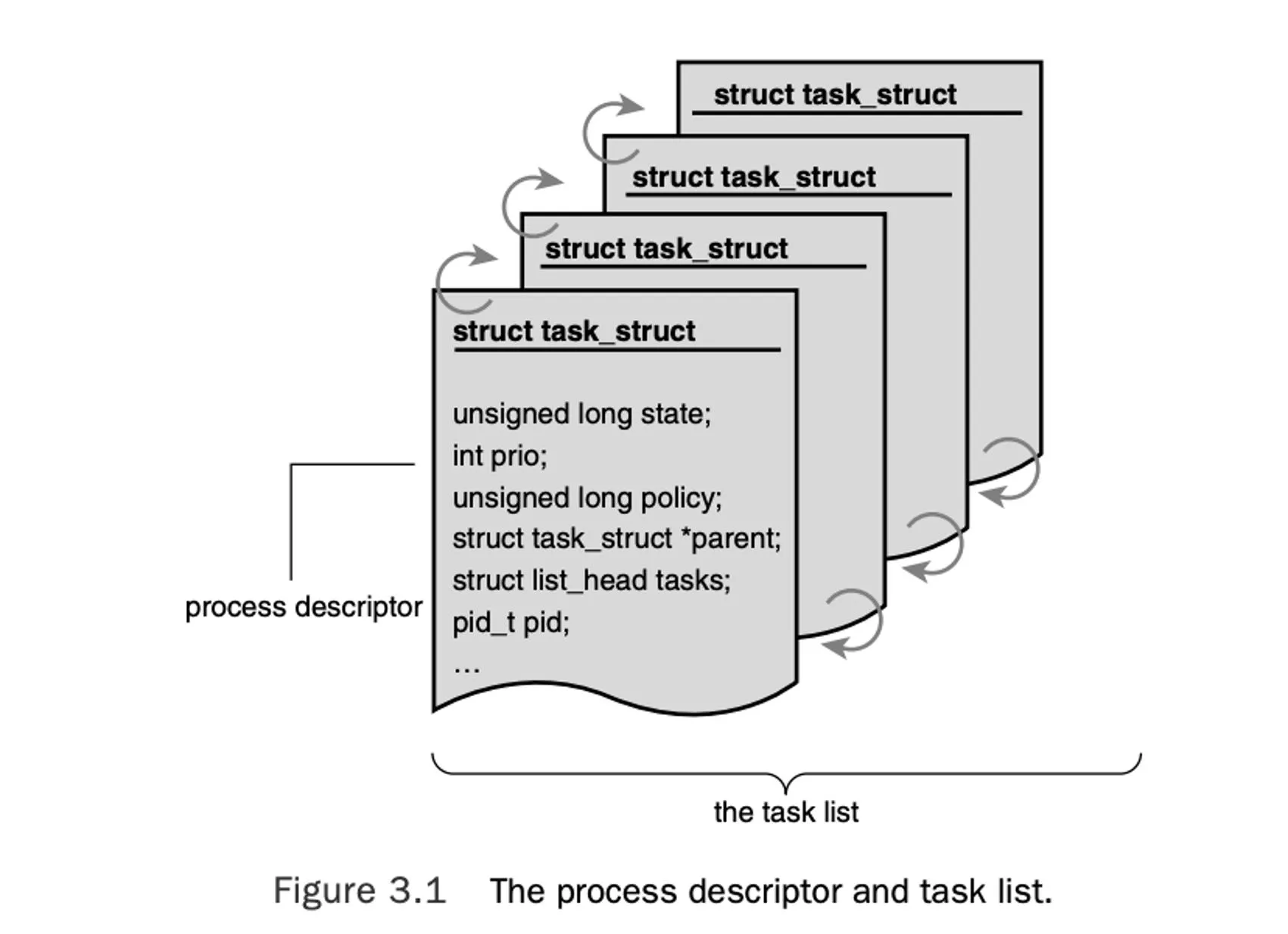

Processes in the kernel: struct task_struct CSE 422S Operating Systems Organization 2

Possible states of a process CSE 422S Operating Systems Organization 3

Traditional Scheduling Concerns Throughput: Maximize tasks finished per time Latency: Minimize time between creation and completion Response time: Minimize time between wakeup and execution Starvation: All tasks guaranteed some processor time Fairness: All tasks given equal processor time Overhead: Multicore scalability, efficiency A scheduler must compromise! 4 CSE 422S Operating Systems Organization

Important Scheduling Scenarios Compute Bound (e.g. while(1) ) Tries to keep the cache hot I/O Bound (e.g. always waits for keyboard) Tries to respond as quickly as possible Server Tries to minimize backlog of requests (throughput & latency) Desktop Tries to maximize interactivity (like I/O bound case) But must also handle heterogeneous workloads, multiple devices Real-time Tries to make response times predictable Must guarantee that critical tasks complete by their deadlines 5 CSE 422S Operating Systems Organization

What a Scheduler Must Decide Which task should run next? How long should it run? 6 CSE 422S Operating Systems Organization

Normal Task Priorities Based on niceness levels Levels range from [-20, 19], default is 0 More nice => Lower Priority (higher) Less nice => Higher priority (lower) Can be adjusted heuristically for interactive and CPU bound tasks 7 CSE 422S Operating Systems Organization

O(1) Scheduler Fundamental idea: Map nice values to fixed timeslices E.g., 0: 100 ms 1: 95 ms 2: 90 ms When tasks exhaust their timeslice they move to expired array, if blocking they stay active When active array is empty we pointer swap When a task uses its timeslice, it moves to an expired array Next task to run: remaining task with highest priority When all tasks have run, the expired array becomes active, start again from the front 8 CSE 422S Operating Systems Organization

Simple Example Linux characterizes tasks as either compute bound or I/O bound Compute bound Makes heavy use of the processor, non-interactive, does not care about latency I/O bound Makes only sporadic use of the processor, reads/writes storage/network data, or waits for user input; cares about latency Example (LKD pp 45) App 1: text editor (I/O bound) App 2: video encoder (compute bound) 9 CSE 422S Operating Systems Organization

Problems with O(1) Scheduler Recall O(1) philosophy: fixed timeslices for different priority levels What would timeslice allocations be for: One video encoder (nice 19) and one text editor (nice 0)? Two video encoder tasks? Two text editor tasks? 10 CSE 422S Operating Systems Organization

Problems with O(1) Scheduler Inverted switching rates High priority (low nice value) tasks are generally interactive, I/O intensive Low priority (high nice value) tasks are generally compute bound Further consider two low priority processes they will switch every 5 ms Two high priority processes they will switch every 100 ms Additional problems? Variance across nice intervals Nice values of 0,1 get timeslices of 100,95 ms (5% decrease) Nice values of 18,19 get timesliices of 10,5 ms (50% decrease) Need absolute timeslices, limited by HW capability 11 CSE 422S Operating Systems Organization

Completely Fair Scheduler (CFS) Goal: All tasks receive a weighted proportion of processor time. On a system with N tasks, each task should be promised 1/N processor time i.e. completely fair Allows interactive tasks to run at high priority while sharing CPU equally between CPU bound tasks. Does not require OS to determine a priori if a process is I/O or CPU bound Fundamental idea: Abandons notion of fixed timeslice (and varying fairness), for fixed fairness (and varying timeslice) 12 CSE 422S Operating Systems Organization

Timeslice Calculation Scheduling period is a period of time in which each thread/process on a CPU is guaranteed to be scheduld at least once Nice values are transformed into a weight and each task receives a timeslice according to its weight Timeslice = (scheduling period)*(Weight of task)/(Weight of all tasks) Images: https://helix979.github.io/jkoo/post/os-scheduler/ CSE 422S Operating Systems Organization 13

Virtual Runtime Virtual runtime: the actual running time of a process weighted by its priority, stored as nanoseconds value virtual runtime=(actual runtime) 1024/weight. If all tasks have nice priority 0, their virtual runtime is equal to their actual runtime Updated in update_curr() in fair.c 14 CSE 422S Operating Systems Organization

Same example, but with CFS Consider our video encoder and text editor once again Now, rather than fixed timeslices, we need a target latency a single absolute value that reflects how responsive the system should feel e.g. 20 ms Assume nice values of 0 and 20 This works out to about 95% of the processor for nice 0 and 5% of the processor for nice 20 So, timeslices would be 19 ms and 1 ms What about two text editors? Two video encoders? 15 CSE 422S Operating Systems Organization

CFS Example Consider a video encoder and a text editor Video encoder Entitled proportion: 50% Text editor Entitled proportion:50% Used Unused Over-use Actual proportion: 95% Has low priority. Actual proportion: 5% Has high priority when it wants to run. 16 CSE 422S Operating Systems Organization

CFS Run Queue Implementation Need to pick the task with shortest virtual runtime Is there an efficient data structure that allows us to always pick the lowest value (each time)? Need to consider the cost (and frequency) of operations needed to maintain the data structure s invariants, as well as the cost of picking 17 CSE 422S Operating Systems Organization

Red-Black Binary Tree 18 CSE 422S Operating Systems Organization

Todays Studio Monitor the CFS scheduler with user-level workloads and priorities on your Raspberry Pis Gain experience with cpu pinning, priority setting, and performance monitoring 19 CSE 422S Operating Systems Organization