Understanding OpenMP Programming on NUMA Architectures

In NUMA architectures, data placement and thread binding significantly impact application performance. OpenMP plays a crucial role in managing thread creation/termination and variable sharing in parallel regions. Programmers must consider NUMA architecture when optimizing for performance. This involves understanding memory distribution, CPU layout, and relative latencies within NUMA nodes on Linux systems. Utilizing tools like lscpu, numactl, and hwloc-ls can provide valuable insights for efficiently exploiting NUMA architectures in OpenMP programming.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. Download presentation by click this link. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

E N D

Presentation Transcript

OpenMP programming on NUMA architectures In a NUMA machine, data placement and thread binding will have impact on the performance of an application oOpenMP data allocation oOpenMP thread affinity

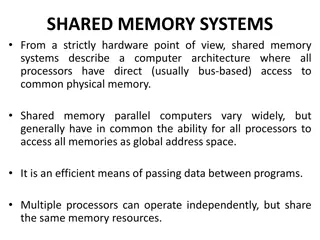

NUMA Shared memory architecture Current SMP systems adopt mostly NUMA shared memory architecture. Memory resides in NUMA domains. oAlthough memory are addressed with a global address space, accessing different parts of memory can result in different latency and bandwidth. oLook at AMD s EPYC architecture to see why. o Local memory offers higher bandwidth and lower latency than remote memory .

NUMA Shared memory architecture With OpenMP taking care of thread creation/termination, the programmer specifies the following o The parallel region o whether each variable is shared or private. Programmer must also make sure that the program does not have race condition! Does a programmer need to worry about the NUMA architecture? Before we develop a strategy for exploiting NUMA, let us first look at how to get NUMA information of your computer.

Getting CPU and NUMA information in Linux <linprog1:38> lscpu Architecture: x86_64 CPU op-mode(s): 32-bit, 64-bit Byte Order: Little Endian CPU(s): 56 On-line CPU(s) list: 0-55 Thread(s) per core: 2 Core(s) per socket: 14 Socket(s): 2 NUMA node(s): 2 Vendor ID: GenuineIntel CPU family: 6 Model: 63 Model name: Intel(R) Xeon(R) CPU E5-2683 v3 @ 2.00GHz Hyperthreading on, 2 threads per core, 2*14*2=56 threads per node 14 physical cores per processor 2 processors

Getting CPU and NUMA information in Linux <linprog1:39> numactl --hardware available: 2 nodes (0-1) node 0 cpus: 0 2 4 6 8 10 12 14 16 18 20 22 24 26 28 30 32 34 36 38 40 42 44 46 48 50 52 54 node 0 size: 128669 MB node 0 free: 106564 MB node 1 cpus: 1 3 5 7 9 11 13 15 17 19 21 23 25 27 29 31 33 35 37 39 41 43 45 47 49 51 53 55 node 1 size: 129019 MB node 1 free: 113990 MB node distances: node 0 1 0: 10 21 1: 21 10 Logical cores enabled by hyperthreading Available memory on NUMA node Relative latency for memory references

Getting CPU and NUMA information in Linux Another useful tool: hwloc-ls o Gives more detailed NUMA information.

Impact of NUMA on performance of shared memory programming Where (which part of the memory) variables reside will have an impact on performance. o May need to know and control which part of the memory a variable resides? Where (which core) does a thread run on will have an impact on performance. oMay need to know and control which core a thread runs on? To achieve high performance on a NUMA architecture, the programmer may need to take these into consideration.

Where (which NUMA domain) arrays are allocated? This is determined by the OS. Most OS has a default first touch policy: the first core that touches the memory will get the memory in its NUMA domain. #pragma omp parallel num_threads(2) For (i=0; i<100000; i++) A[i] = 0.0; For (i=0; i<100000; i++) A[i] = 0.0; A[50000..99999] A[0..49999] A[0..99999]

Where (which NUMA domain) arrays are allocated? First touch policy: the first core that touches the memory will get the memory in its NUMA domain. o Parallel initialization or sequence initialization can make a big difference. o It is unclear which one is better. This depends on many factors -- reasoning about memory performance is complicated. oSee lect12/vector_omp.c for example (OMP_NUM_THREADS=2).

Binding threads to cores Controlling where each thread runs is also important in a NUMA machine. Different strategies can be used to bind a thread to a core The most efficient binding depends on many factors, the NUMA topology, how the data is placed, and the application characteristics (data access patterns). In general, threads may be put close to one another or apart from one another (on different sockets).

Binding strategy Putting threads far apart, i.e. on different sockets o May improve aggregated memory bandwidth to the application o May improve the combined cache size available to the application o May decrease performance of synchronization operations Putting threads close together, i.e. on adjacent cores that potentially share caches o May improve the performance of synchronization operations o May decrease the aggregated memory bandwidth to the application. No clean-cut winner.

OpenMPs control over binding OMP_PLACES and OMP_PROC_BIND oSupported in OpenMP 4.0 and above. oOn linprog /usr/bin/gcc has OpenMP 3.0. You can use /usr/local/gcc/6.3.0/bin/gcc to get OpenMP 4.5. A list of places can be specified in OMP_PLACES. The place-partition- var ICV , obtains its initial value from the OMP_PLACES value, and makes the list available to the execution environment. o threads: each place corresponds to a hardware thread o cores: each place corresponds to a hardware core o sockets: each place corresponds to a single socket o the OMP_PLACES value can also explicitly enumerate comma separated lists.

OMP_PLACES examples setenv OMP_PLACES threads setenv OMP_PLACES cores setenv OMP_PLACES sockets setenv OMP_PLACES "{0,1,2,3},{4,5,6,7},{8,9,10,11},{12,13,14,15}" setenv OMP_PLACES "{0:4},{4:4},{8:4},{12:4}" setenv OMP_PLACES "{0:4}:4:4"

OMP_PROC_BIND The value of OMP_PROC_BIND is either true, false, or a comma separated list of master, close, or spread. o If the value is false, then thread binding is disable. Threads may be moved to any places o Otherwise, thread binding is enabled. Threads are not moved from one place to another. o master: assign every thread in the team to the same place as the master thread. o close : assign the threads in the team to places close to the place of the parent thread. o spread: spread threads among places.

OpenMP thread affinity examples From https://pages.tacc.utexas.edu/~eijkhout/pcse/html/omp- affinity.html Example 1: The machine has two sockets and OMP_PLACES=sockets o thread 0 goes to socket 0 o thread 1 goes to socket 1 o thread 2 goes to socket 0 o and so on.

OpenMP thread affinity examples Example 2: The machine has two sockets and 16 cores. OMP_PLACES=cores and OMP_PROC_BIND=close o thread 0 goes to core 0 in socket 0 o thread 1 goes to core 1 in socket 0 o thread 2 goes to core 2 in socket 0 o o thread 7 goes to core 7 in socket 0 o thread 8 goes to core 8 in socket 1 o and so on

OpenMP thread affinity examples Example 3: The machine has two sockets and 16 cores. OMP_PLACES=cores and OMP_PROC_BIND=spread o thread 0 goes to core 0 in socket 0 o thread 1 goes to core 8 in socket 1 o thread 2 goes to core 1 in socket 0 o thread 3 goes to cores 9 in socket 1 o and so on Similar to OMP_PLACES=sockets, but bind threads to specific cores.

Summary First touch memory placement. OpenMP supports thread affinity control with places and binding. Reasoning about performance at this level is hard. o You should try multiple thread affinity schemes to decide a good strategy.