Parallel Implementations of Chi-Square Test for Feature Selection

The chi-square test is an effective method for feature selection with categorical data and classification labels. It helps rank features based on their chi-square values or p-values, indicating importance. Parallel processing techniques, such as GPU implementation in CUDA, can significantly speed up the computation by evaluating chi-square values for multiple features simultaneously. This approach enhances efficiency in data analysis and model building.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

Parallel chi-square test Usman Roshan

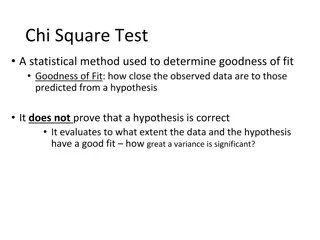

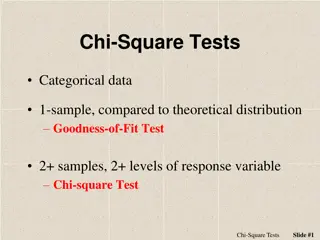

Chi-square test The chi-square test is a popular feature selection method when we have categorical data and classification labels as opposed to regression In a feature selection context we would apply the chi-square test to each feature and rank them chi-square values (or p-values) A parallel solution is to calculate chi-square for all features in parallel at the same time as opposed to one at a time if done serially

Chi-square test Contingency table We have two random variables: Label (L): 0 or 1 Feature (F): Categorical Null hypothesis: the two variables are independent of each other (unrelated) Under independence P(L,F)= P(D)P(G) P(L=0) = (c1+c2)/n P(F=A) = (c1+c3)/n Expected values E(X1) = P(L=0)P(F=A)n We can calculate the chi-square statistic for a given feature and the probability that it is independent of the label (using the p-value). Features with very small probabilities deviate significantly from the independence assumption and therefore considered important. Feature=A Feature=B Label=0 Observed=c1 Expected=X1 Observed=c2 Expected=X2 Label=1 Observed=c3 Expected=X3 Observed=c4 Expected=X4 (ci- xi)2 xi d-1 c2= i=0

Parallel GPU implementation of chi- square test in CUDA The key here is to organize the data to enable coalescent memory access We define a kernel function that computes the chi- square value for a given feature The CUDA architecture automatically distributes the kernel across different GPU cores to be processed simultaneously.