Introduction to Thrust Parallel Algorithms Library

Thrust is a high-level parallel algorithms library that provides a performance-portable abstraction layer for programming with CUDA. It is easy to use, is distributed with the CUDA Toolkit, and offers features such as host_vector, device_vector, algorithm selection, and memory management. With a large set of al

0 views • 18 slides

Parallel Chi-square Test for Feature Selection in Categorical Data

The chi-square test is a popular method for feature selection in categorical data with classification labels. By calculating chi-square values in parallel for all features simultaneously, this approach provides a more efficient solution compared to serial computation. The process involves creating c

1 view • 4 slides

Parallel Implementations of Chi-Square Test for Feature Selection

The chi-square test is an effective method for feature selection with categorical data and classification labels. It helps rank features based on their chi-square values or p-values, indicating importance. Parallel processing techniques, such as GPU implementation in CUDA, can significantly speed up

0 views • 4 slides

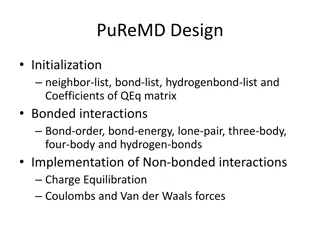

PuReMD Design - Initialization, Interactions, and Experimental Results

The PuReMD design involves initializing neighbor lists, bond lists, hydrogen bond lists, and the coefficients of the QEq matrix for bonded interactions. It also implements non-bonded interactions such as charge equilibration, Coulomb forces, and van der Waals forces. The process includes the generati

0 views • 23 slides

Communication Costs in Distributed Sparse Tensor Factorization on Multi-GPU Systems

This research paper evaluates communication costs for distributed sparse tensor factorization on multi-GPU systems. It discusses the background of tensors, tensor factorization methods such as CP-ALS, and communication requirements in RefacTo. The motivation highlighted the dominance o

0 views • 34 slides

Update on Tools Integration, Measurement, and Modeling

TAU, a performance analysis framework, is being ported to ARM64 Linux and Power 8 Linux environments with updated instrumentation features. It offers measurement sampling support and integrates with various libraries for efficient performance tracking. Additionally, the TAU interface enables energy

0 views • 22 slides

OpenACC Compiler for CUDA: A Source-to-Source Implementation

This open-source OpenACC compiler for NVIDIA GPUs takes a source-to-source approach, which allows detailed machine-specific optimizations through the mature CUDA compiler. It targets the C language and leverages the CUDA API, facilitating the generation of executable files.

0 views • 28 slides

Fast Noncontiguous GPU Data Movement in Hybrid MPI+GPU Environments

This research focuses on enabling fast, efficient noncontiguous data movement between GPUs in hybrid MPI+GPU environments. The study explores techniques such as MPI derived datatypes to facilitate noncontiguous message passing and improve communication performance in GPU-accelerated systems. By

0 views • 18 slides

Parallelism and Synchronization in CUDA Programming

This CS.179 lecture focuses on parallelism, synchronization, matrix transpose, profiling, and using AWS clusters in CUDA programming. The content covers ideal cases for parallelism, synchronization examples, atomic instructions, and warp-synchronous programming in GPU computing. It

0 views • 29 slides

Emerging Trends in Bioinformatics: Leveraging CUDA and GPGPU

Today, the intersection of science and technology drives advancements in bioinformatics, enabling the analysis and visualization of vast data sets. With the utilization of CUDA programming and GPGPU technology, researchers can tackle complex problems efficiently. Massive multithreading and CUDA memo

0 views • 32 slides