CH. 4: Parametric Methods

Parametric methods assume that the data follow a known functional form, such as a distribution function or a regression function, defined up to a set of parameters. This chapter covers how to estimate those parameters from given samples and how to use them for classification. Maximum likelihood estimation and Bayesian estimation are the two key approaches to parameter estimation.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

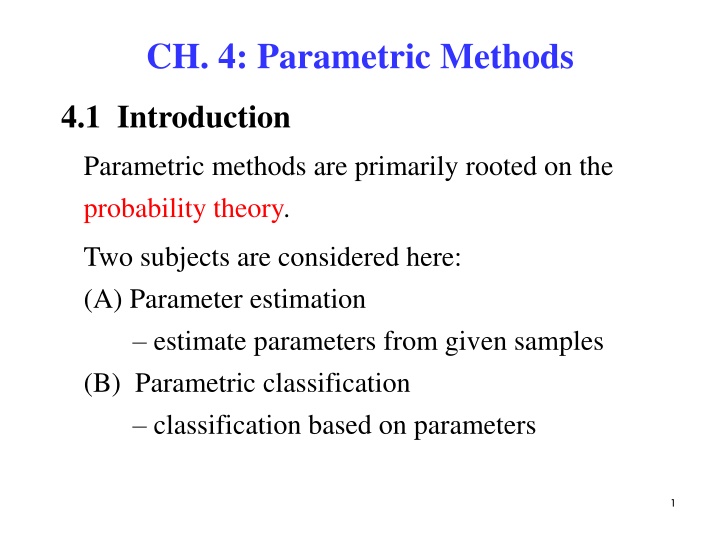

4.1 Introduction

Parametric methods are rooted primarily in probability theory. Two subjects are considered here:
(A) Parameter estimation: estimate parameters from given samples.
(B) Parametric classification: classify based on the estimated parameters.

4.2 Parameter Estimation

Parameter estimation assumes a known function defined up to a set of parameters. Two kinds of functions are considered:
(1) Distribution functions
(2) Regression functions

Ex. 1: Gaussian distribution
$$p(x) = \frac{1}{\sqrt{2\pi}\,\sigma}\exp\left[-\frac{(x-\mu)^2}{2\sigma^2}\right]$$
Parameters: $\theta = (\mu, \sigma^2)^T$. Once $\theta$ is estimated from the sample, the whole of $p(x)$ is known.

Ex. 2: Linear regression function
$$g(x) = w_1 x + w_0, \qquad \text{Parameters: } \theta = (w_0, w_1)^T$$
Quadratic regression function
$$g(x) = w_2 x^2 + w_1 x + w_0, \qquad \text{Parameters: } \theta = (w_0, w_1, w_2)^T$$

4.2.1 Distribution Functions

Two methods of parameter estimation:
(a) Maximum likelihood approach
(b) Bayesian approach

(A) Maximum Likelihood Estimation (MLE)

Given a training sample, choose the value of a parameter that maximizes the likelihood of the parameter, i.e., the probability of obtaining the sample.

Let $X = \{x^t\}_{t=1}^N$ be a sample drawn from $p(x \mid \theta)$. Estimate the $\theta^*$ that makes sampling $X$ from $p(x \mid \theta^*)$ as likely as possible, i.e., the maximum likelihood estimate.

The likelihood $l(\theta \mid X)$ of $\theta$ given a sample $X$ is the probability $p(X \mid \theta)$ of obtaining $X$. For independently drawn examples, this is the product of the probabilities of obtaining the individual examples:
$$l(\theta \mid X) = p(X \mid \theta) = \prod_{t=1}^N p(x^t \mid \theta)$$
Log-likelihood:
$$L(\theta \mid X) = \log l(\theta \mid X) = \sum_{t=1}^N \log p(x^t \mid \theta)$$
Maximum likelihood estimator:
$$\theta^* = \arg\max_{\theta} L(\theta \mid X)$$

A.1 Bernoulli Distribution

The random variable $X$ takes values $x \in \{0, 1\}$.

Probability function: $P(x) = p^x (1-p)^{1-x}$. Here $p$ is the only parameter to be estimated, i.e., $\theta = p$. Given a sample $X = \{x^t\}_{t=1}^N$, the log-likelihood of $p$ is
$$L(p \mid X) = \log \prod_{t=1}^N p^{x^t}(1-p)^{1-x^t}$$
$$= \log\left[p^{x^1}(1-p)^{1-x^1}\, p^{x^2}(1-p)^{1-x^2} \cdots p^{x^N}(1-p)^{1-x^N}\right]$$
$$= \log\left[p^{\sum_t x^t}(1-p)^{N - \sum_t x^t}\right]$$
$$= \left(\sum_t x^t\right)\log p + \left(N - \sum_t x^t\right)\log(1-p)$$

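The maximum likelihood estimate (MLE) of $p$: let $dL/dp = 0$.
$$\frac{dL}{dp} = \frac{d}{dp}\left[\left(\sum_t x^t\right)\log p + \left(N - \sum_t x^t\right)\log(1-p)\right] = \frac{\sum_t x^t}{p} - \frac{N - \sum_t x^t}{1-p} = \frac{(1-p)\sum_t x^t - p\left(N - \sum_t x^t\right)}{p(1-p)} = 0$$
$$\Rightarrow (1-p)\sum_t x^t - p\left(N - \sum_t x^t\right) = 0 \;\Rightarrow\; \sum_t x^t - pN = 0$$
$$\Rightarrow \hat{p} = \frac{\sum_t x^t}{N}$$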
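The closed form above can be checked numerically. This is a minimal sketch that assumes an illustrative 0/1 sample, which is not from the source. It evaluates the Bernoulli log-likelihood $L(p \mid X)$ on a grid of $p$ values and confirms that the grid maximum matches $\sum_t x^t / N$. The function names are hypothetical.

```python
import math

def bernoulli_log_likelihood(p, xs):
    """L(p | X) = (sum_t x^t) log p + (N - sum_t x^t) log(1 - p)."""
    s, n = sum(xs), len(xs)
    return s * math.log(p) + (n - s) * math.log(1 - p)

def bernoulli_mle(xs):
    """Closed-form MLE derived above: p_hat = sum_t x^t / N."""
    return sum(xs) / len(xs)

xs = [1, 0, 1, 1, 0, 1, 0, 1]   # illustrative sample: 5 ones out of 8
p_hat = bernoulli_mle(xs)        # 5 / 8 = 0.625

# Coarse grid search over p in (0, 1), excluding the endpoints where log blows up.
grid = [i / 1000 for i in range(1, 1000)]
p_grid = max(grid, key=lambda p: bernoulli_log_likelihood(p, xs))
```

The log-likelihood is concave in $p$, so the single grid peak coincides with the stationary point found by setting $dL/dp = 0$.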

A.2 Binomial Distribution

In binomial trials, $N$ identical, independent Bernoulli (0/1) trials are conducted. The random variable $X$ represents the number of 1s. Probability function:
$$P(x) = \binom{N}{x} p^x (1-p)^{N-x}$$
Here $p$ is the only parameter to be estimated, i.e., $\theta = p$. Given a sample $X = \{x^t\}_{t=1}^n$, derive the maximum likelihood estimate of $p$.

The log-likelihood of $p$:
$$L(p \mid X) = \log \prod_{t=1}^n \binom{N}{x^t} p^{x^t}(1-p)^{N-x^t}$$
$$= \log\left[\binom{N}{x^1}\cdots\binom{N}{x^n}\; p^{x^1}(1-p)^{N-x^1} \cdots p^{x^n}(1-p)^{N-x^n}\right]$$
$$= \log\left[c\, p^{\sum_t x^t}(1-p)^{Nn - \sum_t x^t}\right], \qquad \text{where } c = \prod_{t=1}^n \binom{N}{x^t}$$
$$= \log c + \left(\sum_t x^t\right)\log p + \left(Nn - \sum_t x^t\right)\log(1-p)$$
The maximum likelihood estimate (MLE) of $p$: let $dL/dp = 0$.

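$$\frac{dL}{dp} = \frac{d}{dp}\left[\log c + \left(\sum_t x^t\right)\log p + \left(Nn - \sum_t x^t\right)\log(1-p)\right] = \frac{\sum_t x^t}{p} - \frac{Nn - \sum_t x^t}{1-p} = \frac{(1-p)\sum_t x^t - p\left(Nn - \sum_t x^t\right)}{p(1-p)} = 0$$
$$\Rightarrow (1-p)\sum_t x^t - p\left(Nn - \sum_t x^t\right) = 0 \;\Rightarrow\; \sum_t x^t - pNn = 0$$
$$\Rightarrow \hat{p} = \frac{\sum_t x^t}{Nn}$$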
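As a sanity check on the binomial derivation, the sketch below computes $\hat p = \sum_t x^t/(Nn)$ and compares it with a grid search over the full log-likelihood, including the constant $\log c$. The counts and $N = 10$ are illustrative assumptions, and the helper names are hypothetical. `math.comb` requires Python 3.8 or later.

```python
import math

def binomial_log_likelihood(p, xs, n_trials):
    """L(p | X) = log c + (sum_t x^t) log p + (N n - sum_t x^t) log(1 - p)."""
    log_c = sum(math.log(math.comb(n_trials, x)) for x in xs)  # c = prod_t C(N, x^t)
    s = sum(xs)
    total = n_trials * len(xs)   # N * n in the notation above
    return log_c + s * math.log(p) + (total - s) * math.log(1 - p)

def binomial_mle(xs, n_trials):
    """Closed-form MLE derived above: p_hat = sum_t x^t / (N n)."""
    return sum(xs) / (n_trials * len(xs))

xs = [3, 5, 4, 2, 6]   # illustrative: n = 5 observations, each a count out of N trials
N = 10
p_hat = binomial_mle(xs, N)   # 20 / 50 = 0.4

grid = [i / 1000 for i in range(1, 1000)]
p_grid = max(grid, key=lambda p: binomial_log_likelihood(p, xs, N))
```

The constant $\log c$ does not depend on $p$, so it shifts the curve without moving the maximum. This is why it drops out of $dL/dp$.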

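A.3 Gaussian (Normal) Distribution

Density function:
$$p(x) = \frac{1}{\sqrt{2\pi}\,\sigma}\exp\left[-\frac{(x-\mu)^2}{2\sigma^2}\right]$$
Parameters to be estimated: $\theta = (\mu, \sigma^2)^T$. Given a sample $X = \{x^t\}_{t=1}^N$, the log-likelihood is
$$L(\mu, \sigma \mid X) = \log\prod_{t=1}^N \frac{1}{\sqrt{2\pi}\,\sigma}\exp\left[-\frac{(x^t-\mu)^2}{2\sigma^2}\right] = -\frac{N}{2}\log(2\pi) - N\log\sigma - \frac{\sum_{t=1}^N (x^t-\mu)^2}{2\sigma^2}$$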

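The maximum likelihood estimate (MLE) of $(\mu, \sigma)$: let $\partial L/\partial\mu = 0$ and $\partial L/\partial\sigma = 0$.
$$\frac{\partial L}{\partial\mu} = \frac{\partial}{\partial\mu}\left[-\frac{N}{2}\log(2\pi) - N\log\sigma - \frac{\sum_{t=1}^N (x^t-\mu)^2}{2\sigma^2}\right] = -\frac{1}{2\sigma^2}\sum_{t=1}^N 2(x^t-\mu)(-1) = \frac{1}{\sigma^2}\sum_{t=1}^N (x^t-\mu) = 0$$
$$\Rightarrow \sum_{t=1}^N x^t - N\mu = 0 \;\Rightarrow\; m = \hat\mu = \frac{\sum_{t=1}^N x^t}{N}$$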

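For $\sigma$, using the same log-likelihood $L = -\frac{N}{2}\log(2\pi) - N\log\sigma - \frac{\sum_{t=1}^N (x^t-\mu)^2}{2\sigma^2}$:
$$\frac{\partial L}{\partial\sigma} = -\frac{N}{\sigma} - \frac{1}{2}\sum_{t=1}^N (x^t-\mu)^2\,(-2)\sigma^{-3} = -\frac{N}{\sigma} + \frac{\sum_{t=1}^N (x^t-\mu)^2}{\sigma^3} = 0$$
$$\Rightarrow -N\sigma^2 + \sum_{t=1}^N (x^t-\mu)^2 = 0 \;\Rightarrow\; s^2 = \hat\sigma^2 = \frac{\sum_{t=1}^N (x^t - m)^2}{N}$$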
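A minimal sketch of the Gaussian MLE on an illustrative sample (an assumption, not from the source) is shown below. Note that $s^2$ divides by $N$, not $N-1$. The result therefore matches the standard library's population variance `statistics.pvariance` rather than the unbiased `statistics.variance`. The helper names are hypothetical.

```python
import math
import statistics

def gaussian_mle(xs):
    """Return (m, s^2): the sample mean and the ML variance (divides by N)."""
    n = len(xs)
    m = sum(xs) / n
    s2 = sum((x - m) ** 2 for x in xs) / n
    return m, s2

def gaussian_log_likelihood(mu, sigma, xs):
    """L(mu, sigma | X) = -N/2 log(2 pi) - N log(sigma) - sum_t (x^t - mu)^2 / (2 sigma^2)."""
    n = len(xs)
    return (-n / 2 * math.log(2 * math.pi) - n * math.log(sigma)
            - sum((x - mu) ** 2 for x in xs) / (2 * sigma ** 2))

xs = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]   # illustrative sample
m, s2 = gaussian_mle(xs)                         # m = 5.0, s2 = 32 / 8 = 4.0
assert s2 == statistics.pvariance(xs)            # population variance, not N - 1
```

Perturbing either $\mu$ or $\sigma$ away from $(m, s)$ lowers $L$, which is consistent with both partial derivatives vanishing there.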

(B) Bayes Estimation

Treat $\theta$ as a random variable with prior density $p(\theta)$. Use Bayes' rule to combine the likelihood $p(X \mid \theta)$ with the prior to obtain the posterior:
$$p(\theta \mid X) = \frac{p(X \mid \theta)\,p(\theta)}{p(X)}$$
ML estimator: $\theta_{ML} = \arg\max_\theta p(X \mid \theta)$
MAP estimator: $\theta_{MAP} = \arg\max_\theta p(\theta \mid X)$
Bayes estimator: $\theta_{Bayes} = E[\theta \mid X] = \int \theta\, p(\theta \mid X)\, d\theta$, i.e., the expected value of $\theta$ under the posterior density.

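Example: Given a sample $X = \{x^t\}_{t=1}^N$, suppose $x^t \sim N(\theta, \sigma^2)$:
$$p(x^t) = \frac{1}{(2\pi)^{1/2}\sigma}\exp\left[-\frac{(x^t-\theta)^2}{2\sigma^2}\right]$$
Likelihood:
$$p(X \mid \theta) = \prod_{t=1}^N \frac{1}{(2\pi)^{1/2}\sigma}\exp\left[-\frac{(x^t-\theta)^2}{2\sigma^2}\right] = \frac{1}{(2\pi)^{N/2}\sigma^N}\exp\left[-\frac{\sum_t (x^t-\theta)^2}{2\sigma^2}\right]$$
Prior: $\theta \sim N(\mu_0, \sigma_0^2)$,
$$p(\theta) = \frac{1}{(2\pi)^{1/2}\sigma_0}\exp\left[-\frac{(\theta-\mu_0)^2}{2\sigma_0^2}\right]$$
Posterior: $p(\theta \mid X) = p(X \mid \theta)\,p(\theta)/p(X)$.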

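$$p(\theta \mid X) = \frac{1}{(2\pi)^{(N+1)/2}\sigma^N\sigma_0}\exp\left[-\frac{\sum_t (x^t-\theta)^2}{2\sigma^2} - \frac{(\theta-\mu_0)^2}{2\sigma_0^2}\right] \Big/ p(X)$$
$$\theta_{Bayes} = E[\theta \mid X] = \int \theta\, p(\theta \mid X)\, d\theta = \frac{N/\sigma^2}{N/\sigma^2 + 1/\sigma_0^2}\, m + \frac{1/\sigma_0^2}{N/\sigma^2 + 1/\sigma_0^2}\, \mu_0$$
where $m = \sum_t x^t / N$ is the sample mean. When $N$ is large, $\theta_{Bayes}$ is close to $m$. When $N$ is small or $\sigma_0^2$ is small, $\theta_{Bayes}$ is close to $\mu_0$.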
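The Bayes estimator above is a precision-weighted average of the sample mean $m$ and the prior mean $\mu_0$. This is a minimal sketch that assumes, as in the example, a known data variance $\sigma^2$ and a Gaussian prior $N(\mu_0, \sigma_0^2)$. The function name and sample values are illustrative.

```python
def bayes_gaussian_mean(xs, sigma2, mu0, sigma0_2):
    """Posterior mean E[theta | X] for x^t ~ N(theta, sigma2) and prior theta ~ N(mu0, sigma0_2)."""
    n = len(xs)
    m = sum(xs) / n                     # sample mean (the ML estimate of theta)
    prec_data = n / sigma2              # N / sigma^2
    prec_prior = 1 / sigma0_2           # 1 / sigma_0^2
    w_data = prec_data / (prec_data + prec_prior)
    w_prior = prec_prior / (prec_data + prec_prior)
    return w_data * m + w_prior * mu0

# N = 3, sigma^2 = 1, prior N(0, 1): weights 0.75 and 0.25, so 0.75 * 2 + 0.25 * 0 = 1.5
theta_small_n = bayes_gaussian_mean([1.0, 2.0, 3.0], 1.0, 0.0, 1.0)
# Large N: the estimate moves toward the sample mean m = 2.0
theta_large_n = bayes_gaussian_mean([2.0] * 10000, 1.0, 0.0, 1.0)
# Very confident prior (small sigma_0^2): the estimate stays near mu0 = 0
theta_tight_prior = bayes_gaussian_mean([1.0, 2.0, 3.0], 1.0, 0.0, 1e-6)
```

The three calls reproduce the limiting behavior described above.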

4.2.2 Regression Functions

Given a sample $X = \{x^t, r^t\}_{t=1}^N$, determine the parameters $\theta$ of the relationship $r = f(x)$, e.g.,
Linear function: $f(x) = w_1 x + w_0$, $\theta = (w_0, w_1)^T$
Quadratic function: $f(x) = w_2 x^2 + w_1 x + w_0$, $\theta = (w_0, w_1, w_2)^T$

Traditional method: RANSAC

Example: Linear fitting of a set of data points. Two points are sufficient to define a line.
1. Select two points at random from the data set. Guess that the line joining them is the correct model.
2. Test the model by counting how many data points are close enough to the guess, i.e., the consensus points.
3. If there is a significant number ($n$) of consensus points, re-compute the model using the consensus set.
4. Repeat steps 2 and 3 using smaller and larger $n$.
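The RANSAC steps above can be sketched as follows. This sketch makes two assumptions. First, it follows the common textbook variant, which repeats steps 1 to 3 for a fixed number of iterations and keeps the largest consensus set. Second, it refits by least squares on perpendicular distances. The parameters `n_iters`, `threshold`, and `min_consensus`, the demo data, and all function names are hypothetical.

```python
import math
import random

import numpy as np

def line_through(p, q):
    """Return (a, b, d) with a^2 + b^2 = 1 for the line ax + by = d through p and q."""
    (x1, y1), (x2, y2) = p, q
    a, b = y2 - y1, x1 - x2            # normal to the direction (x2 - x1, y2 - y1)
    norm = math.hypot(a, b)
    a, b = a / norm, b / norm
    return a, b, a * x1 + b * y1

def refit_line(points):
    """Least-squares refit: the normal (a, b) is the eigenvector of U^T U with the smallest eigenvalue."""
    pts = np.asarray(points, dtype=float)
    mean = pts.mean(axis=0)
    U = pts - mean
    _, eigvecs = np.linalg.eigh(U.T @ U)   # eigenvalues are returned in ascending order
    a, b = eigvecs[:, 0]
    return a, b, a * mean[0] + b * mean[1]

def ransac_line(points, n_iters=200, threshold=0.1, min_consensus=5, seed=0):
    rng = random.Random(seed)
    best = []
    for _ in range(n_iters):
        p, q = rng.sample(points, 2)       # step 1: pick two points at random
        if p == q:
            continue                       # duplicate points do not define a line
        a, b, d = line_through(p, q)
        consensus = [pt for pt in points   # step 2: points within `threshold` of the guess
                     if abs(a * pt[0] + b * pt[1] - d) <= threshold]
        if len(consensus) > len(best):
            best = consensus
    if len(best) < min_consensus:
        return None                        # no sufficiently large consensus set found
    return refit_line(best)                # step 3: recompute the model from the consensus set

# Illustrative data: ten points on y = 2x + 1 plus three outliers.
points = [(float(x), 2.0 * x + 1.0) for x in range(10)] + [(3.0, 20.0), (5.0, -10.0), (8.0, 0.0)]
model = ransac_line(points)
```

Because the refit uses only the consensus set, the three outliers have no influence on the final $(a, b, d)$.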

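Fit a line to a set of data points in 2-D space.

Line equation: $ax + by = d$. Dividing both sides by $\sqrt{a^2+b^2}$:
$$\frac{a}{\sqrt{a^2+b^2}}\,x + \frac{b}{\sqrt{a^2+b^2}}\,y = \frac{d}{\sqrt{a^2+b^2}}$$
Let $a \leftarrow \frac{a}{\sqrt{a^2+b^2}}$, $b \leftarrow \frac{b}{\sqrt{a^2+b^2}}$, $d \leftarrow \frac{d}{\sqrt{a^2+b^2}}$, so that $a^2 + b^2 = 1$ and the line is
$$ax + by = d \qquad \text{(A)}$$
The perpendicular distance from point $(x_i, y_i)$ to line (A) is $|ax_i + by_i - d|$.
Error measure:
$$E(a, b, d) = \sum_{i=1}^n (ax_i + by_i - d)^2$$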

Minimize $E$ w.r.t. $(a, b, d)$. Let
$$\frac{\partial E}{\partial d} = \sum_{i=1}^n -2(ax_i + by_i - d) = 0 \;\Rightarrow\; nd = \sum_{i=1}^n (ax_i + by_i)$$
$$\Rightarrow d = \frac{a}{n}\sum_{i=1}^n x_i + \frac{b}{n}\sum_{i=1}^n y_i = a\bar{x} + b\bar{y}$$
Substituting $d$ into $E$:
$$E = \sum_{i=1}^n \left(ax_i + by_i - (a\bar{x} + b\bar{y})\right)^2 = \sum_{i=1}^n \left[a(x_i - \bar{x}) + b(y_i - \bar{y})\right]^2 = \|U\mathbf{n}\|^2$$

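where
$$U = \begin{bmatrix} x_1 - \bar{x} & y_1 - \bar{y} \\ \vdots & \vdots \\ x_n - \bar{x} & y_n - \bar{y} \end{bmatrix}, \qquad \mathbf{n} = \begin{bmatrix} a \\ b \end{bmatrix}$$
The solution of $\min \|U\mathbf{n}\|^2$ w.r.t. $\mathbf{n}$ under the constraint $\|\mathbf{n}\|^2 = 1$ is the unit eigenvector of $U^TU$ with the minimum eigenvalue.

The least squares error solution of a homogeneous system $U\mathbf{x} = \mathbf{0}$ is the eigenvector of $U^TU$ corresponding to the smallest eigenvalue.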
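Numerically, the line fit reduces to one symmetric 2×2 eigenproblem. The sketch below assumes NumPy and some illustrative points near $y = x$. It builds $U$ from centered coordinates, takes the unit eigenvector of $U^TU$ for the smallest eigenvalue as $(a, b)$, and sets $d = a\bar{x} + b\bar{y}$. `numpy.linalg.eigh` returns eigenvalues in ascending order, so column 0 is the one needed. The function name is hypothetical.

```python
import numpy as np

def fit_line_eigen(xs, ys):
    """Fit ax + by = d with a^2 + b^2 = 1, minimizing the sum of squared perpendicular distances."""
    x = np.asarray(xs, dtype=float)
    y = np.asarray(ys, dtype=float)
    x_bar, y_bar = x.mean(), y.mean()
    U = np.column_stack([x - x_bar, y - y_bar])   # rows (x_i - x_bar, y_i - y_bar)
    eigvals, eigvecs = np.linalg.eigh(U.T @ U)    # symmetric 2x2, eigenvalues ascending
    a, b = eigvecs[:, 0]                          # unit eigenvector for the smallest eigenvalue
    d = a * x_bar + b * y_bar                     # from dE/dd = 0
    E = np.sum((a * x + b * y - d) ** 2)          # error measure E(a, b, d)
    return a, b, d, E, eigvals[0]

xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [0.1, 0.9, 2.1, 2.9, 4.1]   # illustrative points scattered around y = x
a, b, d, E, lam_min = fit_line_eigen(xs, ys)
```

The returned error `E` equals the smallest eigenvalue `lam_min`, because $E = \mathbf{n}^TU^TU\mathbf{n} = \lambda\,\mathbf{n}^T\mathbf{n} = \lambda$ for a unit eigenvector.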

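Find the least squares error solution by the method of Lagrange multipliers.
Error: $E = \|U\mathbf{x}\|^2 = (U\mathbf{x})^T(U\mathbf{x}) = \mathbf{x}^TU^TU\mathbf{x}$
Constraint: $\|\mathbf{x}\|^2 = \mathbf{x}^T\mathbf{x} = 1$
Minimize
$$F(\mathbf{x}) = \mathbf{x}^TU^TU\mathbf{x} - \lambda(\mathbf{x}^T\mathbf{x} - 1)$$
where $\lambda$ is a Lagrange multiplier. Let
$$\frac{dF}{d\mathbf{x}} = 2U^TU\mathbf{x} - 2\lambda\mathbf{x} = 0$$
We obtain $U^TU\mathbf{x} = \lambda\mathbf{x}$, i.e., the solution $\mathbf{x}$ is an eigenvector of $U^TU$ with eigenvalue $\lambda$.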

The associated error is $E = \mathbf{x}^TU^TU\mathbf{x} = \lambda\,\mathbf{x}^T\mathbf{x} = \lambda$. Hence the least squares error solution to $U\mathbf{x} = \mathbf{0}$ is the eigenvector of $U^TU$ corresponding to the smallest eigenvalue.

Parametric method: takes uncertainty into account by viewing $x$ and $r$ as random variables, with $r = f(x) + \epsilon$. Given $\epsilon \sim p_\epsilon(s)$, then $r \sim p_r(t) = p_\epsilon(s)\,|ds/dt|$. (Recall the theorem which states that if $X \sim p_X(x)$ and $Y = f(X)$, where $f$ is strictly increasing or decreasing, then $Y \sim p_Y(y) = p_X(x)\,|dx/dy|$.)

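The log-likelihood of $\theta$ can be calculated from the sample $X = \{x^t, r^t\}_{t=1}^N$. Find $\theta$ by $\max_\theta L(\theta \mid X)$.

Example: Assume $\epsilon \sim N(0, \sigma^2)$, so $p(r \mid x) \sim N\left(f(x \mid \theta), \sigma^2\right)$, where $f(x \mid \theta)$ is the estimator of $f(x)$ up to $\theta$. The log-likelihood of $\theta$ (dropping $\sum_t \log p(x^t)$, which does not depend on $\theta$) is
$$L(\theta \mid X) = \log\prod_{t=1}^N p(r^t \mid x^t), \qquad p(r^t \mid x^t) = N\left(r^t;\, f(x^t \mid \theta), \sigma^2\right)$$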

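$$L(\theta \mid X) = \log\prod_{t=1}^N \frac{1}{\sqrt{2\pi}\,\sigma}\exp\left[-\frac{\left(r^t - f(x^t \mid \theta)\right)^2}{2\sigma^2}\right] = -N\log\left(\sqrt{2\pi}\,\sigma\right) - \frac{1}{2\sigma^2}\sum_{t=1}^N \left(r^t - f(x^t \mid \theta)\right)^2 = -N\log\left(\sqrt{2\pi}\,\sigma\right) - \frac{1}{\sigma^2}E(\theta \mid X)$$
where $E(\theta \mid X) = \frac{1}{2}\sum_{t=1}^N \left(r^t - f(x^t \mid \theta)\right)^2$. Maximizing $L(\theta \mid X)$ is equivalent to minimizing $E(\theta \mid X)$.

Linear Regression:
$$f(x^t \mid w_1, w_0) = w_1 x^t + w_0$$
$$E(w_1, w_0 \mid X) = \frac{1}{2}\sum_{t=1}^N \left(r^t - f(x^t)\right)^2 = \frac{1}{2}\sum_{t=1}^N \left(r^t - (w_1 x^t + w_0)\right)^2$$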

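Let
$$\frac{\partial E(w_1, w_0 \mid X)}{\partial w_0} = 0 \;\Rightarrow\; \sum_t r^t = N w_0 + w_1\sum_t x^t$$
$$\frac{\partial E(w_1, w_0 \mid X)}{\partial w_1} = 0 \;\Rightarrow\; \sum_t r^t x^t = w_0\sum_t x^t + w_1\sum_t (x^t)^2$$
In vector-matrix form, $A\mathbf{w} = \mathbf{r}$, where
$$A = \begin{bmatrix} N & \sum_t x^t \\ \sum_t x^t & \sum_t (x^t)^2 \end{bmatrix}, \qquad \mathbf{w} = \begin{bmatrix} w_0 \\ w_1 \end{bmatrix}, \qquad \mathbf{r} = \begin{bmatrix} \sum_t r^t \\ \sum_t r^t x^t \end{bmatrix}$$
The solution: $\mathbf{w} = A^{-1}\mathbf{r}$.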
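The 2×2 system above can be assembled directly from the sums. This minimal sketch assumes NumPy and illustrative data on $r = 2x + 1$. It uses `numpy.linalg.solve` rather than forming $A^{-1}$ explicitly. The two are mathematically equivalent, but `solve` is the usual numerically safer choice. The function name is hypothetical.

```python
import numpy as np

def linear_regression(xs, rs):
    """Solve A w = r for w = (w0, w1) using the normal-equation sums derived above."""
    x = np.asarray(xs, dtype=float)
    r = np.asarray(rs, dtype=float)
    N = len(x)
    A = np.array([[N,       x.sum()],
                  [x.sum(), (x ** 2).sum()]])
    r_vec = np.array([r.sum(), (r * x).sum()])
    w0, w1 = np.linalg.solve(A, r_vec)
    return w0, w1

xs = [0.0, 1.0, 2.0, 3.0]
rs = [1.0, 3.0, 5.0, 7.0]          # illustrative data lying exactly on r = 2x + 1
w0, w1 = linear_regression(xs, rs)
```

As a cross-check, `np.polyfit(xs, rs, 1)` returns the same coefficients, highest degree first (`[w1, w0]`).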

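Polynomial Regression:
$$f(x^t \mid w_k, \ldots, w_2, w_1, w_0) = w_k (x^t)^k + \cdots + w_2 (x^t)^2 + w_1 x^t + w_0$$
$$E(\mathbf{w} \mid X) = \frac{1}{2}\sum_{t=1}^N \left(r^t - f(x^t \mid \mathbf{w})\right)^2 = \frac{1}{2}\sum_{t=1}^N \left(r^t - \left(w_k (x^t)^k + \cdots + w_1 x^t + w_0\right)\right)^2$$
Let $\partial E/\partial w_0 = 0, \ldots, \partial E/\partial w_k = 0$:
$$\sum_t r^t = w_0 N + w_1\sum_t x^t + \cdots + w_k\sum_t (x^t)^k$$
$$\vdots$$
$$\sum_t r^t (x^t)^k = w_0\sum_t (x^t)^k + w_1\sum_t (x^t)^{k+1} + \cdots + w_k\sum_t (x^t)^{2k}$$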

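In vector-matrix form, $A\mathbf{w} = \mathbf{r}$, where
$$A = \begin{bmatrix} N & \sum_t x^t & \cdots & \sum_t (x^t)^k \\ \sum_t x^t & \sum_t (x^t)^2 & \cdots & \sum_t (x^t)^{k+1} \\ \vdots & \vdots & \ddots & \vdots \\ \sum_t (x^t)^k & \sum_t (x^t)^{k+1} & \cdots & \sum_t (x^t)^{2k} \end{bmatrix}, \qquad \mathbf{w} = \begin{bmatrix} w_0 \\ w_1 \\ \vdots \\ w_k \end{bmatrix}, \qquad \mathbf{r} = \begin{bmatrix} \sum_t r^t \\ \sum_t r^t x^t \\ \vdots \\ \sum_t r^t (x^t)^k \end{bmatrix}$$
Find $\mathbf{w}$ that minimizes $e = \|A\mathbf{w} - \mathbf{r}\|^2$.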
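A minimal sketch of the polynomial case, assuming NumPy, is given below. It builds $A_{ij} = \sum_t (x^t)^{i+j}$ and $r_i = \sum_t r^t (x^t)^i$ exactly as above. It then minimizes $e = \|A\mathbf{w} - \mathbf{r}\|^2$ with `numpy.linalg.lstsq`. The data are illustrative, sampled from a known quadratic, and the function name is hypothetical.

```python
import numpy as np

def polynomial_regression(xs, rs, k):
    """Return w = [w0, w1, ..., wk] minimizing ||A w - r||^2 with A, r built from the sums above."""
    x = np.asarray(xs, dtype=float)
    r = np.asarray(rs, dtype=float)
    powers = range(k + 1)
    # A[i, j] = sum_t (x^t)^(i + j);  r_vec[i] = sum_t r^t (x^t)^i
    A = np.array([[np.sum(x ** (i + j)) for j in powers] for i in powers])
    r_vec = np.array([np.sum(r * x ** i) for i in powers])
    w, *_ = np.linalg.lstsq(A, r_vec, rcond=None)   # least-squares solution of A w = r_vec
    return w

xs = [-2.0, -1.0, 0.0, 1.0, 2.0, 3.0]
rs = [1 + 2 * x - 0.5 * x ** 2 for x in xs]   # illustrative data from r = 1 + 2x - 0.5x^2
w = polynomial_regression(xs, rs, k=2)
```

For comparison, `np.polyfit(xs, rs, 2)` fits the same model directly from the data, returning coefficients highest degree first.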

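Let
$$\frac{de}{d\mathbf{w}} = \frac{d}{d\mathbf{w}}(A\mathbf{w} - \mathbf{r})^T(A\mathbf{w} - \mathbf{r}) = 2A^T(A\mathbf{w} - \mathbf{r}) = 0$$
$$\Rightarrow A^TA\mathbf{w} = A^T\mathbf{r} \;\Rightarrow\; \mathbf{w} = (A^TA)^{-1}A^T\mathbf{r} = A^+\mathbf{r}$$
where $A^+ = (A^TA)^{-1}A^T$ is the pseudoinverse of $A$.

Tuning Model Complexity: Bias and Variance

Given $M$ samples $X_i = \{x_i^t, r_i^t\}_{t=1}^N$, $i = 1, \ldots, M$, to fit $f(x)$. Let $f_i(x)$, $i = 1, \ldots, M$, be the estimates, and let $\bar{f}(x) = \frac{1}{M}\sum_{i=1}^M f_i(x)$.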

$$\text{Bias}^2(f) = \frac{1}{N}\sum_t \left[\bar{f}(x^t) - f(x^t)\right]^2$$
$$\text{Variance}(f) = \frac{1}{NM}\sum_t\sum_i \left[f_i(x^t) - \bar{f}(x^t)\right]^2$$
Bias/Variance Dilemma: As we increase model complexity, bias decreases (a better fit to the data) and variance increases (the fit varies more with the data).
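The dilemma can be observed in a small simulation of the two formulas. This sketch makes several assumptions: a "true" $f(x) = \sin(2\pi x)$, fixed inputs $x^t$ shared by all $M$ samples, and Gaussian noise on $r^t$. These choices, the default parameter values, and the function name are all hypothetical. Each estimate $f_i$ is a polynomial of a chosen degree fitted with `numpy.polyfit`.

```python
import numpy as np

def true_f(x):
    return np.sin(2 * np.pi * x)   # hypothetical "true" function

def bias_variance(degree, M=100, N=20, noise=0.3, seed=0):
    """Estimate Bias^2 and Variance of degree-`degree` polynomial fits over M noisy samples."""
    rng = np.random.default_rng(seed)
    x = np.linspace(0.0, 1.0, N)                 # the x^t, shared by all M samples
    fits = np.empty((M, N))
    for i in range(M):
        r = true_f(x) + rng.normal(0.0, noise, N)            # sample X_i = {x^t, r_i^t}
        fits[i] = np.polyval(np.polyfit(x, r, degree), x)    # f_i(x^t)
    f_bar = fits.mean(axis=0)                              # \bar f(x^t)
    bias2 = np.mean((f_bar - true_f(x)) ** 2)              # (1/N) sum_t [...]^2
    variance = np.mean((fits - f_bar) ** 2)                # (1/(NM)) sum_t sum_i [...]^2
    return bias2, variance

bias_lin, var_lin = bias_variance(degree=1)
bias_quint, var_quint = bias_variance(degree=5)
```

The degree-1 model underfits the sine (larger bias, smaller variance). The degree-5 model tracks it closely but varies more from sample to sample.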

4.3 Parametric Classification

Classification based on parameters. From Bayes' rule, the posterior probability of class $C_i$ is
$$P(C_i \mid x) = \frac{p(x \mid C_i)\,P(C_i)}{p(x)} = \frac{p(x \mid C_i)\,P(C_i)}{\sum_{k=1}^K p(x \mid C_k)\,P(C_k)}$$
Discriminant function of $C_i$:
$$g_i(x) = p(x \mid C_i)\,P(C_i) \qquad \text{or} \qquad g_i(x) = \log p(x \mid C_i) + \log P(C_i)$$
Example: Assume
$$p(x \mid C_i) = \frac{1}{\sqrt{2\pi}\,\sigma_i}\exp\left[-\frac{(x-\mu_i)^2}{2\sigma_i^2}\right]$$

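Then
$$g_i(x) = -\frac{1}{2}\log(2\pi) - \log\sigma_i - \frac{(x-\mu_i)^2}{2\sigma_i^2} + \log P(C_i) \qquad \text{(A)}$$
Given a sample $X = \{x^t, \mathbf{r}^t\}_{t=1}^N$, where $\mathbf{r}^t = (r_1^t, \ldots, r_K^t)$, $r_i^t \in \{0, 1\}$, and
$$r_i^t = \begin{cases} 1 & \text{if } x^t \in C_i \\ 0 & \text{if } x^t \in C_j,\ j \neq i \end{cases}$$
Let $\hat{P}(C_i)$, $m_i$, $s_i^2$ be the estimates of $P(C_i)$, $\mu_i$, $\sigma_i^2$:
$$\hat{P}(C_i) = \frac{\sum_t r_i^t}{N}, \qquad m_i = \frac{\sum_t x^t r_i^t}{\sum_t r_i^t}, \qquad s_i^2 = \frac{\sum_t (x^t - m_i)^2 r_i^t}{\sum_t r_i^t}$$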

Substituting into (A):
$$g_i(x) = -\frac{1}{2}\log(2\pi) - \log s_i - \frac{(x-m_i)^2}{2s_i^2} + \log\hat{P}(C_i) \qquad \text{(B)}$$
If the priors are equal and the variances are equal, (B) can be reduced to $g_i(x) = -(x - m_i)^2$.
Choose $C_i$ if $|x - m_i| = \min_k |x - m_k|$, i.e., classification is conducted on the basis of the mean parameter $m$.
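Putting the estimates and discriminant (B) together gives a complete 1-D classifier. This is a minimal sketch under a few assumptions. Class labels are stored as integers `0..K-1` rather than one-hot $\mathbf{r}^t$ vectors, every class must have at least two distinct values so that $s_i^2 > 0$, and the data are illustrative. All function names are hypothetical.

```python
import math

def fit_classes(xs, labels, K):
    """Estimate (P_hat(C_i), m_i, s_i^2) for each class i = 0..K-1 from labeled 1-D data."""
    N = len(xs)
    params = []
    for i in range(K):
        xi = [x for x, c in zip(xs, labels) if c == i]   # the x^t with r_i^t = 1
        n_i = len(xi)
        m = sum(xi) / n_i
        s2 = sum((x - m) ** 2 for x in xi) / n_i
        params.append((n_i / N, m, s2))
    return params

def discriminant(x, prior, m, s2):
    """g_i(x) from (B); log s_i is written as 0.5 * log(s_i^2)."""
    return (-0.5 * math.log(2 * math.pi) - 0.5 * math.log(s2)
            - (x - m) ** 2 / (2 * s2) + math.log(prior))

def classify(x, params):
    """Choose the class whose discriminant g_i(x) is largest."""
    scores = [discriminant(x, *p) for p in params]
    return max(range(len(params)), key=lambda i: scores[i])

xs = [1.0, 2.0, 3.0, 7.0, 8.0, 9.0]   # illustrative: class 0 around 2, class 1 around 8
labels = [0, 0, 0, 1, 1, 1]
params = fit_classes(xs, labels, K=2)
```

Here the two priors (0.5 each) and the two variances (2/3 each) are equal. The decision therefore reduces to the nearest mean, with the boundary at the midpoint 5.0 between $m_0 = 2$ and $m_1 = 8$.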

Summary

Parametric methods are rooted primarily in probability theory. Two subjects are considered:
(a) Parameter estimation
(b) Parametric classification
Parameter estimation assumes a known function defined up to a set of parameters. Two kinds of functions are considered:
(i) Distribution functions
(ii) Regression functions

Distribution Functions

Two methods of parameter estimation:
(a) Maximum likelihood estimation (MLE)
(b) Bayesian estimation (BE)
MLE: Given a training sample, choose the value of a parameter that maximizes the likelihood of the parameter, i.e., the probability of obtaining the sample.

BE: Treat $\theta$ as a random variable with prior $p(\theta)$. Use Bayes' rule to combine the likelihood $p(X \mid \theta)$ with the prior to obtain the posterior $p(\theta \mid X) = p(X \mid \theta)\,p(\theta)/p(X)$.
Bayes estimator: $\theta_{Bayes} = E[\theta \mid X] = \int \theta\, p(\theta \mid X)\, d\theta$, i.e., the expected value of $\theta$ characterized by $p(\theta \mid X)$. The likelihood $p(X \mid \theta)$ is known from the sample, and the prior $p(\theta)$ is given.

Regression Functions

Given a sample $X = \{x^t, r^t\}_{t=1}^N$, determine the parameters $\theta$ of the relationship $r = f(x)$. The parametric method takes uncertainty into account by viewing $x$ and $r$ as random variables. Assume $r = f(x) + \epsilon$ with $\epsilon \sim p_\epsilon(s)$; then $r \sim p_r(t) = p_\epsilon(s)\,|ds/dt|$. The log-likelihood $L(\theta \mid X)$ can be calculated from the sample $X$. Find $\theta$ by $\max_\theta L(\theta \mid X)$.

Tuning Model Complexity

Given $M$ samples $X_i = \{x_i^t, r_i^t\}_{t=1}^N$, $i = 1, \ldots, M$, to fit $f(x)$. Let $f_i(x)$, $i = 1, \ldots, M$, be the estimates, and let $\bar{f}(x) = \frac{1}{M}\sum_{i=1}^M f_i(x)$.
$$\text{Bias}^2(f) = \frac{1}{N}\sum_t \left[\bar{f}(x^t) - f(x^t)\right]^2, \qquad \text{Variance}(f) = \frac{1}{NM}\sum_t\sum_i \left[f_i(x^t) - \bar{f}(x^t)\right]^2$$
Bias/Variance Dilemma: As we increase model complexity, bias decreases (a better fit to the data) and variance increases (the fit varies more with the data).