Bayesian Networks in Machine Learning

Bayesian networks are probabilistic graphical models that represent conditional dependencies between variables as a directed acyclic graph. They are used to model uncertain knowledge and to perform inference. This presentation covers conditional independence, the representation of dependencies, inference techniques, and learning Bayesian networks with the EM algorithm and structure search. It also touches briefly on collaborative filtering and support vector machines.


Presentation Transcript

Overview of Other ML Techniques

Geoff Hulten

Conditional Independence

Definition: X is conditionally independent of Y given Z if the probability distribution governing X is independent of the value of Y given the value of Z:

$(\forall i, j, k)\; P(X = x_i \mid Y = y_j, Z = z_k) = P(X = x_i \mid Z = z_k)$

or, more compactly, $P(X \mid Y, Z) = P(X \mid Z)$.

Example: Thunder is conditionally independent of Rain, given Lightning:

$P(\text{Thunder} \mid \text{Rain}, \text{Lightning}) = P(\text{Thunder} \mid \text{Lightning})$

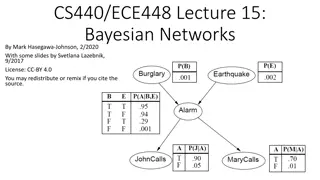

Bayesian Network

Represent conditional dependencies via a directed acyclic graph, where a variable is independent of its non-descendants given the value of its parents.

Full joint table over 3 binary variables X, Y, Z: eight parameters, one per assignment, from P(X=0,Y=0,Z=0) and P(X=1,Y=0,Z=0) through P(X=1,Y=1,Z=1).

Network with Z (Lightning) as the parent of X and Y (Rain and Thunder): five parameters.

P(Z=1)

P(X=1|Z=0) and P(X=1|Z=1), because $P(X \mid Y, Z) = P(X \mid Z)$

P(Y=1|Z=0) and P(Y=1|Z=1), because $P(Y \mid X, Z) = P(Y \mid Z)$

Example: Thunder is conditionally independent of Rain, given Lightning.

Decompose the joint distribution according to the structure:

$P(X, Y, Z) = P(Z)\, P(X \mid Z)\, P(Y \mid Z)$
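The decomposition can be checked numerically. The sketch below builds all eight joint entries from five parameters and confirms that X does not depend on Y once Z is known. The parameter values are made up for illustration; the slides give the structure only.

```python
from itertools import product

# Hypothetical parameter values (assumptions, not taken from the slides).
p_z1 = 0.2                        # P(Z=1)
p_x1_given_z = {0: 0.3, 1: 0.8}   # P(X=1 | Z=z)
p_y1_given_z = {0: 0.1, 1: 0.9}   # P(Y=1 | Z=z)


def bernoulli(p_one, value):
    """Probability of `value` for a binary variable with P(1) = p_one."""
    return p_one if value == 1 else 1.0 - p_one


def joint(x, y, z):
    """P(X=x, Y=y, Z=z) = P(Z=z) * P(X=x | Z=z) * P(Y=y | Z=z)."""
    return (bernoulli(p_z1, z)
            * bernoulli(p_x1_given_z[z], x)
            * bernoulli(p_y1_given_z[z], y))


# Five parameters determine all eight entries of the joint table.
table = {(x, y, z): joint(x, y, z) for x, y, z in product((0, 1), repeat=3)}
total = sum(table.values())


def p_x1_given_yz(y, z):
    """P(X=1 | Y=y, Z=z), computed from the full joint table."""
    return table[(1, y, z)] / (table[(0, y, z)] + table[(1, y, z)])


for z in (0, 1):
    for y in (0, 1):
        # The value changes with z but not with y.
        print(f"P(X=1 | Y={y}, Z={z}) = {p_x1_given_yz(y, z):.3f}")
```

For a fixed z the printed value is the same for both values of y, which is the conditional independence the graph encodes.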

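Inference in Bayesian Networks

Example network: Rain is the parent of Lightning, with P(Rain) = .3, P(Lightning|Rain=0) = .1, and P(Lightning|Rain=1) = .5.

Naïve Bayes:

$y = \arg\max_{y_j} P(y_j) \prod_i P(x_i \mid y_j)$

Super simple case: < Rain=0, Lightning=? > is answered directly from the table, P(Lightning|Rain=0) = .1.

Sorta simple case: < Rain=?, Lightning=1 > requires P(Rain | Lightning=1), which follows from Bayes' rule:

$P(\text{Rain} = 1 \mid \text{Lightning} = 1) = \dfrac{P(\text{Rain} = 1)\, P(\text{Lightning} = 1 \mid \text{Rain} = 1)}{\sum_{r} P(\text{Rain} = r)\, P(\text{Lightning} = 1 \mid \text{Rain} = r)}$

In general, use techniques like EM or Gibbs sampling.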
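The "sorta simple" case can be worked through with the numbers given on the slide (P(Rain) = .3, P(Lightning|Rain=0) = .1, P(Lightning|Rain=1) = .5). A minimal sketch, with variable names chosen here for illustration:

```python
# Parameters from the slide.
p_rain = 0.3
p_lightning_given_rain = {0: 0.1, 1: 0.5}   # P(Lightning=1 | Rain=r)


def prior(r):
    """P(Rain=r)."""
    return p_rain if r == 1 else 1.0 - p_rain


# Bayes' rule: P(Rain=1 | Lightning=1)
numerator = prior(1) * p_lightning_given_rain[1]                        # 0.3 * 0.5
evidence = sum(prior(r) * p_lightning_given_rain[r] for r in (0, 1))    # 0.15 + 0.07
posterior = numerator / evidence

print(round(posterior, 3))  # prints 0.682
```

Observing lightning raises the probability of rain from the prior of .3 to roughly .68.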

More Complex Inference Situation

Network variables: Rain, TourGroup, Lightning, Campfire, ForestFire, Thunder.

Query: P(Rain | ForestFire = 1, Thunder = 0) = ??

(Identifier names garbled in the transcript are rendered below as network, current, Update, and next.)

    def SimpleGibbs(network, X, F):
        current = X with unknown values randomly initialized

        for i in range(burnInSteps):
            current = Update(current)

        samples = []
        for i in range(samplesToGenerate):
            current = Update(current)
            if i % samplesToSkip == 0:
                samples.append(current)

        return F(samples)

    def Update(x):
        next = x
        for i in variables:
            if IsUnknown(x_i):
                next_i = sample from the distribution of variable i
                         given the values of all other variables in next
        return next
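A runnable sketch of the same procedure follows. Because the transcript does not give conditional probability tables for the six-variable network, this example uses a smaller chain, Rain → Lightning → Thunder. The Rain and Lightning numbers come from the earlier inference slide; the Thunder table, the function names, and the step counts are assumptions made for illustration.

```python
import random

P_RAIN = 0.3                      # P(Rain=1), from the inference slide
P_LIGHTNING = {0: 0.1, 1: 0.5}    # P(Lightning=1 | Rain), from the inference slide
P_THUNDER = {0: 0.05, 1: 0.9}     # P(Thunder=1 | Lightning), ASSUMED values


def bernoulli(p_one, value):
    return p_one if value == 1 else 1.0 - p_one


def joint(s):
    """Joint probability of a full assignment under the chain structure."""
    return (bernoulli(P_RAIN, s["Rain"])
            * bernoulli(P_LIGHTNING[s["Rain"]], s["Lightning"])
            * bernoulli(P_THUNDER[s["Lightning"]], s["Thunder"]))


def update(current, unknown, rng):
    """One Gibbs sweep: resample each unknown variable given all the others."""
    nxt = dict(current)
    for var in unknown:
        w1 = joint({**nxt, var: 1})
        w0 = joint({**nxt, var: 0})
        nxt[var] = 1 if rng.random() < w1 / (w0 + w1) else 0
    return nxt


def simple_gibbs(evidence, unknown, f, burn_in=1000, steps=50000, skip=5, seed=0):
    rng = random.Random(seed)
    current = dict(evidence)
    for var in unknown:                  # random initial values for unknowns
        current[var] = rng.randint(0, 1)
    for _ in range(burn_in):             # discard early, unconverged states
        current = update(current, unknown, rng)
    samples = []
    for i in range(steps):
        current = update(current, unknown, rng)
        if i % skip == 0:                # thin the chain to reduce correlation
            samples.append(current)
    return f(samples)


def rain_frequency(samples):
    return sum(s["Rain"] for s in samples) / len(samples)


# Estimate P(Rain=1 | Thunder=1) with Lightning unobserved.
estimate = simple_gibbs({"Thunder": 1}, ["Rain", "Lightning"], rain_frequency)
print(f"Gibbs estimate of P(Rain=1 | Thunder=1): {estimate:.3f}")
```

Under these assumed tables, exact enumeration gives P(Rain=1 | Thunder=1) = .1425 / .237 ≈ .601, so the sampled estimate should land close to that value.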

Learning Bayesian Networks

Structure known, all variables observed: MAP estimates for parameters (like Naïve Bayes).

Structure known, some variables hidden: EM algorithm.

Structure unknown, all variables observed: search for structure. Initial state: empty network or prior network. Operations: add arc, delete arc, reverse arc. Evaluation: LL(D|G) * prior(G).

Structure unknown, some variables hidden: abandon hope. (Just a joke: combine EM with structure search.)
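For the simplest case (structure known, all variables observed), parameter learning reduces to counting. The sketch below estimates the Rain → Lightning parameters from fully observed records. The choice of a Beta(2, 2) prior, the function names, and the data are all assumptions for illustration; the slide says only "MAP estimates".

```python
def map_estimate(successes, trials, alpha=2.0, beta=2.0):
    """MAP estimate of a Bernoulli parameter under a Beta(alpha, beta) prior.

    This is the mode of the Beta posterior, valid for alpha, beta >= 1.
    With the default Beta(2, 2) it equals (successes + 1) / (trials + 2).
    """
    return (successes + alpha - 1.0) / (trials + alpha + beta - 2.0)


# Hypothetical fully observed records of (rain, lightning).
data = [(0, 0), (0, 0), (0, 1), (0, 0), (1, 1), (1, 0), (1, 1), (0, 0)]


def estimate_lightning_cpt(records):
    """Estimate P(Lightning=1 | Rain=r) for r in {0, 1} by counting."""
    cpt = {}
    for rain in (0, 1):
        lightning_values = [l for r, l in records if r == rain]
        cpt[rain] = map_estimate(sum(lightning_values), len(lightning_values))
    return cpt


p_rain = map_estimate(sum(r for r, _ in data), len(data))
cpt = estimate_lightning_cpt(data)

print(f"P(Rain=1) = {p_rain:.3f}")
for rain, p in cpt.items():
    print(f"P(Lightning=1 | Rain={rain}) = {p:.3f}")
```

The prior acts like one extra observation of each outcome, which keeps estimates away from 0 and 1 when a parent configuration has few records.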

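Example of Collaborative Filtering

Will Alice like item 2?

Given: the weight between Alice and Bob is 1.38, the weight between Alice and a second user is −1.41 (the minus signs were lost in the transcript; this sign is the one consistent with the arithmetic below), and the normalizing constant is .357. Alice's mean rating is 3.75. Bob rated item 2 as 5 against a mean of 3.4; the second user rated it 2 against a mean of 2.5.

$p_{\text{Alice},2} = 3.75 + .357\,\big(1.38\,(5 - 3.4) - 1.41\,(2 - 2.5)\big)$

$p_{\text{Alice},2} = 3.75 + .357\,(2.22 + 0.70)$

$p_{\text{Alice},2} = 3.75 + 1.04$

$p_{\text{Alice},2} = 4.79$

Challenges: cold start, sparsity.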
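The arithmetic on this slide can be reproduced in a few lines. This is a sketch of a weighted-deviation prediction under stated assumptions: the second neighbour's weight is taken as −1.41 (the sign that makes the slide's intermediate value of +0.70 work out), and the function and parameter names are invented here.

```python
def predict_rating(active_mean, neighbours, kappa):
    """Predict a rating as the active user's mean plus a weighted sum of
    each neighbour's deviation from their own mean.

    neighbours: list of (weight, neighbour_rating, neighbour_mean) tuples.
    kappa: normalizing constant applied to the weighted sum.
    """
    weighted_deviation = sum(w * (rating - mean) for w, rating, mean in neighbours)
    return active_mean + kappa * weighted_deviation


neighbours = [
    (1.38, 5.0, 3.4),    # Bob: weight, rating of item 2, mean rating
    (-1.41, 2.0, 2.5),   # second user (weight sign is an assumption)
]

prediction = predict_rating(active_mean=3.75, neighbours=neighbours, kappa=0.357)
print(f"Predicted rating for Alice on item 2: {prediction:.2f}")
```

The value .357 is close to 1 / (1.38 + 1.41), so the constant appears to normalize by the sum of absolute weights, although the transcript does not say so explicitly.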

Support Vector Machine (SVM)

Linear separator:

$\hat{y} = \operatorname{sign}\Big(w_0 + \sum_{i=1}^{n} w_i x_i\Big)$

Maximum margin

Support vectors
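A linear separator of this form is easy to evaluate once the weights are known. The sketch below shows the prediction rule and the distance of a point from the separating hyperplane, which is the quantity a maximum-margin separator makes as large as possible for the closest points. The weights and points are hypothetical, and finding the weights (the actual SVM training step) is not shown.

```python
import math


def score(w0, w, x):
    """Signed score w0 + sum_i w_i * x_i."""
    return w0 + sum(wi * xi for wi, xi in zip(w, x))


def predict(w0, w, x):
    """Class label from the sign of the score (0 is treated as +1 here)."""
    return 1 if score(w0, w, x) >= 0 else -1


def distance_to_separator(w0, w, x):
    """Geometric distance from x to the hyperplane score(x) = 0."""
    return abs(score(w0, w, x)) / math.sqrt(sum(wi * wi for wi in w))


# Hypothetical weights: the separator is the line x1 + x2 = 1.
w0, w = -1.0, (1.0, 1.0)

for point in [(2.0, 2.0), (0.0, 0.0), (0.5, 0.6)]:
    print(point, predict(w0, w, point), round(distance_to_separator(w0, w, point), 3))
```

The training points that end up closest to the separator are the support vectors; they alone determine where the maximum-margin separator sits.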

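Support Vector Machines

For non-linear data, where the data is not linearly separable:

Add a new dimension in which the data will be more linearly separable, for example

$x_{\text{new}} = 1.0 - 2\big((.5 - x_1)^2 + (.5 - x_2)^2\big)$

(the operators in this formula were lost in the transcript; a square root over the sum is also a possible reading).

Various standard options (kernels) exist; try multiple methods.

Project back to the original space.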
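The effect of adding a dimension can be seen on a toy data set in which one class sits near the centre of the unit square and the other surrounds it. The sketch below assumes the new feature is 1.0 − 2((.5 − x1)² + (.5 − x2)²), which is one reading of the garbled formula on the slide; the points and the threshold are made up for illustration.

```python
def lift(x1, x2):
    """Extra feature (assumed form): large near (.5, .5), small far from it."""
    return 1.0 - 2.0 * ((0.5 - x1) ** 2 + (0.5 - x2) ** 2)


# Hypothetical data: inner points are surrounded by outer points, so no
# straight line in the (x1, x2) plane separates the two classes.
inner = [(0.5, 0.5), (0.4, 0.6), (0.6, 0.45)]
outer = [(0.0, 0.0), (1.0, 0.9), (0.1, 0.9), (0.95, 0.5)]

inner_lifted = [lift(*p) for p in inner]
outer_lifted = [lift(*p) for p in outer]

# In the lifted space a single threshold on the new coordinate, which is a
# linear separator there, splits the classes.
threshold = 0.8
separable = (all(v > threshold for v in inner_lifted)
             and all(v < threshold for v in outer_lifted))

print("inner:", [round(v, 3) for v in inner_lifted])
print("outer:", [round(v, 3) for v in outer_lifted])
print("separable by threshold:", separable)
```

Projected back to the original two dimensions, the threshold on the new feature becomes a circle around (.5, .5), which is how a linear method yields a non-linear decision boundary.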

Support Vector Machines (More Concepts)

Optimization: solve a constrained system of equations with quadratic programming (e.g. SMO).

Dealing with noise: soft vs. hard margins.

Summary

There are a lot of machine learning algorithms.