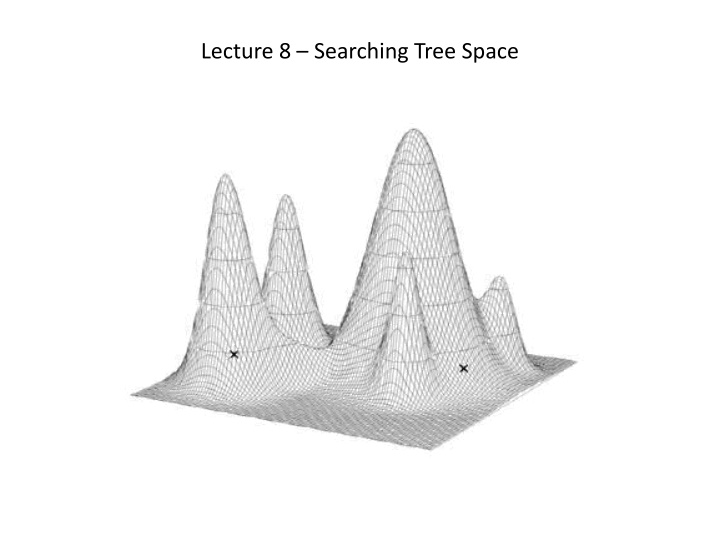

Advanced Techniques in Tree Space Searching for Phylogenetic Analysis

Explore advanced methods like Nearest-neighbor interchange (NNI), Subtree Pruning-Regrafting (SPR), and Tree Bisection-Reconnection (TBR) for searching tree space efficiently in phylogenetic analysis. Discover strategies for optimal tree selection, including greedy and less greedy approaches, and the use of random addition sequences and tree islands. Additionally, learn about transforming tree space with the Parsimony Ratchet method for improved search outcomes with real and perturbed data sets.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

The Search Tree 15 Steps Current upper bound

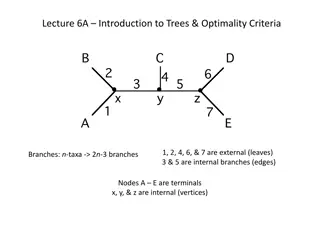

A. Nearest-neighbor interchange (NNI) There are 2(n 3) NNI rearrangements for any tree. 2 Figure from Swofford & Sullivan (2003. Chapter 7, pp. 160-206 in The Phylogenetic Handbook)

B. Subtree Pruning-Regrafting (SPR) 4(n 3)(n 2) SPR rearrangements Figure from Swofford & Sullivan (2003. Chapter 7, pp. 160-206 in The Phylogenetic Handbook)

C. Tree bisection-reconnection (TBR) All branches are bisected and reconnected in all possible ways. It s not possible to generalize how many TBR rearrangements could be made for a tree of a given size (as we could with NNI & SPR), but TBR swapping searches tree space more thoroughly than SPR or NNI.

How greedy should we be? 26 taxon data set and first, let s be very greedy . Ignore ties in building starting tree and in swapping. NNI, examine 42 trees SPR, examine 2072 trees TBR, examine 5816 trees Less greedy - save all equally optimal trees at each step. NNI, examine 140 trees SPR, examine 6212 trees TBR, examine 16,604 trees

Random Addition Sequence and Tree Islands So, in the above example, using the least greedy strategies and using starting trees generated by 100 random addition sequences, we ll look at 341,355 different trees. Island Size tree tree Score replicate hit --------------------------------------------------------------------------------- 1 2 1 2 278 1 99 2 1 - - 279 97 1 First Last First Times

May be better off spending less effort searching on one island and more effort searching for multiple islands Transforming Tree Space Parsimony Ratchet (Nixon. 1999. Cladistics. 15: 407 ) Alternate searches using real data and searches on perturbed data set. Get a starting tree by stepwise addition from the real data. Reweight a random set (20-25%) characters: this transforms tree space. Hill climb from the starting tree via greedy TBR with perturbed data. If a better tree is found, use that tree to start TBR using original data. This is iterated a couple hundred times.

Simulated Annealing Designed to search a large, complex, discrete search space. Laura (Salter) Kubatko was one of the first to apply it to phylogenies as a means of estimating ML trees (Salter and Perl, 2001. Syst. Biol. 50:7). MCMC approach to search tree space and permits down-hill moves. Steps: Generate an initial state (a starting tree). Initially, a random tree was used. Propose a stochastic change to the initial state (usually a minor change). This was initially derived via a random NNI. If the proposal improves the tree (has a better ML score), the move is accepted. Proposals (NNIs) that degrade the tree are accepted with a small probability proportional to how much worse the proposed tree is. Early on, the acceptance probability is high and decreases as the search runs.

RAxML & Alternating Criteria Stamatakis permits use of a modified simulated annealing in RAxML. First, he starts with a tree generated by stepwise addition using parsimony (randomized addition sequence), then applies MCMC. The SA approach can be used to alter topology via (lazy) SPR under ML, but only the branches involved in the swapping are reoptimized. Third, RAxML builds proposals to alter branch lengths and model parameters that are only accepted if they improve the likelihood (i.e., this aspect of the searches are entirely hill climbing). These optimizations are cursory and halted with a liberal stopping rule. This approach allows pretty thorough searches of tree space really quickly, which permits us to estimate ML trees for very large data sets (e.g., thousands of taxa).

Genetic Algorithms Paul Lewis (1998. Mol. Biol. Evol. 15:277) There are n individuals: tree with parameters and branch lengths. Ranked by their likelihood. Tree with highest fitness leaves k offspring in the next generation. Other trees leave offspring proportional to rank. All offspring are subject to branch length and model mutations. Some offspring ((n-1)/ ) are subject to random SPR mutations.

Genetic Algorithms Recombination searches tree space broadly. GARLi was written by Derek Zwickl and modifies Lewis GA. Topological mutations include NNI & SPR rearrangements and some local SPR. Starting trees are generated via stepwise addition with random addition sequences. This approach allows thorough searches of tree space for up to a couple thousand taxa.

Fast, Approximate Packages for Large Datasets RAxML: Starting tree via parsimony stepwise addition & many random addition sequences. Lazy random SPR rearrangements (evaluates under ML). Regrafts only close to prune point and BL optimization only for three branches closest to regraft point. Permits simulated annealing MCMC. Limited BL optimization (e.g., one round of Newton-Raphson). Model parameters optimized via hill-climbing. IQTree: Candidate set of (100) starting trees via parsimony stepwise addition with RAS. Rank under ML and select 20 best. Hill climb on each with local NNI, reoptimizing lengths of neighboring branches; retain best 5 trees. Select one at random for stochastic NNI; select internal branch at random for NNI swapping. Add perturbed tree to set of 5 if it s better than any of them and iterate. Repeat until 100 random perturbations without founding a better tree. PhyML: Starting tree via BIONJ (default), Parsimony (presumably stepwise addition, but it s not defined anywhere), a random tree, or a user-defined tree. Filtered SPR rearrangements: For all subtrees, all regrafts are scored under parsimony. Only good regrafts (under parsimony) are evaluated under ML and only lengths of close branches are re- estimated. Downslope movement can be approximated by evaluating (under ML) regrafts that are a few steps (can be tuned recommend PT=5) longer than the tree in memory. After filtered SPR searching is done, all model parameters and branch lengths are re-estimated.