Advanced Techniques in Contention Resolution Schemes

Explore the cutting-edge approaches in contention resolution schemes through submodular function maximization, multilinear relaxation, and stochastic probing. Understand constraints and relaxations involved, with a focus on balanced CRSs and approximation algorithms for maximizing weight functions. Discover the application of these techniques in various fields through demonstrations.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

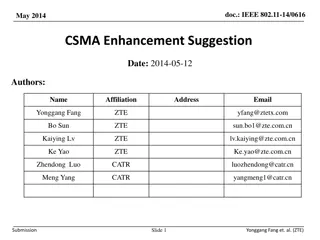

Contention Resolution Schemes: Offline and Online Moran Feldman The Open University of Israel Based On Submodular function maximization via the multilinear relaxation and contention resolution schemes. Chandra Chekuri, Jan Vondr k and Rico Zenklusen, SIAM J. Comput 2014. A stochastic probing problem with applications. Anupam Gupta and Viswanath Nagarajan, IPCO 2013. Online Contention Resolution Schemes. Moran Feldman, Ola Svensson and Rico Zenklusen, SODA 2016.

Talk Objectives Study a new tool Contention Resolution Schemes Main objective Promote the use of this tool in additional applications/fields. Demonstrate using a few applications. 2

Constraints Ground set N. A constraint is a down-closed subset F 2N. We say that a set is feasible (in F) if it belongs to F. The elements of N are mapped to the edges of a graph G = (V, N). A set S N is feasible if it is a legal matching in G. total weight of the elements in S is at most B. The intersection of more than two constraints can be defined similarly. Constraint Examples A matching constraint A knapsack constraint Each element is associated with a weight. A set S N is feasible if the A collection F of sets is down-closed if: (a) A F (b) B A Constraints Intersection The intersection of two constraints F1 and F2 is a new constraint F = F1 F2. A set is feasible in F if and only if it is feasible in both F1 and F2. B F 3

Relaxations 1S {0, 1}N is the characteristic vector of a set S N u 1 constraint F A polytope P is a relaxation of a The integral vectors of P are: {1S : S F} 0 u S ( ) u Reminder: = 1 S S Remarks A polytope is the relaxation of at most one constraint. A relaxation P of a constraint F contains the convex hull of F. F P 4

Contention Resolution Schemes A contention resolution scheme (CRS) x for a relaxation P of constraint F is a (random) function from 2N to 2N. (a) x(S) S (b) x(S) F For every set S N: This CRS ignores the parameter x. The function x might depend on the parameter x P. Example Matching Constraint Scan the elements (edges) of S in some arbitrary (fixed) order. For every edge uv, add it to the solution if no previous edge hits u or v. 5

Balanced CRSs Given a vector x [0, 1]N, R(x) is a random set containing every element e N with probability x(e), independently. A CRS x is (b, c)-balanced if: Given a vector x [0, 1]N, R(x) is a random set containing every element u N with probability x(u), independently. A CRS x is c-balanced if: ( ) ( ) x R e x Pr ( ( x R e x Pr b ( ) e c ) ) ( ) e c x b e N e x N Application - Approximation Algorithm Solve the LP: x w . t . s P x . t . s Application - Approximation Algorithm Solve the LP: x w Always outputs a set in F. Always outputs a set in F. max max bc-approximation for finding a set in c-approximation for finding a set in F maximizing the weight function w. F maximizing the weight function w. x P Output x(R(x)). Output x(R(b x)). 6

Analyzing the Matching CRS Relaxation max Conclusion The matching CRS is (b, (1 b)2)-balanced. For b = 1/3 we get 0.15-approximation. w x . t . s 1 x u V e ( ) u e 0 x e E e In R(b x) Fix an edge uv. No other edge in R(b x) hits u with probability 1 b. No other edge in R(b x) hits v with probability 1 b. The above two events are independent. 7

Monotone CRSs A CRS x is monotone if: e Pr ( ) S ( ) S Pr e e S S N 1 2 1 2 x x Observation The matching CRS is monotone. Why? The matching CRS is not randomized, so we need to prove: ( ) ( ) S e S e x x 1 S Edges of S2 must appear after uv. e S N 2 1 2 u v 8

Combing Monotone CRSs A (b, c1)-balanced CRS 1,x for polytope P1. A (b, c2)-balanced CRS 2,x for polytope P2. Consider the function x(S) = 1,x(S) 2,x(S). Observation x(S) is a CRS for P1 P2. Question How good is x(S)? Generalizes to the intersection of multiple constraints in the natural way. Lemma If 1,x(S) and 2,x(S) are monotone, then x(S) is a monotone (b, c1 c2)-balanced CRS. 9

Proof Idea Monotonicity e Pr ( ) S ( ) ( ) S ( ) ( ) S x = Pr Pr e S e S 1 , 1 1 , 2 1 x x x ( ) N = Pr Pr Pr e e e S , 1 2 , 2 2 2 x x e S S 1 2 (b, c1 c2)-Balance We need to show: e Pr By the FKG Decreasing function of S inequality Decreasing function of S | | ( ) S ( ) S ( ) S ( ) ( ) x c ( ) x e e Pr Pr , ~ e e b Pr c e c c e e N S R b 1 2 x S S 1 2 x x ( ) S ( ) S | Pr | e S e e S , 1 , 2 x x 10

Examples Some Known CRSs (b, e-2b)-balanced monotone CRS for matching polytopes (b, (1 e-b)/b)-balanced monotone CRS for matroid polytopes (1 , 1 )-balanced monotone CRS for knapsack polytopes There is a (b, e-4b)-balanced CRS for the intersection of two matching constraints. For b = 1/4, we get 0.092-approximation. Requires preprocessing. There is a (b, (e-2b e-3b)/b - )-balanced CRS for the Involving two different graph. intersection of a matching, a matroid and a knapsack constraints. For b = 0.4, we get 0.148-approximation. 11

Summary Quick (often non-optimal) approximation for involved constraints formed as the intersection of simple constraints. Quick to prove Often efficient The same ideas can be extended also to submodular objective functions. True also for the rest of the talk. 12

Stochastic Probing - Application Setting A ground set N. Every element e N has a weight we. Every element e N is active with probability pe. Known Algorithm (Policy) Can decide in each step which element to probe (if any). When an element e N is probed: The algorithm learns whether e is active. If e is active it must be added to the solution. Objective Construct a maximum weight solution. The probing must obey two constraints: inner and outer. 13

Stochastic Probing (cont.) Inner Constraint The solution must obey an inner constraint Fin 2N with relaxation Pin. Outer Constraint The set of probed elements must obey an outer constraint Fout 2N with relaxation Pout. Obeys the inner constraint Obeys the outer constraint Corollary The algorithm can probe an element e only if adding e does not violate neither Fin nor Fout. 14

Relaxation LP w max y w is the vector of element weights p is the vector of activation probabilities . t . s x P out y P in = y p x Intuition x(e) is the probability of probing element e. y(e) is the probability of element e to be both probed and active. Every legal algorithm induces a feasible solution. 15

Our Objective Given: A (b, c1)-balanced monotone CRS x,out for Pout. A (b, c2)-balanced monotone CRS x,in for Pin. An algorithm for the stochastic probing with a non-trivial approximation ratio. Spoiler: we aim for an approximation ratio of b c1 c2. Unfortunately, this is unknown. We need the CRS x,in to have an additional property. 16

Ordered CRS A CRS x is ordered if: x constructs x(S) by examining the elements in a (possibly random) order . For every examined element e the CRS x: Learns whether e S. If e S, decides whether to add e to x(S). Remark x does not know whether elements that have not been examined yet belong to S. Am I in S? Am I in S? Am I in S? Remark When calculating x(R(x)) the membership of an element e in R(x) can be decided only when x examines e. 17

Ordered CRS Examples Matching The matching CRS presented earlier is an ordered CRS. Some Additional Ordered CRSs (b, 1 b)-balanced ordered monotone CRS for matroids. (b, e-2b)-balanced ordered monotone CRS for matchings. (b, 1 1 / (2 2b))-balanced ordered monotone CRS for knapsack constraints (assuming b ). (b, 1 k b)-balanced ordered monotone CRS for k-systems. (b, 1 6b)-balanced ordered monotone CRS for unsplittable flow on trees (assuming no bottlenecks and b 60-1). 18

Algorithm Reminder x(e) The probability of probing e according to the relaxation. y(e) The probability of e to be both probed and active according to the relaxation. w y The value we need to compete with. Black Boxes x,out (b, cout)-balanced monotone CRS for Pout. x,in (b, cin)-balanced ordered monotone CRS for Pin. The algorithm obeys the outer constraint. First Step Choose a random set Rout R(b x), and let Q = x,out(Rout). Only (some) elements from Q will be probed. 19

Algorithm (cont.) First Step Choose a random set Rout R(x), and let Q = x,out(Rout). Only (some) elements from Q will be probed. Every element e belongs to Rout with probability b x(e) pe = b y(e), independently. Second Step We construct a set random set Rin R(b y) on the fly. For every element y,in(Rin) examines: Yes YesWill y,in except e if it is in Rin? Is e Rout? Is e Q? No No No Yes e is in Rin with probability pe Probe e. e is in Rin if it is active e is not in Rin 20

Algorithm (cont.) Solution x,out(Rout) y,in(Rin) The algorithm obeys the inner constraint. By the FKG inequality: Pr[e is selected] cin cout [b y(e)]. Yes YesWill y,in except e if it is in Rin? Is e Rout? Is e Q? No No No Yes e is in Rin with probability pe Probe e. e is in Rin if it is active e is not in Rin 21

Adversaries An ordered CRS examines elements in an order it specifies. What happens if the order is determined by an adversary? Offline Adversary Specifies the order at the beginning, knowing nothing beside x. Almighty Adversary Knows everything: R(x) and the (future) random bits of the algorithm. Online Adversary Chooses the order online. Has the same knowledge as the algorithm. 22

Greedy OCRSs Online CRS (OCRS) Examine the elements in an adversarial order. When examining an element e: Learns whether e belongs to R(b x). If e R(b x), decides whether to add e to the output. Greedy OCRS for a Relaxation P Let F be the constraint of P. Defined by a (possibly random) constraint Fx F. Let S be the output. When examining an element e R(b x): Add e to S if S {e} Fx. 23

Selectability We need a quality measure like balance for OCRSs. An OCRS Fx is (b, c)-selectable if: F e I x Pr ( ) , I R b x I F c e N x Intuition With probability c the set R(b x) has the property: If we add to the output a subset of R(b x) that is legal in Fx, then e can also be added to the output. If Fx is (b, c)-selectable, then every element e N is in the output with probability at least bc x(e). 24

Matching OCRS Matching CRS Scan the elements (edges) of S in some arbitrary (fixed) order. For every edge uv, add it to the solution if no previous edge hits u or v. current output hits u or v. Matching Greedy OCRS Scan the elements (edges) of S in the order specified by the adversary. For every edge uv, add it to the solution if no edge of the Depends on rejected edges. Not a greedy OCRS. Observations In this OCRS Fx = F. For an edge uv, with probability (1 b)2 no other edge in R(b x) hits u or v. (b, (1 b)2)-selectable. 25

More OCRSs Some Additional OCRSs (b, 1 b)-selectable greedy OCRS for matroids. (b, e-2b)-selectable greedy OCRS for matchings. (b, 1 1 / (2 2b))-selectable greedy OCRS for knapsack constraints (assuming b ). A (b, c1)-selectable greedy OCRS F1,x for polytope P1. A (b, c2)-selectable greedy OCRS F2,x for polytope P2. No need for monotonicity this time. Consider the constraint Fx = F1,x F2,x. Observation Fx is a greedy OCRS for P1 P2. Fx is (b, c1 c2)-selectable. 26

Stochastic Probing and OCRSs One can implement the algorithm for stochastic probing with greedy OCRSs Fx,out and Fx,in instead of the CRSs x,out and x,in. If Fx,out is (b, cout)-selectable and Fx,in is (b, cin)-selectable then we get a b cout cin-approximation. Advantage 1 Previously the algorithm examined elements in the order determined by x,in. Fx,in can work with an adversarial order. Allows adversarial ordering. The adversary points at elements, and the algorithm must decide immediately whether to probe the pointed element. 27

Advantage 2 - Deadlines Problem Extension Each element e N specifies a deadline de. If the algorithm probes e, this must be one of the first de probes. Idea Note that we assume again that the algorithm control the order. Consider the elements in a non-decreasing deadline order. Introduce a second outer constraint: d: Probe at most d elements of {e N | de d}. Laminar matroid constraint Chain of sets 28

Advantage 2 Deadlines (cont.) We need a specific order of examining elements The inner constraint is unchanged Two outer constraints: The original Laminar matroid OCRSs let us handle arbitrary orders (b, cin)-selectable OCRS for the inner constraint Corresponding OCRS: (b, cout)-selectable (b, 1 b)-selectable Approximation ratio for Stochastic Probing with Deadlines : b(1 b) cin cout Combined OCRS: (b, (1 b) cout)-selectable 29

More Applications Prophet Inequality Each element takes a random value from a known distribution. The elements are examined in some order. For every examined element: The algorithm learns its realized value. Must decide immediately whether to add it to the solution (subject to some constraint). an almighty adversary Even determined by (b, c)-selectable OCRS for a constraint bc-approximation for maximizing the total value 30

More Applications (cont.) Constrained Oblivious Posted Price Mechanisms Each buyer is willing to pay an amount chosen from a known distribution. The buyers arrive in an adversarial order. For every arriving buyer: The algorithm can skip the buyer or offer her a take-it- or-leave-it price, respecting a supply constraint. The buyer takes the offer if this is beneficial for her. Truthful The mechanism must, in fact, obey additional properties. (b, c)-selectable OCRS for a constraint bc-competitive mechanism compared to the optimal truthful mechanism 31

OCRS for the Graphical Matroid Graphical Matroid Defined by a graph G = (V, E). The elements of the matroid are the edges of E. A set S E of edges is feasible (independent) if it forms a forest (i.e., it contains no cycles). A similar proof works for general matroids. Relaxation The convex hull of the characteristic vectors of all forests. One can efficiently optimize linear functions over this polytope. 32

OCRS for the Graphical Matroid (cont.) This is the vector x from the relaxation polytope. Every edge e appears with probability b x(e). [b = here] 0.4 0.2 0.8 0.4 0.4 0.2 0.4 0.2 0.8 0.4 0.4 0.2 0.5 0.25 0.6 0.3 0.1 0.05 0.250.25 0.5 0.2 0.1 0.5 0.5 0.25 0.7 0.35 1 0.5 0.8 0.4 0.6 0.3 0.5 0.25 0.5 0.25 0.7 0.35 0.1 0.05 0.4 0.2 0.5 0.25 0.5 0.25 0.7 0.35 0.2 0.1 0.8 0.4 0.5 0.25 0.4 0.2 33

OCRS for the Graphical Matroid (cont.) We are interested in the probability of an edge to be spanned by other edges. Edges that are likely to be spanned have to be protected. 0.2 0.4 0.2 0.2 0.4 0.2 0.25 0.3 0.05 0.250.25 0.1 0.25 0.35 0.5 0.4 0.3 0.25 0.25 0.35 0.05 0.2 0.25 0.25 0.35 0.1 0.4 0.25 0.2 34

Technical Tool Theorem There exists a set S E of edges such that: ( ( R e span Pr ( ) ) \ ) \ b x S e b e N S Inside the contracted region it is OK to have an arbitrary forest. After contracting S no edge is likely to be spanned. 0.4 0.2 Like in the original problem 0.2 0.2 0.4 0.4 0.2 0.2 0.4 0.2 0.25 0.3 0.05 0.250.25 0.1 0.25 0.35 0.1 0.5 0.4 0.3 0.25 0.25 0.35 0.05 0.2 0.25 0.25 0.2 0.35 0.1 0.4 0.25 0.2 35

Complete OCRS Case 1 For an edge e S, accept the edge if it is not spanned in the contracted graph G(V, E) / S. The edge is accepted with probability at least 1 - b. Case 2 For an edge e S, feed it to the same OCRS on the induced graph G(V, S). We made advanecment since S N. A (b, 1 b)-selectable OCRS for the graphical matroid. 36