Understanding Encoder and Decoder in Combinational Logic Circuits

In the world of digital systems, encoders and decoders play a crucial role in converting incoming information into appropriate binary forms for processing and output. Encoders transform data into binary codes suitable for display, while decoders ensure that binary data is correctly interpreted and u

5 views • 18 slides

Knowledge Distillation for Streaming ASR Encoder with Non-streaming Layer

The research introduces a novel knowledge distillation (KD) method for transitioning from non-streaming to streaming ASR encoders by incorporating auxiliary non-streaming layers and a special KD loss function. This approach enhances feature extraction, improves robustness to frame misalignment, and

0 views • 34 slides

Laxmi Nagar: Your Shortcut to Digital Marketing Stardom

Living in Laxmi Nagar and dreaming of online marketing magic? Don't get tangled in the web of confusing terms like SEO and PPC! Laxmi Nagar is bursting with awesome digital marketing courses that ditch the jargon and make you a marketing whiz-kid in no time. These courses are like secret decoder ri

1 view • 6 slides

Understanding Decoders and Multiplexers in Computer Architecture

Decoders and multiplexers are essential components in computer architecture, converting binary information efficiently. Integrated circuits house digital gates, enabling the functioning of these circuits. A decoder's purpose is to generate binary combinations, with examples like the 3-to-8-line deco

0 views • 37 slides

Evolution of Neural Models: From RNN/LSTM to Transformers

Neural models have evolved from RNN/LSTM, designed for language processing tasks, to Transformers with enhanced context modeling. Transformers introduce attention, encoder and decoder architectures (e.g., BERT/GPT), and fine-tuning techniques for training. Pretrained models like BERT and GP

1 view • 11 slides

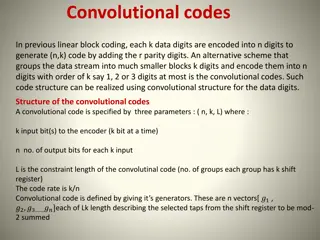

Understanding Convolutional Codes in Digital Communication

Convolutional codes provide an efficient alternative to linear block coding by grouping data into smaller blocks and encoding them into output bits. These codes are defined by parameters (n, k, L) and realized using a convolutional structure. Generators play a key role in determining the connections

0 views • 19 slides

ELECTRA: Pre-Training Text Encoders as Discriminators

The ELECTRA method efficiently learns an encoder that accurately classifies token replacements: instead of masking, it replaces some input tokens with samples from a generator. The key idea is to train a text encoder to distinguish input tokens from negative samples, resulting in bet

0 views • 12 slides

Decoding and NLG Examples in CSE 490U Section Week 10

This content delves into the concept of decoding in natural language generation (NLG) using RNN Encoder-Decoder models. It discusses decoding approaches such as greedy decoding, sampling from probability distributions, and beam search in RNNs. It also explores applications of decoding and machine tr

0 views • 28 slides

Comparing CLIP vs. LLaVA on Zero-Shot Classification by Misaki Matsuura

In this study by Misaki Matsuura, the effectiveness of CLIP (contrastive language-image pre-training) and LLaVA (large language-and-vision assistant) on zero-shot classification is explored. CLIP, with 63 million parameters, retrieves textual labels based on internet image-text pairs. On the other h

0 views • 6 slides

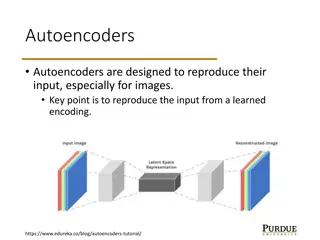

Understanding Variational Autoencoders (VAE) in Machine Learning

Autoencoders are neural networks designed to reproduce their input, with Variational Autoencoders (VAE) adding a probabilistic aspect to the encoding and decoding process. VAE makes use of encoder and decoder models that work together to learn probabilistic distributions for latent variables, enabli

6 views • 11 slides

Solar Energy Generator Design Rendering and Prototype Details

Solar Energy Generator design includes a prototype system mounted in a Pelican case with various peripherals. The system features a Laser Cut Delrin Panel covering all electronics with display, buttons, and a rotary encoder. External connections are facilitated through Souriau UTS circular connector

0 views • 7 slides

Generating Sense-specific Example Sentences with BART Approach

This work focuses on generating sense-specific example sentences using BART (Bidirectional and AutoRegressive Transformers) by conditioning on the target word and its contextual representation from another sentence with the desired sense. The approach involves two components: a contextual word encod

0 views • 19 slides

Optimized Colour Ordering for Grey to Colour Transformation

The research discusses the challenge of recovering a colour image from a grey-level image efficiently. It presents a solution involving parametric curve optimization in the encoder and decoder sides, minimizing errors and encapsulating colour data. The Parametric Curve maps grayscale values to colou

0 views • 19 slides

Line Encoding Lab 6 - 2019/1440: Polar NRZ-L, RZ, and MAN Techniques with Decoder

Explore the Line Encoding Lab 6 from 2019/1440, featuring Polar NRZ-L, RZ, and MAN techniques with decoders. Dive into Simulink settings and output results for each encoding method. Discover how to modify binary number generators and pulse generators to enhance encoding. Conclude with a thank you me

0 views • 14 slides

ZEN: Pre-training Chinese Text Encoder Enhanced by N-gram Representations

The ZEN model improves pre-training procedures by incorporating n-gram representations, addressing limitations of existing methods like BERT and ERNIE. By leveraging n-grams, ZEN enhances encoder training and generalization capabilities, demonstrating effectiveness across various NLP tasks and datas

0 views • 17 slides

Training wav2vec on Multiple Languages From Scratch

Large amounts of parallel speech-text data are not available for most languages, leading to the development of wav2vec for ASR systems. The training process involves self-supervised pretraining and low-resource finetuning. The model architecture includes a multi-layer convolutional feature encoder, qua

0 views • 10 slides

Investigation of LDPC Improvements in IEEE 802.11-24

This document delves into the investigation of LDPC (Low-Density Parity-Check) improvements within IEEE 802.11-24 standards. It discusses the history of LDPC codes in 802.11 networks, current FEC (Forward Error Correction) details, a new proposal for LDPC codes, recent LDPC code developments in vari

0 views • 24 slides

Transformer Neural Networks for Sequence-to-Sequence Translation

In the domain of neural networks, the Transformer architecture has revolutionized sequence-to-sequence translation tasks. This involves attention mechanisms, multi-head attention, transformer encoder layers, and positional embeddings to enhance the translation process. Additionally, Encoder-Decoder

0 views • 24 slides

OWSM-CTC: An Open Encoder-Only Speech Foundation Model

Explore OWSM-CTC, an innovative encoder-only model for diverse language speech-to-text tasks inspired by Whisper and OWSM. Learn about its non-autoregressive approach and implications for multilingual ASR, ST, and LID.

0 views • 39 slides

Advances in Completely Automatic Decoder Synthesis

This presentation by Y.C. Chou and H.S. Liu on "Towards Completely Automatic Decoder Synthesis" covers topics such as motivation, preliminary concepts, main algorithms, and experimental results in the field of communication and cryptography systems. The content delves into notation, SAT solvers, Cra

0 views • 35 slides

Memory Address Decoding in 8085 Microprocessor

The 8085 microprocessor with 16 address lines can access 2^16 (65,536) locations in physical memory. A 74LS138 address decoder generates chip select signals for memory block selection. The interfacing involves decoding address lines to enable memory access, with distinctions between RAM and ROM

0 views • 18 slides

Automatic Decoder Synthesis: Advancements in Communication and Cryptography

Cutting-edge progress in automatic decoder synthesis for communication and cryptography systems, presented in a comprehensive study covering motivation, prior work, preliminary notations, SAT solver algorithms, and experimental findings.

0 views • 32 slides

BIKE Cryptosystem: Failure Analysis and Bit-Flipping Decoder

The BIKE cryptosystem is a code-based KEM in the NIST PQC standardization process, utilizing the Niederreiter variant of the McEliece Construction with a QC-MDPC code. It provides IND-CPA security, and efforts are made to further confirm or disconfirm its estimates for IND-CCA security requir

0 views • 14 slides

High-Speed Hit Decoder Development for RD53B Chip

Development of a high-speed hit decoder for the RD53B chip by Donavan Erickson from MSEE ACME Lab, focusing on data streams, hitmap encoding, ROM splitting, decode engine building, and more. The process involves encoding methods, ROM setup with borrowed software look-up tables, and buffer systems fo

0 views • 33 slides

Efficient Video Encoder on CPU+FPGA Platform

Explore the integration of CPU and FPGA for a highly efficient and flexible video encoder. Learn about the motivation, industry trends, discussions, Xilinx Zynq architecture, design process, H.264 baseline profile, and more to achieve high throughput, low power consumption, and easy upgrading.

0 views • 27 slides

Neural Image Caption Generation: Show and Tell with NIC Model Architecture

This presentation delves into the intricacies of Neural Image Captioning, focusing on a model known as Neural Image Caption (NIC). The NIC's primary goal is to automatically generate descriptive English sentences for images. Leveraging the Encoder-Decoder structure, the NIC uses a deep CNN as the en

0 views • 13 slides

Insights into the Rowhammering.BIKE Cryptosystem and Decoding Strategies

Explore the Rowhammering.BIKE cryptosystem, its parameters, and the black-grey flip decoder. Learn about the bitflipping algorithm, prior analysis of DFR in QC-MDPC decoders, and strategies for key recovery and decoding in this innovative system. Discover how understanding the error patterns related

0 views • 7 slides

Overview of BIKE Key Exchange Protocol by Ray Perlner

The BIKE (Bit-Flipping Key Exchange) protocol, presented by Ray Perlner, offers variants based on MDPC codes like McEliece and Niederreiter with a focus on quasi-cyclic structures. These non-algebraic codes show promise for key reduction, targeting IND-CPA security. The protocol features competitive

1 view • 18 slides

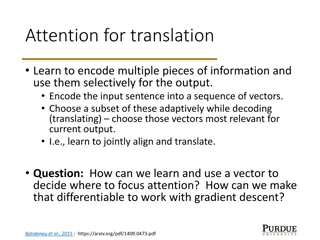

Understanding Attention Mechanism in Neural Machine Translation

In neural machine translation, attention mechanisms allow selective encoding of information and adaptive decoding for accurate output generation. By learning to align and translate, attention models encode input sequences into vectors, focusing on relevant parts during decoding. Utilizing soft atten

0 views • 17 slides

Understanding General Register Organization in Computer Architecture

In computer architecture, a common bus system is used to efficiently connect a large number of registers in the CPU. This enables communication between registers for data transfers and various microoperations. The setup includes multiple registers connected through a common bus, multiplexers for for

0 views • 40 slides