Managing File System Consistency in Operating Systems

File systems in operating systems face challenges with crash consistency, especially during write operations. Issues arise when modified data in cache is lost due to system crashes or when data structure invariants are violated. Solutions like write-through and write-back caches help mitigate these

6 views • 14 slides

In-Depth Look at Pentium Processor Features

Explore the advanced features of the Pentium processor, including separate instruction and data caches, dual integer pipelines, superscalar execution, support for multitasking, and more. Learn about its 32-bit architecture, power management capabilities, internal error detection features, and the ef

0 views • 24 slides

Constructive Computer Architecture Realistic Memories and Caches

Explore the realm of constructive computer architecture, realistic memories, and cache systems as presented by the Computer Science & Artificial Intelligence Lab at Massachusetts Institute of Technology. Delve into topics like 2-stage pipeline, magic memory models, and memory system views to gain in

0 views • 20 slides

Parallelism and Vector Instructions in CMPT 295

Delve into the world of parallelism and vector instructions in CMPT 295 as you explore fixed-length vector intrinsics, RISC-V concepts, computer programming fundamentals, processor execution processes, scalar and vector loops, and more. Discover the intricacies of memory, data arrays, structs, integ

1 view • 45 slides

Caching Techniques in Web Systems

Dive into the world of web caching with concepts like consistent hashing, Bloom filters, and shared caches for enhanced performance and efficiency. Discover the challenges faced in managing large-scale caches and learn about innovative solutions and ideas in the field of web systems.

0 views • 21 slides

Enhancing Multi-Node Systems with Coherent DRAM Caches

Explores the integration of coherent DRAM caches in multi-node systems to improve memory performance, and discusses the benefits, challenges, and potential performance gains compared to existing memory-side cache solutions.

0 views • 28 slides

Enhancing Memory Cache Efficiency with DRAM Compression Techniques

Explore the challenges faced by Moore's Law in relation to bandwidth limitations and the innovative solutions such as 3D-DRAM caches and compressed memory systems. Discover how compressing DRAM caches can improve bandwidth and capacity, leading to enhanced performance in memory-intensive application

0 views • 48 slides

Memory Consistency Models and Sequential Consistency in Computer Architecture

Memory consistency models play a crucial role in ensuring proper synchronization and ordering of memory references in computer systems. Sequential consistency, introduced by Lamport in 1979, treats processors as interleaved processes on a shared CPU and requires all references to fit into a global o

1 view • 64 slides

Summer Fellows 2024: Dive into OSDF Caches and IP Geolocation Challenges

Explore the Summer Fellows 2024 program focusing on topics like Glideins, IP geolocation challenges, OSDF Caches, and the use of AI in OSPool Failure Classification. Participants delve into learning the GlideinWMS system and grappling with issues related to network latency, hops, and machine learnin

0 views • 64 slides

Architecting DRAM Caches for Low Latency and High Bandwidth

Addressing fundamental latency trade-offs in designing DRAM caches involves considerations such as memory stacking for improved latency and bandwidth, organizing large caches at cache-line granularity to minimize wasted space, and optimizing cache designs to reduce access latency. Challenges include

0 views • 32 slides

Cache Memory Organization in Computer Systems

Exploring concepts such as set-associative cache, direct-mapped cache, fully-associative cache, and replacement policies in cache memory design. Delve into topics like generality of set-associative caches, block mapping in different cache architectures, hit rates, conflicts, and eviction strategies.

0 views • 35 slides

CSE351 Spring 2019 - Caches and Memory Concepts

Exploring the intricacies of caching in CSE351 Spring 2019, delving into memory management, data structures, assembly language, Java comparisons, and mnemonic aids. The course covers practical applications and theoretical underpinnings, including memory allocation, virtual memory, and processor cach

0 views • 31 slides

CSE351 Autumn 2017: Caches Instructor and TA Information

The content provides information about the CSE351 course on caches for Autumn 2017, including details about the instructor, teaching assistants, administrative updates, midterm policies, course roadmap, units and prefixes, mnemonic techniques, and execution time analysis. It also includes important

0 views • 31 slides

Caches and the Memory Hierarchy in Computer Systems

Delve into the intricacies of memory hierarchy and caches in computer systems, exploring concepts like cache organization, implementation choices, hardware optimizations, and software-managed caches. Discover the significance of memory distance from the CPU, the impact on hardware/software interface

0 views • 84 slides

Enhancing Accelerator-Host Coherence with Crossing Guard

Explore the need for coherence interfaces in integrating accelerators with host protocols, addressing complexities and safety concerns, emphasizing customizable caches and standardized interfaces for optimal performance and system reliability.

0 views • 55 slides

Efficient Handling of Cache Miss Rate in FPGAs

This study focuses on improving cache miss rate efficiency in FPGAs through the implementation of non-blocking caches and efficient Miss Status Holding Registers (MSHRs). By tracking more outstanding misses and utilizing memory-level parallelism, this approach proves to be more cost-effective than s

0 views • 44 slides

Efficient Cache Management using The Dirty-Block Index

The Dirty-Block Index (DBI) is a solution to address inefficiencies in caches by removing dirty bits from cache tag stores, improving query response efficiency, and enabling various optimizations like DRAM-aware writeback. Its implementation leads to significant performance gains and cache area redu

0 views • 44 slides

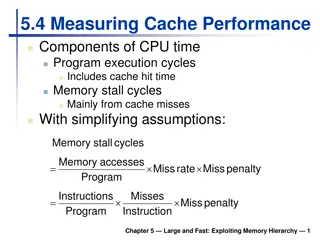

Cache Performance Components and Memory Hierarchy

Exploring cache performance components, such as hit time and memory stall cycles, is crucial for evaluating system performance. By analyzing factors like miss rates and penalties, one can optimize CPU efficiency and reduce memory stalls. Associative caches offer flexible options for organizing data

0 views • 22 slides

Proxies, Caches, and Scalable Software Architectures

Statelessness, proxies, and caches play key roles in creating scalable software architectures. The lecture explains the concepts of proxies and caches, highlighting their functions in enhancing performance and scalability. Proxies act as intermediaries for requests, while caches store frequently acc

0 views • 13 slides

Introduction to CernVM File System (CVMFS)

CernVM File System (CVMFS) is a scalable, reliable, and low-maintenance software distribution service used by various computing communities. It was developed to support High Energy Physics (HEP) collaborations and has since been adopted by other fields like Medical, Space, and Earth Sciences. Using

0 views • 16 slides

Web Caching: An Overview

Web caching, implemented through various types of caches like browser cache, proxy cache, and gateway cache, plays a crucial role in improving content availability, reducing network congestion, and enhancing user experience by saving bandwidth and decreasing latency. It addresses the challenges pose

0 views • 27 slides

Trace-Driven Cache Simulation in Advanced Computer Architecture

Trace-driven simulation is a key method for assessing memory hierarchy performance, particularly focusing on hits and misses. Dinero IV is a cache simulator used for memory reference traces without timing simulation capabilities. The tool aids in evaluating cache hit and miss results but does not ha

0 views • 13 slides

Cache Coherence in Computer Architecture

Exploring the concept of cache coherence in computer architecture, this content delves into the challenges and solutions associated with maintaining consistency among multiple caches in modern systems. It discusses the importance of coherence in shared memory systems and the use of cache-coherent me

0 views • 24 slides

Memory and Caches in CSE 351 Spring 2020: Insights and Roadmap

Exploring the world of memory and caches in CSE 351 Spring 2020 led by Instructor Ruth Anderson and her dedicated team of Teaching Assistants. Discover the essential topics covered such as data integers, x86 assembly, processes, and more. Dive into the nuances of memory allocation, Java implementati

0 views • 30 slides

Enhancing Key-Value Store Efficiency with SwitchKV

Explore how SwitchKV optimizes key-value store performance through content-aware routing, minimizing latency, and enabling efficient load balancing. By leveraging SDN and switch hardware, SwitchKV offers a fast, cost-effective solution for dynamic workloads in modern cloud services, overcoming chall

0 views • 16 slides

Advanced Computer Architecture in Parallel Computing

Covering topics like Instruction-Set Architecture (ISA), 5-stage pipeline, and Pipelined instructions, this course delves into the intricacies of advanced computer architecture, with a focus on achieving high performance by optimizing data flow to execution units. The course provides insights into t

0 views • 12 slides

Web Caching, Proxies, and CDNs in Web Architecture

This comprehensive guide delves into the concepts of web caching, proxies, and CDNs, explaining their importance in web architecture. It covers topics such as caching mechanisms, browser cache management, what can be cached, and controlling caches with HTTP headers. The provided images visually illu

1 view • 42 slides

Intelligent DRAM Cache Strategies for Bandwidth Optimization

Efficiently managing DRAM caches is crucial due to increasing memory demands and bandwidth limitations. Strategies like using DRAM as a cache, architectural considerations for large DRAM caches, and understanding replacement policies are explored in this study to enhance memory bandwidth and capacit

0 views • 23 slides

Revolutionizing Data Storage: The BW-Tree Architecture for Modern Hardware Platforms

The BW-Tree presents a novel latch-free approach for high-performance data management on modern hardware. By leveraging processor caches and implementing log-structured storage, it offers efficient data organization and management. The architecture ensures thread efficiency and cache preservation, t

0 views • 37 slides

Memory Hierarchy in Parallel Computer Architecture

This content delves into the intricacies of memory hierarchy, caches, and the management of virtual versus physical memory in parallel computer architecture. It discusses topics such as cache compression, the programmer's view of memory, virtual versus physical memory, and the ideal pipeline for ins

2 views • 86 slides

The Contents of a Vnode in Operating Systems

Contents of a vnode in operating systems include flags for identifying attributes, reference counts, pointers to filesystem structures, information for file read-ahead, and more. Vnodes contain various elements such as mutex for protecting flags, lock-manager, caches for names, vnode operations, and

0 views • 22 slides

Content Distribution Networks and P2P File Sharing in Advanced Computer Systems

Explores the challenges of single-server setups and skewed web traffic popularity, web caching techniques such as forward and reverse proxies, and the role of proxy caches in optimizing content delivery and reducing server costs.

0 views • 27 slides

Resolverless DNS and its Implications

Resolverless DNS, as discussed by Geoff Huston, delves into the architecture and issues of the DNS system. It explores the impact of resolver caches on speed and predictability of resolution, the potential for filtering DNS content, and the significance of metadata collection. The evolution of DNS s

0 views • 30 slides

Maximizing Cache Hit Rate with LHD: An Overview

This presentation discusses the concept of Least Hit Density (LHD) for improving cache hit rates, focusing on the challenges and benefits of key-value caches in maximizing performance through efficient eviction policies like LRU. It emphasizes the importance of cache hit rates in enhancing web appli

0 views • 40 slides

Cooperative Cache Scrubbing for Efficient Memory Management in Multicore Systems

Cooperative Cache Scrubbing optimizes memory management in multicore systems by efficiently handling short-lived application objects and reducing unnecessary data writes to memory. By communicating semantic information to hardware caches, dead lines are scrubbed, dirty bits unset, and unnecessary fe

0 views • 40 slides

Cross-Core Prime+Probe Attacks on Non-inclusive Caches: Modern Challenges and Solutions

Modern systems are transitioning to non-inclusive cache hierarchies, presenting new challenges for cache attacks. This study delves into the complexities of Prime+Probe attacks on non-inclusive caches, highlighting the difficulties in eviction set construction and the need for innovative strategies

0 views • 10 slides

Online Algorithms and Cache Optimization

This comprehensive overview delves into the efficient management of pages and caches in computer systems. Explore the k-Server Problem, Linear Programming, and the intricacies of cache eviction strategies. Discover the competitive algorithms and the role of randomization in enhancing performance.

0 views • 35 slides

Carnegie Mellon Cache Memories and Computer Organization

This material from Carnegie Mellon covers cache memory organization, the performance impact of caches, the memory hierarchy, cache concepts, and cache memory management in computer systems. Learn about the structure of cache memory, memory hierarchy levels, and general cache organi

0 views • 45 slides

Architectural Analysis and Modeling of Deeply-scaled FinFET Devices in Caches

Memory design in deeply-scaled CMOS technologies faces challenges due to short channel effects and device mismatches. This study explores FinFET devices for enhancing cache stability through robust SRAM cell designs and modeling using the FinCACTI tool.

0 views • 23 slides