Preferred Capabilities of Record Linkage Systems for Research

This presentation by Krista Park from the US Census Bureau explores the preferred capabilities of record linkage systems in facilitating research. It includes disclaimers, details about the researcher stakeholder team, coordination, leadership oversight, and the outline of the linkage process. The focus is on the intersection of research, data science, and optimization for effective record linkage.

Presentation Transcript

Preferred Capabilities of Record Linkage Systems for Facilitating Research Record Linkage

Krista Park, US Census Bureau, Center for Optimization and Data Science

Presentation for FEDCASIC 2023

Disclaimers

This presentation is released to inform interested parties of research and research requirements and to encourage discussion of a work in progress. Any views expressed are those of the authors and not those of the U.S. Census Bureau. Further, although all of the contributing authors for the report upon which this presentation is based are credited for their large efforts on the project, the contents of this presentation are solely those of the presenter. Not all other contributors may have had the opportunity to do a deep review of this presentation. This presentation includes no content derived from restricted data sets.

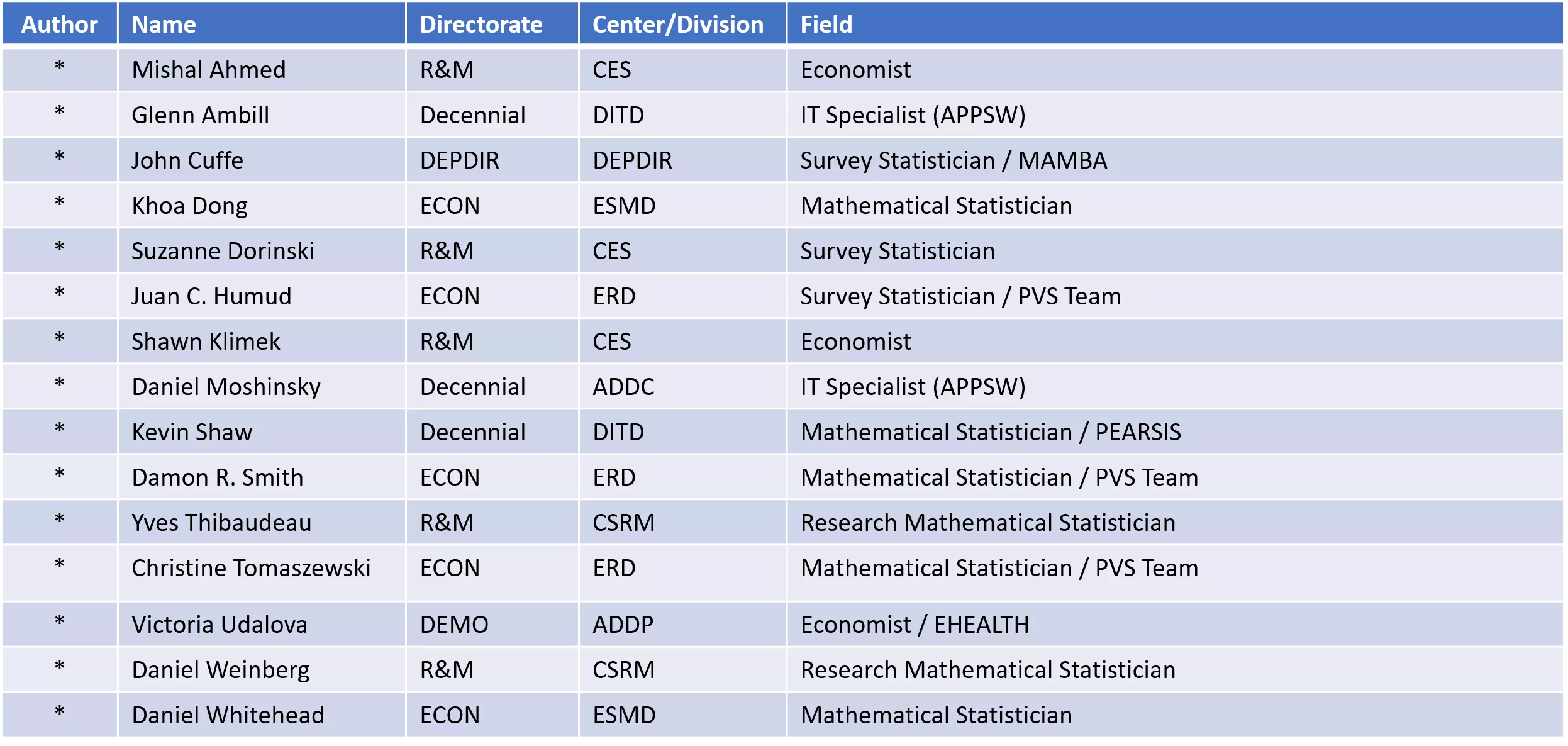

Researcher Stakeholder Team / Co-Authors

| Author Name | Directorate | Center/Division | Field |
|---|---|---|---|
| * Mishal Ahmed | R&M | CES | Economist |
| * Glenn Ambill | Decennial | DITD | IT Specialist (APPSW) |
| * John Cuffe | DEPDIR | DEPDIR | Survey Statistician / MAMBA |
| * Khoa Dong | ECON | ESMD | Mathematical Statistician |
| * Suzanne Dorinski | R&M | CES | Survey Statistician |
| * Juan C. Humud | ECON | ERD | Survey Statistician / PVS Team |
| * Shawn Klimek | R&M | CES | Economist |
| * Daniel Moshinsky | Decennial | ADDC | IT Specialist (APPSW) |
| * Kevin Shaw | Decennial | DITD | Mathematical Statistician / PEARSIS |
| * Damon R. Smith | ECON | ERD | Mathematical Statistician / PVS Team |
| * Yves Thibaudeau | R&M | CSRM | Research Mathematical Statistician |
| * Christine Tomaszewski | ECON | ERD | Mathematical Statistician / PVS Team |
| * Victoria Udalova | DEMO | ADDP | Economist / EHEALTH |
| * Daniel Weinberg | R&M | CSRM | Research Mathematical Statistician |
| * Daniel Whitehead | ECON | ESMD | Mathematical Statistician |

Coordination, Leadership Oversight, Computer Environment Support, Program Management Support

| Name | Directorate | Center/Division | Field |
|---|---|---|---|
| * Krista Park | R&M | Center for Optimization and Data Science (CODS) | |
| * Casey Blalock | R&M | Center for Economic Studies (CES) | Statistician (Data Scientist) |
| * Steven Nesbit | Contractor | OCIO / ADRM | |
| J. David Brown | R&M | Center for Economic Studies (CES) | Economist |
| Kristee Camilletti | R&M | ADRM | Contracts / Acquisitions |
| Jaya Damineni | R&M | Center for Optimization and Data Science (CODS) | CODS ADC - Software Engineering |
| Ken Haase | R&M | ADRM | ST Computer Science |
| Anup Mathur | R&M | Center for Optimization and Data Science (CODS) | Chief, CODS |
| Vincent T. Mule | Decennial | DSSD | Mathematical Statistician |

And thank you to the many people I didn't list.

Research v. Production Record Linkage

Research
- Variety of data set formats / schema
- Easy to experiment with multiple blocking & matching algorithms
- Easy to change settings
- Detailed metadata for both the matching & computational systems

Production
- Restricted data set formats / schema
- Able to do planned blocking & matching algorithm
- Metadata required for downstream production
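To illustrate what "easy to experiment with multiple blocking & matching algorithms" can mean in practice, here is a minimal Python sketch in which the blocking key and the matcher are plain functions that can be swapped independently. The record fields, function names, and the 0.8 similarity threshold are all hypothetical choices for illustration; none of them comes from the presentation or from any of the evaluated products.

```python
from collections import defaultdict
from difflib import SequenceMatcher
from itertools import combinations


def block_by_surname_initial(record):
    """Blocking key: first letter of the surname."""
    return record["surname"][:1].upper()


def block_by_birth_year(record):
    """Alternative blocking key: year of birth."""
    return record["dob"][:4]


def exact_match(a, b):
    """Matcher 1: surname and date of birth must agree exactly."""
    return a["surname"] == b["surname"] and a["dob"] == b["dob"]


def fuzzy_match(a, b, threshold=0.8):
    """Matcher 2: surnames must be similar and dates of birth equal."""
    similarity = SequenceMatcher(None, a["surname"].lower(), b["surname"].lower()).ratio()
    return similarity >= threshold and a["dob"] == b["dob"]


def link(records, blocking_key, matcher):
    """Compare only records that share a blocking key; return matched id pairs."""
    blocks = defaultdict(list)
    for record in records:
        blocks[blocking_key(record)].append(record)
    matches = set()
    for members in blocks.values():
        for a, b in combinations(members, 2):
            if matcher(a, b):
                matches.add((a["id"], b["id"]))
    return matches


# Hypothetical toy records (not derived from any real data set).
records = [
    {"id": 1, "surname": "Johnson", "dob": "1980-05-01"},
    {"id": 2, "surname": "Jonson", "dob": "1980-05-01"},
    {"id": 3, "surname": "Smith", "dob": "1975-11-30"},
]

# Swapping either function is a one-argument change.
strict = link(records, block_by_surname_initial, exact_match)
loose = link(records, block_by_birth_year, fuzzy_match)
```

In a research setting the interesting part is the last two lines: each experiment is a different combination of arguments, whereas a production system would typically fix one planned combination.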

Requirements Gathering & Refinement Phases

1. Current State Assessment (March 2021 Start)
2. Elicit Capability Requirements and Weights
3. Demonstration
4. Gating
5. Technical Solutions Assessment (TSA) Scoring and Selection
6. POCs / QaQI and Score Update
7. TSA Model and Results
8. Findings

Phase 1: Current State

- Repository of Census Bureau record linkage software, research papers, internal reports, and external analysis
- List of commercial, academic, and open source record linkage solutions
- Reviewed reports by research and advisory companies or organizations such as Forrester, Gartner, USDA, and MIT

Phase 2: Elicit Capability Requirements, Criteria and Weights

- The Subject Matter Experts (SMEs) were divided into five teams. Each team met for 3-6 requirements-gathering workshops. Each workshop lasted approximately 2-3 hours.
- The groups were then shuffled into three teams, which met for 3-hour workshops (over 10 workshops) to refine the requirements and finalize criteria and weights for each requirement.
- Finally, the entire group met to walk through and approve the requirements in another 3-hour workshop.
- Total of over 35 sessions.

Capability Requirements Categories and Topics

Requirements are organized into 6 categories aligned with the Census Bureau Technical Solutions Assessment (TSA) Framework published by the Chief Technology Office (CTO)/Office of Systems Engineering (OSE) and used to build out a comprehensive set of topics within each category encapsulating the Records Linkage Capability Requirements.

| Category | Capability Requirement Topics (with number of capability requirements per topic) |
|---|---|
| Technical (182) | Data Handling (81); Outputs/Results (15); Indexing, Blocking, Clustering or Equivalent (16); Use Cases (6); Field Comparison (32); Technical Risk (1); Matching / Classification (31) |
| Operations (102) | Diagnosability (7); Reportability (26); Operational Risk (1); Configurability (16); Operability (21); Supportability (13); Maintainability (3); Monitorability (6); Manageability (1); Serviceability (8) |
| Performance (58) | Elasticity (2); Response Time (5); Risk (2); Adaptability (1); Scalability (12); QaQI Quality Metrics and Experience (20)*; Integration (5); Accuracy (2); Interoperability (3); Performance Metrics (6) |
| Cost Factors (9) | Enterprise Licensing Agreement (1); Source Code Availability (1); Cost Factor Risk (1); Solution Price Model (1); Commitment Requirement (1); Training Price Model (1); Hidden Costs (1); IT Implementation and Support Costs (1); Maintenance and Support Costs (1) |
| User Experience (9) | Training (1); Accessibility 508 (1); Product Documentation (1); Localization (1); Technical Documentation (1); Usability (4) |
| Security (18) | Resilience (1); Cybersecurity (11); Compliance (6) |

*Developed in a later project phase

Total number of Capability Requirements: 378
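As a quick arithmetic check on the slide above, the six category totals can be summed in a few lines of Python. The category names and counts are taken from the slide; the variable names are illustrative. Subtracting the 20 QaQI requirements that were developed in a later phase gives 358, which appears to be the denominator quoted on the later "Condensed TSA" slide (194/358), although the presentation does not state that derivation explicitly.

```python
# Category totals as printed on the slide.
category_totals = {
    "Technical": 182,
    "Operations": 102,
    "Performance": 58,
    "Cost Factors": 9,
    "User Experience": 9,
    "Security": 18,
}

total = sum(category_totals.values())

# The 20 QaQI Quality Metrics and Experience requirements were added later.
qaqi_added_later = 20
before_qaqi = total - qaqi_added_later

print(f"All capability requirements: {total}")
print(f"Before the QaQI additions:   {before_qaqi}")
```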

Phase 3: Demonstrations

- 3-hour presentations on commercial, open source, and internal record linkage solutions:
  - 90-minute demonstration
  - 45-minute answers to distributed questions
  - 45-minute interactive Q&A + suggestions/recommendations
- Demonstrations used simulated data generated by the febrl data generator (200k records in the original file; 300k records in the duplicate file).
- Demonstrations were conducted explicitly NOT as part of an acquisition, with several layers of protection to ensure that Census Bureau staff who participate in future acquisitions in this area will not get the results from these demonstrations.
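The general idea behind a simulated linkage test set (a file of clean originals plus a file of corrupted duplicates whose true source is known) can be sketched as follows. This is not the febrl generator or its API; it is a standard-library toy with hypothetical field names, name lists, and a single typo model, scaled down to 200 originals and 300 duplicates to mirror the 200k/300k ratio mentioned above.

```python
import random

# Hypothetical value pools; a real generator would use frequency tables.
SURNAMES = ["Smith", "Johnson", "Garcia", "Nguyen", "Patel"]
GIVEN_NAMES = ["Alex", "Maria", "Chen", "Fatima", "John"]


def make_original(rng, record_id):
    """Create one clean record with a traceable id."""
    return {
        "id": f"org-{record_id}",
        "given_name": rng.choice(GIVEN_NAMES),
        "surname": rng.choice(SURNAMES),
        "birth_year": rng.randint(1930, 2010),
    }


def corrupt(rng, text):
    """Introduce one typo by swapping two adjacent characters.

    If the two characters happen to be equal, the text is unchanged.
    """
    if len(text) < 2:
        return text
    i = rng.randrange(len(text) - 1)
    return text[:i] + text[i + 1] + text[i] + text[i + 2:]


def make_duplicate(rng, original, dup_number):
    """Copy an original, corrupt one name field, and keep the source id in the new id."""
    duplicate = dict(original)
    duplicate["id"] = original["id"].replace("org", "dup") + f"-{dup_number}"
    field = rng.choice(["given_name", "surname"])
    duplicate[field] = corrupt(rng, duplicate[field])
    return duplicate


def generate(n_originals, n_duplicates, seed=0):
    """Return (originals, duplicates); duplicates are drawn with replacement."""
    rng = random.Random(seed)
    originals = [make_original(rng, i) for i in range(n_originals)]
    duplicates = [
        make_duplicate(rng, rng.choice(originals), k) for k in range(n_duplicates)
    ]
    return originals, duplicates


originals, duplicates = generate(200, 300)
```

Because each duplicate id embeds the id of its source record, the true match status of every pair is known, which is what makes quality metrics computable later.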

Phase 4: Gating
Phase 5: Technical Solutions Assessment (TSA) Scoring and Selection

- 43 key capabilities were identified. They were used to assess the entire pool of candidate software packages and eliminate (gate) those that wouldn't meet the key requirements.
- Remaining packages were scored against the entire TSA.

Lessons Learned
- Small commercial solutions, internal solutions, and open-source solutions were primarily built for the specific purpose of performing records linkage at a component level, versus attempting to deliver end-to-end enterprise capabilities as larger commercial solutions do.
- A large, complex, simulated data set is needed to enable this type of product research. The simulated data set used was too small to fully evaluate the products.
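The two-step process described above (gate on key capabilities, then score the survivors) can be sketched in Python. This is only an illustration of the general pattern: the actual TSA scoring formula is not given in the presentation, so the weighted average, the 0-5 score scale, the weights, and the solution names below are all assumptions. The capability and topic names are borrowed from the slides purely as labels.

```python
def passes_gate(solution, key_capabilities):
    """A solution survives gating only if it meets every key capability."""
    return all(solution["capabilities"].get(name, False) for name in key_capabilities)


def weighted_score(solution, weights):
    """Weighted average of per-requirement scores (missing scores count as 0)."""
    total_weight = sum(weights.values())
    earned = sum(w * solution["scores"].get(req, 0) for req, w in weights.items())
    return earned / total_weight


def assess(solutions, key_capabilities, weights):
    """Gate first, then rank the surviving solutions by weighted score."""
    survivors = [s for s in solutions if passes_gate(s, key_capabilities)]
    ranked = sorted(survivors, key=lambda s: weighted_score(s, weights), reverse=True)
    return [(s["name"], weighted_score(s, weights)) for s in ranked]


# Hypothetical inputs: three of the 43 key capabilities and two weighted topics.
key_capabilities = ["Logging", "Maintain Original Dataset", "Support Deduplication"]
weights = {"Field Comparison": 3, "Scalability": 1}

all_met = {name: True for name in key_capabilities}
solutions = [
    {"name": "Solution A", "capabilities": all_met,
     "scores": {"Field Comparison": 4, "Scalability": 2}},
    {"name": "Solution B", "capabilities": {**all_met, "Logging": False},
     "scores": {"Field Comparison": 5, "Scalability": 5}},
    {"name": "Solution C", "capabilities": all_met,
     "scores": {"Field Comparison": 2, "Scalability": 5}},
]

ranking = assess(solutions, key_capabilities, weights)
```

Note that Solution B is removed at the gate despite having the highest raw scores, which is the point of gating: a missing key capability is disqualifying regardless of strengths elsewhere.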

Reasons for Initial Gating

Eliminating criteria for solutions and gating capability requirement topics, by category:

Technical
- Eliminating criteria: Data Formats; Databases Supported; Isolate/Handle Errors w/o Disruption; Logging; Maintain Original Dataset; Turn Off Built-In Standardizer; Technical Risk
- Gating topics: Data Formats; Databases Supported; Maintain Original Dataset; Local Storage Locations; Turn Off Built-In Standardizer; Process Missing Values; Isolate/Handle Errors w/o Disruption; Logging; Select/Customize Blocking Variables; Only Non-Traditional Indexing Explains Approach; Support Deduplication; Match a single input to another; Matching a single dataset to another; Matching 1:M datasets; Matching M:M datasets; Select two or more datasets to match; De-Duplication Facility; Identify Best Match Available; Store Possible Matches; Match Quality Indicators; Technical Risk

Operations
- Eliminating criteria: Operational Risk
- Gating topics: Census Bureau Approved Operating System; Commercial Software Ability to Obtain SWG Approval; Operational Risk (e.g., Stability)

Performance
- Eliminating criteria: Performance Risk
- Gating topics: Enterprise Standards Profile Ability to be Compatible; Performance Risk (e.g., Stability)

Cost Factors
- Eliminating criteria: Solution Pricing Model; Cost Factor Risk
- Gating topics: Solution Pricing Model; Commitment Requirement; Hidden Costs; Maintenance and Support Costs; Cost Factor Risk (e.g., No Commercial Pricing, No U.S. Sales)

User Experience
- Eliminating criteria: Technical Documentation
- Gating topics: Localization; Minimal Skillset Level; Overall Product Documentation; Technical Documentation

Security
- Gating topics: Internet Connections; Security Monitoring; Enterprise Certificate Authority; RDP or SSH Support, Cryptography; Secure Sockets Layer (SSL); Transport Layer Security (TLS); FedRAMP Solution; National Origin of Solution

Phase 5 (cont): Condensed TSA

- SMEs realized that Census internal and open-source solutions were not intended to result in end-to-end solutions (the model the TSA was designed for).
- Created a subset of the capability requirements (194/358) focused on records linkage engines, better suited to this set of solutions.
- Team recommendations for the hands-on test were informed by, not dictated by, the scores.

Considerations:
- Number of solutions achievable within the schedule
- Is there value add for more algorithms or features in Records Linkage in Python?
- The capability to process the number of records defined in the QaQI use cases
- User base for the solution vs. the language
- Can the solution run on Spark?
- Relative speed of the solution
- Ability to change solution code
- Amount of debugging required to support the QaQI
- QaQI team skillset

Phase 6: QaQI and Score Updates

- Replaced a Proof-of-Concept test involving commercial projects due to logistical hurdles
- Hands-on testing with only internal and open source packages
- Cloud computing environment
- Mix of Decennial Census & business use cases

Results Documented
- Quality metrics calculations
- Revisions to existing TSA capability requirements
- 20 new QaQI Quality Metrics and Experience capability requirements defined and scored
- User experience write-ups

QaQI Results Evaluation
- Forthcoming paper by Yves Thibaudeau
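The presentation does not say which quality metrics the QaQI effort calculated. As an assumption, the sketch below shows the pairwise precision, recall, and F1 measures that are commonly used to evaluate record linkage when the true matches are known (as they are with simulated data). The function name and the example pairs are hypothetical.

```python
def pair_metrics(predicted_pairs, true_pairs):
    """Return (precision, recall, f1) over unordered record-id pairs."""
    # frozenset makes (1, 2) and (2, 1) the same pair.
    predicted = {frozenset(pair) for pair in predicted_pairs}
    truth = {frozenset(pair) for pair in true_pairs}

    true_positives = len(predicted & truth)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(truth) if truth else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical example: 3 true matches, 4 predicted links, 2 of them correct.
true_pairs = [(1, 2), (3, 4), (5, 6)]
predicted_pairs = [(2, 1), (3, 4), (7, 8), (9, 10)]

precision, recall, f1 = pair_metrics(predicted_pairs, true_pairs)
```

Here precision is 2/4 and recall is 2/3; a benchmark would typically report these alongside run time and resource usage for each candidate package.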

Phase 7: TSA Model and Results

- Viable commercial options exist that meet current requirements.
- Commercial options are often pipelines (they are user friendly w/ GUIs).
- Open source options are less frequently complete pipelines.
- Performance and quality benchmarks were developed as part of the QaQI effort.
- Internal and open source solutions that passed the gating review were benchmarked using the process developed during the QaQI effort.

Phase 8: Findings

- Census has emerging requirements that aren't met by existing solutions.
- Census users often need a complete pipeline and would prefer a GUI.

Users want
- A broad range of data transformation / standardization tools built in
- A variety of different (1) blocking and (2) linking algorithm options available within the tool
- Native support for multiprocessing, multi-core processors, and threading
- Real-time monitoring of the environment and resources, including load history, activity, errors, workflows, and services, including triggering actions when criteria are met