Analysis of Deep Learning Models for EEG Data Processing

This presentation covers deep learning systems for processing EEG data from the TUH EEG Corpus. The systems pair a feature extraction stage with a sequential modeler (hidden Markov models, LSTM, or bidirectional LSTM) and a post processor; a generator/discriminator setup for synthetic EEGs is also shown. The networks are built from convolution, max pooling, ReLU activation, and dropout layers. The slides address temporal and spatial context analysis, learning long-term dependencies, and post-processing of the seizure and background posteriors that the models predict from input EEG signals.

Presentation Transcript

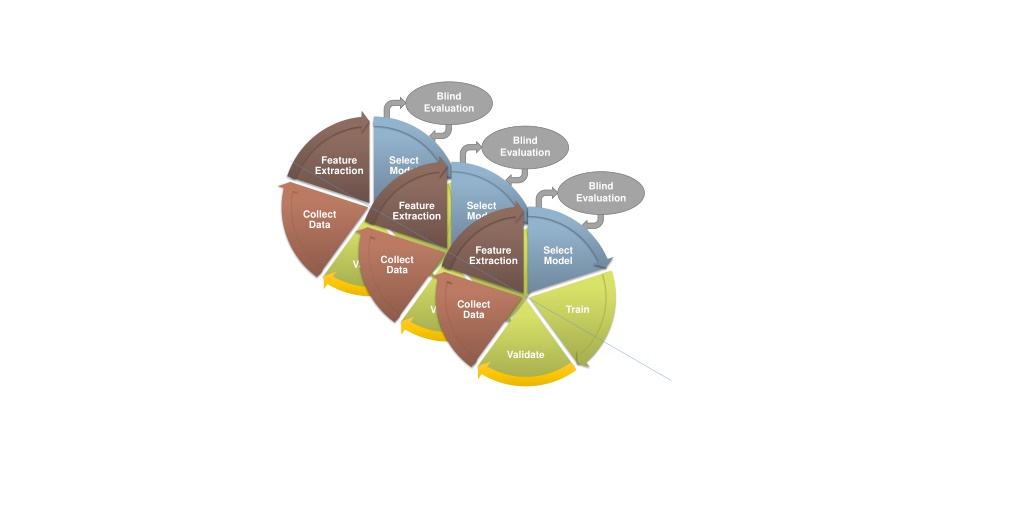

Development workflow diagram. Stages shown: Collect Data, Feature Extraction, Select Model, Train, Validate, Blind Evaluation.

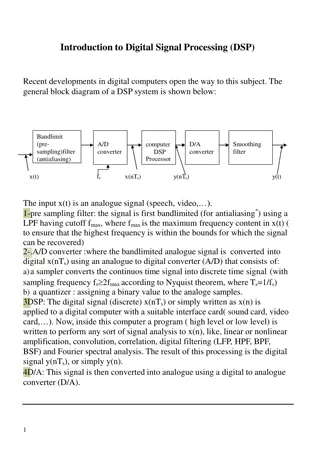

Temporal and Spatial Context Analysis by Deep Learning. Diagram labels: TUH EEG Corpus; Feature Extraction; Sequential Modeler; Hidden Markov Models; Epoch Posteriors; Stacked Denoising Autoencoders; Post Processor; Finite State Machine for Language Model (states S1 to S4); Epoch Label; Output.

CNN architecture diagram (axes: Channels, Time). Layer labels: Input Layer, Convolution, Max Pooling, Flatten, Output. Feature-map sizes shown (channels@height*width): 16@70*22, 16@68*20, 16@34*10, 32@34*10, 32@32*8, 32@16*4, 64@16*4, 64@14*2, 64@7*1.
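The feature-map labels on the CNN slide (16@70*22 through 64@7*1) are consistent with a stack of three stages, each made of one size-preserving convolution, one unpadded 3x3 convolution, and one 2x2 max pooling. The sketch below checks that arithmetic in plain Python. The layer order, the 3x3 kernel, the stride of 1, the padding modes, and the 70x22 input size are assumptions inferred from the labels, not details stated in the transcript, and the helper names are hypothetical.

```python
def conv2d_shape(h, w, kernel=3, padding="valid"):
    """Output (height, width) of a stride-1 2D convolution (assumed 3x3)."""
    if padding == "same":
        return h, w
    return h - kernel + 1, w - kernel + 1


def pool2d_shape(h, w, size=2):
    """Output (height, width) of non-overlapping max pooling (assumed 2x2)."""
    return h // size, w // size


def trace_shapes(h, w):
    """Return (channels, height, width) after each assumed layer."""
    # Assumed layer order: (kind, output channels, padding mode).
    layers = [
        ("conv", 16, "same"), ("conv", 16, "valid"), ("pool", 16, None),
        ("conv", 32, "same"), ("conv", 32, "valid"), ("pool", 32, None),
        ("conv", 64, "same"), ("conv", 64, "valid"), ("pool", 64, None),
    ]
    shapes = []
    for kind, channels, padding in layers:
        if kind == "conv":
            h, w = conv2d_shape(h, w, padding=padding)
        else:
            h, w = pool2d_shape(h, w)
        shapes.append((channels, h, w))
    return shapes


shapes = trace_shapes(70, 22)  # assumed 70x22 input
for channels, h, w in shapes:
    print(f"{channels}@{h}*{w}")
```

Under these assumptions the trace reproduces the nine sizes on the slide in order, which suggests (but does not confirm) this layer arrangement.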

Deep CNN diagram. Labels: Input; repeated Conv2d, ReLU, Dropout blocks whose outputs are combined at "+" nodes; Max Pooling2D (twice); Flatten; Dense; Dropout; Sigmoid; Predictions.

Generator and discriminator diagram. Labels: Noise; Generator (Transposed CNN); Synthetic Generated EEGs; Real World EEGs (TUH EEG Corpus); Discriminator (CNN); Classification Result; Is Discriminator Correct?; Update Models.

Learning Long-Term Dependencies with LSTM. Diagram labels: TUH EEG Corpus; Feature Extraction; Sequential Modeler (LSTM cell with Input Gate, Forget Gate, Output Gate); Output.
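The gate symbols on the LSTM slide did not survive extraction. For reference, the commonly used LSTM formulation with input, forget, and output gates is given below; the notation ($x_t$ for the feature vector at step $t$, $h_t$ for the hidden state, $c_t$ for the cell state, $W$, $U$, $b$ for learned parameters) is an assumption and may differ from the symbols on the original slide.

```latex
\begin{aligned}
i_t &= \sigma(W_i x_t + U_i h_{t-1} + b_i) && \text{(input gate)} \\
f_t &= \sigma(W_f x_t + U_f h_{t-1} + b_f) && \text{(forget gate)} \\
o_t &= \sigma(W_o x_t + U_o h_{t-1} + b_o) && \text{(output gate)} \\
\tilde{c}_t &= \tanh(W_c x_t + U_c h_{t-1} + b_c) \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}
```

The additive update of $c_t$ is what lets information persist across many time steps: when the forget gate stays near 1, the cell state is carried forward largely unchanged.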

Learning Long-Term Dependencies with B-LSTM. Diagram labels: TUH EEG Corpus; Feature Extraction; 2D-Convolution and 2D-Max Pooling stages; Flatten; 1D-Convolution; 1D-Max Pooling; Sequential Modeler (bidirectional LSTM with Input Gate, Forget Gate, Output Gate); Post Processor; Output. Tensor sizes shown: 210@22*26*1, 210@22*26*16, 210@11*13*16, 210@11*13*32, 210@5*6*32, 210@5*6*64, 210@2*3*64, Flatten 210@384, 1D-Convolution 210@16, 1D-Max Pooling 26@16.
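The tensor sizes on the B-LSTM slide can be sanity-checked with a little arithmetic: three rounds of 2x2 pooling take each 22x26 frame down to 2x3, and 2*3*64 matches the flattened size of 384. The sketch below reproduces this. The 2x2 pooling with floor division and the 1D pooling size of 8 (which would reduce 210 steps to 26) are assumptions chosen because they reproduce the labels; the transcript does not state them.

```python
def pool_repeatedly(h, w, times, size=2):
    """Apply non-overlapping pooling `times` times (floor division assumed)."""
    for _ in range(times):
        h, w = h // size, w // size
    return h, w


frame_h, frame_w = pool_repeatedly(22, 26, times=3)  # per-frame spatial size
flat_features = frame_h * frame_w * 64               # 64 channels at the last 2D stage
sequence_steps = 210 // 8                            # assumed 1D pool size of 8

print(frame_h, frame_w, flat_features, sequence_steps)
```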

Hybrid system diagram: Learning Long-Term Dependencies with LSTM. Labels: TUH EEG Corpus; Feature Extraction; Sequential Modeler (Hidden Markov Models); Epoch Posteriors; Sequential Modeler (LSTM with Input Gate, Forget Gate, Output Gate); Post Processor; Finite State Machine for Language Model (states S1 to S4); Epoch Label; Output.

Post-processing diagram (time scale: 4 seconds; tracks: Posterior Seizure and Posterior Background). Steps shown, in order:

1. Probabilistic filtering (threshold = 0.75)
2. Duration filtering (threshold = 3 sec)
3. Target aggregation (margin = 3.54 sec)

The example posterior values drawn along the tracks range from 0.57 to 0.99.
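The three post-processing steps named on this slide can be sketched as follows. The slide gives only the step names and their thresholds (0.75, 3 sec, 3.54 sec); the interpretation of each step, the one-second epoch length, the function names, and the example posteriors are all assumptions made for illustration, not the authors' implementation.

```python
def threshold_events(posteriors, threshold=0.75, epoch_sec=1.0):
    """Probabilistic filtering: keep runs of epochs with posterior >= threshold.

    Returns a list of (start_sec, end_sec) events.
    """
    events, start = [], None
    for i, p in enumerate(posteriors):
        if p >= threshold and start is None:
            start = i
        elif p < threshold and start is not None:
            events.append((start * epoch_sec, i * epoch_sec))
            start = None
    if start is not None:
        events.append((start * epoch_sec, len(posteriors) * epoch_sec))
    return events


def drop_short(events, min_sec=3.0):
    """Duration filtering: discard events shorter than min_sec."""
    return [(s, e) for s, e in events if e - s >= min_sec]


def merge_close(events, margin_sec=3.54):
    """Target aggregation: merge events separated by at most margin_sec."""
    merged = []
    for s, e in events:
        if merged and s - merged[-1][1] <= margin_sec:
            merged[-1] = (merged[-1][0], e)
        else:
            merged.append((s, e))
    return merged


def postprocess(posteriors):
    """Apply the three steps in the order shown on the slide."""
    return merge_close(drop_short(threshold_events(posteriors)))


# Made-up seizure posteriors, one per assumed 1-second epoch.
example = [0.9, 0.8, 0.9, 0.2, 0.1, 0.95, 0.9, 0.85, 0.8, 0.3, 0.9, 0.1]
events = postprocess(example)
print(events)
```

In this example, thresholding yields three candidate events, the one-second event is removed by duration filtering, and the remaining two are merged because the gap between them is shorter than the margin.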