Enhancing Information Retrieval with Augmented Generation Models

Retrieval-augmented generation models, such as REALM and RAG, combine retrieval with generation: they decouple the language model from the background knowledge, which makes the knowledge store easy to scale. The presentation groups these models into differentiable methods (concatenation, as in REALM and RAG; hidden vectors produced by an extra memory encoder; and LM probability reranking, as in kNN-LM) and non-differentiable methods such as WebGPT. The concatenation approach retrieves related documents, concatenates them with the masked context, and generates predictions. The methods covered have been applied to knowledge-intensive NLP tasks, neural machine translation with monolingual translation memory, and long-form question answering.

Presentation Transcript

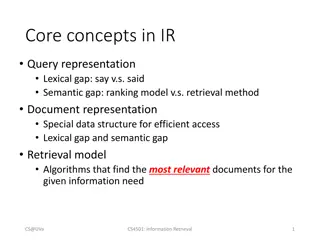

Background

- Retrieval models
  - Recall task: search the candidates given the query
    - Sparse models (TF-IDF, BM25)
    - Dense models (DPR, dense phrase, ...)
  - Rerank task: rank the candidates based on the given context
- Generation models
  - Given the prefix, generate the candidates step by step
- Retrieval-augmented generation (RAG) models
  - Decouple the language model and the background knowledge, easy to scale up
  - Recall related sentences or documents from the corpus
  - Given the prefix and these related resources, generate the candidates step by step
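The sparse recall step can be illustrated with a minimal BM25 sketch. This is an illustration under stated assumptions, not code from the presentation: the whitespace tokenizer, the `k1` and `b` defaults, the non-negative IDF variant, and the toy corpus are all choices made here for brevity.

```python
import math
from collections import Counter

def bm25_scores(query, docs, k1=1.5, b=0.75):
    """Score every document against the query with Okapi BM25.

    Assumptions for this sketch: whitespace tokenization and the
    non-negative IDF variant log((N - n + 0.5) / (n + 0.5) + 1).
    """
    tokenized = [d.lower().split() for d in docs]
    n_docs = len(tokenized)
    avg_len = sum(len(toks) for toks in tokenized) / n_docs
    # Document frequency: number of documents that contain each term.
    df = Counter(term for toks in tokenized for term in set(toks))
    scores = []
    for toks in tokenized:
        tf = Counter(toks)
        score = 0.0
        for term in query.lower().split():
            if term not in tf:
                continue
            idf = math.log((n_docs - df[term] + 0.5) / (df[term] + 0.5) + 1.0)
            norm = tf[term] + k1 * (1 - b + b * len(toks) / avg_len)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores

def recall(query, docs, top_k=2):
    """Recall task: return the indices of the top_k highest-scoring documents."""
    scores = bm25_scores(query, docs)
    ranked = sorted(range(len(docs)), key=lambda i: scores[i], reverse=True)
    return ranked[:top_k]

# Toy corpus, invented for illustration.
corpus = [
    "dense passage retrieval encodes queries and passages as vectors",
    "bm25 is a sparse lexical ranking function",
    "the weather was sunny all week",
]
top = recall("sparse ranking function", corpus, top_k=2)
```

In a full pipeline, the recalled candidates would then be passed to a reranker or concatenated with the prefix for generation.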

Outline of Retrieval-Augmented Generation Models

- Differentiable methods
  - Concatenation: REALM, RAG
  - Hidden vector: using an extra memory encoder
  - LM probability rerank: kNN-LM, Pointer Network
- Non-differentiable methods: WebGPT, Retro

Differentiable Methods: Concatenation

REALM: Retrieval-Augmented Language Model Pre-Training (arXiv 2020)

- Overview: given the partially masked context x, predict the masked token y with the help of the retrieved document z
- Step 1: retrieve related documents with MIPS (maximum inner product search, i.e. dense retrieval)
- Step 2: concatenate the masked context x and the document z, then feed the result into the PLM to compute the prediction logits of y
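The two REALM steps can be sketched as follows, writing x for the masked context, z for a retrieved document, and y for the masked token. This is a simplified illustration, not the authors' implementation: MIPS is done by brute force, and `reader_probs` is a hypothetical stand-in for the PLM's output p(y | x, z) after it has been fed the concatenation of x and z. The toy vectors and probabilities are invented.

```python
import math

def softmax(values):
    """Numerically stable softmax over a list of floats."""
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def inner(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def retrieve_top_k(query_vec, doc_vecs, k):
    """Step 1 (brute-force MIPS): top-k document indices by inner product."""
    scores = [inner(query_vec, d) for d in doc_vecs]
    top = sorted(range(len(doc_vecs)), key=lambda i: scores[i], reverse=True)[:k]
    return top, [scores[i] for i in top]

def marginal_prediction(query_vec, doc_vecs, reader_probs, k=2):
    """Step 2: p(y | x) = sum over retrieved z of p(y | x, z) * p(z | x).

    reader_probs[z] is a hypothetical placeholder for the PLM prediction
    obtained from the concatenated input [x; z].
    """
    top, top_scores = retrieve_top_k(query_vec, doc_vecs, k)
    p_z = softmax(top_scores)  # retrieval distribution over the top-k documents
    vocab = reader_probs[top[0]].keys()
    return {
        y: sum(pz * reader_probs[z][y] for pz, z in zip(p_z, top))
        for y in vocab
    }

# Toy inputs, invented for illustration.
query_vec = [1.0, 0.0]
doc_vecs = [[2.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
reader_probs = [
    {"paris": 0.9, "rome": 0.1},
    {"paris": 0.2, "rome": 0.8},
    {"paris": 0.4, "rome": 0.6},
]
prediction = marginal_prediction(query_vec, doc_vecs, reader_probs, k=2)
```

Because the retrieval distribution enters the prediction through a softmax over inner products, gradients can flow back into the retriever, which is why this family is classed as differentiable.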

Differentiable Methods: Concatenation

Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks (NeurIPS 2020)

- Overview: like REALM, RAG performs retrieval-augmented generation using MIPS and concatenation
- RAG-Sequence model: computes the probability of the whole sequence conditioned on each document, then combines the results across documents
- RAG-Token model: computes each next-token probability from all of the documents
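The difference between the two variants is where the sum over documents sits relative to the product over tokens. A minimal sketch, assuming the retrieval probabilities p(z | x) and the per-token generator probabilities p(y_i | x, z, y_<i) are already available; the numbers below are invented for illustration.

```python
import math

def rag_sequence(doc_priors, token_probs):
    """RAG-Sequence: sum over documents of p(z | x) * product over tokens.

    token_probs[z][i] stands for p(y_i | x, z, y_<i).
    """
    return sum(
        p_z * math.prod(probs) for p_z, probs in zip(doc_priors, token_probs)
    )

def rag_token(doc_priors, token_probs):
    """RAG-Token: product over tokens of (sum over documents of p(z | x) * p(y_i | ...))."""
    n_tokens = len(token_probs[0])
    return math.prod(
        sum(p_z * probs[i] for p_z, probs in zip(doc_priors, token_probs))
        for i in range(n_tokens)
    )

# Two documents, two target tokens. Document 0 supports the first token
# strongly and document 1 supports the second one.
doc_priors = [0.5, 0.5]
token_probs = [
    [0.9, 0.1],
    [0.1, 0.9],
]
p_sequence = rag_sequence(doc_priors, token_probs)
p_token = rag_token(doc_priors, token_probs)
```

In this toy case the token-level variant assigns a higher probability, because it can draw on a different document for each token, while the sequence-level variant must explain the whole output from a single document at a time.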

Differentiable Methods: Hidden Vector

Neural Machine Translation with Monolingual Translation Memory (ACL 2021)

- Overview: follows the basic process of the concatenation-based models (RAG, REALM)
- Difference: an additional memory encoder encodes the memory (retrieved documents or sentences) into dense representations
  - Advantage: faster
  - Disadvantage: the compressed document representation (a dense vector) may lose essential information

Differentiable Methods: LM Probability Rerank

Generalization Through Memorization: Nearest Neighbor Language Models (ICLR 2020)

- Overview: build an offline index of context-target pairs (context = prefix, target = next token), then use the k-NN target-token probabilities to rerank the next-token probability
- kNN context-target retrieval: find related context-target pairs by computing the similarity between the stored contexts and the query
- Reranking the next-token probability: the final next-token probability combines the language model's distribution with the normalized distribution over the kNN targets
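The reranking step can be sketched as a linear interpolation between the language model distribution and a distribution built from the retrieved neighbors. This is a minimal sketch under assumptions: the datastore is a plain list searched by brute force, squared L2 distance is used as the (dis)similarity, and the interpolation weight `lam` is a tunable hyperparameter whose value here is arbitrary. The toy vectors are invented.

```python
import math

def knn_distribution(query, datastore, k):
    """Build p_kNN from the k nearest (context vector, target token) pairs.

    Each neighbor contributes exp(-distance) to its target token, and the
    weights are then normalized to sum to one.
    """
    neighbors = sorted(
        (
            (sum((a - b) ** 2 for a, b in zip(key, query)), token)
            for key, token in datastore
        ),
        key=lambda pair: pair[0],
    )[:k]
    weights = {}
    for dist, token in neighbors:
        weights[token] = weights.get(token, 0.0) + math.exp(-dist)
    total = sum(weights.values())
    return {token: w / total for token, w in weights.items()}

def knn_lm(p_lm, p_knn, lam):
    """Final distribution: lam * p_kNN + (1 - lam) * p_LM."""
    vocab = set(p_lm) | set(p_knn)
    return {
        t: lam * p_knn.get(t, 0.0) + (1 - lam) * p_lm.get(t, 0.0) for t in vocab
    }

# Offline index: context vectors (keys) with the token that followed (values).
datastore = [
    ([0.0, 0.0], "paris"),
    ([0.1, 0.0], "paris"),
    ([3.0, 3.0], "london"),
]
query = [0.0, 0.1]  # representation of the current prefix
p_knn = knn_distribution(query, datastore, k=2)
p_lm = {"paris": 0.4, "london": 0.6}
final = knn_lm(p_lm, p_knn, lam=0.5)
```

Here the language model alone prefers "london", but both nearest neighbors are followed by "paris", so the interpolated distribution flips the ranking.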

Non-differentiable Methods

WebGPT: Browser-assisted question-answering with human feedback (arXiv 2021.12)

- Overview: fine-tune GPT-3 to answer long-form questions based on knowledge retrieved from an online search engine (Bing), which means the optimization process is non-differentiable
- Define demonstrations for fine-tuning the GPT-3 model
  1. At each step, the model must issue one of the commands
  2. When the model issues the command End: Answer, or the maximum number of references is reached, the GPT-3 model begins to write the answer based on the question and all of the references recorded during browsing

Discussion of non-differentiable methods

- Disadvantage: non-differentiable models are hard to optimize. WebGPT relies on several complex optimization methods to fine-tune the model (supervised learning, reinforcement learning, reward modeling, and rejection sampling)
- Advantage: gets rid of the dependence, more flexible, easy to scale
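The browsing procedure described for WebGPT can be sketched as a simple command loop. This is a heavily simplified illustration, not the actual WebGPT environment: `policy` is a hypothetical stand-in for the fine-tuned model, the command set is reduced to "Search", "Quote" and "End: Answer" for this sketch, and `max_steps` is a safety limit added here.

```python
def browse(question, policy, max_references=3, max_steps=20):
    """Run the command loop and return the references collected while browsing.

    policy(question, history, references) is a hypothetical callable that
    returns one (command, argument) pair per step.
    """
    references = []
    history = []
    for _ in range(max_steps):  # max_steps is an assumption of this sketch
        command, argument = policy(question, history, references)
        history.append((command, argument))
        if command == "Quote":
            # Quoting a passage records it as a reference.
            references.append(argument)
        # Stop when the model ends browsing or the reference budget is used up.
        if command == "End: Answer" or len(references) >= max_references:
            break
    return references

def scripted_policy(question, history, references):
    """Toy policy that replays a fixed script instead of calling a model."""
    script = [
        ("Search", question),
        ("Quote", "passage A"),
        ("Quote", "passage B"),
        ("End: Answer", None),
    ]
    return script[len(history)]

collected = browse("why is the sky blue", scripted_policy, max_references=3)
capped = browse("why is the sky blue", scripted_policy, max_references=1)
```

After the loop ends, the model would write the answer from the question and the collected references. The discrete commands and the external search engine are what break the gradient path between the answer and the retrieval step.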