Understanding Multiclass Logistic Regression in Data Science

Multiclass logistic regression extends standard logistic regression to predict outcomes with more than two categories. It includes ordinal logistic regression for hierarchical categories and multinomial logistic regression for non-ordered categories. By fitting separate models for each category, such as predicting student concentrations based on various predictors, multiclass logistic regression helps estimate probabilities of individuals falling into different classes. The model can be represented mathematically to predict multiple classes from a set of predictors.

- Multiclass Logistic Regression

- Data Science

- Ordinal Logistic Regression

- Multinomial Logistic Regression

- Predictive Modeling


Presentation Transcript

Lecture 18: Multiclass Logistic Regression. CS109A Introduction to Data Science. Pavlos Protopapas, Kevin Rader, and Chris Tanner

Lecture Outline:

- Multiclass Classification

- Multinomial Logistic Regression

- OvR Logistic Regression

- k-NN for multiclass

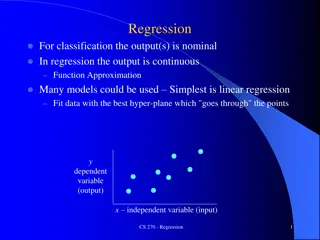

Logistic Regression for predicting 3+ Classes

There are several extensions to standard logistic regression when the response variable Y has more than 2 categories. The two most common are:

1. ordinal logistic regression
2. multinomial logistic regression

Ordinal logistic regression is used when the categories have a specific hierarchy (like class year: Freshman, Sophomore, Junior, Senior; or a 7-point rating scale from strongly disagree to strongly agree). Multinomial logistic regression is used when the categories have no inherent order (like eye color: blue, green, brown, hazel, etc.).

Multinomial Logistic Regression

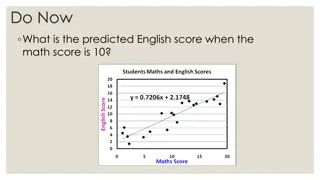

Multinomial Logistic Regression

There are two common approaches to estimating a nominal (not ordinal) categorical variable that has more than 2 classes. The first approach sets one of the categories in the response variable as the reference group, and then fits separate logistic regression models to predict each of the other categories against the reference group. For example, we could attempt to predict a student's concentration (y = 1 for CS, y = 2 for Stat, y = 3 for other) from predictors $X_1$ (number of psets per week), $X_2$ (how much time spent playing video games per week), etc.

Multinomial Logistic Regression (cont.)

We could select the y = 3 case as the reference group (other concentration), and then fit two separate models: a model to predict y = 1 (CS) from y = 3 (others) and a separate model to predict y = 2 (Stat) from y = 3 (others).

Ignoring interactions, how many parameters would need to be estimated?

How could these models be used to estimate the probability of an individual falling in each concentration?

Multinomial Logistic Regression: the model

To predict K classes (K > 2) from a set of predictors X, a multinomial logistic regression can be fit:

$$
\begin{aligned}
\ln\left(\frac{P(Y=1)}{P(Y=K)}\right) &= \beta_{0,1} + \beta_{1,1}X_1 + \beta_{2,1}X_2 + \cdots + \beta_{p,1}X_p \\
\ln\left(\frac{P(Y=2)}{P(Y=K)}\right) &= \beta_{0,2} + \beta_{1,2}X_1 + \beta_{2,2}X_2 + \cdots + \beta_{p,2}X_p \\
&\;\;\vdots \\
\ln\left(\frac{P(Y=K-1)}{P(Y=K)}\right) &= \beta_{0,K-1} + \beta_{1,K-1}X_1 + \beta_{2,K-1}X_2 + \cdots + \beta_{p,K-1}X_p
\end{aligned}
$$

Each separate model can be fit as an independent standard logistic regression model!

Multinomial Logistic Regression in sklearn

But wait Kevin, I thought you said we only fit K - 1 logistic regression models!?!? Why are there K intercepts and K sets of coefficients????
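The K intercepts and K sets of coefficients can be reproduced with a minimal sketch like the one below. It assumes scikit-learn and NumPy are installed, and the data (3 classes, 2 predictors) are synthetic and made up purely for illustration; they are not the data from the lecture.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression

# Synthetic data (an assumption for illustration): 3 classes, 2 predictors.
rng = np.random.default_rng(0)
centers = np.array([[0.0, 0.0], [3.0, 0.0], [0.0, 3.0]])
y = np.repeat([0, 1, 2], 50)
X = centers[y] + rng.normal(size=(150, 2))

# In recent scikit-learn versions, the default solver fits a single
# multinomial model when the response has more than 2 classes.
model = LogisticRegression().fit(X, y)

print(model.intercept_.shape)  # one intercept per class
print(model.coef_.shape)       # one row of coefficients per class
probs = model.predict_proba(X[:5])
print(probs.sum(axis=1))       # class probabilities for each row sum to 1
```

With K = 3 classes the fitted object holds 3 intercepts and a 3-by-2 coefficient array, which is the behavior the slide is asking about.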

What is sklearn doing?

The K - 1 models in multinomial regression lead to the following probability predictions:

$$
\ln\left(\frac{P(Y=k)}{P(Y=K)}\right) = \beta_{0,k} + \beta_{1,k}X_1 + \beta_{2,k}X_2 + \cdots + \beta_{p,k}X_p
$$

$$
P(Y=k) = P(Y=K)\, e^{\beta_{0,k} + \beta_{1,k}X_1 + \beta_{2,k}X_2 + \cdots + \beta_{p,k}X_p}
$$

This gives us K - 1 equations to estimate K probabilities for everyone. But probabilities add up to 1, so we are all set.

Sklearn then converts the above probabilities back into new betas (just like logistic regression, but the betas won't match):

$$
\ln\left(\frac{P(Y=k)}{P(Y\neq k)}\right) = \beta'_{0,k} + \beta'_{1,k}X_1 + \beta'_{2,k}X_2 + \cdots + \beta'_{p,k}X_p
$$
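As a rough illustration of how K - 1 equations plus the sum-to-1 constraint pin down all K probabilities, here is a small sketch in plain Python. The function name `reference_coded_probs` and the two log-odds values are hypothetical, chosen only for the example.

```python
import math

def reference_coded_probs(log_odds):
    """Turn K-1 log-odds against the reference class into K probabilities.

    log_odds[k] is ln(P(Y = k+1) / P(Y = K)); the reference class K is
    returned as the last entry.
    """
    odds = [math.exp(v) for v in log_odds]
    denom = 1.0 + sum(odds)  # the reference class has odds 1 against itself
    return [o / denom for o in odds] + [1.0 / denom]

# Hypothetical linear-predictor values: CS vs. other and Stat vs. other.
probs = reference_coded_probs([0.4, -0.3])
print(probs)
print(sum(probs))
```

Dividing each odds value by one plus the sum of all odds is just the constraint that the K probabilities add up to 1, solved for P(Y = K).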

One vs. Rest (OvR) Logistic Regression

One vs. Rest (OvR) Logistic Regression

An alternative multiclass logistic regression model in sklearn is called the One vs. Rest approach, which is our second method. If there are 3 classes, then 3 separate logistic regressions are fit, where the probability of each category is predicted over the rest of the categories combined. So for the concentration example, 3 models would be fit:

- a first model would be fit to predict CS from (Stat and Others) combined.
- a second model would be fit to predict Stat from (CS and Others) combined.
- a third model would be fit to predict Others from (CS and Stat) combined.
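The relabeling behind the three OvR models can be sketched as follows. The helper `one_vs_rest_targets` and the six student labels are hypothetical; each binary target would then be handed to a standard logistic regression.

```python
def one_vs_rest_targets(labels):
    """Return {class: binary target list}: 1 for that class, 0 for the rest."""
    classes = sorted(set(labels))
    return {c: [1 if lab == c else 0 for lab in labels] for c in classes}

# Hypothetical concentrations for six students.
labels = ["CS", "Stat", "Other", "CS", "Other", "Stat"]
targets = one_vs_rest_targets(labels)
for c, t in targets.items():
    print(c, t)
```

Each observation is a 1 in exactly one of the three targets, so the three binary problems together cover the original 3-class response.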

One vs. Rest (OvR) Logistic Regression: the model

To predict K classes (K > 2) from a set of predictors X, an OvR logistic regression can be fit:

$$
\begin{aligned}
\ln\left(\frac{P(Y=1)}{P(Y\neq 1)}\right) &= \beta_{0,1} + \beta_{1,1}X_1 + \beta_{2,1}X_2 + \cdots + \beta_{p,1}X_p \\
\ln\left(\frac{P(Y=2)}{P(Y\neq 2)}\right) &= \beta_{0,2} + \beta_{1,2}X_1 + \beta_{2,2}X_2 + \cdots + \beta_{p,2}X_p \\
&\;\;\vdots \\
\ln\left(\frac{P(Y=K)}{P(Y\neq K)}\right) &= \beta_{0,K} + \beta_{1,K}X_1 + \beta_{2,K}X_2 + \cdots + \beta_{p,K}X_p
\end{aligned}
$$

Again, each separate model can be fit as an independent standard logistic regression model!

Softmax

So how do we convert a set of probability estimates from separate models to one set of probability estimates? The softmax function is used. That is, the weights are just normalized for each predicted probability. In other words, predict the 3 class probabilities from each of the 3 models, and just rescale so they add up to 1. Mathematically that is:

$$
P(y = k \mid \vec{x}) = \frac{e^{\vec{x}^{T}\hat{\beta}_k}}{\sum_{j=1}^{K} e^{\vec{x}^{T}\hat{\beta}_j}}
$$

where $\vec{x}$ is the vector of predictors for that observation and $\hat{\beta}_k$ are the associated logistic regression coefficient estimates.
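A minimal sketch of the two operations described here, in plain Python. The function names and the input numbers are hypothetical. `softmax` takes the linear-predictor values (one per class) as in the formula, while `rescale` takes probabilities that were estimated separately and divides by their total.

```python
import math

def softmax(scores):
    """Map real-valued scores (x^T beta_k, one per class) to probabilities."""
    m = max(scores)  # subtracting the max avoids overflow; the result is unchanged
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def rescale(probs):
    """Rescale separately estimated probabilities so they add up to 1."""
    total = sum(probs)
    return [p / total for p in probs]

print(softmax([1.0, 0.2, -0.5]))  # hypothetical linear-predictor values
print(rescale([0.6, 0.3, 0.3]))   # hypothetical OvR estimates summing to 1.2
```

Both functions return values that sum to 1 and keep the ordering of the classes, so the predicted class is the same before and after normalization.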

OvR Logistic Regression in Python

Phew! This one is as expected.

Predicting Type of Play in the NFL

Classification for more than 2 Categories

When there are more than 2 categories in the response variable, there is no guarantee that P(Y = k) > 0.5 for any one category. So any classifier based on logistic regression (or another classification model) will instead have to select the group with the largest estimated probability.

The classification boundaries are then much more difficult to determine mathematically. We will not get into the algorithm for determining these in this class, but we can rely on predict and predict_proba!
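The "largest estimated probability" rule can be sketched in a few lines. The class names and probabilities below are hypothetical, picked so that no class reaches 0.5.

```python
def classify(probs, classes):
    """Pick the class with the largest estimated probability."""
    best = max(range(len(probs)), key=lambda i: probs[i])
    return classes[best]

classes = ["CS", "Stat", "Other"]
probs = [0.40, 0.35, 0.25]  # hypothetical estimates; none exceeds 0.5
print(classify(probs, classes))  # prints CS
```

A 0.5 cutoff would leave this observation unclassified, which is why the largest-probability rule is used instead.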

Prediction using Multiclass Logistic Regression

Classification Boundary for 3+ Classes in sklearn

Estimation and Regularization in multiclass settings

There is no difference in the approach to estimating the coefficients in the multiclass setting: we maximize the log-likelihood (or minimize the negative log-likelihood). This combined negative log-likelihood of all K classes is sometimes called the binary cross-entropy:

$$
\ell = -\sum_{i=1}^{n}\sum_{k=1}^{K}\Big[\mathbb{1}(y_i = k)\,\ln\big(\hat{P}(y_i = k)\big) + \mathbb{1}(y_i \neq k)\,\ln\big(1 - \hat{P}(y_i = k)\big)\Big]
$$

And regularization can be done like always: add a penalty term to this loss function based on the L1 (sum of the absolute values) or L2 (sum of squares) norm of the coefficients.
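One way to read this loss is as a sum of binary cross-entropy terms, one per observation and class, with a penalty added on top. The sketch below follows that reading; the function names, the probability estimates, the coefficients, and the penalty weight `lam` are all hypothetical, and this is not how sklearn computes its objective internally.

```python
import math

def binary_cross_entropy(probs, y):
    """Negative log-likelihood summed over observations and classes.

    probs[i][k] is the estimated P(y_i = k), strictly between 0 and 1;
    y[i] is the observed class index for observation i.
    """
    loss = 0.0
    for p_row, y_i in zip(probs, y):
        for k, p in enumerate(p_row):
            loss -= math.log(p) if y_i == k else math.log(1.0 - p)
    return loss

def penalized_loss(probs, y, betas, lam, norm="l2"):
    """Add lam times an L1 or L2 penalty on the (non-intercept) coefficients."""
    flat = [b for row in betas for b in row]
    if norm == "l1":
        penalty = sum(abs(b) for b in flat)
    else:
        penalty = sum(b * b for b in flat)
    return binary_cross_entropy(probs, y) + lam * penalty

# Hypothetical estimates for 2 observations and 3 classes.
probs = [[0.7, 0.2, 0.1], [0.1, 0.3, 0.6]]
y = [0, 2]
betas = [[0.5, -1.0], [0.0, 2.0]]
print(binary_cross_entropy(probs, y))
print(penalized_loss(probs, y, betas, lam=0.1))
```

A larger `lam` puts more weight on keeping the coefficients small relative to fitting the observed classes.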

Multiclass k-NN

k-NN for 3+ Classes

Extending the k-NN classification model to 3+ classes is much simpler! Remember, k-NN is done in two steps:

1. Find your k neighbors: this is still done in the exact same way (be careful of the scaling of your predictors!).
2. Predict based on your neighborhood:
   - Predicting probabilities: just use the observed proportions.
   - Predicting classes: plurality wins!
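The two steps can be sketched in plain Python (3.8+ for `math.dist`). The helper names, the seven data points, and the play-type labels are hypothetical; the predictors are standardized first because they sit on very different scales.

```python
import math
from collections import Counter

def standardize(X):
    """Rescale each predictor to mean 0 and standard deviation 1."""
    cols = list(zip(*X))
    means = [sum(c) / len(c) for c in cols]
    sds = [math.sqrt(sum((v - m) ** 2 for v in c) / len(c))
           for c, m in zip(cols, means)]
    return [[(v - m) / s for v, m, s in zip(row, means, sds)] for row in X]

def knn_predict(X, y, query, k):
    """Return (class proportions among the k nearest neighbors, plurality class)."""
    nearest = sorted(range(len(X)), key=lambda i: math.dist(X[i], query))[:k]
    counts = Counter(y[i] for i in nearest)
    proportions = {c: n / k for c, n in counts.items()}
    # On a tie, most_common returns the class that was counted first.
    return proportions, counts.most_common(1)[0][0]

# Hypothetical data: two predictors on different scales, three classes.
X = [[1.0, 100.0], [1.2, 110.0], [3.0, 300.0], [3.1, 310.0],
     [5.0, 500.0], [5.2, 480.0], [1.1, 120.0]]
y = ["run", "run", "pass", "pass", "kick", "kick", "run"]
Z = standardize(X)

# Treat the first point as the query and the rest as training data.
proportions, label = knn_predict(Z[1:], y[1:], Z[0], k=3)
print(proportions)
print(label)
```

The proportions play the role of predicted probabilities, and the plurality class is the predicted label, so nothing beyond counting is needed to go from 2 classes to 3 or more.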

k-NN for 3+ Classes: NFL Data

Exercise Time!

- Ex. 1 (graded): Basic multiclass Logistic Regression and k-NN in sklearn (15+ min)

- Ex. 2 (not graded): A little more thinking and understanding (30+ min)