Effective Data Augmentation with Projection for Distillation

Data augmentation plays a crucial role in knowledge distillation, where generating diverse training data helps the student model perform better. The presentation contrasts token replacement, whose augmented text has limited semantic diversity, with representation interpolation (mixup), a technique popularized for improving image classifiers that yields richer semantics. Because language is discrete, interpolated representations do not correspond to real text, so the authors propose augmentation with projection (AugPro) as an effective and efficient paradigm. The slides cover interpolation of student and teacher embeddings, a KL-divergence distillation loss, and the projection of observed and representation-interpolated (RI) data back to discrete tokens so that the teacher and student models see consistent inputs.

- Data Augmentation

- Knowledge Distillation

- Image Classifier

- Performance Improvement

- Projection Paradigm


Presentation Transcript

Augmentation with Projection: Towards an Effective and Efficient Data Augmentation Paradigm for Distillation

Ziqi Wang, Yuexin Wu, Frederick Liu, Daogao Liu, Le Hou, Hongkun Yu, Jing Li, Heng Ji

Data augmentation in knowledge distillation: token replacement (limited semantics)
Original: "A sad, superior human comedy played out on the back roads of life."
Augmented: "A lamentable, superior human comedy played out on the backward roads of life."
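A minimal sketch of token replacement, for illustration only: the synonym table `SYNONYMS` and the function `token_replacement` are hypothetical, and a real pipeline might draw candidates from a thesaurus or a masked language model instead. It works on the whitespace-tokenized form of the sentence on this slide.

```python
import random

# Hypothetical synonym table (an assumption for illustration only).
SYNONYMS = {
    "sad": ["lamentable"],
    "back": ["backward"],
}


def token_replacement(tokens, synonyms, p=0.5, seed=0):
    """Replace each token that has a known synonym with probability p."""
    rng = random.Random(seed)
    out = []
    for tok in tokens:
        candidates = synonyms.get(tok.lower())
        if candidates and rng.random() < p:
            out.append(rng.choice(candidates))
        else:
            out.append(tok)
    return out


sentence = "A sad , superior human comedy played out on the back roads of life."
augmented = token_replacement(sentence.split(), SYNONYMS, p=1.0)
print(" ".join(augmented))
# A lamentable , superior human comedy played out on the backward roads of life.
```

Each output differs from its source sentence by only a few words, which is why the slide labels this approach as having limited semantics.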

Data augmentation in knowledge distillation: token replacement (limited semantics) vs. representation interpolation
Representation interpolation: the student embeddings of "watch on video at home" and "as good ," are interpolated.

Data augmentation in knowledge distillation: token replacement (limited semantics) vs. representation interpolation
Representation interpolation (mixup) as used for image classifiers: https://medium.com/@wolframalphav1.0/easy-way-to-improve-image-classifier-performance-part-1-mixup-augmentation-with-codes-33288db92de5

Data augmentation in knowledge distillation: token replacement (limited semantics) vs. representation interpolation
The same interpolation can be applied to the teacher embeddings of "watch on video at home" and "as good ,".
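Representation interpolation (mixup) can be sketched as a position-by-position convex combination of two embedding sequences. The toy table `EMB`, the zero-vector padding, and drawing the mixing weight from Beta(alpha, alpha) follow common mixup practice but are assumptions here, not details taken from the slides. The mixed vectors generally match no word in the vocabulary. In distillation, the teacher's prediction on the mixed input can serve as the target, so no label mixing is shown.

```python
import random

# Hypothetical 2-d toy embeddings standing in for a model's embedding table.
EMB = {
    "watch": [1.0, 0.0], "on": [0.0, 0.5], "video": [0.5, 1.0],
    "at": [0.25, 0.25], "home": [0.5, 0.5],
    "as": [0.0, 1.0], "good": [1.0, 1.0], ",": [0.0, 0.0],
}


def embed(tokens, table):
    """Look up one embedding vector per token."""
    return [table[t] for t in tokens]


def interpolate(emb_a, emb_b, lam):
    """Return lam * emb_a + (1 - lam) * emb_b at every position.

    The shorter sequence is padded with zero vectors (an assumption; real
    implementations usually use the padding token's embedding plus a mask).
    """
    dim = len(emb_a[0])
    length = max(len(emb_a), len(emb_b))
    zero = [0.0] * dim
    a = emb_a + [zero] * (length - len(emb_a))
    b = emb_b + [zero] * (length - len(emb_b))
    return [[lam * x + (1 - lam) * y for x, y in zip(va, vb)]
            for va, vb in zip(a, b)]


def sample_lambda(alpha=0.4, seed=None):
    """Draw the mixing weight from Beta(alpha, alpha), as in mixup."""
    return random.Random(seed).betavariate(alpha, alpha)


sent_a = "watch on video at home".split()
sent_b = "as good ,".split()
mixed = interpolate(embed(sent_a, EMB), embed(sent_b, EMB), lam=0.5)
print(mixed[0], mixed[4])  # [0.5, 0.5] [0.25, 0.25]
```

For example, `[0.5, 0.5]` at the first position lies halfway between "watch" and "as" and coincides with "home" only by accident of this toy table; with realistic high-dimensional embeddings, such points almost never land exactly on a real word.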

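Data augmentation in knowledge distillation: token replacement (limited semantics) vs. representation interpolation (rich semantics)
The student model is trained to match the teacher model's predictions on the augmented data with a KL-divergence (KLDiv) loss.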
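The KL-divergence objective on this slide can be sketched as a generic distillation loss, written for illustration and not taken from the authors' code. It assumes the teacher's temperature-softened distribution is the target and the `temperature` parameter is an assumption; many implementations also scale the loss by the temperature squared, which is omitted here.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over temperature-scaled logits."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kd_kl_loss(teacher_logits, student_logits, temperature=1.0):
    """KL(p_teacher || p_student) on softened distributions."""
    p = softmax(teacher_logits, temperature)  # target distribution
    q = softmax(student_logits, temperature)  # student distribution
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


teacher = [2.0, 0.5, -1.0]
student = [1.5, 0.7, -0.5]
print(kd_kl_loss(teacher, student, temperature=2.0))
```

The loss is zero when the student reproduces the teacher's distribution exactly and positive otherwise, so minimizing it on augmented inputs pulls the student toward the teacher.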

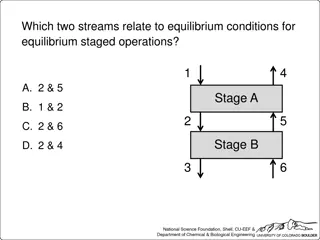

Data augmentation in knowledge distillation: the discrete nature of language makes representation interpolation sub-optimal
Figure panels: observed data and RI data; observed / RI data with projection; all data.

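Data augmentation in knowledge distillation: representation inconsistency
When the student model and the teacher model are compared with a KL-divergence (KLDiv) loss on interpolated representations, are their inputs the same?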

Methodology

- Token replacement: limited semantics

- Representation interpolation: rich semantics

- Our method, AugPro: rich semantics + discrete nature

Example: teacher embeddings of "watch on video at home" and "as good ,".

Methodology: AugPro (rich semantics + discrete nature)
The teacher embeddings of "watch on video at home" and "as good ," are interpolated, and a projection (e.g., nearest neighbors) maps the result back to discrete tokens; on the slide, the projected tokens include "Watch" and "good video at home".
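A minimal sketch of interpolation followed by nearest-neighbor projection, under stated assumptions: squared Euclidean distance is used for the neighbor search, the 2-d vocabulary `VOCAB_EMB` (including the extra token "great") is hypothetical, and positions are paired only up to the shorter sentence. It illustrates the idea on the slide and is not the authors' implementation. Because every interpolated vector is snapped to a real token, the output is discrete text that the teacher and the student can both consume.

```python
# Hypothetical 2-d toy embedding table; a real teacher would use its own
# learned embeddings with hundreds of dimensions.
VOCAB_EMB = {
    "watch": [0.0, 0.0],
    "on": [4.0, 0.0],
    "video": [8.0, 0.0],
    "as": [0.0, 4.0],
    "good": [4.0, 4.0],
    ",": [8.0, 4.0],
    "great": [0.0, 2.2],
}


def sq_dist(u, v):
    """Squared Euclidean distance (the metric is an assumption)."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def project_to_tokens(mixed, vocab_emb):
    """Map each interpolated vector to the token with the nearest embedding."""
    return [min(vocab_emb, key=lambda tok: sq_dist(vec, vocab_emb[tok]))
            for vec in mixed]


def interpolate_and_project(tokens_a, tokens_b, vocab_emb, lam):
    """Mix two sentences in embedding space, then project back to tokens.

    zip() pairs positions only up to the shorter sentence (a simplification).
    """
    mixed = [
        [lam * x + (1 - lam) * y for x, y in zip(vocab_emb[a], vocab_emb[b])]
        for a, b in zip(tokens_a, tokens_b)
    ]
    return project_to_tokens(mixed, vocab_emb)


augmented = interpolate_and_project(
    ["watch", "on", "video"], ["as", "good", ","], VOCAB_EMB, lam=0.6)
print(augmented)  # ['great', 'on', 'video']
```

The first position lands nearest to "great", a token from neither input sentence, so the projected sentence keeps some of the new semantics of interpolation while remaining valid discrete text.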

Conclusion

- We propose a new, effective data augmentation technique for knowledge distillation that considers the discrete nature of language.

- Representation interpolation has proven effective in vision tasks.

- However, the discreteness of language must be taken into account when vision techniques are applied to NLP tasks.

- These two observations motivate our research.

- Results show that our method is effective compared with other augmentation methods.