Understanding Type I and Type III Sums of Squares in Experimental Design

Exploring the significance of Type I and Type III sums of squares in unbalanced experimental designs, highlighting the potential biases in treatment effect estimates and the differences in partitioning variation based on the order of terms entered in the model.

Confounding in Unbalanced Designs
When designs are unbalanced, typically because of missing values, our estimates of treatment effects can be biased. The usual computational formulas for sums of squares can also give misleading results, because some of the variability in the data can be explained by two or more variables.

What is a simple linear model for this layout, and what does it say about any comparison of treatment means?
Notation for the layout and the model.

Suppose we want to compare treatment 1 with treatment 2. The model says that if we simply compare the treatment averages, the difference can be biased by block effects whenever the two treatments do not appear in the same blocks.
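The slide's own notation is not reproduced in this transcript. The following is therefore a sketch that assumes the standard additive block model with at most one observation per treatment-block cell. The symbols μ, τ_i, β_j and B_i (the set of blocks in which treatment i appears) are introduced here for illustration. Averaging treatment i over the blocks in B_i shows where the bias comes from:

```latex
\begin{aligned}
y_{ij} &= \mu + \tau_i + \beta_j + \varepsilon_{ij}, \qquad E[\varepsilon_{ij}] = 0,\\
\bar{y}_{i\cdot} &= \frac{1}{|B_i|}\sum_{j \in B_i} y_{ij},\\
E\left[\bar{y}_{1\cdot} - \bar{y}_{2\cdot}\right]
  &= (\tau_1 - \tau_2)
   + \left(\frac{1}{|B_1|}\sum_{j \in B_1}\beta_j - \frac{1}{|B_2|}\sum_{j \in B_2}\beta_j\right).
\end{aligned}
```

The second bracket is the block bias. It vanishes when both treatments appear in exactly the same blocks (B_1 = B_2), as they do in a complete block design.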

Type I vs. Type III in partitioning variation
If an experimental design is not a balanced, complete factorial design, it is generally not an orthogonal design. If a two-factor design is not orthogonal, the SSModel does not partition into unique components: some components of variation may be explained by either factor individually (or by both simultaneously).
Type I SS are computed sequentially, according to the order in which terms are entered in the model.
Type III SS are computed in an order-independent fashion: each term gets the SS it would receive as a Type I SS if it were the last term entered.
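Below is a minimal sketch of the two definitions. It assumes an additive model with no interaction, like the models on these slides, so that the Type III SS for a term equals the SS it adds when entered last. The helper names (`model_ss`, `dummies`, `type_i_and_iii`) are hypothetical, and the small data set is made up for illustration: treatment C is missing from block 3. Least squares is solved with plain Gauss-Jordan elimination so that only standard Python is needed.

```python
# Sketch: Type I (sequential) vs Type III (entered-last) SS for an additive
# Block + Trt model. Helper names and data are hypothetical; standard library only.

def model_ss(y, columns):
    """Model SS (corrected for the mean) from regressing y on an intercept plus columns."""
    n = len(y)
    X = [[1.0] + [col[r] for col in columns] for r in range(n)]
    p = len(X[0])
    # Augmented normal equations [X'X | X'y], solved by Gauss-Jordan with partial pivoting.
    # Assumes X has full column rank (a connected design with reference coding).
    A = [[sum(X[r][i] * X[r][j] for r in range(n)) for j in range(p)]
         + [sum(X[r][i] * y[r] for r in range(n))] for i in range(p)]
    for c in range(p):
        piv = max(range(c, p), key=lambda r: abs(A[r][c]))
        A[c], A[piv] = A[piv], A[c]
        for r in range(p):
            if r != c:
                factor = A[r][c] / A[c][c]
                A[r] = [a - factor * b for a, b in zip(A[r], A[c])]
    beta = [A[i][p] / A[i][i] for i in range(p)]
    fitted = [sum(b * x for b, x in zip(beta, row)) for row in X]
    ybar = sum(y) / n
    return sum((fv - ybar) ** 2 for fv in fitted)

def dummies(labels):
    """Reference coding: one 0/1 column per level, dropping the first level."""
    levels = sorted(set(labels))
    return [[1.0 if lab == lev else 0.0 for lab in labels] for lev in levels[1:]]

def type_i_and_iii(y, factors, order):
    """Map each term to (Type I SS, Type III SS) for an additive model with terms in `order`."""
    cols = {name: dummies(labels) for name, labels in factors.items()}
    full = model_ss(y, [c for name in order for c in cols[name]])
    table, previous = {}, 0.0
    for k, name in enumerate(order):
        # Type I: SS this term adds given only the terms entered before it.
        cumulative = model_ss(y, [c for t in order[: k + 1] for c in cols[t]])
        type_i, previous = cumulative - previous, cumulative
        # Type III (no interactions): SS this term adds when entered after all others.
        others = model_ss(y, [c for t in order if t != name for c in cols[t]])
        table[name] = (type_i, full - others)
    return table

# Hypothetical unbalanced data: treatment C is missing from block 3.
block = [1, 1, 1, 2, 2, 2, 3, 3]
trt = ["A", "B", "C", "A", "B", "C", "A", "B"]
y = [10, 12, 15, 14, 15, 19, 20, 22]
for order in (["Block", "Trt"], ["Trt", "Block"]):
    print(order, type_i_and_iii(y, {"Block": block, "Trt": trt}, order))
```

With this made-up data the Type I and Type III SS for Block differ, because the missing cell makes Block and Trt partly confounded. With a complete 3 × 3 layout the two columns coincide.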

In a BIBD (balanced incomplete block design), the blocks are incomplete, so the design is not orthogonal and some variation may be explained by either factor. This can be pictured with a Venn diagram:

Notation for the Hicks example
There are only two factors, Block and Trt, so there are only three simple additive models one could fit. In SAS syntax they are:
Model 1: Model Y=Block;
Model 2: Model Y=Trt;
Model 3: Model Y=Block Trt;

Adjusted SS notation
Each model has its own Model Sums of Squares. These are used to derive the Adjusted Sums of Squares.
SS(Block) = Model Sums of Squares for Model 1
SS(Trt) = Model Sums of Squares for Model 2
SS(Block,Trt) = Model Sums of Squares for Model 3

The Sums of Squares for Block and Treatment can be adjusted to remove any possible confounding.
Adjusting the Block Sums of Squares for the effect of Trt:
SS(Block|Trt) = SSModel(Block,Trt) - SSModel(Trt)
Adjusting the Trt Sums of Squares for the effect of Block:
SS(Trt|Block) = SSModel(Block,Trt) - SSModel(Block)

From the Hicks example:
SS(Block) = 100.667
SS(Trt) = 975.333
SS(Block,Trt) = 981.500
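Both adjustments are simple differences of model SS. The following is a minimal sketch using the Hicks values above; the function name `adjusted_ss` is hypothetical.

```python
# Model SS from the three Hicks models (Model 1, Model 2, Model 3).
ss_block = 100.667
ss_trt = 975.333
ss_block_trt = 981.500

def adjusted_ss(ss_both, ss_other):
    """SS(term | other) = SSModel(term, other) - SSModel(other)."""
    return ss_both - ss_other

ss_block_given_trt = adjusted_ss(ss_block_trt, ss_trt)    # 981.500 - 975.333
ss_trt_given_block = adjusted_ss(ss_block_trt, ss_block)  # 981.500 - 100.667
print(f"SS(Block|Trt) = {ss_block_given_trt:.3f}")  # SS(Block|Trt) = 6.167
print(f"SS(Trt|Block) = {ss_trt_given_block:.3f}")  # SS(Trt|Block) = 880.833
```

Adjusting Block for Trt shrinks its SS from 100.667 to about 6.167. This means most of the apparent Block variation is also explainable by Trt.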

For SAS model Y=Block Trt;

| Source | df | Type I SS | Type III SS |
|---|---|---|---|
| Block | 3 | SS(Block) = 100.667 | SS(Block\|Trt) = 981.50 - 975.333 |
| Trt | 3 | SS(Trt\|Block) = 981.50 - 100.667 | SS(Trt\|Block) = 981.50 - 100.667 |

ANOVA, Type I and Type III (Block as the first term in the model)

For SAS model Y=Trt Block;

| Source | df | Type I SS | Type III SS |
|---|---|---|---|
| Trt | 3 | SS(Trt) = 975.333 | SS(Trt\|Block) = 981.50 - 100.667 |
| Block | 3 | SS(Block\|Trt) = 981.50 - 975.333 | SS(Block\|Trt) = 981.50 - 975.333 |
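Both tables can be generated from the same three Hicks model SS values. The sketch below builds the Type I column sequentially and the Type III column by entering each term last, for either term order. The dictionary `MODEL_SS` and the function `anova_columns` are hypothetical names.

```python
# Model SS for the Hicks models, keyed by the set of terms in each model.
MODEL_SS = {
    frozenset(): 0.0,
    frozenset({"Block"}): 100.667,
    frozenset({"Trt"}): 975.333,
    frozenset({"Block", "Trt"}): 981.500,
}

def anova_columns(order):
    """Return rows (term, Type I SS, Type III SS) for `model Y=<terms in order>;`."""
    all_terms = frozenset(order)
    full = MODEL_SS[all_terms]
    rows, entered = [], frozenset()
    for term in order:
        # Type I: what this term adds to the terms already entered.
        type_i = MODEL_SS[entered | {term}] - MODEL_SS[entered]
        # Type III: what this term adds when entered after every other term.
        type_iii = full - MODEL_SS[all_terms - {term}]
        rows.append((term, round(type_i, 3), round(type_iii, 3)))
        entered = entered | {term}
    return rows

for order in (["Block", "Trt"], ["Trt", "Block"]):
    print(order, anova_columns(order))
```

Only the Type I column changes with the order. The Type III column is the same in both tables, which is the order-independence described earlier.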

ANOVA, Type I and Type III (Trt as the first term in the model)

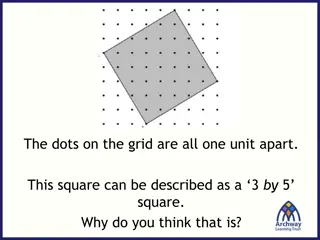

How does variation partition?
[Figure: SS Total Variation divided into regions for Block, Trt, Block or Trt (shared), and Error.]
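SS Total and SS Error are not given in this transcript. This sketch therefore splits only the model SS of the Hicks example into the three model regions of the diagram, using the adjusted SS defined earlier. The variable names are illustrative.

```python
ss_block, ss_trt, ss_block_trt = 100.667, 975.333, 981.500

block_only = ss_block_trt - ss_trt         # SS(Block|Trt): explained by Block alone
trt_only = ss_block_trt - ss_block         # SS(Trt|Block): explained by Trt alone
shared = ss_block + ss_trt - ss_block_trt  # "Block or Trt": explainable by either

# The three regions add back up to the model SS for Y=Block Trt.
assert abs(block_only + trt_only + shared - ss_block_trt) < 1e-9
print(f"{block_only:.3f} {trt_only:.3f} {shared:.3f}")  # 6.167 880.833 94.500
```

A positive shared region, as here, is case I. If SS(Block) + SS(Trt) were smaller than SS(Block,Trt), the "shared" value would be negative and the Venn picture would no longer apply; that is case II.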

How this can work, case I: Hicks example

When does case I happen?
In regression, when two predictor variables are positively correlated (and typically both relate to the response in the same direction), either one can explain the same part of the variation in the response variable. This overlap in their ability to predict is what is adjusted out of their sums of squares.
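Here is a small regression sketch of case I. It uses made-up, noise-free data in which y = x1 + x2 and the two predictors have correlation +0.8. The helpers (`centered`, `dot`, `ss_one`, `ss_two`) are hypothetical names; the two-predictor fit solves the 2 × 2 normal equations on centered data.

```python
def centered(v):
    m = sum(v) / len(v)
    return [x - m for x in v]

def dot(a, b):
    return sum(p * q for p, q in zip(a, b))

def ss_one(y, x):
    """Regression SS of y on one predictor (with intercept)."""
    xc, yc = centered(x), centered(y)
    return dot(xc, yc) ** 2 / dot(xc, xc)

def ss_two(y, x1, x2):
    """Regression SS of y on two predictors (with intercept), via 2x2 normal equations."""
    a, b, yc = centered(x1), centered(x2), centered(y)
    s11, s22, s12 = dot(a, a), dot(b, b), dot(a, b)
    s1y, s2y = dot(a, yc), dot(b, yc)
    det = s11 * s22 - s12 ** 2
    b1 = (s22 * s1y - s12 * s2y) / det
    b2 = (s11 * s2y - s12 * s1y) / det
    return b1 * s1y + b2 * s2y

# Hypothetical data: x1 and x2 positively correlated (r = 0.8), y = x1 + x2.
x1 = [1, 2, 3, 4, 5]
x2 = [1, 3, 2, 5, 4]
y = [p + q for p, q in zip(x1, x2)]

both = ss_two(y, x1, x2)
overlap = ss_one(y, x1) + ss_one(y, x2) - both
print(f"SS(x1)={ss_one(y, x1):.1f} SS(x2)={ss_one(y, x2):.1f} SS(x1,x2)={both:.1f} overlap={overlap:.1f}")
# SS(x1)=32.4 SS(x2)=32.4 SS(x1,x2)=36.0 overlap=28.8
```

Adjusting either predictor for the other leaves only 36.0 - 32.4 = 3.6, because most of what each one explains is shared.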

Example BIBD from Montgomery (things can go the other way)

ANOVA with Adjusted and Unadjusted Sums of Squares

How this can work, case II: Montgomery example

When does case II happen?
Sometimes two predictor variables can predict the response better in combination than the total of what they predict by themselves. In regression, this can occur when the predictor variables are negatively correlated.
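Here is a sketch of case II using the standard two-predictor formula for R² in terms of pairwise correlations. The data are made up and noise-free: y = x1 + x2 with corr(x1, x2) = -0.8. The helper names `corr` and `r2_pair` are hypothetical. Overlap is measured here in R² units rather than SS units.

```python
import math

def corr(a, b):
    """Pearson correlation of two equal-length sequences."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    sab = sum((p - ma) * (q - mb) for p, q in zip(a, b))
    saa = sum((p - ma) ** 2 for p in a)
    sbb = sum((q - mb) ** 2 for q in b)
    return sab / math.sqrt(saa * sbb)

def r2_pair(y, x1, x2):
    """R^2 of y on x1 and x2: (r1^2 + r2^2 - 2*r1*r2*r12) / (1 - r12^2)."""
    r1, r2, r12 = corr(y, x1), corr(y, x2), corr(x1, x2)
    return (r1 ** 2 + r2 ** 2 - 2 * r1 * r2 * r12) / (1 - r12 ** 2)

# Hypothetical data: x1 and x2 negatively correlated (r = -0.8), y = x1 + x2.
x1 = [1, 2, 3, 4, 5]
x2 = [5, 3, 4, 1, 2]
y = [p + q for p, q in zip(x1, x2)]

separate = corr(y, x1) ** 2 + corr(y, x2) ** 2  # sum of one-predictor R^2 values
combined = r2_pair(y, x1, x2)
print(f"separate={separate:.2f} combined={combined:.2f} overlap={separate - combined:.2f}")
# separate=0.20 combined=1.00 overlap=-0.80
```

Either predictor alone explains only 10% of the variation, but together they explain all of it. The "shared" region is therefore negative, and adjusting each predictor for the other increases its SS instead of reducing it.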