Understanding Tail Bounds in Probability for Computing

Tail bounds in probability theory play a crucial role in analyzing random variables and understanding the behavior of certain events. This content explores the concept of tail bounds, their importance through examples, and the derivation of upper bounds on tails. Markov's inequality is also discussed as a fundamental theorem in probability. The running example of flipping a fair coin multiple times is used to illustrate developing progressively better tail bounds.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. Download presentation by click this link. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

E N D

Presentation Transcript

Chapter 18 Tail Bounds "Introduction to Probability for Computing", Harchol-Balter '24 1

Tails Defn: The tail of random variable ? is ? ? > ? . Examples of why we care about tails: Fraction of jobs that queue more than 24 hours Fraction of packets that find the router buffer full Fraction of hash buckets that have more than 10 items Unfortunately, determining the tail of even simple r.v.s is often hard much harder than determining the mean or transform! 2 "Introduction to Probability for Computing", Harchol-Balter '24

Tails Example Q: Suppose you re distributing ? jobs among ? servers at random. What s the probability that a particular server gets ? jobs? ? ????????(?,?) No closed-form known for this ? ? ? ??1 ?? ? ?{? ?} = ?=? 3 "Introduction to Probability for Computing", Harchol-Balter '24

Tails Example Q: Jobs arrive to a datacenter according to a Poisson process with rate ? jobs/hour. What s the probability that ? jobs arrive during the first hour? ? ???????(?) No closed-form known for this ? ? ?? ?{? ?} = ?! ?=? 4 "Introduction to Probability for Computing", Harchol-Balter '24

Tails Bounds Rather than directly compute tails, we will derive upper bounds on the tails, called tail bounds! ? ? ? ?? ? ? ??1 ?? ? ?{? ?} = ?{? ?} = ?! ?=? ?=? We ll soon have tail bounds for both of these! Definition: An upper bound on ?{? ?} is called a tail bound. An upper bound on ?{|? ?| ?} where ? = ? ? is called a concentration bound or concentration inequality. 5 "Introduction to Probability for Computing", Harchol-Balter '24

Running Example We will develop progressively better (tighter) tail bounds. We will test each bound on the following running example: Flip a fair coin ? times: Q:What s a tail bound on the probability of getting at least 3 4? heads? 6 "Introduction to Probability for Computing", Harchol-Balter '24

Markovs inequality Theorem:(Markov s inequality) If r.v. ? is non-negative, with finite mean ? = ?[?], then ? > 0, ? ? ? ? ? Proof: ?[?] = ? ??(?) ? ??? ?=0 ?=? ? ??? ?=? ??? = ? ?{? ?} = ? ?=? 7 "Introduction to Probability for Computing", Harchol-Balter '24

Markovs Inequality on Running Example Flip a fair coin ? times: Q:What s a tail bound on the probability of getting at least 3 4? heads? ? = Number Heads ???????? ?,1 ? = ? ? =? 2 2 Clearly a terrible bound because doesn t involve ? ? 2 ? ? 3 ? =2 4? = 3 4? 3 4? 3 8 "Introduction to Probability for Computing", Harchol-Balter '24

Chebyshevs inequality Theorem:(Chebyshev s inequality) Let ? be any r.v. with finite mean, ?, and finite variance. Then ? > 0, ? |? ?| ? ???(?) ?2 Proof: Q: Can you see how to apply Markov s inequality here? ? ? ? ? = ? ? ?2 ?2 ? ? ?2 ?2 =???(?) ?2 9 "Introduction to Probability for Computing", Harchol-Balter '24

Chebyshevs Bound on Running Example Flip a fair coin ? times: Q:What s a tail bound on the probability of getting at least 3 4? heads? At least decreases with ? ? = ? ? =? ???(?) =? ? = Number Heads ???????? ?,1 2 4 2 ? 4 ? 4 2 =2 1 2 ???(?) = 1 =1 ? ? 2 ? ? ? 3 4? =? ? ? 2 ? 2 2? 2 ? 4 4 4 ? Why? 10 "Introduction to Probability for Computing", Harchol-Balter '24

Chernoff Bound In deriving the Chebyshev bound, we squared the r.v. and then applied Markov. In deriving the Chernoff bound, we exponentiate the r.v. and then apply Markov. ? > 0: Why are we allowed to apply Markov to this? ? ? ? = ?{?? ??} = ?{??? ???} ?[???] ??? But because this bound holds ?, it also holds for the minimizing ?. 11 "Introduction to Probability for Computing", Harchol-Balter '24

Chernoff Bound In deriving the Chebyshev bound, we squared the r.v. and then applied Markov. In deriving the Chernoff bound, we exponentiate the r.v. and then apply Markov. ? > 0: ? ? ? = ?{?? ??} = ?{??? ???} ?[???] ??? Theorem 18.3: (Chernoff bound) Let ? be any r.v. and ? be a constant. Then ?[???] ??? ? ? ? min ?>0 12 "Introduction to Probability for Computing", Harchol-Balter '24

Chernoff Bound In deriving the Chebyshev bound, we squared the r.v. and then applied Markov. In deriving the Chernoff bound, we exponentiate the r.v. and then apply Markov. ? > 0: Q: Why do we expect the Chernoff bound to be stronger than the others? ? ? ? = ?{?? ??} = ?{??? ???} ?[???] ??? Theorem: (Chernoff bound) Let ? be any r.v. and ? be a constant. Then ?[???] ??? A: Looks a lot like an onion! ? ? ? min ?>0 13 "Introduction to Probability for Computing", Harchol-Balter '24

Chernoff Bound on c.d.f. Q: What do we do if we want to upper bound ? ? ? ? Consider ? < 0 ? < 0: ? ? ? = ?{?? ??} = ?{??? ???} ?[???] ??? Theorem: (Chernoff bound on c.d.f.) Let ? be any r.v. and ? be a constant. Then ?[???] ??? ? ? ? min ?<0 14 "Introduction to Probability for Computing", Harchol-Balter '24

Chernoff Bound for Poisson Tail Goal: Bound tail of ? ???????(?) Step 1: Derive ? ???where ? > 0 Step 2: Let ? > ?. Bound ? ? ? ?[???] ??? ??? ? ? ?? ? ? ? min ?[???] = ?>0 ?! ?=0 ?? ?? 1 ??? ??? ? ?! = min ?>0 = ? ? Suffices to minimize exponent! ?=0 = ? ? ???? ?>0?? ?? 1 ?? = min = ?? ?? 1 15 "Introduction to Probability for Computing", Harchol-Balter '24

Chernoff Bound for Poisson Tail Goal: Bound tail of ? ???????(?) ? ? Step 2: Let ? > ?. Bound ? ? ? Exponent is minimized at ? = ?? Thus: ?[???] ??? ? ? ? min ?>0 ? ? ? ? ? ?? ?? 1 ??,at ? = ?? = ??? ? 1 ???? ?? ?? 1 ??? ? = min ?>0 ? ? ? = ?? ? Suffices to minimize exponent! ?>0?? ?? 1 ?? = min 16 "Introduction to Probability for Computing", Harchol-Balter '24

Chernoff Bound for Binomial Theorem 18.4: (Pretty Chernoff Bound for Binomial) Let ? ????????(?,?) where ? = ? ? = ??. Then, for any ? > 0, ? ? ?? ? ? 2?2/? ? ? ?? ? ? 2?2/? We will prove this soon, but let s try applying it first! Bound is strongest when ? = ? Try to use it in this regime. 17 "Introduction to Probability for Computing", Harchol-Balter '24

Chernoff Bound on Running Example Flip a fair coin ? times: Q:What s a tail bound on the probability of getting at least 3 4? heads? ? = ? ? =? ? = Number Heads ???????? ?,1 2 2 Decreases exponentially fast in ? ? = ? ? 2 1 ? 2? ? ? 3 4? =? ? ? 2 ? 8 4 4 Note ? =? 4= (?) 18 "Introduction to Probability for Computing", Harchol-Balter '24

Comparing the bounds Flip a fair coin ? times: Q:What s a tail bound on the probability of getting at least 3 4? heads? Q: What is the exact answer? ? ? ? ? ? ? ? 3 ? ? 1 2 1 2 ? ? = 2 ? 4? = ?=3 ?=3 4? 4? 19 "Introduction to Probability for Computing", Harchol-Balter '24

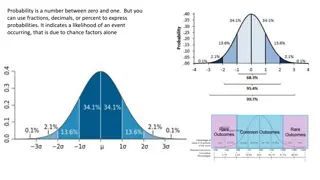

Central Limit Theorem Flip a fair coin ? times: Q:What s a tail bound on the probability of getting at least 3 4? heads? CLT applies because adding i.i.d. r.v.s ? ???????? ?,1 ? ? 3 4? = ? ? ? 2 ? 2 4 Result is approximation not bound ? ? ? 4 ? = ? ? =? 2 2 = ? ? 4 ? 4 ???(?) =? 4 ? 4 ? 4 ? 4 ? ?????? 0,1 = 1 ? ??= 20 "Introduction to Probability for Computing", Harchol-Balter '24

Comparing the approximation and bounds We re not showing Markov because it s too high Even Chebyshev is terrible Chernoff looks okay 21 "Introduction to Probability for Computing", Harchol-Balter '24

Comparing the approximation and bounds Even Chernoff doesn t look so great any more An up close view at higher ? > 70 Chebyshev no longer visible. It s too high 22 "Introduction to Probability for Computing", Harchol-Balter '24

Proof of Thm 18.4 Pretty Chernoff Bound Theorem 18.4:(Pretty Chernoff Bound for Binomial) Let ? ????????(?,?) where ? = ? ? = ??. Then, for any ? > 0, ? ? ?? ? ? 2?2/? ? ? ?? ? ? 2?2/? We will now prove Thm 18.4 (top half). The bottom half is an Exercise in your book. Our proof requires using Lemma 18.5, which is proven in your book. Lemma 18.5: For any ? > 0 and 0 < ? < 1 and ? = 1 ?, we have that: ????+ ?? ?? ??2/8 23 "Introduction to Probability for Computing", Harchol-Balter '24

Proof of Thm 18.4 Pretty Chernoff Bound Theorem 18.4: Let ? ????????(?,?) where ? = ? ? = ??. Then, for any ? > 0, ? ? ?? ? ? 2?2/? Proof: For any ? > 0, ? ? ?? ? = ? ?(? ??) ?? = ? ??(? ??) ??? = ? ??? ??( ?1 ? + ?2 ? + + ?? ? ) ? ? ??(?? ?) ? ??? ??(? ??) = ? ?? We can break this up! ? = ?=1 ?? ?????????(?) ? ?? where ?=1 24 "Introduction to Probability for Computing", Harchol-Balter '24

Proof of Thm 18.4 Pretty Chernoff Bound Theorem 18.4: Let ? ????????(?,?) where ? = ? ? = ??. Then, for any ? > 0, ? ? ?? ? ? 2?2/? So, for any ? > 0, Proof, cont: ? ? ? ?? ? ? ?? ? ??(?? ?) ?=1 ? = ? ?? ? ??(1 ?)+ 1 ? ? ?? ?=1 by Lemma 18.5 Q: What do we do next? ? = ? ??+??2/8 ??2/8 = ? ?? A: Find the minimizing ? ?=1 25 "Introduction to Probability for Computing", Harchol-Balter '24

Proof of Thm 18.4 Pretty Chernoff Bound Theorem 18.4: Let ? ????????(?,?) where ? = ? ? = ??. Then, for any ? > 0, ? ? ?? ? ? 2?2/? Proof, cont: So, for any ? > 0, The exponent is minimized at ? =4? ? ? ?? ? ? ??+??2/8 ? 2 ? ? ?? ? ? 4? ?+?4? /8= ? 2?2/? ? ? 26 "Introduction to Probability for Computing", Harchol-Balter '24

Stronger (?) Chernoff Bound for Binomial Theorem 18.6 presents an alternative, sometime stronger, bound. The bound holds for a more general definition of a Binomial. Theorem 18.6:(Sometimes stronger Chernoff Bound) ? ? Let ? = ?=1 ?? where ?? ????????? ??and ? = ? ? = ?=1 ??. Then, ? > 0, ? ?? ? ? 1 ? ? 1+? 1 + ? 27 "Introduction to Probability for Computing", Harchol-Balter '24

Stronger (?) Chernoff Bound for Binomial Theorem 18.6:(Sometimes stronger Chernoff Bound) ? ? Let ? = ?=1 ?? where ?? ????????? ??and ? = ? ? = ?=1 ??. Then, ? > 0, ? ?? ? ? 1 ? ? < 1+? 1 + ? Two observations: Plot of inner term: ?? 1. ? ? < 1, so bound is exponentially decreasing. ? ? = 1+? 1 + ? 2. Bound in Thm 18.6 is particularly strong when ? is high. 28 "Introduction to Probability for Computing", Harchol-Balter '24

Comparison of Chernoff Bounds Theorem 18.6:(Sometimes stronger bound) Let ? = ?=1 ?? where ?? ????????? ?? and ? = ? ? = ?=1 ??. Then, ? > 0, Theorem 18.4: (Pretty bound) Let ? ????????(?,?) where ? = ? ? = ??. Then, for any ? > 0, ? ? ? ?? ? ? ?? ? ? 2?2/? ? ? 1 + ? ? < 1+? 1 + ? Q: Which gives best bound on probability of getting 3 4? heads, when flipping fair coin ? times? ? ? 3? = ? ? ? ? 4 ? ? 3? = ? ? 1 +1 ? 2 2 4 4 2 This is the better bound! ?/2 ?0.5 1.51.5 ? ? 1.54 ? 8 8 29 "Introduction to Probability for Computing", Harchol-Balter '24

Comparison of Chernoff Bounds Theorem 18.6:(Sometimes stronger bound) Let ? = ?=1 ?? where ?? ????????? ?? and ? = ? ? = ?=1 ??. Then, ? > 0, Theorem 18.4: (Pretty bound) Let ? ????????(?,?) where ? = ? ? = ??. Then, for any ? > 0, ? ? ? ?? ? ? ?? ? ? 2?2/? ? ? 1 + ? ? < 1+? 1 + ? 1 ? ? Q: Which is the better bound on ?{? 21} if ??= ? = ? ? 21 = ? (? 1 + 20 1 ?20 2121 8.3 10 20 ? ? 21 = ? ? 1 20 ? 2 202 ? 800 ? Much better bound! 1 ? 30 "Introduction to Probability for Computing", Harchol-Balter '24

More general bound: Hoeffdings Inequality Theorem 18.7:(Hoeffding s Inequality) Let ?1,?2, ,?? be independent r.v.s, where ?? ?? ??, ?. ? More general because ?? s don t have to be independent Let: ? = ?? ?=1 Then, 2 ?2 ?? ?? ? ? ? ? ? ??? ? 2 ?=1 2 ?2 ?? ?? ? ? ? ? ? ??? ? 2 ?=1 31 "Introduction to Probability for Computing", Harchol-Balter '24