Understanding Standard Deviation and Standard Error of the Means

Standard deviation measures the variability or spread of measurements in a data set, while the standard error of the means quantifies the precision of a mean estimated from replicated experiments. A low standard deviation corresponds to a narrow spread of values around the mean, and a high standard deviation to a wide spread. Ordering data sets by standard deviation shows which sets of measurements vary the most. A 95% confidence interval corresponds to a significance level of 0.05 and gives a range within which the true mean is likely to fall.

STANDARD DEVIATION

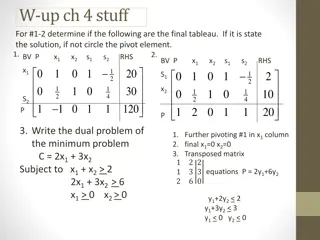

STANDARD DEVIATION (symbol is sigma, σ) measures the VARIABILITY or SPREAD of MEASUREMENTS in a data set from repetition of trials within one experiment.

What is the standard deviation of each data set below? High or Low?

LOW standard deviation: NARROW range of measured data around the mean.

HIGH standard deviation: WIDE range of measured data around the mean.
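The low/high contrast above can be checked numerically. The sketch below uses two made-up data sets (`narrow` and `wide` are hypothetical values, not data from the slides) that share the same mean but differ in spread, and assumes the sample standard deviation (n - 1 in the denominator) is the one intended.

```python
import statistics

# Hypothetical repeated trials from one experiment; both sets have a mean of 10.
narrow = [9.8, 10.1, 10.0, 9.9, 10.2]   # values cluster close to the mean
wide = [4.0, 16.0, 10.0, 7.0, 13.0]     # values are scattered far from the mean

# statistics.stdev computes the sample standard deviation (divides by n - 1).
sd_narrow = statistics.stdev(narrow)
sd_wide = statistics.stdev(wide)

print(f"narrow: mean={statistics.mean(narrow):.2f}, sd={sd_narrow:.3f}")
print(f"wide:   mean={statistics.mean(wide):.2f}, sd={sd_wide:.3f}")
```

The narrow set gives the LOW standard deviation and the wide set the HIGH one, even though the means match.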

Put these in order from the highest standard deviation to lowest standard deviation.

1. red: LARGEST standard deviation (most variability of measurements in a data set)
2. green: INTERMEDIATE standard deviation (moderate variability of measurements in a data set)
3. blue: SMALLEST standard deviation (least variability of measurements in a data set)

Each data set has a different mean (10, 15 and 20 on the axis). Put these in order from the lowest standard deviation to highest standard deviation.

All three (blue, green, red) have the SAME standard deviation because they all have the same data variability (spread). Moving a data set to a different mean does not change its spread.
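A minimal sketch of why the three data sets tie: adding a constant to every measurement moves the mean but leaves every deviation from the mean unchanged. The values in `base` are hypothetical and were chosen only so that the three means come out at 10, 15 and 20.

```python
import statistics

# Hypothetical measurements centred on 10.
base = [8, 9, 10, 11, 12]

# Shift every value by a constant to get data sets centred on 15 and 20.
shifted_15 = [x + 5 for x in base]
shifted_20 = [x + 10 for x in base]

for name, data in [("mean 10", base), ("mean 15", shifted_15), ("mean 20", shifted_20)]:
    print(name, statistics.mean(data), statistics.stdev(data))
```

Each line prints a different mean next to the same standard deviation.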

Put these in order from the lowest standard deviation to highest standard deviation.

1. blue: LOWEST standard deviation (least variability of measurements in a data set)
2. green: low standard deviation (less variability of measurements in a data set)
3. red: high standard deviation (more variability of measurements in a data set)
4. yellow: HIGHEST standard deviation (most variability of measurements in a data set)
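The same ranking exercise can be done by computing each standard deviation and sorting. The colour names match the slide, but the numbers in `data_sets` are invented for illustration; the real curves on the slide are not available in the transcript.

```python
import statistics

# Hypothetical data sets, deliberately listed out of order.
data_sets = {
    "red": [6, 8, 10, 12, 14],
    "blue": [9, 10, 10, 10, 11],
    "yellow": [2, 6, 10, 14, 18],
    "green": [8, 9, 10, 11, 12],
}

# Sort the colour names from lowest to highest sample standard deviation.
ranked = sorted(data_sets, key=lambda name: statistics.stdev(data_sets[name]))

for position, name in enumerate(ranked, start=1):
    print(position, name, round(statistics.stdev(data_sets[name]), 3))
```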

STANDARD ERROR of the MEANS (symbol SEM) measures the PRECISION OF THE MEAN of a data set of means from multiple replications of an experiment (it is the standard deviation of the means of replicated experiments). It shows how much sampling variation there is if you resample and re-estimate.

SEM = σ / √n, so:

a smaller standard deviation (σ) decreases the standard error

a larger sample size (n) decreases the standard error

mean ± 2 SEM: for about 95% of replications, this interval will contain the true mean
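The two ways of shrinking the standard error can be illustrated with a short sketch. It assumes the usual estimate SEM = s / √n, with the sample standard deviation s standing in for σ; the helper name `standard_error` and all data values are hypothetical.

```python
import math
import statistics

def standard_error(values):
    """Estimate the standard error of the mean as s / sqrt(n)."""
    return statistics.stdev(values) / math.sqrt(len(values))

# Hypothetical baseline sample: n = 5, moderate spread.
baseline = [8, 9, 10, 11, 12]

# Same spread pattern, but four times as many measurements (n = 20).
larger_n = baseline * 4

# Same n = 5, but the measurements are more tightly clustered.
smaller_sd = [9, 10, 10, 10, 11]

sem_baseline = standard_error(baseline)
sem_larger_n = standard_error(larger_n)
sem_smaller_sd = standard_error(smaller_sd)

print(f"baseline:   SEM = {sem_baseline:.3f}")
print(f"larger n:   SEM = {sem_larger_n:.3f}")
print(f"smaller sd: SEM = {sem_smaller_sd:.3f}")
```

Both changes produce a smaller SEM than the baseline, which is the sense in which the mean becomes more precise.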

CONFIDENCE INTERVALS

A 95% confidence interval reflects a significance level of 0.05 (p-value < α-value of 0.05).

A confidence interval of 95% means that, if the experiment were replicated many times, about 95% of the intervals built this way would contain the true mean.

Data found out in the tails, beyond the interval, is "like way weird!!" When the value predicted by the null hypothesis lands out there, YOU MUST REJECT THE NULL HYPOTHESIS.
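As a rough sketch of how the interval and the decision rule fit together, the code below builds an approximate 95% interval as mean ± 2 SEM, following the rule of thumb on the SEM slide. The multiplier 2 is an approximation (1.96 for a normal distribution, and a larger t-value would be more appropriate for a sample this small); the function names, the data and the null-hypothesis values are all hypothetical.

```python
import math
import statistics

def approx_ci95(values):
    """Approximate 95% confidence interval for the mean: mean +/- 2 SEM."""
    mean = statistics.mean(values)
    sem = statistics.stdev(values) / math.sqrt(len(values))
    return mean - 2 * sem, mean + 2 * sem

def reject_null(values, null_mean):
    """Reject the null hypothesis if its mean falls outside the interval."""
    low, high = approx_ci95(values)
    return not (low <= null_mean <= high)

# Hypothetical measurements with a sample mean of 10.
sample = [8, 9, 10, 11, 12]
low, high = approx_ci95(sample)
print(f"approximate 95% CI: ({low:.2f}, {high:.2f})")

# A null value inside the interval is not rejected; one in the tails is.
print("null mean 10.5 rejected?", reject_null(sample, 10.5))
print("null mean 13.0 rejected?", reject_null(sample, 13.0))
```

Checking whether the null value sits inside the 95% interval is equivalent in spirit to comparing a p-value against α = 0.05, within the limits of the 2 SEM approximation.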