Testing Residuals for Model Appropriateness in ARMA Modeling

This content discusses the importance of checking residuals for white noise in ARMA models, including methods like sample autocorrelations, Ljung-Box test, and other tests for randomness. It also provides examples of examining residuals in ARMA modeling using simulated seasonal data and airline data, emphasizing the significance of proper model evaluation.


Presentation Transcript

Chapter 9 Model Building
NOTE: Some slides have blank sections. They are based on a teaching style in which the corresponding blanks (derivations, theorem proofs, examples, etc.) are worked out in class on the board or overhead projector.

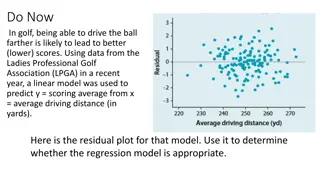

Check for Model Appropriateness
I. Residuals, $\hat a_t$, should be white noise
- the residuals are based on the parameter estimates, i.e., they are calculated after the parameters are estimated (tswge uses backcasting)

Testing Residuals for White Noise
1. Check sample autocorrelations of residuals vs. 95% limit lines.
2. Ljung-Box test (portmanteau test)

portmanteau:
1. a large travelling case made of stiff leather, esp. one hinged at the back so as to open out into two compartments
2. embodying several uses or qualities
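A minimal base-R sketch of check 1. It uses `stats::acf` rather than tswge's `plotts.sample.wge(..., arlimits=TRUE)`, and the "residuals" are simulated white noise standing in for the residuals of a real fit (an assumption made for illustration). Under white noise, sample autocorrelations are approximately N(0, 1/n), so about 95% of them should fall within ±1.96/√n:

```r
set.seed(3)
n <- 200
res <- rnorm(n)                                    # hypothetical stand-in for model residuals
r <- acf(res, lag.max = 24, plot = FALSE)$acf[-1]  # sample autocorrelations at lags 1..24 (lag 0 dropped)
limit <- 1.96 / sqrt(n)                            # approximate 95% limit lines for white noise
outside <- which(abs(r) > limit)                   # lags whose autocorrelation crosses the limits
cat("limit =", round(limit, 4), "; lags outside limits:", outside, "\n")
```

About 5% of the lags (roughly 1 of 24) can be expected to fall outside the lines by chance alone. A few exceedances are therefore not by themselves evidence against white noise.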

2. Ljung-Box test (portmanteau)
- Test the hypotheses
  $H_0: \rho_1 = \rho_2 = \cdots = \rho_K = 0$
  $H_1: \rho_k \neq 0$ for at least one $k$, $1 \le k \le K$
  where $\rho_k$ is the lag-$k$ autocorrelation of $a_t$, and $\hat\rho_k$ is the corresponding sample autocorrelation of the residuals.
- Test statistic: $L = n(n+2)\sum_{k=1}^{K} \frac{\hat\rho_k^2}{n-k}$
- Under $H_0$, $L$ is approximately $\chi^2$ with $K - p - q$ degrees of freedom.
- Reject $H_0$ if $L > \chi^2_{1-\alpha}(K - p - q)$.
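The Ljung-Box statistic can be computed directly from the formula and checked against base R's `Box.test`, whose `fitdf` argument subtracts $p + q$ from the degrees of freedom. This is a sketch: the residuals are simulated white noise, and $p = 2$, $q = 1$ are hypothetical orders of a fitted ARMA model.

```r
set.seed(5)
n <- 200; K <- 24
p <- 2; q <- 1                                        # orders of the (hypothetical) fitted ARMA model
res <- rnorm(n)                                       # stand-in for residuals from that fit
rho <- acf(res, lag.max = K, plot = FALSE)$acf[-1]    # residual sample autocorrelations, lags 1..K
L <- n * (n + 2) * sum(rho^2 / (n - seq_len(K)))      # Ljung-Box statistic
pval <- 1 - pchisq(L, df = K - p - q)                 # approximate chi-square with K - p - q d.f.
bt <- Box.test(res, lag = K, type = "Ljung-Box", fitdf = p + q)
cat("by hand: L =", round(L, 3), " p-value =", round(pval, 4), "\n")
print(bt)
```

The hand computation and `Box.test` agree. A small p-value would indicate that the residuals are not white noise.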

3. Other tests for randomness
- runs test
- etc.
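A sketch of one common runs test: runs above and below the median, using the Wald-Wolfowitz normal approximation. The function `runs_test` is written here for illustration and is not a tswge or base-R function. Values equal to the median are dropped, which is one of several conventions. Too few runs (large negative z) suggests positive autocorrelation or trend. Too many runs suggests negative autocorrelation.

```r
runs_test <- function(x) {
  m <- median(x)
  s <- x[x != m] > m                      # TRUE = above the median; ties with the median dropped
  n1 <- sum(s); n2 <- sum(!s); N <- n1 + n2
  runs <- 1 + sum(s[-1] != s[-N])         # a new run starts whenever the sign changes
  mu <- 2 * n1 * n2 / N + 1               # expected number of runs under randomness
  v <- 2 * n1 * n2 * (2 * n1 * n2 - N) / (N^2 * (N - 1))
  z <- (runs - mu) / sqrt(v)
  list(runs = runs, z = z, p.value = 2 * pnorm(-abs(z)))
}
runs_test(c(1, 3, 1, 3, 1, 3))            # alternating: 6 runs, more than expected
runs_test(1:20)                           # pure trend: only 2 runs
```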

Examining Residuals for Some Examples
Demo: Generate a realization from the ARMA(2,1) model $(1 - 1.2B + .8B^2)X_t = (1 - .9B)a_t$
Example 8.8 - Simulated seasonal data (fig8.8)
Example 8.9 - Airline data (airlog)

tswge demo: $(1 - 1.2B + .8B^2)X_t = (1 - .9B)a_t$, $n = 200$

    d1=gen.arma.wge(n=200,phi=c(1.2,-.8),theta=.9)
    plotts.sample.wge(d1)
    # overfit AR models
    est.ar.wge(d1,p=10,type='burg')   #
    est.ar.wge(d1,p=12,type='burg')   #
    aic.wge(d1,p=0:6,q=0:2)           #
    d1.est=est.arma.wge(d1,p=
    # check residuals
    plotts.sample.wge(d1.est$res,arlimits=TRUE)
    ljung.wge(d1.est$res,p=
    # final model
    mean(d1)
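A rough base-R counterpart of this demo, offered as a sketch rather than a replacement for the tswge workflow. It fits the known ARMA(2,1) orders instead of running the AIC search. One sign-convention assumption matters here. tswge writes the MA side as $(1 - \theta_1 B)$, while `stats::arima.sim` and `stats::arima` write it as $(1 + \theta_1 B)$, so tswge's `theta=.9` corresponds to `ma = -0.9`. The `fitdf` argument of `Box.test` plays the role that the `p=` and `q=` arguments appear to play in `ljung.wge`.

```r
set.seed(42)
# tswge form: (1 - 1.2B + .8B^2) X_t = (1 - .9B) a_t, i.e. phi = c(1.2, -.8), theta = .9
# stats::arima.sim/arima write the MA side as (1 + ma1*B), so theta = .9 becomes ma = -0.9
x <- arima.sim(model = list(ar = c(1.2, -0.8), ma = -0.9), n = 200)
fit <- arima(x, order = c(2, 0, 1))      # ARMA(2,1) with a mean ("intercept") term
print(round(coef(fit), 3))               # estimates should be near ar1 = 1.2, ar2 = -0.8, ma1 = -0.9
res <- residuals(fit)
# fitdf = p + q = 3 reduces the chi-square degrees of freedom to 24 - 3 = 21
lb <- Box.test(res, lag = 24, type = "Ljung-Box", fitdf = 3)
print(lb)
```

Note that `arima` computes residuals from its state-space likelihood fit, not by backcasting as tswge does. Residuals near the start of the series may therefore differ slightly between the two approaches.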

Example 8.7 Simulated Seasonal Data
[Figure panels: Data; Sample Autocorrelations; Residuals; Residual Sample Autocorrelations]

tswge demo

    dd1=gen.aruma.wge(n=200,s=12,phi=c(1.25,-.9))
    dd1=dd1+50
    plotts.sample.wge(dd1)
    # overfit AR models
    ov14=est.ar.wge(dd1,p=14, type='burg')   #
    ov16=est.ar.wge(dd1,p=16, type='burg')   #
    dd1.12=artrans.wge(dd1,phi.tr=c(0,0,0,0,0,0,0,0,0,0,0,1))
    plotts.sample.wge(dd1.12)
    aic.wge(dd1.12,p=0:6,q=0:2)
    # estimate model parameters
    dd1.12.est=est.arma.wge(dd1.12,p=
    # check residuals
    plotts.sample.wge(dd1.12.est$res,arlimits=TRUE)
    ljung.wge(dd1.12.est$res,p=
    # final model
    mean(dd1)
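The transformation `phi.tr=c(0,0,0,0,0,0,0,0,0,0,0,1)` computes $X_t - X_{t-12}$, i.e., applies $(1 - B^{12})$. The base-R sketch below builds a comparable seasonal series by hand and shows that `diff(x, lag = 12)` recovers the stationary AR(2) part exactly. Starting the seasonal recursion from zeros is an assumption of this sketch, not necessarily what `gen.aruma.wge` does.

```r
set.seed(1)
n <- 200
# Stationary part W_t: (1 - 1.25B + .9B^2) W_t = a_t
w <- as.numeric(arima.sim(model = list(ar = c(1.25, -0.9)), n = n))
# Seasonal nonstationarity: (1 - B^12) X_t = W_t, i.e. X_t = X_{t-12} + W_t,
# started from zeros (assumption), then shifted to level 50 as in dd1 + 50
x <- as.numeric(stats::filter(w, filter = c(rep(0, 11), 1), method = "recursive")) + 50
# Apply (1 - B^12): the same operation as phi.tr = c(0,...,0,1) in the demo above
y <- diff(x, lag = 12)
fit <- arima(y, order = c(2, 0, 0))      # fit the stationary AR(2) part
print(round(coef(fit), 3))
print(Box.test(residuals(fit), lag = 24, type = "Ljung-Box", fitdf = 2))
```

Because the level shift of 50 cancels under differencing, the transformed series equals `w[13:n]`.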

Example 8.8 Airline Data
[Figure panels: Data; Sample Autocorrelations; Residuals; Residual Sample Autocorrelations]

tswge demo: log airline data

    data(airlog)
    plotts.sample.wge(airlog)
    # overfit AR models
    ov14=est.ar.wge(airlog,p=14, type='burg')   #
    ov16=est.ar.wge(airlog,p=16, type='burg')
    # transform data
    la.12=artrans.wge(airlog,phi.tr=c(0,0,0,0,0,0,0,0,0,0,0,1))
    plotts.sample.wge(la.12)
    aic.wge(la.12,p=0:13,q=0:2)
    # estimate parameters of stationary part
    la.12.est=est.arma.wge(la.12,p=
    # check residuals
    plotts.sample.wge(la.12.est$res,arlimits=TRUE)
    ljung.wge(la.12.est$res,p=
    # final model
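A base-R sketch of the same steps. It assumes tswge's `airlog` corresponds to `log(AirPassengers)` from R's `datasets` package (the classic monthly airline series). For brevity, it searches only AR orders 0 to 13 by AIC using Burg estimates. The slide's `aic.wge` call also searches q = 0:2, so the selected model may differ.

```r
# Assumption: airlog corresponds to log(AirPassengers) from base R's datasets package
la <- log(AirPassengers)
la.12 <- diff(la, lag = 12)              # (1 - B^12) transformation, as with artrans.wge above
# AR-only order selection by AIC (simplification: no MA terms searched)
fit <- ar(la.12, aic = TRUE, order.max = 13, method = "burg")
cat("AIC-selected AR order:", fit$order, "\n")
res <- na.omit(fit$resid)                # the first fit$order residuals are NA
lb <- Box.test(res, lag = 24, type = "Ljung-Box", fitdf = fit$order)
print(lb)
```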

Another Important Check for Model Appropriateness
Does the model make sense?
- stationarity vs. nonstationarity
- seasonal vs. non-seasonal
- correlation-based vs. signal-plus-noise model
- are characteristics of the fitted model consistent with those of the data?
- do forecasts and spectral estimates make sense?
- do realizations and their characteristics behave like the data?

Stationarity vs. Nonstationarity?
A decision that must be consciously made by the investigator.
Tools:
- overfitting
- unit root tests (Dickey-Fuller test, etc.)
  - designed to test the null hypothesis of a unit root
  - i.e., the decision to include a unit root in the model is based on a decision not to reject a null hypothesis
  - in reality, the models $(1 - B)X_t = a_t$ and $(1 - .97B)X_t = a_t$ will both create realizations for which the null hypothesis is usually not rejected using the Dickey-Fuller test
- an understanding of the physical problem and the properties of the model selected
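To illustrate the last point, here is a hand-rolled Dickey-Fuller regression with a constant. It is a sketch, not a packaged unit-root test. The value −2.86 is the approximate asymptotic 5% critical value for this form of the test. The simulation estimates how often the unit-root null is rejected when data come from $(1 - B)X_t = a_t$ and from $(1 - .97B)X_t = a_t$.

```r
set.seed(123)
# Dickey-Fuller tau statistic from the regression diff(x)_t = c + beta * x_{t-1} + e_t
df_tau <- function(x) {
  dx <- diff(x)
  xlag <- x[-length(x)]
  summary(lm(dx ~ xlag))$coefficients["xlag", "t value"]
}
crit <- -2.86                            # approximate asymptotic 5% critical value (constant, no trend)
reject_rate <- function(phi, n = 200, reps = 500) {
  mean(replicate(reps, {
    x <- if (phi == 1) cumsum(rnorm(n)) else as.numeric(arima.sim(list(ar = phi), n = n))
    df_tau(x) < crit                     # reject the unit-root null when tau is very negative
  }))
}
r_unit <- reject_rate(1)                 # (1 - B) X_t = a_t: null hypothesis true
r_097 <- reject_rate(0.97)               # (1 - .97B) X_t = a_t: null hypothesis false
cat("rejection rate, phi = 1:", r_unit, "; phi = .97:", r_097, "\n")
```

The first rate should be near the nominal 5%. For moderate n, the second rate is typically far from 1, so failing to reject is weak evidence that a unit root belongs in the model.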

Modeling Global Temperature Data
(a) Stationary model:

    data(hadley)
    mean(hadley)
    plotts.sample.wge(hadley)
    aic.wge(hadley,p=0:6,q=0:1)
    # estimate stationary model
    had.est=est.arma.wge(hadley,p=
    # check residuals
    plotts.sample.wge(had.est$res,arlimits=TRUE)
    ljung.wge(had.est$res,p=
    # other realizations from this model
    demo=gen.arma.wge(n=160,phi=had.est$phi,theta=had.est$theta)
    plotts.sample.wge(demo)

(b) Nonstationary model:

    data(hadley)
    plotts.sample.wge(hadley)
    # Overfit AR models
    d8=est.ar.wge(hadley,p=8, type='burg')    #
    d12=est.ar.wge(hadley,p=12, type='burg')
    # Difference the data
    h.dif=artrans.wge(hadley,phi.tr=1)
    plotts.sample.wge(h.dif)                  #
    aic.wge(h.dif,p=0:6,q=0:1)                #
    h.dif.est=est.arma.wge(h.dif,p=
    # Examine residuals
    plotts.sample.wge(h.dif.est$res,arlimits=TRUE)
    ljung.wge(h.dif.est$res,p=
    # other realizations from this model
    demo=gen.aruma.wge(n=160,d=1,phi=h.dif.est$phi,theta=h.dif.est$theta)
    plotts.sample.wge(demo)

Forecasts using Stationary Model

    data(hadley)
    fore.arma.wge(hadley,phi=c(1.27,-.47,.19),theta=.63,n.ahead=25,limits=FALSE)

Forecasts using Nonstationary Model

    data(hadley)
    fore.aruma.wge(hadley,d=1,phi=c(.33,-.18),theta=.7,n.ahead=25,limits=FALSE)
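Beyond the first step (the MA(1) term enters only the one-step forecast), forecasts from these models obey the AR difference equation. The AR polynomial therefore decides where the forecasts eventually go. The sketch below runs only that recursion for the two AR operators above, using the tswge sign convention $\phi(B) = 1 - \phi_1 B - \cdots$. It starts from three made-up deviations from the sample mean, so the numbers are illustrative only. `eventual_forecasts` is a helper written for this sketch.

```r
# AR polynomials as c(1, -phi1, -phi2, -phi3)
ar_stat    <- c(1, -1.27, 0.47, -0.19)   # stationary model: phi = c(1.27, -.47, .19)
ar_nonstat <- c(1, -1.33, 0.51, -0.18)   # (1 - B)(1 - .33B + .18B^2), expanded
eventual_forecasts <- function(ar_poly, start_dev, h) {
  phi <- -ar_poly[-1]
  k <- length(phi)
  path <- start_dev                      # last k deviations from the mean, oldest first
  for (l in seq_len(h)) path <- c(path, sum(phi * rev(tail(path, k))))
  tail(path, h)
}
start <- c(0.2, 0.3, 0.4)                # hypothetical last three deviations
fs <- eventual_forecasts(ar_stat, start, 2000)
fn <- eventual_forecasts(ar_nonstat, start, 2000)
cat("stationary, step 2000:", signif(tail(fs, 1), 3),
    "; nonstationary, step 2000:", round(tail(fn, 1), 3), "\n")
```

The stationary recursion decays (slowly) back to 0, i.e., to the mean. The recursion with the $(1 - B)$ factor levels off at a nonzero value near the last observation instead.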

Notes:
- the two models are quite similar but produce very different forecasts
- it is important to understand the properties of the selected model
  - the selection of a stationary model will automatically produce forecasts that eventually tend toward the mean of the observed data (i.e., it was the decision to use the stationary model that produced these results)
- beware of results by investigators who choose a model in order to produce desired results
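One way to see why the two hadley models are "quite similar" is to expand the nonstationary AR operator and compare it with the stationary one. The sketch below uses plain base R; `poly_mult` is a helper written for this illustration. It gives $1 - 1.33B + .51B^2 - .18B^3$ versus $1 - 1.27B + .47B^2 - .19B^3$. It also shows that the stationary polynomial has a real root only slightly larger than 1 (about 1.01), i.e., a factor close to $(1 - B)$.

```r
poly_mult <- function(a, b) {            # multiply polynomials given as coefficient vectors in B
  out <- numeric(length(a) + length(b) - 1)
  for (i in seq_along(a)) for (j in seq_along(b))
    out[i + j - 1] <- out[i + j - 1] + a[i] * b[j]
  out
}
ar_stat <- c(1, -1.27, 0.47, -0.19)                    # 1 - 1.27B + .47B^2 - .19B^3
ar_nonstat <- poly_mult(c(1, -1), c(1, -0.33, 0.18))   # (1 - B)(1 - .33B + .18B^2)
print(rbind(stationary = ar_stat, nonstationary = ar_nonstat))
# Smallest root modulus: exactly 1 for the ARUMA model, just above 1 for the ARMA model
print(c(stationary = min(Mod(polyroot(ar_stat))),
        nonstationary = min(Mod(polyroot(ar_nonstat)))))
```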

Deterministic Signal-plus-Noise Models
Example Signals: …, C constant

Recall: sometimes it's not easy to tell whether a deterministic signal is present in the data.
Is there a deterministic signal?

Realizations: is there a deterministic signal?
Global Temperature Data

Realizations from the stationary model fit to the temperature data

Question: Should the observed increasing temperature trend be predicted to continue?
- based on standard ARMA/ARUMA fitting, the answer is No
Another Possible Model
- deterministic signal-plus-noise model
- nonstationary due to a non-constant mean

Common Strategy for Assessing which Model is Appropriate
Consider $X_t = a + bt + Z_t$ and assume $Z_t$ is AR(p) with zero mean
- note that this is different from usual regression since the noise is correlated
- in the presence of noise with positive autocorrelations, testing $H_0: b = 0$ using usual regression methods (an incorrect procedure) results in inflated observed significance levels
- testing $H_0: b = 0$ is a difficult problem
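A small simulation sketch of the inflation described above. Data are generated with $b = 0$ and AR(1) noise, and the ordinary least-squares t-test for the slope (which assumes independent errors) is applied. The settings n = 100, $\phi_1 = .9$ and 500 replicates are arbitrary choices for illustration.

```r
set.seed(2024)
# One replicate: X_t = a + b t + Z_t with b = 0 and (1 - phi B) Z_t = a_t
ols_rejects <- function(n, phi, alpha = 0.05) {
  z <- if (phi == 0) rnorm(n) else as.numeric(arima.sim(list(ar = phi), n = n))
  tt <- seq_len(n)
  pval <- summary(lm(z ~ tt))$coefficients["tt", "Pr(>|t|)"]
  pval < alpha                           # usual OLS t-test, valid only for independent errors
}
rate_white <- mean(replicate(500, ols_rejects(100, phi = 0)))
rate_ar    <- mean(replicate(500, ols_rejects(100, phi = 0.9)))
cat("observed significance level, phi = 0:", rate_white, "; phi = .9:", rate_ar, "\n")
```

With white noise, the observed level stays near 5%. With strongly positively correlated noise, the naive test rejects a true null far more often.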

Important Points:
- realizations from AR (ARMA/ARUMA) models have random trends

Question: Is there a deterministic trend in the data (one that should be predicted to continue)?
Technique: Consider the model $X_t = S_t + Z_t$, where $S_t = a + bt$ and $Z_t$ is a zero-mean AR process.
Test: $H_0: b = 0$ vs. $H_a: b \neq 0$
- If we conclude $b = 0$, fit an AR model; the trend is not predicted to continue.
- If we conclude $b \neq 0$, the trend is predicted to continue.

Cochrane-Orcutt Test
Test $H_0: b = 0$ using the test statistic
$t_{co} = \frac{\hat b_{co}}{SE(\hat b_{co})}$
where $\hat b_{co}$ is the least squares estimate of the slope in (9.14) and $SE(\hat b_{co})$ is its usual standard error.
Note: Woodward and Gray (1993), Journal of Climate, showed:
- when the Cochrane-Orcutt method is used to remove the correlated errors, the resulting test still has inflated observed significance levels
- the same is true with ML methods
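A minimal one-pass Cochrane-Orcutt sketch. It assumes AR(1) errors as a simplification: the text allows AR(p) noise, the classical procedure can be iterated, and equation (9.14) is not reproduced here. The steps are:
1. Fit ordinary least squares.
2. Estimate the lag-1 AR coefficient of the residuals.
3. Apply $(1 - \hat\phi B)$ to both $X_t$ and $t$, then refit; the slope of the refit is $\hat b_{co}$.

```r
# One-pass Cochrane-Orcutt with AR(1) errors (simplification of the AR(p) case)
cochrane_orcutt_t <- function(x) {
  n <- length(x)
  tt <- seq_len(n)
  r <- residuals(lm(x ~ tt))                    # OLS residuals from X_t = a + b t + error
  phi <- sum(r[-1] * r[-n]) / sum(r[-n]^2)      # lag-1 AR coefficient of the residuals
  y_star <- x[-1] - phi * x[-n]                 # (1 - phi B) X_t
  t_star <- tt[-1] - phi * tt[-n]               # (1 - phi B) t
  fit <- lm(y_star ~ t_star)                    # slope estimates b; intercept estimates a(1 - phi)
  cf <- summary(fit)$coefficients["t_star", ]
  c(b_co = unname(cf["Estimate"]), se = unname(cf["Std. Error"]), t_co = unname(cf["t value"]))
}
set.seed(9)
n <- 100
z <- as.numeric(arima.sim(list(ar = 0.5), n = n))
print(cochrane_orcutt_t(2 + 0.5 * seq_len(n) + z))   # clear trend: large t_co
print(cochrane_orcutt_t(z))                          # no trend
```

As the note above warns, comparing $t_{co}$ to the usual t critical values still tends to over-reject when the noise is strongly autocorrelated.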

Simulation Results
- $b = 0$ (i.e., the null hypothesis of zero slope is true)
- 1000 replicates generated from each model (AR(1) noise with parameter $\phi_1$)
- hypothesis of zero slope tested at $\alpha = .05$ for each realization using Cochrane-Orcutt

Observed Significance Levels (%) for Tests of $H_0: b = 0$ (nominal level = 5%)

| n | $\phi_1 = .8$ | $\phi_1 = .9$ | $\phi_1 = .95$ |
|---|---|---|---|
| 50 | 18.4 | 27.2 | 37.2 |
| 100 | 16.0 | 20.0 | 28.4 |
| 250 | 8.0 | 12.4 | 17.6 |
| 1000 | 4.8 | 8.4 | 9.6 |

Bootstrap Method
Woodward, Bottone, and Gray (1997), Journal of Agricultural, Biological, and Environmental Statistics (JABES), 403-416.
Given a time series realization that may have a deterministic trend, to test $H_0: b = 0$:
1. Based on the observed data, calculate a test statistic Q that is a test for trend (e.g., the Cochrane-Orcutt statistic) for which large Q suggests a trend.
2. Fit a stationary AR model (with constant mean) to the observed time series data and generate K realizations from this model.
3. For each realization in (2), calculate the test statistic in (1).
4. Reject $H_0: b = 0$ if Q in (1) exceeds the 95th percentile of the bootstrap-based test statistics in (3).
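A sketch of steps 1 to 4 in base R. To keep it short, Q is the absolute OLS slope t-statistic rather than the Cochrane-Orcutt statistic; the procedure accepts any trend statistic for which large Q suggests a trend. The AR model is fit by Yule-Walker with AIC order selection up to 5, which are choices made for this illustration. `bootstrap_trend_test` and `slope_stat` are hypothetical helper names.

```r
slope_stat <- function(x) {                        # Q: |t| for the OLS slope (large Q suggests a trend)
  tt <- seq_along(x)
  abs(summary(lm(x ~ tt))$coefficients["tt", "t value"])
}
bootstrap_trend_test <- function(x, K = 200, order.max = 5) {
  q_obs <- slope_stat(x)                                          # step 1
  fit <- ar(x, aic = TRUE, order.max = order.max, method = "yw")  # step 2: stationary AR, constant mean
  q_boot <- replicate(K, {
    z <- arima.sim(model = list(ar = fit$ar), n = length(x),
                   sd = sqrt(fit$var.pred)) + fit$x.mean          # realization with no trend
    slope_stat(as.numeric(z))                                     # step 3
  })
  crit <- unname(quantile(q_boot, 0.95))
  list(Q = q_obs, crit = crit, reject = q_obs > crit)             # step 4
}
set.seed(17)
n <- 100
res_trend <- bootstrap_trend_test(seq_len(n) + rnorm(n))                      # strong linear trend
res_flat  <- bootstrap_trend_test(as.numeric(arima.sim(list(ar = 0.9), n = n)))  # no trend
str(res_trend); str(res_flat)
```

Because the null distribution of Q is simulated from a fitted stationary AR model, the critical value adapts to the strength of the autocorrelation. This is what keeps the bootstrap levels in the next table close to 5%.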

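Simulation Results
$X_t = a + bt + Z_t$, where $(1 - \phi_1 B)Z_t = a_t$
- $b = 0$ (i.e., the null hypothesis of zero slope is true)
- 1000 replicates generated from each model

Observed Significance Levels (%) for Tests of $H_0: b = 0$

| n | Cochrane-Orcutt, $\phi_1 = .8$ | Cochrane-Orcutt, $\phi_1 = .9$ | Cochrane-Orcutt, $\phi_1 = .95$ | Bootstrap, $\phi_1 = .8$ | Bootstrap, $\phi_1 = .9$ | Bootstrap, $\phi_1 = .95$ |
|---|---|---|---|---|---|---|
| 50 | 18.4 | 27.2 | 37.2 | 5.9 | 8.1 | 10.0 |
| 100 | 16.0 | 20.0 | 28.4 | 6.4 | 5.3 | 7.6 |
| 250 | 8.0 | 12.4 | 17.6 | 3.8 | 5.1 | 6.4 |
| 1000 | 4.8 | 8.4 | 9.6 | 4.1 | 3.8 | 6.1 |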

Comments:
1. If a significant slope is detected, then forecasts from the model $X_t = a + bt + Z_t$ will forecast the existing trend to continue
   - such a model should be used with caution if more than short-term forecasts are needed
2. Jon Sanders (2009) developed bootstrap-based procedures to test for a significant
   - monotonic trend
   - nonparametric trend

Checking Realization Characteristics
Do realizations generated from the model have characteristics consistent with those of the actual data?
- similar realizations?
- similar sample autocorrelations?
- similar spectral densities (nonparametric)?
- ...

Note: The data set ss08 contains sunspot numbers that include the years 1925-2008, which are not included in the sunspot data set sunspot.classic.
We consider 2 models:
- AR(2)
- AR(9)

Comparing Realizations
[Figure panels: Sunspot Data; Realizations from the AR(2) Model; Realizations from the AR(9) Model]

Comparing Sample Autocorrelations
[Figure 9.10 panels: Sunspot Data; AR(2) Model; AR(9) Model]

Comparing Nonparametric Spectral Densities
[Figure 9.11 panels: Sunspot Data; AR(2) Model; AR(9) Model]
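The AR(2) vs. AR(9) comparison can be made numeric with a sketch like the following. It uses base R's yearly `sunspot.year` series (1700-1988) as a stand-in, since tswge's ss08 is not in base R, and Burg estimates of each model. It generates realizations from each fitted model and averages the squared distance between their sample autocorrelations and those of the data. This distance measure is a choice made for illustration, not the procedure behind Figures 9.10 and 9.11.

```r
set.seed(11)
x <- as.numeric(sunspot.year)            # base R yearly sunspot numbers, 1700-1988 (not ss08)
acf_vec <- function(z, lag.max = 25) acf(z, lag.max = lag.max, plot = FALSE)$acf[-1]
target <- acf_vec(x)                     # sample autocorrelations of the data, lags 1..25
# Mean squared distance between the data's sample ACF and the sample ACFs of
# K realizations generated from an AR(order) model fit by Burg's method
acf_distance <- function(order, K = 100) {
  fit <- ar(x, aic = FALSE, order.max = order, method = "burg")
  d <- replicate(K, {
    z <- arima.sim(model = list(ar = fit$ar), n = length(x), sd = sqrt(fit$var.pred))
    sum((acf_vec(z) - target)^2)
  })
  mean(d)
}
dist <- c(AR2 = acf_distance(2), AR9 = acf_distance(9))
print(round(dist, 3))                    # smaller = realizations' ACFs closer to the data's
```

The same loop could compare smoothed periodograms instead of autocorrelations to mirror the spectral-density check.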

Time Series Model Checking using Parametric Bootstraps
Tsay, Applied Statistics (1992)
- generates multiple realizations from the fitted model
- checks whether the actual data are consistent with the realizations from the fitted model
Woodward and Gray, Journal of Climate (1995)
Given a time series realization:
- generate bootstrap realizations from each of two candidate models
- use discriminant analysis to ascertain which model generates realizations that best match the characteristics of the observed realization

Comprehensive Analysis of Time Series Data Involves:
I. Examination
 -- of data
 -- of sample autocorrelations
II. Obtaining a model
 -- stationary or nonstationary
 -- correlation-based or signal-plus-noise
 -- identifying p and q
 -- estimating coefficients
III. Checking for model appropriateness
IV. Obtaining forecasts, spectral estimates, etc., as dictated by the situation

Important Note: The previous discussion focused only on deciding among
- ARMA(p,q)
- ARUMA(p,d,q)
- signal-plus-noise
There are MANY other models and tools available to the time series analyst, among which are:
- long memory models (Ch. 11)
- multivariate and state-space models (Ch. 10)
- ARCH/GARCH models (Ch. 4)
- wavelet analysis (Ch. 12)
- models for data with time-varying frequencies (TVF) (Ch. 13)