Support Vector Machine: Introduction and History

Discover the fascinating history and evolution of Support Vector Machines, tracing back to key developments in statistical learning theory. Explore the foundational concepts behind SVMs and their significance in the realm of machine learning.

Presentation Transcript

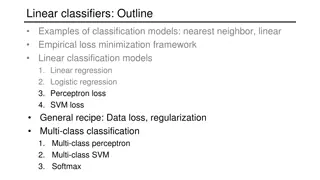

Introduction: History of Support Vector Machine

SVMs can be said to have originated when Vapnik (1979, in Russian) further developed statistical learning theory. Several statistical mechanics papers (for example, Anlauf and Biehl (1989)) suggested using large-margin hyperplanes in the input space. Poggio and Girosi (1990) and Wahba (1990) discussed the use of kernels. Bennett and Mangasarian (1992) improved upon Smith's 1968 work on slack variables. SVMs close to their current form were first introduced in a paper at the COLT 1992 conference (Boser, Guyon and Vapnik 1992). The papers by Bartlett (1998) and Shawe-Taylor et al. (1998) gave the first rigorous statistical bounds on the generalisation of hard-margin SVMs.

Categories: Linear SVM, Non-Linear SVM

Linear, Noiseless: Several different hyperplanes are possible solutions. Goal: find a linear hyperplane (decision boundary) that separates the data.

Linear, Noiseless: 1) Which one is better, B1 or B2? 2) How do you define "better"?

Linear, Noiseless: Goal: find the hyperplane (decision boundary) that maximizes the margin to the data. By this criterion, B1 is better than B2.

Linear, Noiseless: Parameters and support vectors.
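To make the idea of parameters, margin, and support vectors concrete, here is a minimal sketch using scikit-learn. The toy data, the very large `C` (used to approximate the noiseless, hard-margin case), and the variable names are assumptions made for illustration; they are not taken from the slides.

```python
import numpy as np
from sklearn.svm import SVC

# Toy, linearly separable data (made up for illustration).
X = np.array([[0.0, 0.0], [1.0, 1.0], [3.0, 3.0], [4.0, 4.0]])
y = np.array([-1, -1, 1, 1])

# A very large C approximates the hard-margin (noiseless) case.
clf = SVC(kernel="linear", C=1e6)
clf.fit(X, y)

w = clf.coef_[0]                     # normal vector of the hyperplane w.x + b = 0
b = clf.intercept_[0]                # offset of the hyperplane
margin = 2.0 / np.linalg.norm(w)     # distance between the two margin hyperplanes

print("w =", w, "b =", b)
print("margin =", margin)
print("support vectors:")
print(clf.support_vectors_)
```

For this data the closest points of the two classes are (1, 1) and (3, 3), so the margin should come out close to the distance between them, about 2.83.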

Linear, Noiseless (continued) [equations not recoverable from the slide]
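The equations on this slide did not survive extraction. As a sketch under the usual textbook assumptions (training pairs $(\mathbf{x}_i, y_i)$ with $y_i \in \{-1, +1\}$ and a hyperplane $\mathbf{w}^{\top}\mathbf{x} + b = 0$; this notation is assumed, not taken from the slide), the maximum-margin problem for the linear, noiseless case is commonly written as:

```latex
\begin{align}
\text{margin} &= \frac{2}{\lVert \mathbf{w} \rVert} \\
\min_{\mathbf{w},\, b} \quad & \tfrac{1}{2}\lVert \mathbf{w} \rVert^{2} \\
\text{subject to} \quad & y_i\left(\mathbf{w}^{\top}\mathbf{x}_i + b\right) \ge 1,
\quad i = 1, \dots, n
\end{align}
```

Maximizing the margin $2 / \lVert \mathbf{w} \rVert$ is equivalent to minimizing $\tfrac{1}{2}\lVert \mathbf{w} \rVert^{2}$, which is why the problem is usually stated in the second form.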

Training linear SVM (1): Lagrange multipliers [equations not recoverable from the slide]

Training linear SVM (2): Support vectors, the margin, and the parameters w and b [equations not recoverable from the slide]
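The training slides mention multipliers, support vectors, and the parameters w and b, but their formulas are lost. The following is the standard textbook derivation, offered as a sketch in assumed notation (multipliers $\alpha_i \ge 0$, labels $y_i \in \{-1, +1\}$); it may differ from what the slides showed.

```latex
\begin{align}
L(\mathbf{w}, b, \boldsymbol{\alpha})
  &= \tfrac{1}{2}\lVert \mathbf{w} \rVert^{2}
   - \sum_{i=1}^{n} \alpha_i \left[ y_i\left(\mathbf{w}^{\top}\mathbf{x}_i + b\right) - 1 \right],
   \qquad \alpha_i \ge 0 \\
\frac{\partial L}{\partial \mathbf{w}} = 0
  &\;\Rightarrow\; \mathbf{w} = \sum_{i=1}^{n} \alpha_i y_i \mathbf{x}_i,
\qquad
\frac{\partial L}{\partial b} = 0
  \;\Rightarrow\; \sum_{i=1}^{n} \alpha_i y_i = 0 \\
\max_{\boldsymbol{\alpha}} \quad
  & \sum_{i=1}^{n} \alpha_i
   - \tfrac{1}{2} \sum_{i=1}^{n} \sum_{j=1}^{n}
     \alpha_i \alpha_j y_i y_j \, \mathbf{x}_i^{\top}\mathbf{x}_j
\quad \text{s.t. } \alpha_i \ge 0,\ \sum_{i=1}^{n} \alpha_i y_i = 0 \\
\alpha_i \left[ y_i\left(\mathbf{w}^{\top}\mathbf{x}_i + b\right) - 1 \right] &= 0
\quad \text{for all } i \\
b &= y_k - \mathbf{w}^{\top}\mathbf{x}_k
\quad \text{for any support vector } \mathbf{x}_k
\end{align}
```

The fourth line (complementary slackness) implies that $\alpha_i = 0$ for every point that does not lie on the margin, so only the support vectors contribute to $\mathbf{w}$ and $b$.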

Linear with noise: Cost function with slack variables.
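The slide's cost function is not preserved. A minimal sketch of the commonly used soft-margin cost, assuming the form 0.5 * ||w||^2 + C * sum(slack) with slack_i = max(0, 1 - y_i (w.x_i + b)), could look as follows. The function name `soft_margin_cost`, the data, and the values of `w`, `b`, and `C` are hypothetical and chosen only for illustration.

```python
import numpy as np

def soft_margin_cost(w, b, X, y, C):
    """Return the soft-margin cost and the per-sample slack values."""
    functional_margins = y * (X @ w + b)
    # Slack is zero for points on or outside the margin, positive otherwise.
    slack = np.maximum(0.0, 1.0 - functional_margins)
    cost = 0.5 * np.dot(w, w) + C * slack.sum()
    return cost, slack

# Four cleanly separated points plus one noisy point on the wrong side.
X = np.array([[0.0, 0.0], [1.0, 1.0], [3.0, 3.0], [4.0, 4.0], [2.5, 2.5]])
y = np.array([-1, -1, 1, 1, -1])

w = np.array([0.5, 0.5])   # assumed hyperplane parameters
b = -2.0

cost, slack = soft_margin_cost(w, b, X, y, C=1.0)
print("slack =", slack)    # only the noisy point has non-zero slack
print("cost =", cost)
```

A larger `C` penalizes slack more heavily and pushes the solution toward the noiseless case, while a smaller `C` tolerates more margin violations.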

Non-linear SVM (1): What if the decision boundary is not linear?

Non-linear SVM (2): Data that is not linearly separable in the original space can become linearly separable after a mapping.

Non-linear SVM (3): Mapping function and the kernel trick [equations not recoverable from the slide]

Non-linear SVM (4): Polynomial kernel function.
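As an illustration of the kernel trick with a polynomial kernel, the sketch below checks numerically that a degree-2 kernel K(x, z) = (x.z)^2 on 2-D inputs equals an inner product in an explicitly mapped 3-D space. The specific kernel, the mapping `phi`, and the sample vectors are assumptions chosen for the example; the slide's own formula is not preserved.

```python
import numpy as np

def phi(x):
    """Explicit feature map for the degree-2 homogeneous polynomial kernel in 2-D."""
    x1, x2 = x
    return np.array([x1 ** 2, np.sqrt(2.0) * x1 * x2, x2 ** 2])

def poly_kernel(x, z):
    """Same inner product, computed directly in the input space."""
    return np.dot(x, z) ** 2

x = np.array([1.0, 2.0])
z = np.array([3.0, 4.0])

explicit = np.dot(phi(x), phi(z))   # map first, then take the inner product
implicit = poly_kernel(x, z)        # kernel trick: never build phi(x)

print(explicit, implicit)
```

Both values agree (121, up to floating-point rounding), which is the point of the kernel trick: the SVM only needs inner products, so the mapping never has to be computed explicitly.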

Non-linear SVM (5)

Kernel function: Three kernel functions: polynomial, Gaussian, and sigmoid [formulas not recoverable from the slide]
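The formulas for the three kernels are missing from the transcript. A minimal sketch of their commonly used forms is given below; the parameter names `gamma`, `coef0`, and `degree` follow scikit-learn's naming and are an assumption, as the slide's exact parameterization is unknown.

```python
import numpy as np

def polynomial_kernel(x, z, gamma=1.0, coef0=1.0, degree=3):
    """(gamma * x.z + coef0) ** degree"""
    return (gamma * np.dot(x, z) + coef0) ** degree

def gaussian_kernel(x, z, gamma=1.0):
    """exp(-gamma * ||x - z||^2), also called the RBF kernel."""
    diff = x - z
    return np.exp(-gamma * np.dot(diff, diff))

def sigmoid_kernel(x, z, gamma=1.0, coef0=0.0):
    """tanh(gamma * x.z + coef0)"""
    return np.tanh(gamma * np.dot(x, z) + coef0)

x = np.array([1.0, 0.0])
z = np.array([0.0, 1.0])

print("polynomial:", polynomial_kernel(x, z))
print("gaussian:  ", gaussian_kernel(x, z))
print("sigmoid:   ", sigmoid_kernel(x, z))
```

The Gaussian kernel depends only on the distance between the two points, whereas the polynomial and sigmoid kernels depend on their inner product.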

Conclusions

SVM: global optimization (cf. local optimization of MLP).

SVM: structural risk minimization (cf. empirical risk minimization of MLP).

SVM considerations: noise, cost function, time complexity.

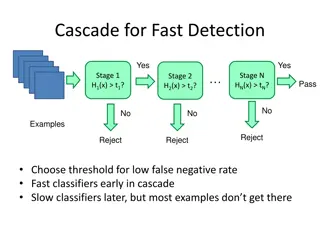

SVM Exercise: Tutorial exercise for using different SVM kernels.

Data (n_sample): 100 samples, 2 classes (1, 2). Training data: 90. Test data: 10. Train an SVM classifier with each of 3 kernel functions and plot the results.

Reference: http://scikit-learn.org/stable/auto_examples/exercises/plot_iris_exercise.html#sphx-glr-auto-examples-exercises-plot-iris-exercise-py
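A minimal sketch of such an exercise with scikit-learn is shown below. Several details are assumptions rather than facts from the slide: the use of the iris dataset restricted to classes 1 and 2 and its first two features, the random seed, the kernel list (`linear`, `rbf`, `poly`), and `gamma=10`. Plotting is omitted to keep the sketch short.

```python
import numpy as np
from sklearn import datasets, svm

iris = datasets.load_iris()
X, y = iris.data, iris.target

# Assumption: keep classes 1 and 2 and the first two features (100 samples).
X = X[y != 0, :2]
y = y[y != 0]

# Shuffle with a fixed seed, then split 90 / 10 into training and test data.
rng = np.random.RandomState(0)
order = rng.permutation(len(X))
X, y = X[order], y[order]
n_train = int(0.9 * len(X))
X_train, y_train = X[:n_train], y[:n_train]
X_test, y_test = X[n_train:], y[n_train:]

# Fit one classifier per kernel and record its test accuracy.
scores = {}
for kernel in ("linear", "rbf", "poly"):
    clf = svm.SVC(kernel=kernel, gamma=10)
    clf.fit(X_train, y_train)
    scores[kernel] = clf.score(X_test, y_test)
    print(kernel, scores[kernel])
```

With only 10 test samples, each misclassified point changes the accuracy by 0.1, so the scores depend strongly on the particular shuffle.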

SVM Exercise: Result. Training data: 90. Test data: 10. Accuracy for the 3 kernel functions: 0.5 / 0.6 / 0.5.