SEAL: Scalable Memory Fabric for Silicon Interposer-Based Systems

The SEAL lab is designing a scalable hybrid memory fabric for silicon interposer-based multi-core systems to support memory-intensive applications such as in-memory computing. The research aims to provide low-latency, high-bandwidth processor-memory communication through the topology, technology, and routing algorithm design of the memory network on the silicon interposer.

Presentation Transcript

Scalable and Energy-efficient Architecture Lab (SEAL)
Scalable Memory Fabric for Silicon Interposer-Based Multi-Core Systems
Itir Akgun*, Jia Zhan*, Yuangang Wang†, and Yuan Xie*
*University of California, Santa Barbara; †Huawei

One-Page Summary
Aim: To design a scalable hybrid memory fabric for silicon interposer-based 2.5D designs that enables memory-intensive applications, such as in-memory computing, by providing low-latency, high-bandwidth processor-memory communication.
Design: 1) topology, 2) technology, and 3) routing algorithm design for the hybrid memory network on the silicon interposer.
Conclusion: The proposed memory network in silicon interposer (MemNiSI) design can provide a scalable fabric.
9/30/2024 ~ The 34th IEEE International Conference on Computer Design

Agenda: Background and Motivation

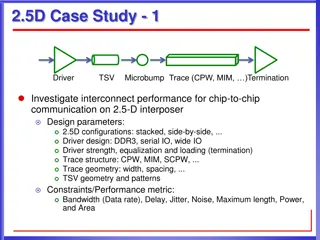

Background: Silicon interposer in 2.5D
- Interposer: a silicon die with metal layers that allows face-down integration of chips via micro-bumps
- Passive vs. active interposers
Image source: https://upload.wikimedia.org/wikipedia/commons/e/e7/AMD_Fiji_GPU_package_with_GPU,_HBM_memory_and_interposer.jpg

Background: In-memory computing
- Keeps an application's working dataset in memory for faster access, so it needs large memory capacity
- Target applications: big data processing, real-time analytics, in-memory databases

Motivation
- Requirements for memory-intensive applications: memory capacity, bandwidth, performance
- Scalable solutions for the memory requirements:
  - 3D stacking: (+) memory capacity, bandwidth, power consumption, performance; (-) thermals, scalability
  - 2.5D stacking: (+) all the benefits of 3D stacking, plus thermals and scalability

Agenda: Architecture

Architecture
- Multi-core CPU (CMP): cores connected in a 2D mesh
- 3D-stacked memory modules (3D DRAM)
- Interposer
[Figure: a 4x4 grid of cores (C) surrounded by 16 memory modules (M) on the interposer.]

Agenda: Network Design

Network Design: Topology
- Point-to-point: (+) fewer hops to memory; (-) poor bandwidth; (-) poor scalability
- Daisy chain: (-) more hops to memory; (+) improved bandwidth; (-) poor scalability
[Figure: point-to-point and daisy chain layouts of cores (C) and memory modules (M), with pillar nodes marked.]

Network Design: Topology
MemNiSI: Memory Network in Silicon Interposer
- (+) Fewer hops to memory
- (+) Improved bandwidth
- (+) Improved scalability
[Figure: logical layout and physical layout of cores (C) and memory modules (M).]

Network Design: Technology
- Active vs. passive interposers
- Process technology
- A combination of these factors may result in network frequency discrepancies
[Figure: process-node labels (45 nm and 65 nm).]

Network Design: Routing Algorithm
Baseline topologies
- Pillar router first (PRF): modified XY routing
[Figure: request path and response path between cores (C) and memory modules (M).]
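The slide names PRF only as "modified XY-routing" and gives no further detail. The sketch below shows plain dimension-order (XY) routing on a 4x4 mesh, plus one possible reading of "pillar router first" in which a packet is XY-routed to a designated pillar router before it leaves the core mesh. The names `Coord`, `Port`, `xy_next_port`, `step`, and `route_to_pillar` are hypothetical, and the pillar-first interpretation is an assumption rather than the authors' definition.

```cpp
#include <cassert>
#include <vector>

// Assumed mesh size, taken from the 4x4 mesh in the simulator setup.
constexpr int kMeshDim = 4;

// Router position in the mesh (hypothetical type).
struct Coord {
    int x;
    int y;
    bool operator==(const Coord& o) const { return x == o.x && y == o.y; }
};

enum class Port { East, West, North, South, Local };

bool in_mesh(Coord c) {
    return c.x >= 0 && c.x < kMeshDim && c.y >= 0 && c.y < kMeshDim;
}

// Dimension-order (XY) routing: correct the X offset first, then Y.
Port xy_next_port(Coord cur, Coord dst) {
    if (cur.x < dst.x) return Port::East;
    if (cur.x > dst.x) return Port::West;
    if (cur.y < dst.y) return Port::North;
    if (cur.y > dst.y) return Port::South;
    return Port::Local;  // already at the destination router
}

// Move one hop through the given output port.
Coord step(Coord c, Port p) {
    switch (p) {
        case Port::East:  return {c.x + 1, c.y};
        case Port::West:  return {c.x - 1, c.y};
        case Port::North: return {c.x, c.y + 1};
        case Port::South: return {c.x, c.y - 1};
        case Port::Local: return c;
    }
    return c;
}

// Assumed "pillar first" behavior: XY-route from the source core to the
// pillar router. Returns every router visited, including both endpoints.
std::vector<Coord> route_to_pillar(Coord src, Coord pillar) {
    assert(in_mesh(src) && in_mesh(pillar));
    std::vector<Coord> path{src};
    Coord cur = src;
    while (!(cur == pillar)) {
        cur = step(cur, xy_next_port(cur, pillar));
        path.push_back(cur);
    }
    return path;
}
```

Under these assumptions, a packet from core (0,0) to a pillar at (3,2) first moves three hops east and then two hops north, so the returned path holds six routers.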

Network Design: Routing Algorithm
Memory Network in Silicon Interposer (MemNiSI)
1) Network in Silicon Interposer Heavy (NiSIH)
[Figure: request and response path between cores (C) and memory modules (M).]

Network Design: Routing Algorithm
Memory Network in Silicon Interposer (MemNiSI)
2) Network-on-Chip Heavy (NoCH)
[Figure: request and response path between cores (C) and memory modules (M).]

Network Design: Routing Algorithm
Memory Network in Silicon Interposer (MemNiSI)
3) Choose-Faster-Path (CFP)
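The slide gives only the name of CFP, so the selection rule below is a guess at how a "choose faster path" decision could work, not the authors' algorithm. It assumes each candidate path is described by its hop counts in the NoC and in the NiSI, that every hop costs one pass through a router pipeline, and that the two networks may run at different frequencies. All names (`PathHops`, `estimate_ns`, `choose_faster_path`) and the latency model are hypothetical.

```cpp
#include <cassert>

enum class Path { NoCH, NiSIH };

// Hop counts of one candidate path in each network (hypothetical type).
struct PathHops {
    int noc_hops;   // hops taken in the on-chip network
    int nisi_hops;  // hops taken in the interposer network
};

// Assumed latency model: each hop costs `router_cycles` cycles of the
// network it belongs to. Frequencies are in GHz, so the result is in ns.
double estimate_ns(PathHops h, double noc_ghz, double nisi_ghz,
                   int router_cycles) {
    assert(noc_ghz > 0.0 && nisi_ghz > 0.0);
    return h.noc_hops * router_cycles / noc_ghz +
           h.nisi_hops * router_cycles / nisi_ghz;
}

// Pick whichever candidate path has the lower estimated latency.
// Ties go to NoCH; that tie-break is arbitrary.
Path choose_faster_path(PathHops noch, PathHops nisih, double noc_ghz,
                        double nisi_ghz, int router_cycles = 4) {
    const double t_noch = estimate_ns(noch, noc_ghz, nisi_ghz, router_cycles);
    const double t_nisih = estimate_ns(nisih, noc_ghz, nisi_ghz, router_cycles);
    return t_noch <= t_nisih ? Path::NoCH : Path::NiSIH;
}
```

With a NoC-heavy candidate of 5 NoC hops and 1 NiSI hop, and a NiSI-heavy candidate of 1 NoC hop and 5 NiSI hops, this model picks NoCH when the NoC runs four times faster than the NiSI (18 ns vs. 42 ns) and NiSIH when the ratio is reversed.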

Agenda: Evaluations

Simulator Setup
Event-driven simulator in C++ to model the hybrid network.
- CPU and memory: 16 cores, 2 GHz, 64 B cache line size, 16 memory nodes, 4 GB/node, 100-cycle memory latency
- On-chip network: 4-stage router pipeline, 4 VCs/port, 4 buffers/VC, 16 B flit size, 4 flits maximum packet size, 4x4 mesh topology
- Interposer network: 4-stage router pipeline, 4 VCs/port, 4 buffers/VC, 16 B flit size, 4 flits maximum packet size
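The parameters on this slide fit together arithmetically: a 64 B cache line split into 16 B flits gives exactly the 4-flit maximum packet size. The sketch below collects the listed values in a hypothetical `SimConfig` struct and derives a few such quantities. The struct and function names are illustrative and are not taken from the authors' simulator, and the conversion of memory latency to nanoseconds assumes the 100 cycles are counted at the 2 GHz core clock.

```cpp
#include <cassert>

// Parameter values as listed on the slide; the struct itself is hypothetical.
struct SimConfig {
    int cores = 16;
    double core_ghz = 2.0;
    int cache_line_bytes = 64;
    int memory_nodes = 16;
    int gb_per_node = 4;
    int memory_latency_cycles = 100;
    int router_pipeline_stages = 4;
    int vcs_per_port = 4;
    int buffers_per_vc = 4;
    int flit_bytes = 16;
    int max_packet_flits = 4;
};

// Number of flits needed to carry one cache line (rounded up).
int flits_per_cache_line(const SimConfig& c) {
    assert(c.flit_bytes > 0);
    return (c.cache_line_bytes + c.flit_bytes - 1) / c.flit_bytes;
}

// Total memory capacity across all memory nodes, in GB.
int total_memory_gb(const SimConfig& c) {
    return c.memory_nodes * c.gb_per_node;
}

// Memory latency in ns, ASSUMING the cycle count refers to the core clock.
double memory_latency_ns(const SimConfig& c) {
    assert(c.core_ghz > 0.0);
    return c.memory_latency_cycles / c.core_ghz;
}
```

With the default values, one cache line occupies 4 flits, total capacity is 64 GB, and the memory latency works out to 50 ns under the stated clock assumption.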

Workloads Setup
- Synthetic traffic: uniform-random, hotspot
- In-memory computing workloads:
  - CloudSuite: Pagerank, Tunkrank, Spark-grep, Memcached
  - BigDataBench: Spark-sort
  - Redis

Topology Study
Average packet latency comparison of the network topologies under uniform-random traffic. MemNiSI is more scalable under heavy memory traffic.
[Figure: mean packet latency (ns) vs. injection rate (0.1 to 0.8) for point-to-point, daisy chain, and MemNiSI.]

Topology Study
Average packet latency comparison of the network topologies under hotspot traffic. On average, MemNiSI is 8.9% faster than the point-to-point topology and 15.3% faster than the daisy chain topology under hotspot traffic.
[Figure: mean packet latency (ns) for Hotspot_20, Hotspot_40, Hotspot_60, Hotspot_80, and their average, for point-to-point, daisy chain, and MemNiSI.]

Sensitivity Analysis
Network frequency sensitivity analysis for the CFP algorithm. Annotated ratios: faster NoC at 4:1; faster NiSI at 1:4.
[Figure: algorithm choice (%), NoCH vs. NiSIH, for network frequency ratios (NoC:NiSI) from 100:1 to 1:100.]

Routing Algorithm Study
Routing algorithm comparison under synthetic traffic, normalized to the NiSIH algorithm. CFP can outperform the other algorithms by up to 6.85%.
[Figure: normalized mean packet latency of NiSIH, NoCH, and CFP under uniform-random traffic, hotspot traffic, and their average, at network frequency ratios (NoC:NiSI) of 1:1, 1:4, and 4:1.]

Routing Algorithm Study
Routing algorithm comparison under in-memory computing workloads, normalized to the NiSIH algorithm. On average, CFP outperforms NiSIH by up to 3.4% and NoCH by up to 10.0%. At a 1:1 frequency ratio, it outperforms NiSIH by 1.65% and NoCH by 7.99%.
[Figure: normalized mean packet latency of NiSIH, NoCH, and CFP for pagerank, tunkrank, spark-grep, spark-sort, memcached, redis, and their average, at network frequency ratios (NoC:NiSI) of 1:1, 1:4, and 4:1.]

Agenda: Conclusion

Conclusion
- Verdict: A memory network approach can provide a scalable fabric for low-latency, high-bandwidth communication in interposer-based 2.5D designs.
- Future work: evaluating the power, area, cost, and reliability of the memory network in silicon interposer.

Thank you!