Riemannian Normalizing Flow on Variational Wasserstein Autoencoder for Text Modeling

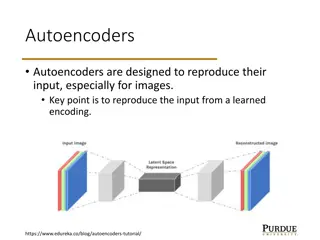

This study explores the use of Riemannian Normalizing Flow on Variational Wasserstein Autoencoder (WAE) to address the KL vanishing problem in Variational Autoencoders (VAE) for text modeling. By leveraging Riemannian geometry, the Normalizing Flow approach aims to prevent the collapse of the posterior distribution to a standard Gaussian prior, enabling more accurate sentence generation from latent codes. Experimental results demonstrate the effectiveness of this method in mitigating the KL vanishing issue.

Presentation Transcript

Riemannian Normalizing Flow on Variational Wasserstein Autoencoder for Text Modeling. Prince Wang, William Wang, UC Santa Barbara.

Outline: VAE and the KL vanishing problem; Motivation: why Riemannian Normalizing Flow/WAE; Details; Experimental Results.

VAE: KL vanishing. A VAE can generate sentences given latent codes z, for example: "i were to buy any groceries ." / "horses are to buy any groceries ." / "horses are to buy any animal ." / "horses the favorite any animal ." The KL term measures the gap between posterior and prior.
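
To make the KL term concrete, here is a minimal sketch assuming the usual VAE setup of a diagonal Gaussian posterior and a standard Gaussian prior (the slide does not state the parameterization). The function name `gaussian_kl` and the example values are hypothetical. When the posterior collapses onto the prior, the KL term is exactly zero and the latent code carries no information about the input.

```python
import math

def gaussian_kl(mu, logvar):
    """KL( N(mu, diag(exp(logvar))) || N(0, I) ) in nats, closed form."""
    return 0.5 * sum(m * m + math.exp(lv) - lv - 1.0 for m, lv in zip(mu, logvar))

# A posterior that encodes something about the input sits away from the prior.
informative = gaussian_kl(mu=[1.5, -0.8], logvar=[-1.0, -2.0])

# A collapsed posterior equals the prior: mean 0, log-variance 0.
collapsed = gaussian_kl(mu=[0.0, 0.0], logvar=[0.0, 0.0])

print(informative, collapsed)
```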

Previous works: Generating Sentences from a Continuous Space (2015, Bowman); Improved Variational Autoencoders for Text Modeling using Dilated Convolutions (2017, Yang); Spherical Latent Spaces for Stable Variational Autoencoders (2018, Xu); Semi-Amortized Variational Autoencoders (2018, Kim); Cyclical Annealing Schedule: A Simple Approach to Mitigating KL Vanishing (2019, Fu).

Normalizing Flow: makes the posterior harder to collapse to a standard Gaussian prior. Source: https://lilianweng.github.io/lil-log/2018/10/13/flow-based-deep-generative-models.html

Normalizing Flow: tighter likelihood approximation. The objective has three labelled terms: Reconstruction, Jacobian, and KL.
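
The slide labels three terms: reconstruction, Jacobian, and KL. One standard form of a flow-based lower bound with those three parts is sketched below. The notation ($q_0$ for the base posterior, $f_k$ for the $k$-th flow step, $z_k = f_k(z_{k-1})$, and $K$ steps in total) is an assumption for illustration and may differ from the slide's own equation.

$$
\log p(x) \;\ge\; \mathbb{E}_{q_0(z_0 \mid x)}\Big[
\underbrace{\log p(x \mid z_K)}_{\text{reconstruction}}
+ \underbrace{\sum_{k=1}^{K} \log \left| \det \frac{\partial f_k}{\partial z_{k-1}} \right|}_{\text{Jacobian}}
- \underbrace{\big( \log q_0(z_0 \mid x) - \log p(z_K) \big)}_{\text{KL-like term}}
\Big]
$$

With $K = 0$ the Jacobian sum vanishes and the bound reduces to the ordinary VAE objective.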

Why Riemannian VAE? The latent space is not a flat Euclidean space; it should be curved.

Riemannian Metric (equation labels: Jacobian, Riemannian metric).

Match latent manifold with input manifold (equation label: curve length).
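
A common way to make the metric and curve length concrete is given below as a sketch under assumed notation, not as the slide's exact formulas. Let $g$ be a smooth map from latent codes $z$ to the input space, with Jacobian $J(z) = \partial g / \partial z$, and let $\gamma : [0, 1] \to \mathcal{Z}$ be a curve in latent space. The metric induced on the latent space and the length of the curve measured in input space are

$$
G(z) = J(z)^\top J(z),
\qquad
L(\gamma) = \int_0^1 \big\| J(\gamma(t))\, \dot{\gamma}(t) \big\| \, dt
= \int_0^1 \sqrt{ \dot{\gamma}(t)^\top G(\gamma(t))\, \dot{\gamma}(t) } \, dt .
$$

When $G$ is the identity everywhere, the latent space is flat and $L(\gamma)$ is the ordinary Euclidean length.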

Modeling curvature by NF: planar flow (equation labels: Planar Flow, Curvature).
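
For readers unfamiliar with planar flows, the following is a minimal sketch of a single standard planar flow step, f(z) = z + u * tanh(w . z + b), together with its log-determinant. It shows the generic transformation only, not the Riemannian variant from the talk. The function name `planar_flow` and the parameter values are hypothetical, and the tanh nonlinearity is an assumption. The step is invertible when w . u >= -1, which holds for the values used here.

```python
import math

def planar_flow(z, u, w, b):
    """Apply f(z) = z + u * tanh(w.z + b); return f(z) and log|det df/dz|."""
    a = sum(wi * zi for wi, zi in zip(w, z)) + b
    h = math.tanh(a)
    fz = [zi + ui * h for zi, ui in zip(z, u)]
    # Matrix determinant lemma: det(I + u psi^T) = 1 + u.psi, with psi = h'(a) * w.
    h_prime = 1.0 - h * h
    det = 1.0 + h_prime * sum(ui * wi for ui, wi in zip(u, w))
    return fz, math.log(abs(det))

# Hypothetical 2-D example values.
z = [0.5, -1.0]
u, w, b = [0.3, 0.2], [1.0, -0.5], 0.1
fz, log_det = planar_flow(z, u, w, b)
print(fz, log_det)
```

The log-determinant costs O(d) rather than O(d^3), which is what makes planar flows cheap to stack.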

Modeling curvature by NF: to match the geometry of the latent space with that of the input space, the Jacobian determinant needs to be large where the input manifold has high curvature.

Wasserstein Distance: Wasserstein Autoencoder (ICLR 2018, Ilya Tolstikhin). Replace KL with Maximum Mean Discrepancy (MMD).
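
As an illustration of what an MMD penalty measures, here is a minimal sketch of a biased sample estimate of squared MMD between two sets of latent codes. The RBF kernel, the bandwidth, the sample sizes, and the names `rbf` and `mmd2` are assumptions chosen for brevity; the talk does not specify its kernel.

```python
import math
import random

def rbf(x, y, bandwidth=1.0):
    """Gaussian (RBF) kernel between two vectors."""
    sq = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq / (2.0 * bandwidth ** 2))

def mmd2(xs, ys, bandwidth=1.0):
    """Biased (V-statistic) estimate of squared MMD between two samples."""
    def mean_k(a, b):
        return sum(rbf(p, q, bandwidth) for p in a for q in b) / (len(a) * len(b))
    return mean_k(xs, xs) + mean_k(ys, ys) - 2.0 * mean_k(xs, ys)

rng = random.Random(0)
prior = [[rng.gauss(0, 1), rng.gauss(0, 1)] for _ in range(200)]
matched = [[rng.gauss(0, 1), rng.gauss(0, 1)] for _ in range(200)]  # same distribution
shifted = [[rng.gauss(2, 1), rng.gauss(2, 1)] for _ in range(200)]  # mean moved to (2, 2)

print(mmd2(matched, prior), mmd2(shifted, prior))
```

Unlike a per-example KL term, this penalty compares whole batches of codes against prior samples, so individual posteriors are not pushed onto the prior one by one.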

Wasserstein RNF: the objective combines a reconstruction term, an MMD loss, and a KLD loss with NF.

Results: Language Models (Negative Log-likelihood / KL / Perplexity).
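
For reference, perplexity is conventionally the exponential of the per-token negative log-likelihood. The sketch below assumes the NLL is measured in nats and summed over a corpus; the function name `perplexity` and the numbers are toy values, not results from the talk. For variational models the NLL is typically an upper-bound estimate, so the resulting perplexity is an upper bound too.

```python
import math

def perplexity(total_nll_nats, num_tokens):
    """Per-token perplexity from a summed negative log-likelihood in nats."""
    return math.exp(total_nll_nats / num_tokens)

# Toy numbers, not taken from the slides: 100 nats spread over 20 tokens.
ppl = perplexity(100.0, 20)
print(ppl)
```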

Results: KL divergence (plots for Yelp and PTB).

Results: Negative log-likelihood (bar charts). PTB: WAE 104, WAE-NF 92, WAE-RNF 91. Yelp: WAE 198, WAE-NF 184, WAE-RNF 183.

Results: Mutual information (plot).

Conclusion: We propose using Normalizing Flow and Wasserstein distance for variational language models. We design a Riemannian Normalizing Flow to learn a smooth latent space. Empirical results indicate that Riemannian Normalizing Flow with Wasserstein distance helps avert KL vanishing. Code: https://github.com/kingofspace0wzz/wae-rnf-lm

Thank you! Q & A. Code: https://github.com/kingofspace0wzz/wae-rnf-lm Paper: https://arxiv.org/abs/1904.02399