Revealing Introduction to Hidden Markov Models

Explore the concepts of Hidden Markov Models (HMMs) and Markov Chains in this revealing introduction. Learn about the applications, benefits, and structure of HMMs through examples and visual representations. Understand how HMMs leverage machine learning techniques for tasks like speech recognition and information security.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

A Revealing Introduction to Hidden Markov Models Mark Stamp A Revealing Introduction to HMMs 1

Hidden Markov Models What is a hidden Markov model (HMM)? o A machine learning technique o trained via a discrete hill climb technique o Two for the price of one! Where are HMMs used? o Speech recognition, information security, and far too many other things to list o Q: Why are HMMs so useful? o A: Widely applicable and efficient algorithms A Revealing Introduction to HMMs 2

Markov Chain Markov chain o Memoryless random process o Transitions depend only on current state (Markov chain of order 1) o and transition probability matrix Example? o See next slide A Revealing Introduction to HMMs 3

Markov Chain 0.7 Suppose we re interested in average annual temperature o Only consider Hot and Cold From recorded history, obtain probabilities for o Year-to-year transitions o Based on thermometer readings for recent years H 0.3 0.4 C 0.6 A Revealing Introduction to HMMs 4

Markov Chain 0.7 Transition probability matrix H 0.3 0.4 Matrix is denoted as A C 0.6 Note, Ais row stochastic A Revealing Introduction to HMMs 5

Markov Chain Can also include begin, end states Matrix for begin state denoted o In this example, 0.7 0.6 H 0.3 0.4 begin end C Note that also row stochastic 0.4 0.6 A Revealing Introduction to HMMs 6

Hidden Markov Model HMM includes a Markov chain o But the Markov process is hidden , i.e., we can t directly observe the Markov process o Instead, observe things that are probabilistically related to hidden states o It s as if there is a curtain between Markov chain and the observations Example on next few slides A Revealing Introduction to HMMs 7

HMM Example Consider H/C annual temp example Suppose we want to know H or C annual temperature in distant past o Before thermometers were invented o Note, we only distinguish between H and C We ll assume transition between Hot and Cold years is same as today o Implies that the A matrix is known A Revealing Introduction to HMMs 8

HMM Example Assume temps follow a Markov process o But, we cannot observe temperature in past We find modern evidence that tree ring size is related to temperature o Discover this by looking at recent data We ll only consider 3 tree ring sizes o Small, Medium, Large (S, M, L, respectively) Measure tree ring sizes and recorded temperatures to determine relationship A Revealing Introduction to HMMs 9

HMM Example We find that tree ring sizes and temperature related by This is known as the B matrix The matrix B is also row stochastic A Revealing Introduction to HMMs 10

HMM Example Can we now find H/C temps in past? We cannot measure (observe) temps But we can measure tree ring sizes and tree ring size is related to temp o Based on probabilities in the B matrix Can we say something intelligent about temps over some interval in the past? A Revealing Introduction to HMMs 11

HMM Notation A lot of notation is required o Notation may be the hardest part A Revealing Introduction to HMMs 12

HMM Notation For notational convenience, observations from V = {0,1, ,M-1} That is, The matrix A = {aij} is N x N, where o The matrix B = {bj(k)} is N x M, where o o Atypical notation for elements of B A Revealing Introduction to HMMs 13

HMM Example Consider our temperature example What are the observations? o V = {0,1,2}, corresponding to S,M,L What are states of Markov process? o Q = {H,C} What are A,B, , and T? o A,B, on previous slides o T is number of tree rings measured What are N and M? o N = 2 and M = 3 A Revealing Introduction to HMMs 14

Generic HMM Generic view of HMM HMM defined by A,B, and We denote HMM model as = (A,B, ) A Revealing Introduction to HMMs 15

HMM Example Suppose that we observe tree ring sizes o Over a 4 year period of interest: S,M,S,L o Then = (0, 1, 0, 2) Most likely (hidden) state sequence? o That is, most likely X = (X0, X1, X2, X3) Let X0be prob. of starting in state X0 Note prob. of initial observation o And aX0,X1 is prob. of transition X0 to X1 And so on A Revealing Introduction to HMMs 16

HMM Example Bottom line? We can compute P(X) for any given X For X = (X0, X1, X2, X3) we have Assuming we observe (0,1,0,2), then what is probability of, say, HHCC? Plug into formula above to find A Revealing Introduction to HMMs 17

HMM Example Do same for all 4-state seq s We find that the winner is o CCCH Not so fast my friend! A Revealing Introduction to HMMs 18

HMM Example The pathCCCH scores the highest In dynamic programming (DP), we find highest scoring path But, in HMM we maximize expected number of correct states o Sometimes called EM algorithm o That is, expectation maximization How does HMM work in this example? A Revealing Introduction to HMMs 19

HMM Example For first position in sequence o Sum probabilities for all paths that have H in 1st position, compare to sum of probs for paths with C in 1st position biggest wins Repeat for each position and we find A Revealing Introduction to HMMs 20

HMM Example So, HMM (or EM) solution gives us CHCH While DP (or path) solution is CCCH Which solution is better? Neither solution is better! o Just using different definitions of best A Revealing Introduction to HMMs 21

HMM Paradox? HMM maximizes expected number of correct states o Whereas DP chooses best overall path Possible for HMM to choose a path that is impossible o Could be a transition probability of 0 Cannot have impossible path with DP Is this a flaw with HMM? o No, it s a feature A Revealing Introduction to HMMs 22

Probability of Observations Table computed for = (0,1,0,2) For this sequence, P( ) = .000412 + .000035 + .000706 + + .000847 = left to the reader Similarly for other observations A Revealing Introduction to HMMs 23

HMM Model An HMM is defined by the three matrices, A, B, and Note that M and N are implied, since they are the dimensions of matrices So, we denote an HMM model as = (A,B, ) A Revealing Introduction to HMMs 24

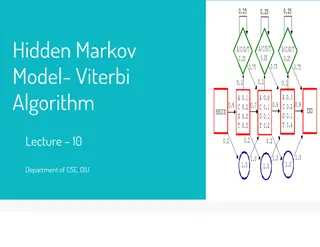

The Three Problems HMMs used to solve 3 problems Problem 1: Given a model = (A,B, ) and observation sequence O, find P(O| ) o That is, we can score an observation sequence to see how well it fits a given model Problem 2: Given = (A,B, ) and O, find an optimal state sequence (in HMM sense) o Uncover hidden part (like previous example) Problem 3: Given O, N, and M, find the model that maximizes probability of O o That is, train a model to fit observations A Revealing Introduction to HMMs 25

HMMs in Practice We often, we use HMMs as follows: Given an observation sequence o Assume that (hidden) Markov process exists Train a model based on observations o That is, solve Problem 3 o Best N can be found by trial and error Then given a sequence of observations, score it versus the model we trained o This is Problem 1 high score implies similar to training data, low score says it s not similar A Revealing Introduction to HMMs 26

HMMs in Practice Previous slide gives sense in which HMM is a machine learning technique o To train model, we do not need to specify anything except the parameter N o Best N often found by trial and error So, we don t need to think too much o Just train HMM and then use it o Practical, since efficient algorithms exist for training HMM and scoring A Revealing Introduction to HMMs 27

The Three Solutions We give detailed solutions to 3 problems o Note: We must find efficient solutions The three problems: o Problem 1: Score an observation sequence versus a given model o Problem 2: Given a model, uncover hidden part o Problem 3: Given an observation sequence, train a model Recall that we considered example for 2 and 1 o But direct solutions are soooooo inefficient A Revealing Introduction to HMMs 28

Solution 1 Score observations versus a given model o Given model = (A,B, ) and observation sequence O=(O0,O1, ,OT-1), find P(O| ) Denote hidden states as X = (X0, X1, . . . , XT-1) Then from definition of B, P(O|X, )=bX0(O0) bX1(O1) bXT-1(OT-1) And from definition of A and , P(X| )= X0 aX0,X1 aX1,X2 aXT-2,XT-1 A Revealing Introduction to HMMs 29

Solution 1 Elementary conditional probability fact: P(O,X| ) = P(O|X, ) P(X| ) Sum over all possible state sequences X, P(O| ) = P(O,X| ) = P(O|X, ) P(X| ) = X0bX0(O0)aX0,X1bX1(O1) aXT-2,XT-1bXT-1(OT-1) This works but way too costly Requires about 2TNT multiplications o Why? There better be a better way A Revealing Introduction to HMMs 30

Forward Algorithm Instead, use forward algorithm o Or alpha pass For t = 0,1, ,T-1 and i=0,1, ,N-1, let t(i) = P(O0,O1, ,Ot,Xt=qi| ) Probability of partial sum to t, and Markov process is in state qi at step t Can be computed recursively, efficiently A Revealing Introduction to HMMs 31

Forward Algorithm Let 0(i) = ibi(O0) for i = 0,1, ,N-1 For t = 1,2, ,T-1 and i=0,1, ,N-1, let t(i) = ( t-1(j)aji)bi(Ot) o Where the sum is from j = 0 to N-1 From definition of t(i) we see P(O| ) = T-1(i) o Where the sum is from i = 0 to N-1 This requires only N2T multiplications A Revealing Introduction to HMMs 32

Solution 2 Given a model, find hidden states o Given = (A,B, ) and O, find an optimal state sequence o Recall that optimal means maximize expected number of correct states o In contrast, DP finds best scoring path For temp/tree ring example, solved this o But hopelessly inefficient approach A better way: backward algorithm o Or beta pass A Revealing Introduction to HMMs 33

Backward Algorithm For t = 0,1, ,T-1 and i = 0,1, ,N-1, let t(i) = P(Ot+1,Ot+2, ,OT-1|Xt=qi, ) Probability of partial sum from t to end and Markov process in state qi at step t Analogous to the forward algorithm As with forward algorithm, this can be computed recursively and efficiently A Revealing Introduction to HMMs 34

Backward Algorithm Let T-1(i) = 1 for i = 0,1, ,N-1 For t = T-2,T-3, ,1 and i = 0,1, ,N-1, let t(i) = aijbj(Ot+1) t+1(j) Where the sum is from j = 0 to N-1 A Revealing Introduction to HMMs 35

Solution 2 For t = 1,2, ,T-1 and i=0,1, ,N-1 define t(i) = P(Xt=qi|O, ) o Most likely state at t is qi that maximizes t(i) We see that t(i) = t(i) t(i)/P(O| ) o And recall P(O| ) = T-1(i) The bottom line? o Forward algorithm solves Problem 1 o Forward/backward algorithms solve Problem 2 (how?) A Revealing Introduction to HMMs 36

Solution 3 Train a model: Given O, N, and M, find that maximizes probability of O We ll iteratively adjust = (A,B, ) to better fit the given observations O o The size of matrices are fixed (N and M) o But elements of matrices can change It is nice that this works o andamazing that it s efficient! A Revealing Introduction to HMMs 37

Solution 3 For t=0,1, ,T-2 and i,j in {0,1, ,N-1}, define di-gammas as t(i,j) = P(Xt=qi, Xt+1=qj|O, ) Note t(i,j) is prob of being in state qi at time t and transiting to state qj at t+1 Then t(i,j) = t(i)aijbj(Ot+1) t+1(j)/P(O| ) And t(i) = t(i,j) o Where sum is from j = 0 to N 1 A Revealing Introduction to HMMs 38

Model Re-estimation Given di-gammas and gammas For i = 0,1, ,N-1 let i = 0(i) For i = 0,1, ,N-1 and j = 0,1, ,N-1 aij = t(i,j)/ t(i) o Where both sums are from t = 0 to T-2 For j = 0,1, ,N-1 and k = 0,1, ,M-1 bj(k) = t(j)/ t(j) o Both sums from from t = 0 to T-1 but only t for which Ot = k are counted in numerator Why does this work? A Revealing Introduction to HMMs 39

Solution 3 To summarize 1. Initialize = (A,B, ) 2. Compute t(i), t(i), t(i,j), t(i) 3. Re-estimate the model = (A,B, ) 4. If P(O| ) increases by more than (where is small), goto 2 A Revealing Introduction to HMMs 40

Solution 3 Some fine points Model initialization o If we have a good guess for = (A,B, ) then we can use it for initialization o If not, let i 1/N, ai,j 1/N, bj(k) 1/M o Subject to row stochastic conditions o But, do not initialize to exactly uniform values Stopping conditions o Stop after some number of iterations and/or o Stop if increase in P(O| ) is too small A Revealing Introduction to HMMs 41

HMM as Discrete Hill Climb Algorithm on previous slides shows that HMM is a discrete hill climb HMM consists of discrete states, Xt o Climb on the elements of the matrices And re-estimation process improves model by modifying parameters o So, climbs toward improved model o This happens in a high-dimensional space A Revealing Introduction to HMMs 42

Dynamic Programming Brief detour For = (A,B, )as above, it s easy to define a dynamic program (DP) Executive summary: o DP is forward algorithm, with sum replaced by max Details on next few slides A Revealing Introduction to HMMs 43

Dynamic Programming Let 0(i) = i bi(O0) for i=0,1, ,N-1 For t=1,2, ,T-1 and i=0,1, ,N-1 compute t(i) = max ( t-1(j)aji)bi(Ot) o Where the max is over j in {0,1, ,N-1} Note that at each t, the DP computes best path for each state, up to that point So, probability of best path is max T-1(j) This max gives the highest probability o Not the best path, for that, see next few slides A Revealing Introduction to HMMs 44

Dynamic Programming To determine optimal path o While computing deltas, keep track of pointers to previous state o When finished, construct optimal path by tracing back points For example, consider temp example: recall that we observe (0,1,0,2) Probabilities for path of length 1: These are the only paths of length 1 A Revealing Introduction to HMMs 45

Dynamic Programming Probabilities for each path of length 2 Best path of length 2 ending with H is CH Best path of length 2 ending with C is CC A Revealing Introduction to HMMs 46

Dynamic Program Continuing, we compute best path ending at H and C at each step And save pointers why? A Revealing Introduction to HMMs 47

Dynamic Program Best final score is .002822 o And thanks to pointers, best path is CCCH But what about underflow? o A serious problem in bigger cases A Revealing Introduction to HMMs 48

Underflow Resistant DP Common trick to prevent underflow: o Instead of multiplying probabilities o add logarithms of probabilities Why does this work? o Because log(xy) = log x + log y o Adding logs does not tend to 0 Note that these logs are negative and we must avoid 0 probabilities A Revealing Introduction to HMMs 49

Underflow Resistant DP Underflow resistant DP algorithm: Let 0(i) = log( i bi(O0)) for i=0,1, ,N-1 For t=1,2, ,T-1 and i=0,1, ,N-1 compute t(i) = max ( t-1(j) + log(aji) + log(bi(Ot))) o Where the max is over j in {0,1, ,N-1} And score of best path is max T-1(j) o As before, must also keep track of paths A Revealing Introduction to HMMs 50