Overview of Interaction Devices and Keyboard Layouts

Explore various interaction devices and keyboard layouts including QWERTY, Dvorak, ABCDE, orbiTouch, phone keyboards, and other text input methods like Dasher and Graffiti. Understand the basics of data entry, general keyboard layouts, and the evolution of input methods through different technologies and designs.

Presentation Transcript

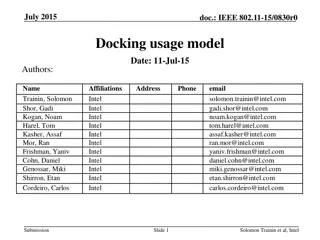

Interaction Devices Chapter 8 Tyler DeFoor, Daniel Lopez, Matthew Molloy, Nicholas Smith, Nicholas Wehrhan

Outline Keyboard Layouts Pointing Devices Nonstandard Interaction and Devices Speech and Auditory Interfaces Displays

The Basics The primary mode of data entry High learning curve Beginners at 1 keystroke per second Average office worker at 5 keystrokes per second Some reach up to 15 keystrokes per second

General Layouts 1/2 inch square keys 1/4 inch spacing between keys Slightly concave, matte material Tactile and audio feedback very important State indicators

General Layouts (continued) Cursor movement keys Diagonals are optional Usually in an inverted-T Movement performed with other keys TAB, ENTER, SPACE

Keyboard Layouts - QWERTY 1870 Christopher Latham Sholes Slowed down users Meant to unjam keyboards Puts frequently used letters apart Most common layout

Keyboard Layouts - Dvorak Created in the 1920s Greatly reduced finger travel Very slow acceptance Will usually take about 1 week to readjust
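One rough way to see why Dvorak reduces finger travel is to count how many letters of a text fall on the home row of each layout, since home-row keys need no reach. The sketch below is only a proxy under that assumption, not a real finger-travel model; the function name `home_row_share` and the sample sentence are illustrative choices.

```python
# Letters on the home row of each layout (punctuation keys are ignored).
QWERTY_HOME_ROW = set("asdfghjkl")
DVORAK_HOME_ROW = set("aoeuidhtns")


def home_row_share(text, home_row):
    """Return the fraction of letters in `text` that sit on `home_row`."""
    letters = [ch for ch in text.lower() if ch.isalpha()]
    if not letters:
        return 0.0
    on_home = sum(1 for ch in letters if ch in home_row)
    return on_home / len(letters)


sample = "the quick brown fox jumps over the lazy dog"
qwerty_share = home_row_share(sample, QWERTY_HOME_ROW)
dvorak_share = home_row_share(sample, DVORAK_HOME_ROW)

print(f"QWERTY home row: {qwerty_share:.0%}")
print(f"Dvorak home row: {dvorak_share:.0%}")
```

For this sample sentence, 10 of the 35 letters are on the QWERTY home row and 18 of 35 are on the Dvorak home row. A fuller comparison would weight each key by its distance from the resting finger position.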

Other Layouts ABCDE Keys in alphabetical order Perfect for non-typists Keypad Argument between layouts

Phone Keyboards Touchscreen keyboards rising iPhone and Android slightly different Many apps augment Swiftkey popular Learns from user Predicts words in sentences
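The idea of a keyboard that learns from the user and predicts the next word can be illustrated with a toy bigram counter. This is a minimal sketch and makes no claim about how SwiftKey or any real keyboard app works; the class name `BigramPredictor` and its methods are hypothetical.

```python
from collections import Counter, defaultdict


class BigramPredictor:
    """Toy next-word predictor: counts which word follows which."""

    def __init__(self):
        # Maps a word to a Counter of the words seen right after it.
        self.following = defaultdict(Counter)

    def learn(self, sentence):
        """Update the counts from one sentence the user typed."""
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            self.following[prev][nxt] += 1

    def predict(self, prev_word, k=3):
        """Return up to k most frequent followers of prev_word."""
        counts = self.following.get(prev_word.lower())
        if not counts:
            return []
        return [word for word, _ in counts.most_common(k)]


predictor = BigramPredictor()
for typed in ["see you at lunch", "see you tomorrow", "see you at home"]:
    predictor.learn(typed)

suggestions = predictor.predict("you")
print(suggestions)
```

After the three sentences above, "at" has followed "you" twice and "tomorrow" once, so "at" is suggested first. A word the predictor has never seen yields no suggestions.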

Other Text Input Methods Dasher Uses eye movement Graffiti Used on Palm Devices

Mechanical Keyboards Uses mechanical switches Smaller actuation force Cherry MX main provider Different switches for different things Reds for gaming

Overview of Pointing Devices Interaction Tasks Direct-Control Indirect-Control Comparison of Devices

Interaction Tasks Selecting Having the User choose from a set of items Ex: Traditional Menu selection File Browsing and Directory Navigation Position Choosing a point in a 1, 2, or 3 Dimensional Space Ex:

Interaction Tasks (Contd) Orient Similar to positioning, Choosing position in 1, 2 or 3 dimensional space Ex: Rotate a symbol on the screen Indicate direction of motion for camera positioning Operation of robot arm Path User rapidly makes series of Position and Orient operations Ex:

Interaction Tasks (Contd) Quantify Specifying a numerical value Ex: Setting real values within a range as parameter Text User Entering/Moving/Editing text within 2D space (screen) Pointing device indicates the location of the insertion/deletion/change Ex:

Direct Control Pointing Devices LIGHTPEN Enables direct control by having user directly point to what they want on the screen Incorporates button for user to click when cursor is over what he/she wants Three Disadvantages Part of the screen obscured User's hand had to leave keyboard

Direct Control Pointing Devices (Contd) Touch Screens Allows direct contact with screen Users lift finger off the screen when done with positioning Produce varied displays to suit task Criticisms Fatigue Hand Obscuring Screen

Indirect Pointing Devices Mouse Hand rests in a comfortable position Buttons can be easily pressed Precise positioning Trackball Implemented as rotating ball that moves a cursor Offers the convenience and precision of a touchscreen 3D Mouse

Indirect Pointing Devices (Contd) Joystick Appealing for tracking purposes Graphics Tablet Touch-sensitive surface separate from the screen Touchpad Built-in near the keyboard that offers convenience and precision, but keeps hands off the display

Touch++ Making pointing devices more versatile and available on different surfaces Fulfills 6 main goals of pointing devices

Comparing Pointing Devices Human Factors: Learning Curve User Satisfaction Position accuracy Speed of motion (Fitts' Law) Cost Durability Space Requirements

Nonstandard Interaction and Devices And Fitts' Law!

Fitts' Law A model of human hand movement Used as a predictive model of the time required to point at an object Fitts noticed that the time required to complete hand movements was dependent on the distance users had to move and the target size Increasing distance between targets results in longer completion time Doubling the distance does not double the completion time Increasing a target's size enables users to point at it more quickly Works best for adult users

Fitts' Law (Contd) MT = a + b * log2(D/W + 1) Example: a = 300 ms, b = 200 ms/bit, D = 14 cm, W = 2 cm Several different versions of Fitts' Law exist, but the above equation works well in a wide range of situations
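The example values on this slide can be worked through directly. The sketch below assumes MT is the movement time in milliseconds, D the distance to the target, and W the target width (in the same unit as D); the function name `fitts_movement_time` is an illustrative choice. It also checks the earlier claim that doubling the distance does not double the completion time.

```python
import math


def fitts_movement_time(a_ms, b_ms_per_bit, distance, width):
    """Predicted movement time: MT = a + b * log2(D/W + 1)."""
    index_of_difficulty = math.log2(distance / width + 1)  # in bits
    return a_ms + b_ms_per_bit * index_of_difficulty


# Slide example: a = 300 ms, b = 200 ms/bit, D = 14 cm, W = 2 cm.
# D/W + 1 = 8, log2(8) = 3 bits, so MT = 300 + 200 * 3 = 900 ms.
mt = fitts_movement_time(300, 200, 14, 2)

# Doubling the distance to 28 cm gives log2(15), about 3.9 bits,
# so the time grows but stays well under twice the original.
mt_double = fitts_movement_time(300, 200, 28, 2)

print(f"D = 14 cm: {mt:.0f} ms")
print(f"D = 28 cm: {mt_double:.0f} ms")
```

With these constants the predicted time is 900 ms at 14 cm and roughly 1081 ms at 28 cm. The constants a and b are device- and user-specific and would normally be fitted from measured pointing data.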

Multiple-touch Touchscreens Allow a single user to use both hands or multiple fingers at once Also allows multiple users to work together on a shared surface More precise item selection, zoom in or out

Bimanual Input What is bimanual? Can facilitate multitasking or compound tasks The nondominant hand sets a frame of reference in which the dominant hand operates in a more precise fashion.

Eye-trackers Gaze-detecting controllers Use video-camera image recognition Midas-touch problem Mostly used for research and evaluation or as an aid for users with motor disabilities
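The Midas-touch problem arises because a gaze tracker cannot tell looking at an item from wanting to select it. One common mitigation is a dwell-time threshold: an item is selected only after the gaze rests on it continuously for a set time. The sketch below assumes gaze samples arrive as (timestamp in ms, target name or None) pairs; the function name `dwell_selections` and the 500 ms default are hypothetical choices, not values from the slides.

```python
def dwell_selections(samples, dwell_ms=500):
    """Return (timestamp, target) pairs for targets gazed at long enough.

    samples: time-ordered list of (timestamp_ms, target_or_None).
    """
    selections = []
    current = None   # target currently under the gaze
    start = None     # time the gaze arrived on `current`
    fired = False    # whether `current` was already selected this visit
    for timestamp, target in samples:
        if target != current:
            # Gaze moved to a new target (or off all targets): restart timer.
            current, start, fired = target, timestamp, False
        elif target is not None and not fired and timestamp - start >= dwell_ms:
            selections.append((timestamp, target))
            fired = True  # select at most once per continuous visit
    return selections


gaze = [
    (0, "ok"), (100, "ok"),             # brief glance: no selection
    (200, "cancel"), (300, "cancel"),
    (800, "cancel"), (900, "cancel"),   # rests 600 ms: selected at 800
    (1000, None),
]
selected = dwell_selections(gaze)
print(selected)
```

Here the brief glance at "ok" is ignored and only "cancel" is selected. A longer threshold reduces accidental selections at the cost of slower interaction.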

Multiple-Degree-of-Freedom Devices Senses multiple dimensions of spatial position and orientation Possible applications include control over 3D objects and virtual reality

Tangible User Interface Allows someone to interact with digital information through a physical environment

Other nonstandard interaction and Devices Paper Mobile Devices Sensors Haptic feedback

Speech and Auditory Interfaces

The Dream HAL in 2001: A Space Odyssey (1968) set the precedent for the goal of human-computer interaction through speech A comparable sci-fi example today: Jarvis from Iron Man The truth is sobering compared to science fiction Lately even science-fiction portrayals in movies have shifted to more visual aspects (Ex: Jarvis)

Issues on the Human's Side Works best for tasks with low cognitive load and low error rates Hard to remember specific vocal commands Speech is limited compared to hand/eye coordination Planning and problem solving can proceed in parallel with hand/eye coordination, but is more difficult to do while speaking Short-term memory is tied to speech processing and is often referred to as acoustic memory

Issues on the Computer's Side Multiple hardware components (microphones and speaker) versus a single display Unstable recognition across changing users, environments, and time Slow compared to displays or visuals Human speech contains much context and interpretation

Then why Speech and Audio Interfaces? Benefits people with disabilities Success in telephones/cell phones Successful for simple tasks Successful for human-like touch on information When speaker's hands are busy Mobility required Speaker's eyes are occupied

Bicycle Analogy It is great to use and has an important role, but it can only carry a light load *In this day and age

Five Variants of Speech and Audio Interfaces 1. Discrete-word Recognition 2. Continuous-speech Recognition 3. Voice Information Systems 4. Speech Generation 5. Non-speech Auditory Interfaces

What is Discrete-word Recognition? Recognizes specific individual words spoken by a specific person Achieves 90% to 98% accuracy for vocabularies of 20 to 200 words Speaker-dependent training: the user repeats words multiple times for more accuracy, but the system has a hard time with changing variables Speaker-independent training: no repetition, but accuracy varies because there is no baseline for interpretation

Success of Discrete-word Recognition Successful in automated telephone/cell phone systems Successful for simple instructions or when user is busy Extremely successful in toys Low cost for lower quality speech chips and mic/speakers Adds a personal touch Errors have few serious repercussions Errors can be brushed off as funny or a challenge

Continuous-speech Recognition

What is Continuous-speech Recognition? Continuous can be defined as ongoing speech with an interaction device (HAL or Jarvis) without the need for the user to stop or dictate specific commands or instructions Can also take the form of continuous dictation from a user Or can be used for scanning/deciphering a continuous form of audio for interpretation

Issues with Continuous-speech Recognition Diverse accents Languages with different grammar implying different context and interpretations Variable Speaking rates Dictation from a user needs to either have training or be prepared ahead of time