Introduction to Association Analysis in Data Mining

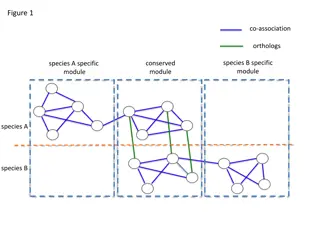

Association analysis, a fundamental concept in data mining, involves finding relationships between items in transactions. It explores the co-occurrence of items to predict future occurrences. Frequent itemsets and association rules play a key role in uncovering patterns within transactional data sets through metrics like support and confidence. The task of association rule mining aims to discover useful rules meeting predefined support and confidence thresholds, facilitating insights into transaction behaviors.

Uploaded on Dec 09, 2024 | 0 Views


Presentation Transcript

Data Mining, Chapter 5
Association Analysis: Basic Concepts

Introduction to Data Mining, 2nd Edition, by Tan, Steinbach, Karpatne, Kumar
02/14/2018

Association Rule Mining

Given a set of transactions, find rules that will predict the occurrence of an item based on the occurrences of other items in the transaction.

Market-basket transactions:

| TID | Items |
|-----|-------|
| 1 | Bread, Milk |
| 2 | Bread, Diaper, Beer, Eggs |
| 3 | Milk, Diaper, Beer, Coke |
| 4 | Bread, Milk, Diaper, Beer |
| 5 | Bread, Milk, Diaper, Coke |

Examples of association rules:

- {Diaper} → {Beer}
- {Milk, Bread} → {Eggs, Coke}
- {Beer, Bread} → {Milk}

Implication means co-occurrence, not causality!

Definition: Frequent Itemset

- Itemset: a collection of one or more items, e.g. {Milk, Bread, Diaper}. A k-itemset is an itemset that contains k items.
- Support count ($\sigma$): frequency of occurrence of an itemset. E.g. $\sigma(\{\text{Milk, Bread, Diaper}\}) = 2$ in the five market-basket transactions (TID 1 to 5).
- Support ($s$): fraction of transactions that contain an itemset. E.g. $s(\{\text{Milk, Bread, Diaper}\}) = 2/5$.
- Frequent itemset: an itemset whose support is greater than or equal to a minsup threshold.

Definition: Association Rule

- Association rule: an implication expression of the form X → Y, where X and Y are itemsets. Example: {Milk, Diaper} → {Beer}.
- Rule evaluation metrics:
  - Support (s): fraction of transactions that contain both X and Y.
  - Confidence (c): measures how often items in Y appear in transactions that contain X.

Example, for {Milk, Diaper} → {Beer} on the five market-basket transactions (TID 1 to 5):

$$s = \frac{\sigma(\text{Milk, Diaper, Beer})}{|T|} = \frac{2}{5} = 0.4$$

$$c = \frac{\sigma(\text{Milk, Diaper, Beer})}{\sigma(\text{Milk, Diaper})} = \frac{2}{3} \approx 0.67$$
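The two metrics can be checked directly against the five market-basket transactions. The following is a minimal sketch; the function names `support_count`, `support`, and `confidence` are illustrative choices, not part of the slides.

```python
# Market-basket transactions from the slides (TID 1..5).
transactions = [
    {"Bread", "Milk"},
    {"Bread", "Diaper", "Beer", "Eggs"},
    {"Milk", "Diaper", "Beer", "Coke"},
    {"Bread", "Milk", "Diaper", "Beer"},
    {"Bread", "Milk", "Diaper", "Coke"},
]


def support_count(itemset, transactions):
    """Number of transactions that contain every item of `itemset` (sigma)."""
    itemset = set(itemset)
    return sum(1 for t in transactions if itemset <= t)


def support(itemset, transactions):
    """Fraction of transactions that contain `itemset` (s)."""
    return support_count(itemset, transactions) / len(transactions)


def confidence(lhs, rhs, transactions):
    """c(lhs -> rhs) = sigma(lhs U rhs) / sigma(lhs)."""
    both = set(lhs) | set(rhs)
    return support_count(both, transactions) / support_count(lhs, transactions)


s = support({"Milk", "Diaper", "Beer"}, transactions)
c = confidence({"Milk", "Diaper"}, {"Beer"}, transactions)
print(f"s = {s:.2f}, c = {c:.2f}")  # s = 0.40, c = 0.67
```

Representing each transaction as a Python `set` keeps the containment test (`itemset <= t`) close to the definition of support count.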

Association Rule Mining Task

Given a set of transactions T, the goal of association rule mining is to find all rules having

- support ≥ minsup threshold
- confidence ≥ minconf threshold

Brute-force approach:

- List all possible association rules
- Compute the support and confidence for each rule
- Prune rules that fail the minsup and minconf thresholds

Computationally prohibitive!

Mining Association Rules

Examples of rules from the five market-basket transactions (TID 1 to 5):

- {Milk, Diaper} → {Beer} (s=0.4, c=0.67)
- {Milk, Beer} → {Diaper} (s=0.4, c=1.0)
- {Diaper, Beer} → {Milk} (s=0.4, c=0.67)
- {Beer} → {Milk, Diaper} (s=0.4, c=0.67)
- {Diaper} → {Milk, Beer} (s=0.4, c=0.5)
- {Milk} → {Diaper, Beer} (s=0.4, c=0.5)

Observations:

- All the above rules are binary partitions of the same itemset: {Milk, Diaper, Beer}
- Rules originating from the same itemset have identical support but can have different confidence
- Thus, we may decouple the support and confidence requirements
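The observation that all binary partitions of one itemset share the same support can be reproduced with a short enumeration. This is a sketch only; `rules_from_itemset` is a hypothetical helper name, and it assumes the itemset occurs in at least one transaction so that no denominator is zero.

```python
from itertools import combinations

# Market-basket transactions from the slides (TID 1..5).
transactions = [
    {"Bread", "Milk"},
    {"Bread", "Diaper", "Beer", "Eggs"},
    {"Milk", "Diaper", "Beer", "Coke"},
    {"Bread", "Milk", "Diaper", "Beer"},
    {"Bread", "Milk", "Diaper", "Coke"},
]


def support_count(itemset):
    """Number of transactions containing every item of `itemset`."""
    itemset = set(itemset)
    return sum(1 for t in transactions if itemset <= t)


def rules_from_itemset(itemset):
    """Enumerate every rule lhs -> rhs that is a binary partition of `itemset`.

    Returns a dict mapping (lhs, rhs) tuples to (support, confidence).
    """
    items = sorted(itemset)
    whole = support_count(items)
    rules = {}
    for r in range(1, len(items)):  # non-empty lhs and non-empty rhs
        for lhs in combinations(items, r):
            rhs = tuple(i for i in items if i not in lhs)
            s = whole / len(transactions)
            c = whole / support_count(lhs)
            rules[(lhs, rhs)] = (s, c)
    return rules


rules = rules_from_itemset({"Milk", "Diaper", "Beer"})
for (lhs, rhs), (s, c) in rules.items():
    print(f"{set(lhs)} -> {set(rhs)}: s={s:.1f}, c={c:.2f}")
```

Every rule reuses the same numerator, the support count of the whole itemset, which is why only the confidence varies from rule to rule.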

Mining Association Rules

Two-step approach:

1. Frequent Itemset Generation: generate all itemsets whose support ≥ minsup
2. Rule Generation: generate high-confidence rules from each frequent itemset, where each rule is a binary partitioning of a frequent itemset

Frequent itemset generation is still computationally expensive.

Frequent Itemset Generation

[Figure: itemset lattice over the items A, B, C, D, E, from the null set at the top, through all 1-, 2-, 3-, and 4-itemsets, to ABCDE at the bottom.]

Given d items, there are $2^d$ possible candidate itemsets.

Frequent Itemset Generation

Brute-force approach:

- Each itemset in the lattice is a candidate frequent itemset
- Count the support of each candidate by scanning the database
- Match each transaction against every candidate

[Figure: the N market-basket transactions (TID 1 to 5, each of width up to w) matched against a list of M candidates.]

Complexity ~ O(NMw) => expensive, since $M = 2^d$!
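To make the cost concrete, the brute-force scan can be sketched for the market-basket data, which has d = 6 distinct items. The sketch below enumerates only the non-empty candidates, so it counts $2^d - 1$ itemsets rather than $2^d$; the variable names are illustrative.

```python
from itertools import combinations

# Market-basket transactions from the slides (TID 1..5).
transactions = [
    {"Bread", "Milk"},
    {"Bread", "Diaper", "Beer", "Eggs"},
    {"Milk", "Diaper", "Beer", "Coke"},
    {"Bread", "Milk", "Diaper", "Beer"},
    {"Bread", "Milk", "Diaper", "Coke"},
]

items = sorted(set().union(*transactions))  # d = 6 distinct items

# Every non-empty subset of the items is a candidate itemset.
candidates = [
    frozenset(combo)
    for k in range(1, len(items) + 1)
    for combo in combinations(items, k)
]

# Match each transaction against every candidate (N * M subset tests).
comparisons = 0
counts = {}
for cand in candidates:
    counts[cand] = 0
    for t in transactions:
        comparisons += 1
        if cand <= t:
            counts[cand] += 1

print(len(items), len(candidates), comparisons)  # 6 63 315
```

Even for this toy data set most of the 63 candidates have zero support, which is the waste that the candidate-reduction strategies aim to avoid.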

Frequent Itemset Generation Strategies

- Reduce the number of candidates (M)
  - Complete search: $M = 2^d$
  - Use pruning techniques to reduce M
- Reduce the number of transactions (N)
  - Reduce size of N as the size of itemset increases
  - Used by DHP and vertical-based mining algorithms
- Reduce the number of comparisons (NM)
  - Use efficient data structures to store the candidates or transactions
  - No need to match every candidate against every transaction

Reducing Number of Candidates

Apriori principle: if an itemset is frequent, then all of its subsets must also be frequent.

The Apriori principle holds due to the following property of the support measure:

$$\forall X, Y : (X \subseteq Y) \Rightarrow s(X) \ge s(Y)$$

- The support of an itemset never exceeds the support of its subsets
- This is known as the anti-monotone property of support

Illustrating Apriori Principle

[Figure: the itemset lattice over the items A to E. One itemset is marked as found to be infrequent, and all of its supersets are marked as pruned.]
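The pruning idea can be sketched as a level-wise search. This is a simplified illustration rather than the textbook's exact candidate-generation procedure: candidates are formed as unions of frequent (k-1)-itemsets, and any candidate with an infrequent (k-1)-subset is discarded before counting. The function name `apriori` and the threshold of 3 transactions are assumptions made for the example.

```python
from itertools import combinations


def apriori(transactions, minsup_count):
    """Return {frozenset: support_count} for all frequent itemsets."""
    # Level 1: count single items.
    counts = {}
    for t in transactions:
        for item in t:
            key = frozenset([item])
            counts[key] = counts.get(key, 0) + 1
    frequent = {s: n for s, n in counts.items() if n >= minsup_count}
    result = dict(frequent)

    k = 2
    while frequent:
        prev = set(frequent)
        # Candidate generation: unions of two frequent (k-1)-itemsets of size k.
        candidates = {a | b for a in prev for b in prev if len(a | b) == k}
        # Apriori pruning: every (k-1)-subset of a candidate must be frequent.
        candidates = {
            c for c in candidates
            if all(frozenset(sub) in prev for sub in combinations(c, k - 1))
        }
        # Support counting: one scan of the transactions per level.
        counts = {c: sum(1 for t in transactions if c <= t) for c in candidates}
        frequent = {s: n for s, n in counts.items() if n >= minsup_count}
        result.update(frequent)
        k += 1
    return result


# Market-basket transactions from the slides (TID 1..5).
transactions = [
    {"Bread", "Milk"},
    {"Bread", "Diaper", "Beer", "Eggs"},
    {"Milk", "Diaper", "Beer", "Coke"},
    {"Bread", "Milk", "Diaper", "Beer"},
    {"Bread", "Milk", "Diaper", "Coke"},
]

# Assumed threshold for illustration: at least 3 of the 5 transactions.
frequent = apriori(transactions, minsup_count=3)
for itemset, n in sorted(frequent.items(), key=lambda kv: (len(kv[0]), sorted(kv[0]))):
    print(sorted(itemset), n)
```

With this threshold, {Bread, Diaper, Beer} and {Milk, Diaper, Beer} are never counted, because each contains an infrequent pair; only {Bread, Milk, Diaper} survives pruning at level 3, and it then fails the support test.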

Rule Generation

Given a frequent itemset L, find all non-empty subsets $f \subset L$ such that $f \rightarrow L - f$ satisfies the minimum confidence requirement.

If {A,B,C,D} is a frequent itemset, the candidate rules are:

- ABC → D, ABD → C, ACD → B, BCD → A
- A → BCD, B → ACD, C → ABD, D → ABC
- AB → CD, AC → BD, AD → BC, BC → AD, BD → AC, CD → AB

If |L| = k, then there are $2^k - 2$ candidate association rules (ignoring $L \rightarrow \emptyset$ and $\emptyset \rightarrow L$).

Rule Generation

In general, confidence does not have an anti-monotone property: c(ABC → D) can be larger or smaller than c(AB → D).

But the confidence of rules generated from the same itemset does have an anti-monotone property. E.g., suppose {A,B,C,D} is a frequent 4-itemset:

$$c(ABC \rightarrow D) \ge c(AB \rightarrow CD) \ge c(A \rightarrow BCD)$$

Confidence is anti-monotone w.r.t. the number of items on the RHS of the rule.
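The ordering for rules from the same itemset follows from the definition of confidence together with the anti-monotone property of support. A short derivation, using $\sigma$ for the support count as in the earlier definitions:

```latex
\begin{align*}
c(ABC \rightarrow D) &= \frac{\sigma(ABCD)}{\sigma(ABC)}, \qquad
c(AB \rightarrow CD) = \frac{\sigma(ABCD)}{\sigma(AB)}, \qquad
c(A \rightarrow BCD) = \frac{\sigma(ABCD)}{\sigma(A)} \\[4pt]
A \subseteq AB \subseteq ABC
  &\;\Rightarrow\; \sigma(A) \ge \sigma(AB) \ge \sigma(ABC) \\[4pt]
  &\;\Rightarrow\; \frac{\sigma(ABCD)}{\sigma(ABC)} \ge \frac{\sigma(ABCD)}{\sigma(AB)} \ge \frac{\sigma(ABCD)}{\sigma(A)}
\end{align*}
```

All three rules share the numerator $\sigma(ABCD)$, so moving items from the left-hand side to the right-hand side can only enlarge the denominator. The argument does not apply to c(ABC → D) versus c(AB → D), because those two rules come from different itemsets and have different numerators.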

Rule Generation for Apriori Algorithm

[Figure: lattice of rules derived from ABCD. Top: ABCD=>{ }. Next level: BCD=>A, ACD=>B, ABD=>C, ABC=>D. Next level: CD=>AB, BD=>AC, BC=>AD, AD=>BC, AC=>BD, AB=>CD. Bottom level: D=>ABC, C=>ABD, B=>ACD, A=>BCD. One rule is marked as a low-confidence rule, and the rules beneath it are marked as pruned.]

Association Analysis: Basic Concepts and Algorithms

Algorithms and Complexity

Factors Affecting Complexity of Apriori

- Choice of minimum support threshold: lowering the support threshold results in more frequent itemsets; this may increase the number of candidates and the maximum length of frequent itemsets.
- Dimensionality (number of items) of the data set: more space is needed to store the support count of each item; if the number of frequent items also increases, both computation and I/O costs may also increase.
- Size of database: since Apriori makes multiple passes, the run time of the algorithm may increase with the number of transactions.
- Average transaction width: transaction width increases with denser data sets; this may increase the maximum length of frequent itemsets and the traversals of the hash tree (the number of subsets in a transaction increases with its width).

Maximal Frequent Itemset

An itemset is maximal frequent if it is frequent and none of its immediate supersets is frequent.

[Figure: itemset lattice over the items A to E with a border separating the frequent itemsets from the infrequent itemsets; the maximal itemsets are marked.]

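Maximal vs Closed Itemsets

| TID | Items |
|-----|-------|
| 1 | ABC |
| 2 | ABCD |
| 3 | BCE |
| 4 | ACDE |
| 5 | DE |

Itemset lattice annotated with the IDs of the transactions that contain each itemset:

- 1-itemsets: A {1,2,4}, B {1,2,3}, C {1,2,3,4}, D {2,4,5}, E {3,4,5}
- 2-itemsets: AB {1,2}, AC {1,2,4}, AD {2,4}, AE {4}, BC {1,2,3}, BD {2}, BE {3}, CD {2,4}, CE {3,4}, DE {4,5}
- 3-itemsets: ABC {1,2}, ABD {2}, ACD {2,4}, ACE {4}, ADE {4}, BCD {2}, BCE {3}, CDE {4}
- 4-itemsets: ABCD {2}, ACDE {4}
- Not supported by any transactions: ABE, BDE, ABCE, ABDE, BCDE, ABCDE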

Maximal vs Closed Frequent Itemsets

[Figure: the same lattice over the items A to E, annotated with transaction IDs, with minimum support = 2. Frequent itemsets are marked either as closed but not maximal or as closed and maximal.]

Minimum support = 2

- # Closed = 9
- # Maximal = 4
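The two counts can be reproduced by brute force from the five transactions ABC, ABCD, BCE, ACDE, DE. The sketch below treats an itemset as closed when none of its immediate supersets has the same support count, which is sufficient because support is anti-monotone; all names are illustrative.

```python
from itertools import combinations

# Transactions from the slide, keyed by TID.
transactions = {
    1: set("ABC"),
    2: set("ABCD"),
    3: set("BCE"),
    4: set("ACDE"),
    5: set("DE"),
}
minsup_count = 2
items = sorted(set().union(*transactions.values()))


def support_count(itemset):
    """Number of transactions that contain every item of `itemset`."""
    return sum(1 for t in transactions.values() if itemset <= t)


# Brute force is fine here: only 2^5 - 1 = 31 non-empty itemsets.
frequent = {}
for k in range(1, len(items) + 1):
    for combo in combinations(items, k):
        itemset = frozenset(combo)
        n = support_count(itemset)
        if n >= minsup_count:
            frequent[itemset] = n


def immediate_supersets(itemset):
    """All itemsets obtained by adding exactly one item."""
    return [itemset | {i} for i in items if i not in itemset]


# Closed: no immediate superset has the same support count.
closed = [s for s, n in frequent.items()
          if all(support_count(sup) < n for sup in immediate_supersets(s))]

# Maximal: no immediate superset is frequent.
maximal = [s for s in frequent
           if all(sup not in frequent for sup in immediate_supersets(s))]

print(len(frequent), len(closed), len(maximal))  # 14 9 4
print(sorted("".join(sorted(s)) for s in maximal))  # ['ABC', 'ACD', 'CE', 'DE']
```

Every maximal itemset found here also appears in the closed list, consistent with maximal frequent itemsets being a subset of closed frequent itemsets.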

Maximal vs Closed Itemsets

[Figure: nested sets. Maximal frequent itemsets are contained in closed frequent itemsets, which are contained in frequent itemsets.]

Example question

Given the following transaction data sets (dark cells indicate presence of an item in a transaction) and a support threshold of 20%, answer the following questions:

a. What is the number of frequent itemsets for each dataset? Which dataset will produce the largest number of frequent itemsets?
b. Which dataset will produce the longest frequent itemset?
c. Which dataset will produce frequent itemsets with the highest maximum support?
d. Which dataset will produce frequent itemsets containing items with widely varying support levels (i.e., itemsets containing items with mixed support, ranging from 20% to more than 70%)?
e. What is the number of maximal frequent itemsets for each dataset? Which dataset will produce the largest number of maximal frequent itemsets?
f. What is the number of closed frequent itemsets for each dataset? Which dataset will produce the largest number of closed frequent itemsets?

Pattern Evaluation

- Association rule algorithms can produce a large number of rules
- Interestingness measures can be used to prune/rank the patterns
- In the original formulation, support and confidence are the only measures used

Computing Interestingness Measure

Given X → Y or {X,Y}, the information needed to compute interestingness can be obtained from a contingency table.

Contingency table:

| | $Y$ | $\overline{Y}$ | |
|---|---|---|---|
| $X$ | $f_{11}$ | $f_{10}$ | $f_{1+}$ |
| $\overline{X}$ | $f_{01}$ | $f_{00}$ | $f_{0+}$ |
| | $f_{+1}$ | $f_{+0}$ | $N$ |

- $f_{11}$: support of $X$ and $Y$
- $f_{10}$: support of $X$ and $\overline{Y}$
- $f_{01}$: support of $\overline{X}$ and $Y$
- $f_{00}$: support of $\overline{X}$ and $\overline{Y}$

Used to define various measures: support, confidence, Gini, entropy, etc.

Drawback of Confidence

[Table: sample of per-customer 0/1 indicators for Tea and Coffee, customers C1 to C4.]

| | Coffee | $\overline{\text{Coffee}}$ | |
|---|---|---|---|
| Tea | 15 | 5 | 20 |
| $\overline{\text{Tea}}$ | 75 | 5 | 80 |
| | 90 | 10 | 100 |

Association rule: Tea → Coffee

Confidence ≈ P(Coffee|Tea) = 15/20 = 0.75

Confidence > 50%, meaning people who drink tea are more likely to drink coffee than not drink coffee. So the rule seems reasonable.

Drawback of Confidence

Using the Tea/Coffee contingency table above (15 of the 20 tea drinkers, 75 of the 80 non-tea drinkers, and 90 of the 100 people overall drink coffee):

Association rule: Tea → Coffee

Confidence = P(Coffee|Tea) = 15/20 = 0.75, but P(Coffee) = 0.9, which means knowing that a person drinks tea reduces the probability that the person drinks coffee!

Note that $P(\text{Coffee} \mid \overline{\text{Tea}}) = 75/80 = 0.9375$.

Measure for Association Rules

So, what kind of rules do we really want?

- Confidence(X → Y) should be sufficiently high, to ensure that people who buy X will more likely buy Y than not buy Y
- Confidence(X → Y) > support(Y); otherwise, the rule will be misleading because having item X actually reduces the chance of having item Y in the same transaction

Is there any measure that captures this constraint? Answer: yes, there are many of them.

Statistical Independence

The criterion confidence(X → Y) = support(Y) is equivalent to:

- $P(Y \mid X) = P(Y)$
- $P(X,Y) = P(X)\,P(Y)$

If $P(X,Y) > P(X)\,P(Y)$: X and Y are positively correlated.

If $P(X,Y) < P(X)\,P(Y)$: X and Y are negatively correlated.

Measures that take into account statistical dependence

$$\text{Lift} = \frac{P(Y \mid X)}{P(Y)}$$

$$\text{Interest} = \frac{P(X,Y)}{P(X)\,P(Y)}$$

$$PS = P(X,Y) - P(X)\,P(Y)$$

$$\phi\text{-coefficient} = \frac{P(X,Y) - P(X)\,P(Y)}{\sqrt{P(X)\,[1 - P(X)]\,P(Y)\,[1 - P(Y)]}}$$

Lift is used for rules, while interest is used for itemsets.

Example: Lift/Interest

Using the Tea/Coffee contingency table above (15 of the 20 tea drinkers and 90 of the 100 people overall drink coffee):

Association rule: Tea → Coffee

Confidence = P(Coffee|Tea) = 0.75, but P(Coffee) = 0.9

Lift = 0.75/0.9 = 0.8333 (< 1, therefore Tea and Coffee are negatively associated)

So, is it enough to use confidence/lift for pruning?
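The Tea/Coffee numbers can be recomputed from the four cells of the contingency table. A minimal sketch, using the $f_{11}$, $f_{10}$, $f_{01}$, $f_{00}$ naming from the contingency-table slide (the Python variable names are illustrative):

```python
# Contingency counts for Tea (X) and Coffee (Y), taken from the slides.
f11, f10 = 15, 5   # Tea & Coffee,     Tea & no Coffee
f01, f00 = 75, 5   # no Tea & Coffee,  no Tea & no Coffee
n = f11 + f10 + f01 + f00

p_tea = (f11 + f10) / n
p_coffee = (f11 + f01) / n
p_both = f11 / n

confidence = f11 / (f11 + f10)           # P(Coffee | Tea)
lift = confidence / p_coffee             # P(Coffee | Tea) / P(Coffee)
interest = p_both / (p_tea * p_coffee)   # P(Tea, Coffee) / (P(Tea) P(Coffee))

print(f"confidence = {confidence:.2f}")  # confidence = 0.75
print(f"lift       = {lift:.4f}")        # lift       = 0.8333
print(f"interest   = {interest:.4f}")    # interest   = 0.8333
```

Lift and interest produce the same number here because they are the same ratio written two ways, one starting from the rule and one from the itemset.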

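Lift or Interest

First table:

| | $Y$ | $\overline{Y}$ | |
|---|---|---|---|
| $X$ | 10 | 0 | 10 |
| $\overline{X}$ | 0 | 90 | 90 |
| | 10 | 90 | 100 |

$$\text{Lift} = \frac{0.1}{(0.1)(0.1)} = 10$$

Second table:

| | $Y$ | $\overline{Y}$ | |
|---|---|---|---|
| $X$ | 90 | 0 | 90 |
| $\overline{X}$ | 0 | 10 | 10 |
| | 90 | 10 | 100 |

$$\text{Lift} = \frac{0.9}{(0.9)(0.9)} = 1.11$$

Statistical independence: if $P(X,Y) = P(X)\,P(Y)$, then Lift = 1.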

There are lots of measures proposed in the literature

Property under Variable Permutation

| | $B$ | $\overline{B}$ |
|---|---|---|
| $A$ | p | q |
| $\overline{A}$ | r | s |

| | $A$ | $\overline{A}$ |
|---|---|---|
| $B$ | p | r |
| $\overline{B}$ | q | s |

Does M(A,B) = M(B,A)?

- Symmetric measures: support, lift, collective strength, cosine, Jaccard, etc.
- Asymmetric measures: confidence, conviction, Laplace, J-measure, etc.

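Example: φ-Coefficient

The φ-coefficient is analogous to the correlation coefficient for continuous variables.

First table:

| | $Y$ | $\overline{Y}$ | |
|---|---|---|---|
| $X$ | 60 | 10 | 70 |
| $\overline{X}$ | 10 | 20 | 30 |
| | 70 | 30 | 100 |

$$\phi = \frac{0.6 - 0.7 \times 0.7}{\sqrt{0.7 \times 0.3 \times 0.7 \times 0.3}} = 0.5238$$

Second table:

| | $Y$ | $\overline{Y}$ | |
|---|---|---|---|
| $X$ | 20 | 10 | 30 |
| $\overline{X}$ | 10 | 60 | 70 |
| | 30 | 70 | 100 |

$$\phi = \frac{0.2 - 0.3 \times 0.3}{\sqrt{0.7 \times 0.3 \times 0.7 \times 0.3}} = 0.5238$$

The φ-coefficient is the same for both tables.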
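Both values can be verified from the cell counts. A small sketch, assuming the cells are passed in the order $f_{11}$, $f_{10}$, $f_{01}$, $f_{00}$ (the function name `phi` is illustrative):

```python
from math import sqrt


def phi(f11, f10, f01, f00):
    """phi-coefficient of a 2x2 contingency table given its four cell counts."""
    n = f11 + f10 + f01 + f00
    p_xy = f11 / n
    p_x = (f11 + f10) / n
    p_y = (f11 + f01) / n
    return (p_xy - p_x * p_y) / sqrt(p_x * (1 - p_x) * p_y * (1 - p_y))


phi_first = phi(60, 10, 10, 20)   # first table: X and Y mostly co-occur
phi_second = phi(20, 10, 10, 60)  # second table: X and Y mostly co-absent

print(f"{phi_first:.4f} {phi_second:.4f}")  # 0.5238 0.5238
```

The second table is the first with the roles of presence and absence swapped, and the coefficient does not distinguish between the two cases.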

Simpson's Paradox

c({HDTV = Yes} → {Exercise Machine = Yes}) = 99/180 = 55%

c({HDTV = No} → {Exercise Machine = Yes}) = 54/120 = 45%

=> Customers who buy HDTV are more likely to buy exercise machines

Simpson's Paradox

College students:

- c({HDTV = Yes} → {Exercise Machine = Yes}) = 1/10 = 10%
- c({HDTV = No} → {Exercise Machine = Yes}) = 4/34 = 11.8%

Working adults:

- c({HDTV = Yes} → {Exercise Machine = Yes}) = 98/170 = 57.6%
- c({HDTV = No} → {Exercise Machine = Yes}) = 50/86 = 58.1%
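The reversal can be checked by pooling the two strata. The sketch below stores, for each group, the number of exercise-machine buyers and the group size as given in the slides; the dictionary layout and key names are illustrative.

```python
# (exercise-machine buyers, customers) per stratum and HDTV group, from the slides.
strata = {
    "college students": {"hdtv_yes": (1, 10), "hdtv_no": (4, 34)},
    "working adults": {"hdtv_yes": (98, 170), "hdtv_no": (50, 86)},
}

# Confidence of {HDTV = g} -> {Exercise Machine = Yes} within each stratum.
within = {
    name: {g: buyers / total for g, (buyers, total) in groups.items()}
    for name, groups in strata.items()
}

# Pool the strata by summing counts before dividing.
pooled = {}
for g in ("hdtv_yes", "hdtv_no"):
    buyers = sum(groups[g][0] for groups in strata.values())
    total = sum(groups[g][1] for groups in strata.values())
    pooled[g] = buyers / total

for name, conf in within.items():
    print(f"{name}: yes={conf['hdtv_yes']:.3f}, no={conf['hdtv_no']:.3f}")
print(f"pooled: yes={pooled['hdtv_yes']:.2f}, no={pooled['hdtv_no']:.2f}")
```

Within each stratum the HDTV buyers have the lower confidence, yet after pooling they have the higher one, because most HDTV buyers are working adults, the stratum with the much higher baseline rate of buying exercise machines.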

Simpson's Paradox

- An observed relationship in data may be influenced by the presence of other confounding factors (hidden variables)
- Hidden variables may cause the observed relationship to disappear or reverse its direction!
- Proper stratification is needed to avoid generating spurious patterns