Enhancing System Performance with Fine-Grained In-DRAM Data Relocation

This study focuses on improving system performance by reducing DRAM latency through fine-grained data relocation and caching mechanisms. The FIGARO substrate leverages existing structures within a modern DRAM device to enhance data relocation efficiency and cache performance, resulting in notable improvements in system efficiency and energy consumption.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. Download presentation by click this link. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

E N D

Presentation Transcript

FIGARO: Improving System Performance via Fine-Grained In-DRAM Data Relocation and Caching Yaohua Wang1, Lois Orosa2, Xiangjun Peng3,1, Yang Guo1, Saugata Ghose4,5, Minesh Patel2, Jeremie S. Kim2, Juan G mez Luna2, Mohammad Sadrosadati6, Nika Mansouri Ghiasi2, Onur Mutlu2,5 3 1 4 2 6 5 MICRO 2020

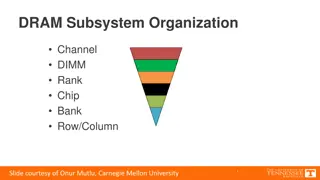

Motivation and Goal Problem: DRAM latency is a performance bottleneck for many applications In-DRAM caches mitigate this latency by augmenting regular-latency DRAM with small-but-fast regions of DRAM that serve as a cache Existing in-DRAM caches have mechanisms for relocating data that have two main inefficiencies: 1) Coarse-grained (i.e., multi-kilobyte) in-DRAM data relocation 2) Relocation latency increases with the physical distance between the slow and fast regions Goal: reduce DRAM latency via an in-DRAM cache that provides 1) Fine-grained (i.e., multi-byte) data relocation 2) Distance-independent relocation latency 2

FIGARO Substrate SRC: Subarray A FIGARO leverages existing shared structures within a modern DRAM device to perform data relocation A0 A1 A2 A3 A3 A4 A5 A6 A7 Observations: Local Row Buffer (LRB) 1) All local row buffers (LRBs) in a bank are connected to a single shared global row buffer (GRB) 1kB DST: Subarray B GRB 2) The GRB has smaller width (e.g., 8B) than the LRBs (e.g., 1kB) B0 B1 B2 B3 8B B4 B5 B6 B7 Key Idea: use the existing shared GRB among subarrays within a DRAM bank to perform fine-grained in-DRAM data relocation Local Row Buffer (LRB) 3

FIGCache (Fine-Grained In-DRAM Cache) Key idea: cache only small, frequently-accessed portions of different DRAM rows in a designated region of DRAM FIGCache uses FIGARO to relocate data into and out of the cache at fine granularity Results: Improves system performance by 16.3% on average Reduces DRAM energy by 7.8% on average Outperforms a state-of-the-art coarse-grained in-DRAM cache Performs close to ideal low-latency DRAM 4

FIGARO: Improving System Performance via Fine-Grained In-DRAM Data Relocation and Caching Yaohua Wang1, Lois Orosa2, Xiangjun Peng3,1, Yang Guo1, Saugata Ghose4,5, Minesh Patel2, Jeremie S. Kim2, Juan G mez Luna2, Mohammad Sadrosadati6, Nika Mansouri Ghiasi2, Onur Mutlu2,5 1 2 3 4 6 5 MICRO 2020